2025년 7월 14일

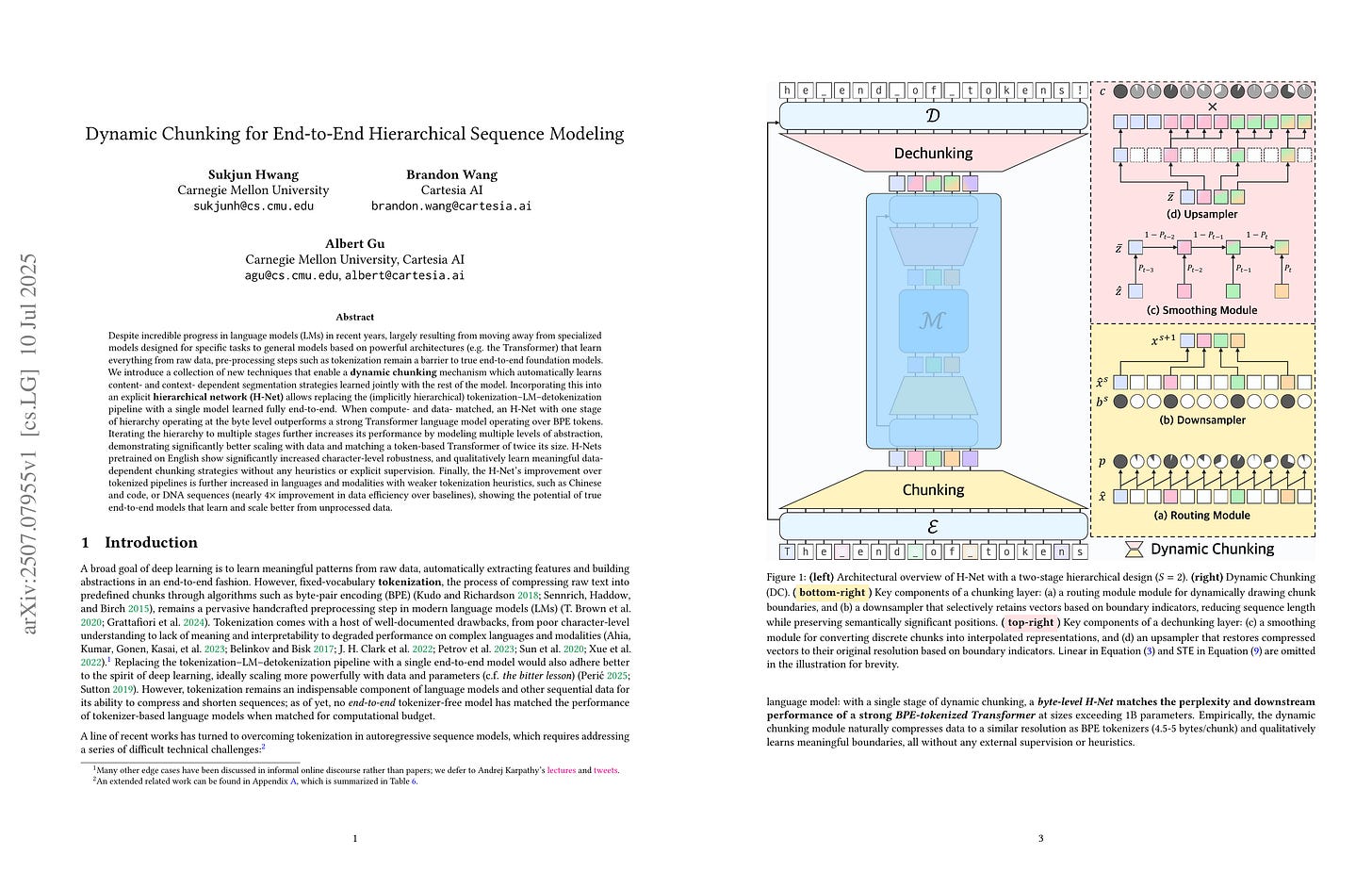

Dynamic Chunking for End-to-End Hierarchical Sequence Modeling

(Sukjun Hwang, Brandon Wang, Albert Gu)

Despite incredible progress in language models (LMs) in recent years, largely resulting from moving away from specialized models designed for specific tasks to general models based on powerful architectures (e.g. the Transformer) that learn everything from raw data, pre-processing steps such as tokenization remain a barrier to true end-to-end foundation models. We introduce a collection of new techniques that enable a dynamic chunking mechanism which automatically learns content -- and context -- dependent segmentation strategies learned jointly with the rest of the model. Incorporating this into an explicit hierarchical network (H-Net) allows replacing the (implicitly hierarchical) tokenization-LM-detokenization pipeline with a single model learned fully end-to-end. When compute- and data- matched, an H-Net with one stage of hierarchy operating at the byte level outperforms a strong Transformer language model operating over BPE tokens. Iterating the hierarchy to multiple stages further increases its performance by modeling multiple levels of abstraction, demonstrating significantly better scaling with data and matching a token-based Transformer of twice its size. H-Nets pretrained on English show significantly increased character-level robustness, and qualitatively learn meaningful data-dependent chunking strategies without any heuristics or explicit supervision. Finally, the H-Net's improvement over tokenized pipelines is further increased in languages and modalities with weaker tokenization heuristics, such as Chinese and code, or DNA sequences (nearly 4x improvement in data efficiency over baselines), showing the potential of true end-to-end models that learn and scale better from unprocessed data.

Tokenizer-free LM. 꽤 복잡하지마 지금까지 나온 Tokenizer-free 방법 중에서는 가장 근사하군요. MoE와 비슷하게 라우팅으로 시퀀스를 쪼개는데 학습 시에 길이가 일정하지 않다는 건 성가실 듯 하네요.

Tokenizer-free LM. It's quite complex, but this seems to be the most promising approach among the tokenizer-free methods that have emerged so far. Similar to MoE, it uses routing to segment sequences, but the variable sequence lengths during training could be troublesome.

#tokenizer #llm

BlockFFN: Towards End-Side Acceleration-Friendly Mixture-of-Experts with Chunk-Level Activation Sparsity

(Chenyang Song, Weilin Zhao, Xu Han, Chaojun Xiao, Yingfa Chen, Yuxuan Li, Zhiyuan Liu, Maosong Sun)

To alleviate the computational burden of large language models (LLMs), architectures with activation sparsity, represented by mixture-of-experts (MoE), have attracted increasing attention. However, the non-differentiable and inflexible routing of vanilla MoE hurts model performance. Moreover, while each token activates only a few parameters, these sparsely-activated architectures exhibit low chunk-level sparsity, indicating that the union of multiple consecutive tokens activates a large ratio of parameters. Such a sparsity pattern is unfriendly for acceleration under low-resource conditions (e.g., end-side devices) and incompatible with mainstream acceleration techniques (e.g., speculative decoding). To address these challenges, we introduce a novel MoE architecture, BlockFFN, as well as its efficient training and deployment techniques. Specifically, we use a router integrating ReLU activation and RMSNorm for differentiable and flexible routing. Next, to promote both token-level sparsity (TLS) and chunk-level sparsity (CLS), CLS-aware training objectives are designed, making BlockFFN more acceleration-friendly. Finally, we implement efficient acceleration kernels, combining activation sparsity and speculative decoding for the first time. The experimental results demonstrate the superior performance of BlockFFN over other MoE baselines, achieving over 80% TLS and 70% 8-token CLS. Our kernels achieve up to 3.67$\times$ speedup on real end-side devices than dense models. All codes and checkpoints are available publicly (https://github.com/thunlp/BlockFFN).

ReMoE와 유사한 (https://arxiv.org/abs/2412.14711) ReLU 기반 라우팅에 청크 내에서 선택된 Expert들이 Sparse하게 만드는 Loss를 사용해 추론을 가속한다는 아이디어. 한 토큰에 대해 선택되는 Expert의 수가 일정하지 않다는 것이 감수할만할지 모르겠네요.

An idea similar to ReMoE (https://arxiv.org/abs/2412.14711) that uses ReLU-based routing combined with a loss function that enforces sparsity among the experts selected within a chunk to accelerate inference. I'm not sure if the variable number of experts selected per token is an acceptable trade-off.

#moe

Vision Foundation Models as Effective Visual Tokenizers for Autoregressive Image Generation

(Anlin Zheng, Xin Wen, Xuanyang Zhang, Chuofan Ma, Tiancai Wang, Gang Yu, Xiangyu Zhang, Xiaojuan Qi)

Leveraging the powerful representations of pre-trained vision foundation models -- traditionally used for visual comprehension -- we explore a novel direction: building an image tokenizer directly atop such models, a largely underexplored area. Specifically, we employ a frozen vision foundation model as the encoder of our tokenizer. To enhance its effectiveness, we introduce two key components: (1) a region-adaptive quantization framework that reduces redundancy in the pre-trained features on regular 2D grids, and (2) a semantic reconstruction objective that aligns the tokenizer's outputs with the foundation model's representations to preserve semantic fidelity. Based on these designs, our proposed image tokenizer, VFMTok, achieves substantial improvements in image reconstruction and generation quality, while also enhancing token efficiency. It further boosts autoregressive (AR) generation -- achieving a gFID of 2.07 on ImageNet benchmarks, while accelerating model convergence by three times, and enabling high-fidelity class-conditional synthesis without the need for classifier-free guidance (CFG). The code will be released publicly to benefit the community.

프리트레이닝된 이미지 인코더와 Deformable Attention을 사용한 이미지 토크나이저.

An image tokenizer using pre-trained image encoders and Deformable Attention.

#vq