2025년 6월 30일

ERNIE 4.5 Technical Report

(ERNIE Team, Baidu)

In this report, we introduce ERNIE 4.5, a new family of large-scale multimodal models comprising 10 distinct variants. The model family consist of Mixture-of-Experts (MoE) models with 47B and 3B active parameters, with the largest model having 424B total parameters, as well as a 0.3B dense model. For the MoE architecture, we propose a novel heterogeneous modality structure, which supports parameter sharing across modalities while also allowing dedicated parameters for each individual modality. This MoE architecture has the advantage to enhance multimodal understanding without compromising, and even improving, performance on text-related tasks. All of our models are trained with optimal efficiency using the PaddlePaddle deep learning framework, which also enables high-performance inference and streamlined deployment for them. We achieve 47% Model FLOPs Utilization (MFU) in our largest ERNIE 4.5 language model pre-training. Experimental results show that our models achieve state-of-the-art performance across multiple text and multimodal benchmarks, especially in instruction following, world knowledge memorization, visual understanding and multimodal reasoning. All models are publicly accessible under Apache 2.0 to support future research and development in the field. Additionally, we open source the development toolkits for ERNIE 4.5, featuring industrial-grade capabilities, resourceefficient training and inference workflows, and multi-hardware compatibility.

바이두의 멀티모달 파운데이션 모델. 학습과 추론 인프라에 대해서까지 굉장히 상세하네요. MoE 라우터 직교화가 벌써 쓰이고 있군요. LR 스케줄을 EMA로 완전히 대체하는 것도 채택했네요.

Baidu's multimodal foundation model. It is very detailed on training and inference infrastructure. MoE router orthogonalization is already used. They also adopted completely replacing LR schedules with EMA.

#llm #multimodal #pretraining #moe #post-training

Baidu's multimodal foundation model. The report is detailed on covering the training and inference infrastructure. Interestingly, they are already using MoE router orthogonalization. They've also completely replaced LR schedules with EMA.

GPAS: Accelerating Convergence of LLM Pretraining via Gradient-Preserving Activation Scaling

(Tianhao Chen, Xin Xu, Zijing Liu, Pengxiang Li, Xinyuan Song, Ajay Kumar Jaiswal, Fan Zhang, Jishan Hu, Yang Wang, Hao Chen, Shizhe Diao, Shiwei Liu, Yu Li, Yin Lu, Can Yang)

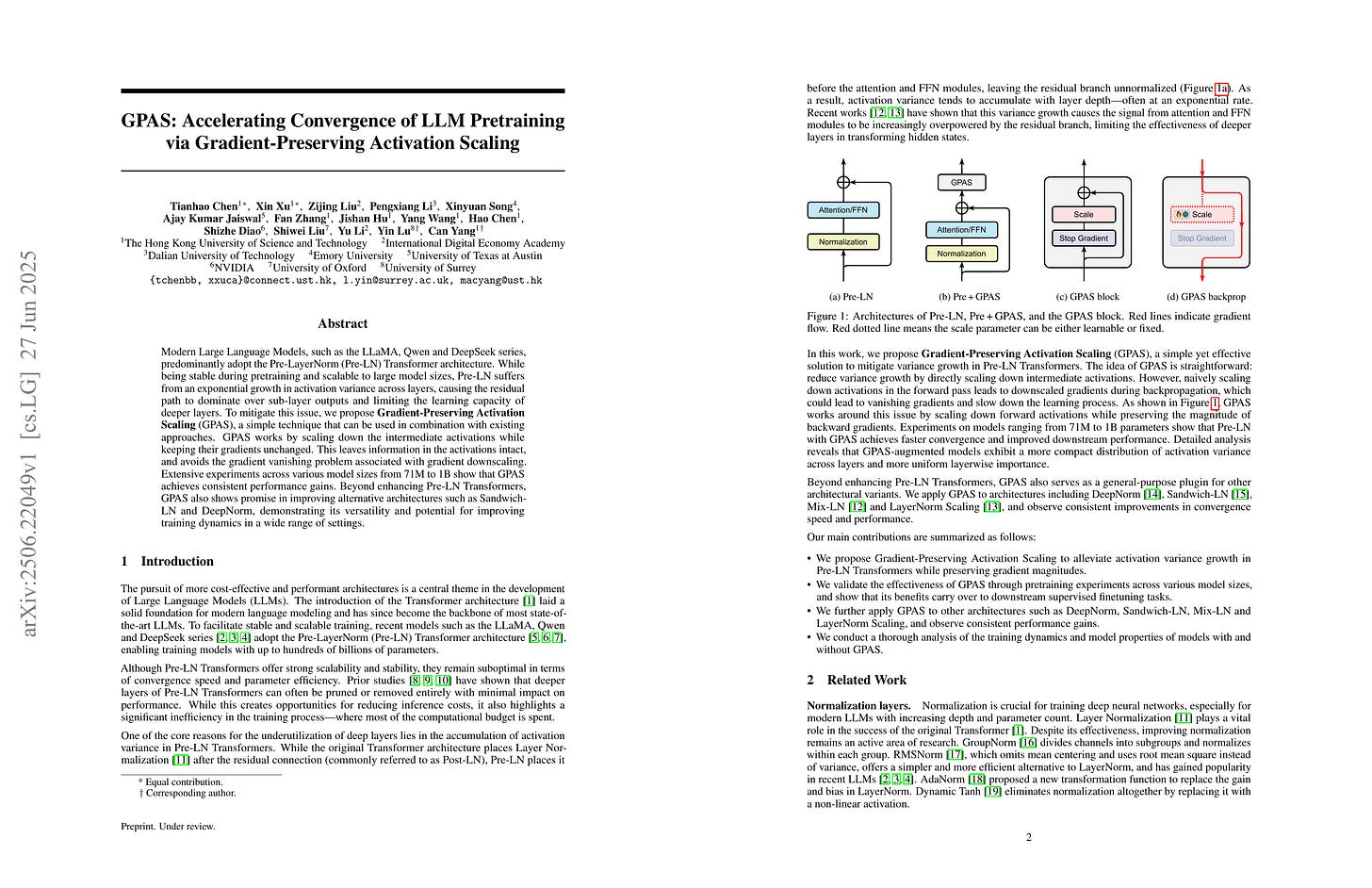

Modern Large Language Models, such as the LLaMA, Qwen and DeepSeek series, predominantly adopt the Pre-LayerNorm (Pre-LN) Transformer architecture. While being stable during pretraining and scalable to large model sizes, Pre-LN suffers from an exponential growth in activation variance across layers, causing the residual path to dominate over sub-layer outputs and limiting the learning capacity of deeper layers. To mitigate this issue, we propose Gradient-Preserving Activation Scaling (GPAS), a simple technique that can be used in combination with existing approaches. GPAS works by scaling down the intermediate activations while keeping their gradients unchanged. This leaves information in the activations intact, and avoids the gradient vanishing problem associated with gradient downscaling. Extensive experiments across various model sizes from 71M to 1B show that GPAS achieves consistent performance gains. Beyond enhancing Pre-LN Transformers, GPAS also shows promise in improving alternative architectures such as Sandwich-LN and DeepNorm, demonstrating its versatility and potential for improving training dynamics in a wide range of settings.

Activation의 분산 증가를 억제하기 위한 방법. x' = x - SiLU(α) * stop_gradient(x) 형태의 레이어를 끼워넣었습니다. 흥미롭네요.

A method for suppressing the increase in activation variance. They inserted a layer of the form x' = x - SiLU(α) * stop_gradient(x). Interesting.

#transformer