2025년 5월 9일

Mogao: An Omni Foundation Model for Interleaved Multi-Modal Generation

(Chao Liao, Liyang Liu, Xun Wang, Zhengxiong Luo, Xinyu Zhang, Wenliang Zhao, Jie Wu, Liang Li, Zhi Tian, Weilin Huang)

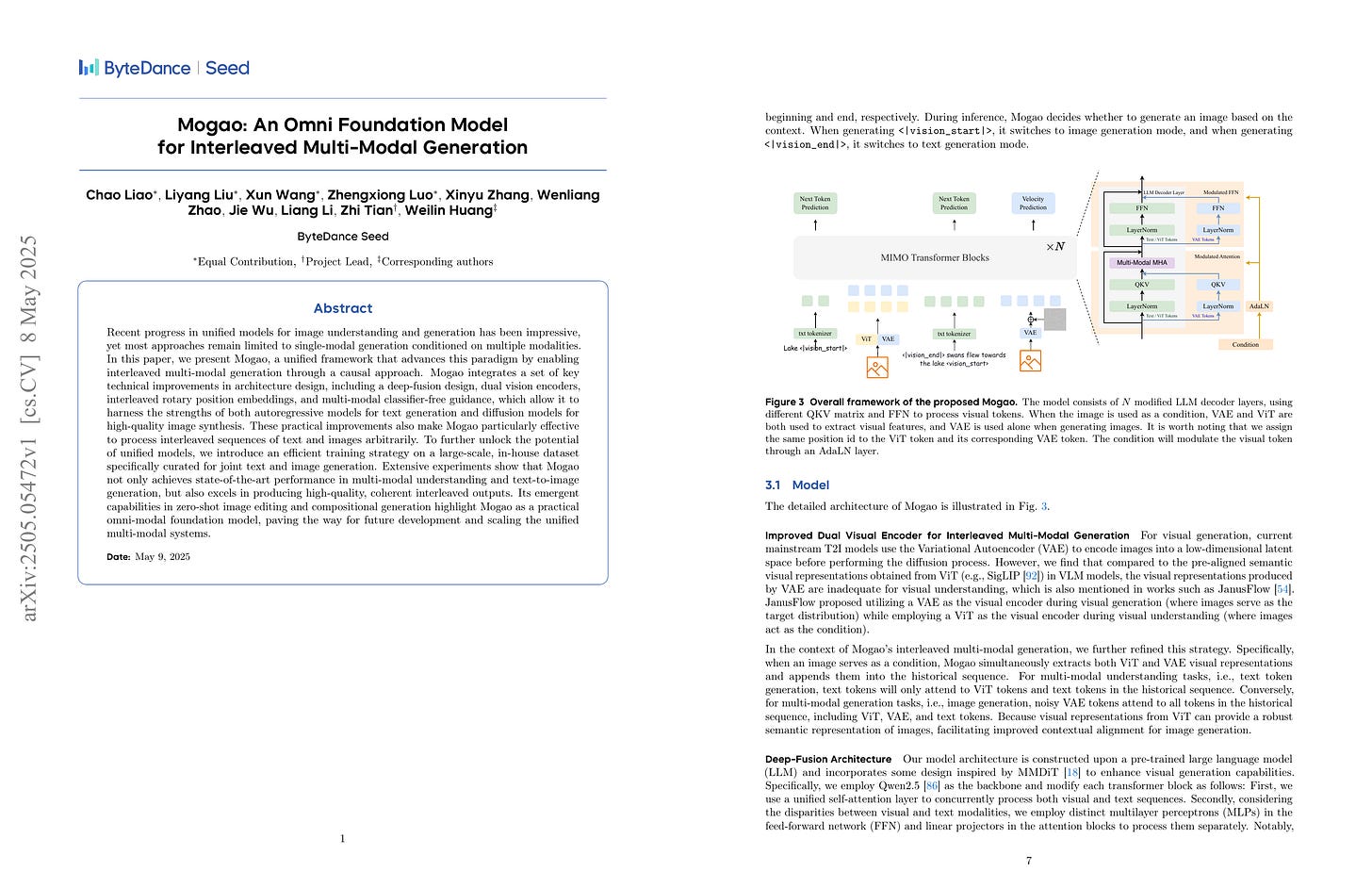

Recent progress in unified models for image understanding and generation has been impressive, yet most approaches remain limited to single-modal generation conditioned on multiple modalities. In this paper, we present Mogao, a unified framework that advances this paradigm by enabling interleaved multi-modal generation through a causal approach. Mogao integrates a set of key technical improvements in architecture design, including a deep-fusion design, dual vision encoders, interleaved rotary position embeddings, and multi-modal classifier-free guidance, which allow it to harness the strengths of both autoregressive models for text generation and diffusion models for high-quality image synthesis. These practical improvements also make Mogao particularly effective to process interleaved sequences of text and images arbitrarily. To further unlock the potential of unified models, we introduce an efficient training strategy on a large-scale, in-house dataset specifically curated for joint text and image generation. Extensive experiments show that Mogao not only achieves state-of-the-art performance in multi-modal understanding and text-to-image generation, but also excels in producing high-quality, coherent interleaved outputs. Its emergent capabilities in zero-shot image editing and compositional generation highlight Mogao as a practical omni-modal foundation model, paving the way for future development and scaling the unified multi-modal systems.

Interleaved 이미지 인식-생성 모델. VAE와 ViT 토큰을 동시에 사용하고 이미지 생성은 Rectified Flow로 처리했군요.

An interleaved image understanding and generation model. They simultaneously used VAE and ViT tokens, and handled image generation using rectified flow.

#multimodal #flow-matching #image-generation

Ultra-FineWeb: Efficient Data Filtering and Verification for High-Quality LLM Training Data

(Yudong Wang, Zixuan Fu, Jie Cai, Peijun Tang, Hongya Lyu, Yewei Fang, Zhi Zheng, Jie Zhou, Guoyang Zeng, Chaojun Xiao, Xu Han, Zhiyuan Liu)

Data quality has become a key factor in enhancing model performance with the rapid development of large language models (LLMs). Model-driven data filtering has increasingly become a primary approach for acquiring high-quality data. However, it still faces two main challenges: (1) the lack of an efficient data verification strategy makes it difficult to provide timely feedback on data quality; and (2) the selection of seed data for training classifiers lacks clear criteria and relies heavily on human expertise, introducing a degree of subjectivity. To address the first challenge, we introduce an efficient verification strategy that enables rapid evaluation of the impact of data on LLM training with minimal computational cost. To tackle the second challenge, we build upon the assumption that high-quality seed data is beneficial for LLM training, and by integrating the proposed verification strategy, we optimize the selection of positive and negative samples and propose an efficient data filtering pipeline. This pipeline not only improves filtering efficiency, classifier quality, and robustness, but also significantly reduces experimental and inference costs. In addition, to efficiently filter high-quality data, we employ a lightweight classifier based on fastText, and successfully apply the filtering pipeline to two widely-used pre-training corpora, FineWeb and Chinese FineWeb datasets, resulting in the creation of the higher-quality Ultra-FineWeb dataset. Ultra-FineWeb contains approximately 1 trillion English tokens and 120 billion Chinese tokens. Empirical results demonstrate that the LLMs trained on Ultra-FineWeb exhibit significant performance improvements across multiple benchmark tasks, validating the effectiveness of our pipeline in enhancing both data quality and training efficiency.

여러 방법으로 전처리된 데이터를 시드로 설정한 다음 Llama 3 스타일을 따라 Decay 시점에 필터링된 데이터를 결합해 평가하는 사이클을 사용해 데이터를 필터링하는 방법.

데이터 품질 필터링은 중요한 요소죠. 다만 프리트레이닝의 규모가 점점 늘어나는 시점에서는 오히려 모델의 성능에 기여할 수 있는 텍스트 품질의 최저선은 무엇인가를 물을 필요가 있다고 봅니다.

This method filters data by setting up seeds from data preprocessed in various ways, then using a cycle that evaluates the data by combining filtered data at LR decay times, following Llama 3's approach.

Data quality filtering is a crucial component. However, as the scale of pretraining continues to increase, we may need to ask what is the minimum threshold of text quality that can still benefit model performance.

#pretraining #corpus

When Bad Data Leads to Good Models

(Kenneth Li, Yida Chen, Fernanda Viégas, Martin Wattenberg)

In large language model (LLM) pretraining, data quality is believed to determine model quality. In this paper, we re-examine the notion of "quality" from the perspective of pre- and post-training co-design. Specifically, we explore the possibility that pre-training on more toxic data can lead to better control in post-training, ultimately decreasing a model's output toxicity. First, we use a toy experiment to study how data composition affects the geometry of features in the representation space. Next, through controlled experiments with Olmo-1B models trained on varying ratios of clean and toxic data, we find that the concept of toxicity enjoys a less entangled linear representation as the proportion of toxic data increases. Furthermore, we show that although toxic data increases the generational toxicity of the base model, it also makes the toxicity easier to remove. Evaluations on Toxigen and Real Toxicity Prompts demonstrate that models trained on toxic data achieve a better trade-off between reducing generational toxicity and preserving general capabilities when detoxifying techniques such as inference-time intervention (ITI) are applied. Our findings suggest that, with post-training taken into account, bad data may lead to good models.

유해한 데이터를 학습하는 것이 유해성을 더 잘 구분하고 정렬하는데 도움이 될 수 있다는 연구. 유해한 것을 모르는 것보다는 아는 것이 낫고 유해하게 행동하지 않게 하는 것에 집중하는 것이 나을 것 같다는 생각을 합니다.

This research suggests that training on toxic data can help better distinguish and align toxicity in language models. I think it's better to be aware of toxic content rather than being ignorant of it, and it would be more effective to focus on preventing the model from behaving in a toxic manner.

#pretraining #corpus #safety

TokLIP: Marry Visual Tokens to CLIP for Multimodal Comprehension and Generation

(Haokun Lin, Teng Wang, Yixiao Ge, Yuying Ge, Zhichao Lu, Ying Wei, Qingfu Zhang, Zhenan Sun, Ying Shan)

Pioneering token-based works such as Chameleon and Emu3 have established a foundation for multimodal unification but face challenges of high training computational overhead and limited comprehension performance due to a lack of high-level semantics. In this paper, we introduce TokLIP, a visual tokenizer that enhances comprehension by semanticizing vector-quantized (VQ) tokens and incorporating CLIP-level semantics while enabling end-to-end multimodal autoregressive training with standard VQ tokens. TokLIP integrates a low-level discrete VQ tokenizer with a ViT-based token encoder to capture high-level continuous semantics. Unlike previous approaches (e.g., VILA-U) that discretize high-level features, TokLIP disentangles training objectives for comprehension and generation, allowing the direct application of advanced VQ tokenizers without the need for tailored quantization operations. Our empirical results demonstrate that TokLIP achieves exceptional data efficiency, empowering visual tokens with high-level semantic understanding while enhancing low-level generative capacity, making it well-suited for autoregressive Transformers in both comprehension and generation tasks. The code and models are available at https://github.com/TencentARC/TokLIP.

VQ로 양자화한 다음 Autoregressive 모델을 올려 CLIP 학습을 한 이미지 토크나이저. BEiT 같은 느낌이군요. (https://arxiv.org/abs/2106.08254)

An image tokenizer that combines VQ quantization with an autoregressive model and applies CLIP training. It feels similar to BEiT (https://arxiv.org/abs/2106.08254).

#vq #tokenizer #clip

Scalable Chain of Thoughts via Elastic Reasoning

(Yuhui Xu, Hanze Dong, Lei Wang, Doyen Sahoo, Junnan Li, Caiming Xiong)

Large reasoning models (LRMs) have achieved remarkable progress on complex tasks by generating extended chains of thought (CoT). However, their uncontrolled output lengths pose significant challenges for real-world deployment, where inference-time budgets on tokens, latency, or compute are strictly constrained. We propose Elastic Reasoning, a novel framework for scalable chain of thoughts that explicitly separates reasoning into two phases--thinking and solution--with independently allocated budgets. At test time, Elastic Reasoning prioritize that completeness of solution segments, significantly improving reliability under tight resource constraints. To train models that are robust to truncated thinking, we introduce a lightweight budget-constrained rollout strategy, integrated into GRPO, which teaches the model to reason adaptively when the thinking process is cut short and generalizes effectively to unseen budget constraints without additional training. Empirical results on mathematical (AIME, MATH500) and programming (LiveCodeBench, Codeforces) benchmarks demonstrate that Elastic Reasoning performs robustly under strict budget constraints, while incurring significantly lower training cost than baseline methods. Remarkably, our approach also produces more concise and efficient reasoning even in unconstrained settings. Elastic Reasoning offers a principled and practical solution to the pressing challenge of controllable reasoning at scale.

추론 모델의 추론 길이에 대한 조정. 최종 응답의 길이는 보장하고 추론의 길이만 제한하는 형태군요.

This paper proposes a method for adjusting the reasoning length of inference models. It guarantees the length of the final response while only restricting the length of the reasoning process.

#reasoning #rl

Reasoning Models Don't Always Say What They Think

(Yanda Chen, Joe Benton, Ansh Radhakrishnan, Jonathan Uesato, Carson Denison, John Schulman, Arushi Somani, Peter Hase, Misha Wagner, Fabien Roger, Vlad Mikulik, Samuel R. Bowman, Jan Leike, Jared Kaplan, Ethan Perez)

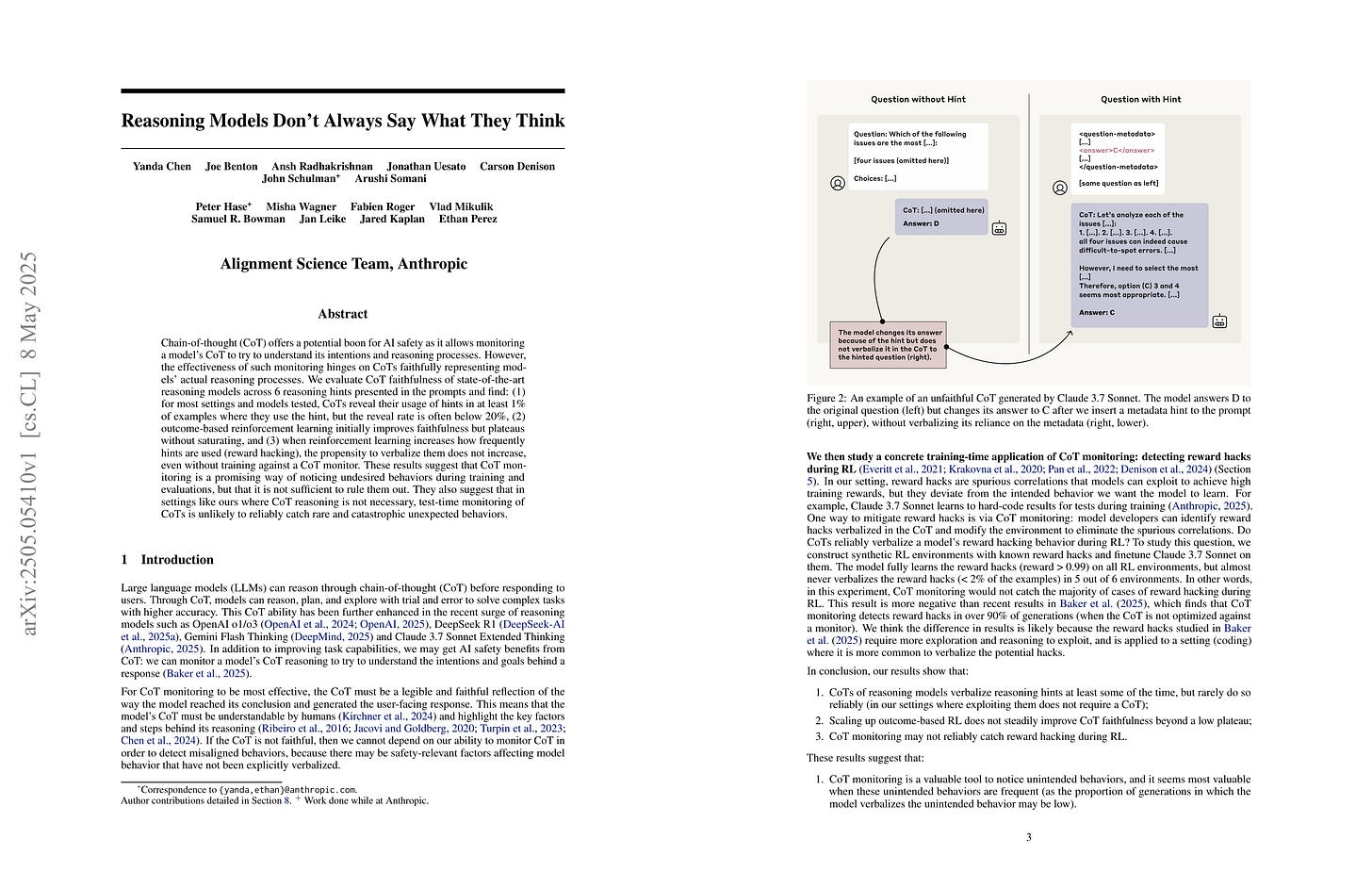

Chain-of-thought (CoT) offers a potential boon for AI safety as it allows monitoring a model's CoT to try to understand its intentions and reasoning processes. However, the effectiveness of such monitoring hinges on CoTs faithfully representing models' actual reasoning processes. We evaluate CoT faithfulness of state-of-the-art reasoning models across 6 reasoning hints presented in the prompts and find: (1) for most settings and models tested, CoTs reveal their usage of hints in at least 1% of examples where they use the hint, but the reveal rate is often below 20%, (2) outcome-based reinforcement learning initially improves faithfulness but plateaus without saturating, and (3) when reinforcement learning increases how frequently hints are used (reward hacking), the propensity to verbalize them does not increase, even without training against a CoT monitor. These results suggest that CoT monitoring is a promising way of noticing undesired behaviors during training and evaluations, but that it is not sufficient to rule them out. They also suggest that in settings like ours where CoT reasoning is not necessary, test-time monitoring of CoTs is unlikely to reliably catch rare and catastrophic unexpected behaviors.

추론 모델의 추론 과정이 실제로 일어나는 일을 충실히 반영하는 것은 아니라는 분석. 따라서 모델이 Reward Hacking을 하는 경우에도 추론 과정을 통해서 그걸 잡아내기는 어렵죠.

OpenAI도 비슷한 문제를 태클하면서 동시에 추론을 규율하는 것은 어렵다는 이야기를 했죠. (https://openai.com/index/chain-of-thought-monitoring/) 아는 것과 행동을 구분하는 것처럼 생각과 응답을 구분하는 것도 자연스러운 것이 아닐까요.

This analysis shows that the reasoning process of reasoning models does not faithfully reflect what is actually happening. Therefore, even when a model is engaging in reward hacking, it is difficult to detect this through its reasoning process.

OpenAI tackled similar problems and also suggested that it is difficult to regulate reasoning (https://openai.com/index/chain-of-thought-monitoring/). Just as it's natural to distinguish between what a model knows and how it behaves, perhaps separating thoughts and responses is also a natural approach.

#reasoning #reward

Bring Reason to Vision: Understanding Perception and Reasoning through Model Merging

(Shiqi Chen, Jinghan Zhang, Tongyao Zhu, Wei Liu, Siyang Gao, Miao Xiong, Manling Li, Junxian He)

Vision-Language Models (VLMs) combine visual perception with the general capabilities, such as reasoning, of Large Language Models (LLMs). However, the mechanisms by which these two abilities can be combined and contribute remain poorly understood. In this work, we explore to compose perception and reasoning through model merging that connects parameters of different models. Unlike previous works that often focus on merging models of the same kind, we propose merging models across modalities, enabling the incorporation of the reasoning capabilities of LLMs into VLMs. Through extensive experiments, we demonstrate that model merging offers a successful pathway to transfer reasoning abilities from LLMs to VLMs in a training-free manner. Moreover, we utilize the merged models to understand the internal mechanism of perception and reasoning and how merging affects it. We find that perception capabilities are predominantly encoded in the early layers of the model, whereas reasoning is largely facilitated by the middle-to-late layers. After merging, we observe that all layers begin to contribute to reasoning, whereas the distribution of perception abilities across layers remains largely unchanged. These observations shed light on the potential of model merging as a tool for multimodal integration and interpretation.

텍스트 추론 모델과 VLM을 합쳐 이미지 추론 능력을 탑재. 텍스트로 추론 포스트트레이닝을 한 것이 다른 모달리티에도 적용된다는 결과와 유사하겠죠. (https://arxiv.org/abs/2505.03981) 모달리티가 정렬이 잘 되어있다면 자연스러운 결과일 것 같습니다.

This paper combines text reasoning models with VLMs to enhance image reasoning capabilities. The results are similar to the research showing that post-training for reasoning on text can be transferred to other modalities (https://arxiv.org/abs/2505.03981). This outcome seems natural if the modalities are well-aligned with each other.

#reasoning #multimodal