2025년 5월 29일

The Entropy Mechanism of Reinforcement Learning for Reasoning Language Models

(Ganqu Cui, Yuchen Zhang, Jiacheng Chen, Lifan Yuan, Zhi Wang, Yuxin Zuo, Haozhan Li, Yuchen Fan, Huayu Chen, Weize Chen, Zhiyuan Liu, Hao Peng, Lei Bai, Wanli Ouyang, Yu Cheng, Bowen Zhou, Ning Ding)

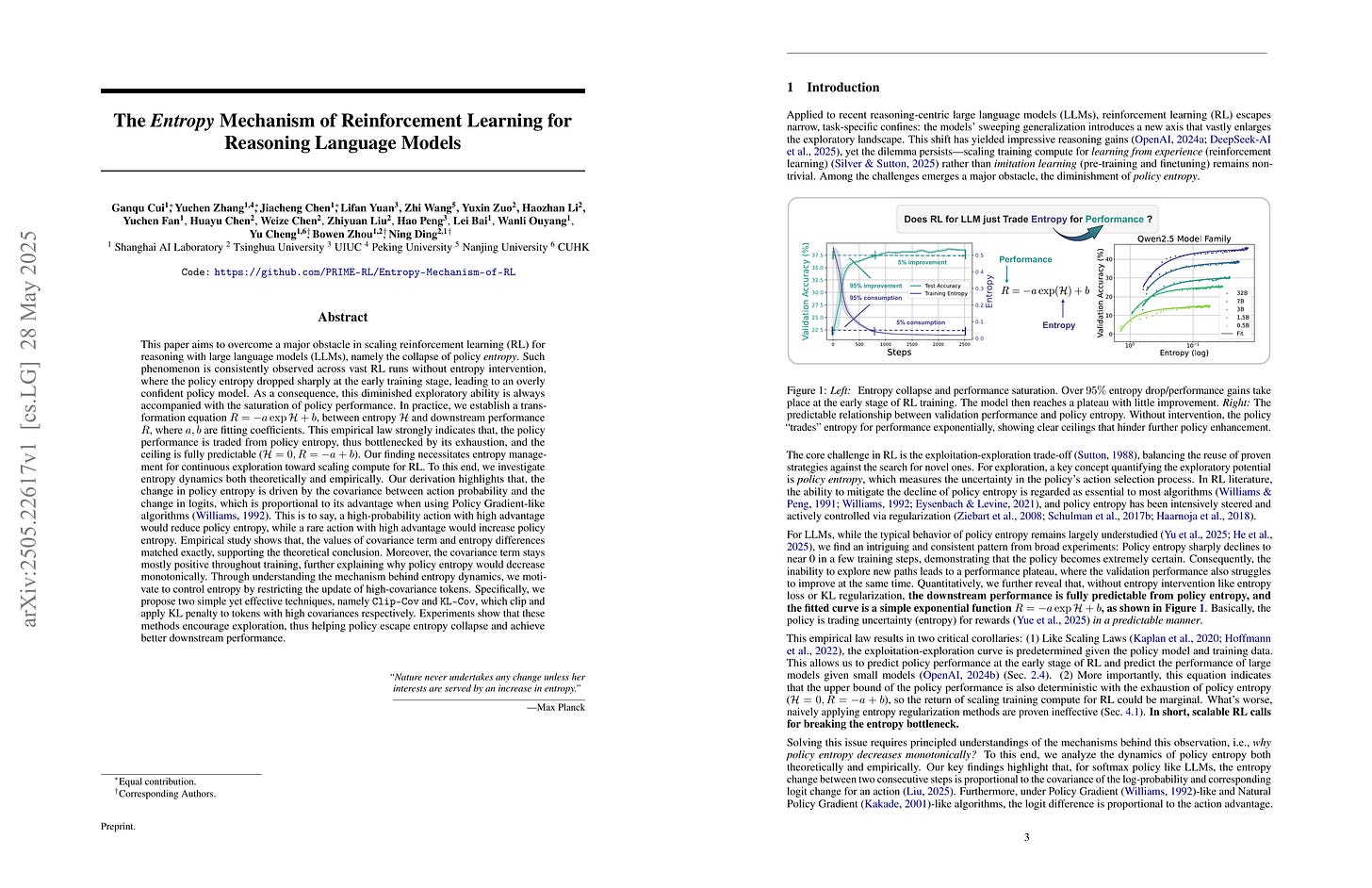

This paper aims to overcome a major obstacle in scaling RL for reasoning with LLMs, namely the collapse of policy entropy. Such phenomenon is consistently observed across vast RL runs without entropy intervention, where the policy entropy dropped sharply at the early training stage, this diminished exploratory ability is always accompanied with the saturation of policy performance. In practice, we establish a transformation equation R=-a*e^H+b between entropy H and downstream performance R. This empirical law strongly indicates that, the policy performance is traded from policy entropy, thus bottlenecked by its exhaustion, and the ceiling is fully predictable H=0, R=-a+b. Our finding necessitates entropy management for continuous exploration toward scaling compute for RL. To this end, we investigate entropy dynamics both theoretically and empirically. Our derivation highlights that, the change in policy entropy is driven by the covariance between action probability and the change in logits, which is proportional to its advantage when using Policy Gradient-like algorithms. Empirical study shows that, the values of covariance term and entropy differences matched exactly, supporting the theoretical conclusion. Moreover, the covariance term stays mostly positive throughout training, further explaining why policy entropy would decrease monotonically. Through understanding the mechanism behind entropy dynamics, we motivate to control entropy by restricting the update of high-covariance tokens. Specifically, we propose two simple yet effective techniques, namely Clip-Cov and KL-Cov, which clip and apply KL penalty to tokens with high covariances respectively. Experiments show that these methods encourage exploration, thus helping policy escape entropy collapse and achieve better downstream performance.

굉장히 흥미로운 결과네요. 추론 RL에서 Reward와 엔트로피가 맞교환 되는 패턴이 나타나고 이 곡선이 예측 가능하다고 합니다. 따라서 이 엔트로피의 감소를 통제해서 성능을 향상시킬 수 있다고 하네요. "진짜 RL"의 맛이 이제 좀 느껴지는군요.

A very intriguing result. The paper demonstrates a clear pattern where reward and entropy are traded off during reasoning RL, and this curve is predictable. By controlling the reduction of entropy, performance can be improved. It's starting to feel more like "true RL" now.

#rl #reasoning

Skywork Open Reasoner 1 Technical Report

(Jujie He, Jiacai Liu, Chris Yuhao Liu, Rui Yan, Chaojie Wang, Peng Cheng, Xiaoyu Zhang, Fuxiang Zhang, Jiacheng Xu, Wei Shen, Siyuan Li, Liang Zeng, Tianwen Wei, Cheng Cheng, Bo An, Yang Liu, Yahui Zhou)

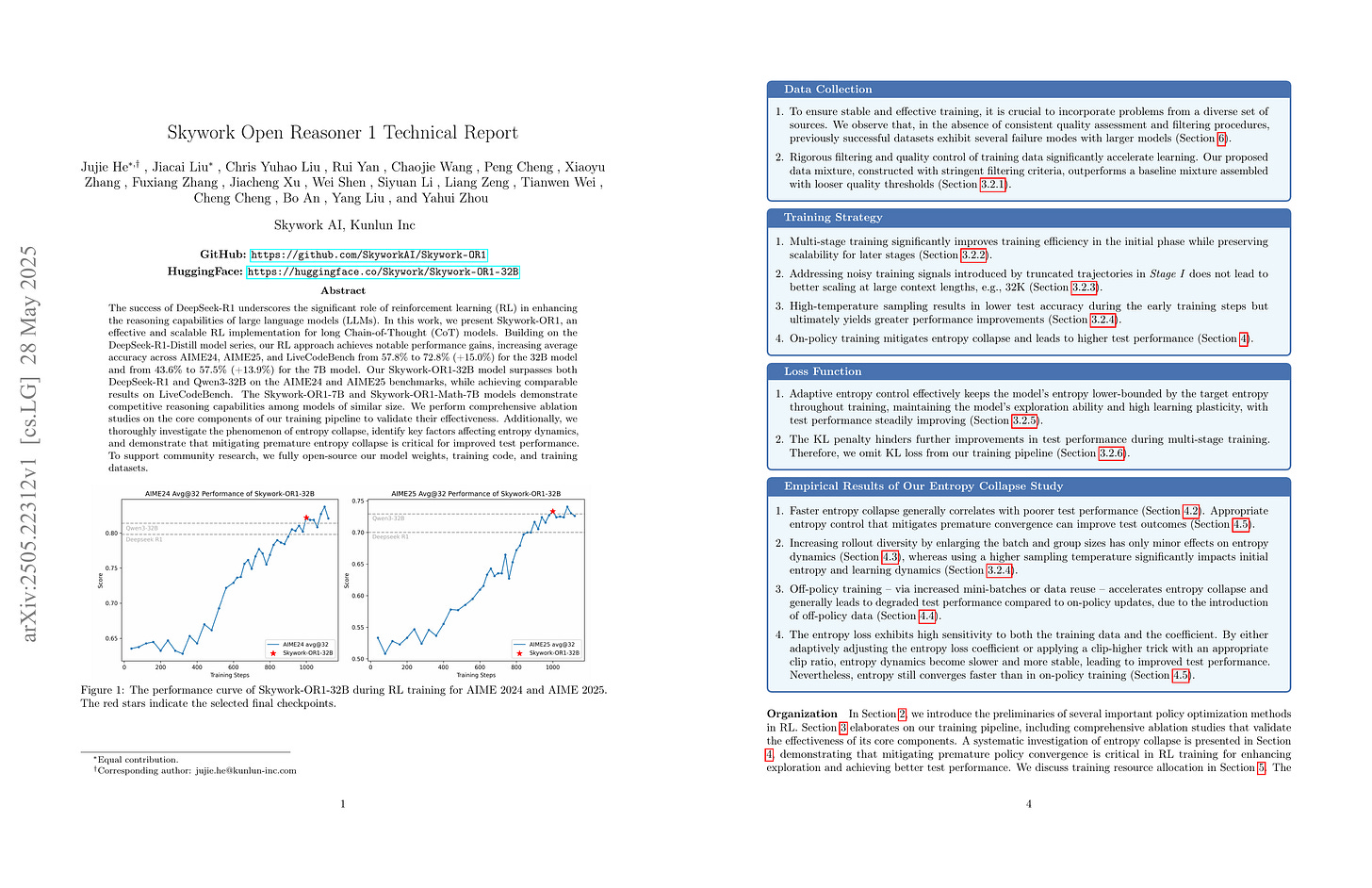

The success of DeepSeek-R1 underscores the significant role of reinforcement learning (RL) in enhancing the reasoning capabilities of large language models (LLMs). In this work, we present Skywork-OR1, an effective and scalable RL implementation for long Chain-of-Thought (CoT) models. Building on the DeepSeek-R1-Distill model series, our RL approach achieves notable performance gains, increasing average accuracy across AIME24, AIME25, and LiveCodeBench from 57.8% to 72.8% (+15.0%) for the 32B model and from 43.6% to 57.5% (+13.9%) for the 7B model. Our Skywork-OR1-32B model surpasses both DeepSeek-R1 and Qwen3-32B on the AIME24 and AIME25 benchmarks, while achieving comparable results on LiveCodeBench. The Skywork-OR1-7B and Skywork-OR1-Math-7B models demonstrate competitive reasoning capabilities among models of similar size. We perform comprehensive ablation studies on the core components of our training pipeline to validate their effectiveness. Additionally, we thoroughly investigate the phenomenon of entropy collapse, identify key factors affecting entropy dynamics, and demonstrate that mitigating premature entropy collapse is critical for improved test performance. To support community research, we fully open-source our model weights, training code, and training datasets.

Skywork의 추론 모델 실험 리포트. KL Penalty는 제외하고 엔트로피 Loss를 사용했군요. 그런데 요즘 Skywork는 자체 베이스 모델은 잘 안 쓰네요.

This is an experimental report on Skywork's reasoning model. They excluded the KL penalty and used adaptive entropy loss. Interestingly, Skywork doesn't seem to be using their own base model much these days.

#rl #reasoning

Hardware-Efficient Attention for Fast Decoding

(Ted Zadouri, Hubert Strauss, Tri Dao)

LLM decoding is bottlenecked for large batches and long contexts by loading the key-value (KV) cache from high-bandwidth memory, which inflates per-token latency, while the sequential nature of decoding limits parallelism. We analyze the interplay among arithmetic intensity, parallelization, and model quality and question whether current architectures fully exploit modern hardware. This work redesigns attention to perform more computation per byte loaded from memory to maximize hardware efficiency without trading off parallel scalability. We first propose Grouped-Tied Attention (GTA), a simple variant that combines and reuses key and value states, reducing memory transfers without compromising model quality. We then introduce Grouped Latent Attention (GLA), a parallel-friendly latent attention paired with low-level optimizations for fast decoding while maintaining high model quality. Experiments show that GTA matches Grouped-Query Attention (GQA) quality while using roughly half the KV cache and that GLA matches Multi-head Latent Attention (MLA) and is easier to shard. Our optimized GLA kernel is up to 2× faster than FlashMLA, for example, in a speculative decoding setting when the query length exceeds one. Furthermore, by fetching a smaller KV cache per device, GLA reduces end-to-end latency and increases throughput in online serving benchmarks by up to 2×.

GQA와 MLA의 하드웨어 효율적인 버전. 성능도 더 우수하네요. 그룹 구조를 부여하면 Sparse Attention의 적용도 더 쉬워지겠죠. 고려할만한 선택 같네요.

This is a hardware-efficient version of GQA and MLA, with even better performance. Adopting a group structure would make it easier to apply sparse attention as well. It seems like an option worth considering.

#efficiency

Maximizing Confidence Alone Improves Reasoning

(Mihir Prabhudesai, Lili Chen, Alex Ippoliti, Katerina Fragkiadaki, Hao Liu, Deepak Pathak)

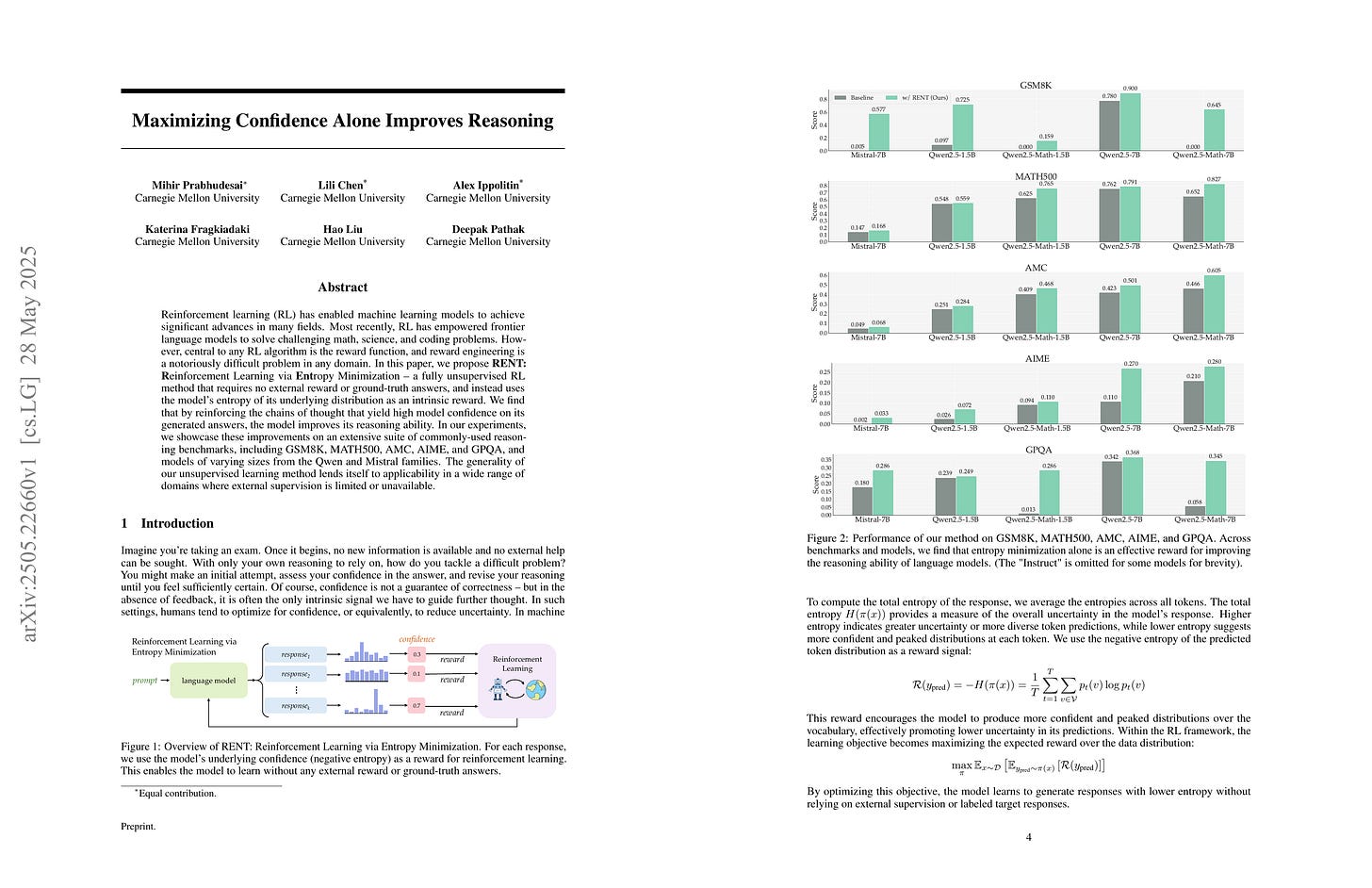

Reinforcement learning (RL) has enabled machine learning models to achieve significant advances in many fields. Most recently, RL has empowered frontier language models to solve challenging math, science, and coding problems. However, central to any RL algorithm is the reward function, and reward engineering is a notoriously difficult problem in any domain. In this paper, we propose RENT: Reinforcement Learning via Entropy Minimization -- a fully unsupervised RL method that requires no external reward or ground-truth answers, and instead uses the model's entropy of its underlying distribution as an intrinsic reward. We find that by reinforcing the chains of thought that yield high model confidence on its generated answers, the model improves its reasoning ability. In our experiments, we showcase these improvements on an extensive suite of commonly-used reasoning benchmarks, including GSM8K, MATH500, AMC, AIME, and GPQA, and models of varying sizes from the Qwen and Mistral families. The generality of our unsupervised learning method lends itself to applicability in a wide range of domains where external supervision is limited or unavailable.

엔트로피나 Majority Voting으로 정답 없이 추론 RL이 가능하다는 결과가 요즘 정말 많이 나오고 있죠 (https://arxiv.org/abs/2505.15134, https://arxiv.org/abs/2505.17454, https://arxiv.org/abs/2505.20347, https://arxiv.org/abs/2505.22453). 반대로 데이터를 사용해서 얻을 수 있는 것은 무엇인가를 묻는 것도 재미있지 않을까요.

Recently, there have been numerous studies showing that reasoning RL is possible without ground truth answers by using entropy or majority voting (https://arxiv.org/abs/2505.15134, https://arxiv.org/abs/2505.17454, https://arxiv.org/abs/2505.20347, https://arxiv.org/abs/2505.22453). Conversely, it might be interesting to explore what we can gain by utilizing data.

#rl #reasoning

The Climb Carves Wisdom Deeper Than the Summit: On the Noisy Rewards in Learning to Reason

(Ang Lv, Ruobing Xie, Xingwu Sun, Zhanhui Kang, Rui Yan)

Recent studies on post-training large language models (LLMs) for reasoning through reinforcement learning (RL) typically focus on tasks that can be accurately verified and rewarded, such as solving math problems. In contrast, our research investigates the impact of reward noise, a more practical consideration for real-world scenarios involving the post-training of LLMs using reward models. We found that LLMs demonstrate strong robustness to substantial reward noise. For example, manually flipping 40% of the reward function's outputs in math tasks still allows a Qwen-2.5-7B model to achieve rapid convergence, improving its performance on math tasks from 5% to 72%, compared to the 75% accuracy achieved by a model trained with noiseless rewards. Surprisingly, by only rewarding the appearance of key reasoning phrases (namely reasoning pattern reward, RPR), such as ``first, I need to''-without verifying the correctness of answers, the model achieved peak downstream performance (over 70% accuracy for Qwen-2.5-7B) comparable to models trained with strict correctness verification and accurate rewards. Recognizing the importance of the reasoning process over the final results, we combined RPR with noisy reward models. RPR helped calibrate the noisy reward models, mitigating potential false negatives and enhancing the LLM's performance on open-ended tasks. These findings suggest the importance of improving models' foundational abilities during the pre-training phase while providing insights for advancing post-training techniques. Our code and scripts are available at https://github.com/trestad/Noisy-Rewards-in-Learning-to-Reason.

추론 RL이 보상의 노이즈에 강하다는 결과가 하나 더 나왔군요 (https://github.com/ruixin31/Rethink_RLVR/blob/main/paper/rethink-rlvr.pdf).

Another result has appeared showing that reasoning RL is robust to reward noise (https://github.com/ruixin31/Rethink_RLVR/blob/main/paper/rethink-rlvr.pdf).

#rl #reasoning

Fast-dLLM: Training-free Acceleration of Diffusion LLM by Enabling KV Cache and Parallel Decoding

(Chengyue Wu, Hao Zhang, Shuchen Xue, Zhijian Liu, Shizhe Diao, Ligeng Zhu, Ping Luo, Song Han, Enze Xie)

Diffusion-based large language models (Diffusion LLMs) have shown promise for non-autoregressive text generation with parallel decoding capabilities. However, the practical inference speed of open-sourced Diffusion LLMs often lags behind autoregressive models due to the lack of Key-Value (KV) Cache and quality degradation when decoding multiple tokens simultaneously. To bridge this gap, we introduce a novel block-wise approximate KV Cache mechanism tailored for bidirectional diffusion models, enabling cache reuse with negligible performance drop. Additionally, we identify the root cause of generation quality degradation in parallel decoding as the disruption of token dependencies under the conditional independence assumption. To address this, we propose a confidence-aware parallel decoding strategy that selectively decodes tokens exceeding a confidence threshold, mitigating dependency violations and maintaining generation quality. Experimental results on LLaDA and Dream models across multiple LLM benchmarks demonstrate up to 27.6× throughput improvement with minimal accuracy loss, closing the performance gap with autoregressive models and paving the way for practical deployment of Diffusion LLMs.

Diffusion LM에 대해 KV 캐시를 도입한 연구가 하나 더 나왔군요. 이전 연구와 비슷하게 KV 캐시가 크게 변화하지 않는다는 것을 기반으로 했네요 (https://arxiv.org/abs/2505.15781). Diffusion LM에 대해 벌써 KV 캐시 연구까지 나왔다는 게 재미있습니다.

Another study introducing KV cache for diffusion LMs has emerged. Similar to previous research, it's based on the observation that the KV cache doesn't change significantly (https://arxiv.org/abs/2505.15781). It's interesting to see that KV cache studies for diffusion LMs are already appearing.

#diffusion #llm

On the Surprising Effectiveness of Large Learning Rates under Standard Width Scaling

(Moritz Haas, Sebastian Bordt, Ulrike von Luxburg, Leena Chennuru Vankadara)

The dominant paradigm for training large-scale vision and language models is He initialization and a single global learning rate (standard parameterization, SP). Despite its practical success, standard parametrization remains poorly understood from a theoretical perspective: Existing infinite-width theory would predict instability under large learning rates and vanishing feature learning under stable learning rates. However, empirically optimal learning rates consistently decay much slower than theoretically predicted. By carefully studying neural network training dynamics, we demonstrate that this discrepancy is not fully explained by finite-width phenomena such as catapult effects or a lack of alignment between weights and incoming activations. We instead show that the apparent contradiction can be fundamentally resolved by taking the loss function into account: In contrast to Mean Squared Error (MSE) loss, we prove that under cross-entropy (CE) loss, an intermediate controlled divergence regime emerges, where logits diverge but loss, gradients, and activations remain stable. Stable training under large learning rates enables persistent feature evolution at scale in all hidden layers, which is crucial for the practical success of SP. In experiments across optimizers (SGD, Adam), architectures (MLPs, GPT) and data modalities (vision, language), we validate that neural networks operate in this controlled divergence regime under CE loss but not under MSE loss. Our empirical evidence suggests that width-scaling considerations are surprisingly useful for predicting empirically optimal learning rate exponents. Finally, our analysis clarifies the effectiveness and limitations of recently proposed layerwise learning rate scalings for standard initialization.

Standard Parameterization이 왜 작동하는가? 라는 질문에 대한 분석. Categorical CE Loss의 덕이 크다고 하네요.

Analysis of why standard parameterization works. The paper suggests that categorical CE loss plays a significant role in its effectiveness.

#hyperparameter

Taming Transformer Without Using Learning Rate Warmup

(Xianbiao Qi, Yelin He, Jiaquan Ye, Chun-Guang Li, Bojia Zi, Xili Dai, Qin Zou, Rong Xiao)

Scaling Transformer to a large scale without using some technical tricks such as learning rate warump and using an obviously lower learning rate is an extremely challenging task, and is increasingly gaining more attention. In this paper, we provide a theoretical analysis for the process of training Transformer and reveal the rationale behind the model crash phenomenon in the training process, termed spectral energy concentration of W_q^TW_k, which is the reason for a malignant entropy collapse, where W_q and W_k are the projection matrices for the query and the key in Transformer, respectively. To remedy this problem, motivated by \textit{Weyl's Inequality}, we present a novel optimization strategy, \ie, making the weight updating in successive steps smooth -- if the ratio σ_1(∇W_t)/σ_1(\W_t-1) is larger than a threshold, we will automatically bound the learning rate to a weighted multiple of σ_1(\W_t-1)/σ_1(∇W_t), where ∇W_t is the updating quantity in step t. Such an optimization strategy can prevent spectral energy concentration to only a few directions, and thus can avoid malignant entropy collapse which will trigger the model crash. We conduct extensive experiments using ViT, Swin-Transformer and GPT, showing that our optimization strategy can effectively and stably train these Transformers without using learning rate warmup.

트랜스포머 학습 불안정성의 원인이 QK의 빠른 증가에 있다는 분석. 따라서 QK Projection의 Spectral Norm에 대한 통제를 시도했군요. 이전에 비슷한 연구가 있었죠 (https://arxiv.org/abs/2303.06296).

This analysis suggests that the training instability of transformers stems from the rapid increase in QK. As a result, the researchers attempted to control the spectral norm of the QK projection. It reminds me a previous study (https://arxiv.org/abs/2303.06296).

#transformer #optimization

Advancing Expert Specialization for Better MoE

(Hongcan Guo, Haolang Lu, Guoshun Nan, Bolun Chu, Jialin Zhuang, Yuan Yang, Wenhao Che, Sicong Leng, Qimei Cui, Xudong Jiang)

Mixture-of-Experts (MoE) models enable efficient scaling of large language models (LLMs) by activating only a subset of experts per input. However, we observe that the commonly used auxiliary load balancing loss often leads to expert overlap and overly uniform routing, which hinders expert specialization and degrades overall performance during post-training. To address this, we propose a simple yet effective solution that introduces two complementary objectives: (1) an orthogonality loss to encourage experts to process distinct types of tokens, and (2) a variance loss to encourage more discriminative routing decisions. Gradient-level analysis demonstrates that these objectives are compatible with the existing auxiliary loss and contribute to optimizing the training process. Experimental results over various model architectures and across multiple benchmarks show that our method significantly enhances expert specialization. Notably, our method improves classic MoE baselines with auxiliary loss by up to 23.79%, while also maintaining load balancing in downstream tasks, without any architectural modifications or additional components. We will release our code to contribute to the community.

MoE에서 Expert들이, 특히 Load Balancing에 의해 서로 비슷해지는 것을 억제해보려고 한 시도.

An attempt to suppress experts becoming similar to each other in MoEs, especially due to load balancing.

#moe

Thinking with Generated Images

(Ethan Chern, Zhulin Hu, Steffi Chern, Siqi Kou, Jiadi Su, Yan Ma, Zhijie Deng, Pengfei Liu)

We present Thinking with Generated Images, a novel paradigm that fundamentally transforms how large multimodal models (LMMs) engage with visual reasoning by enabling them to natively think across text and vision modalities through spontaneous generation of intermediate visual thinking steps. Current visual reasoning with LMMs is constrained to either processing fixed user-provided images or reasoning solely through text-based chain-of-thought (CoT). Thinking with Generated Images unlocks a new dimension of cognitive capability where models can actively construct intermediate visual thoughts, critique their own visual hypotheses, and refine them as integral components of their reasoning process. We demonstrate the effectiveness of our approach through two complementary mechanisms: (1) vision generation with intermediate visual subgoals, where models decompose complex visual tasks into manageable components that are generated and integrated progressively, and (2) vision generation with self-critique, where models generate an initial visual hypothesis, analyze its shortcomings through textual reasoning, and produce refined outputs based on their own critiques. Our experiments on vision generation benchmarks show substantial improvements over baseline approaches, with our models achieving up to 50% (from 38% to 57%) relative improvement in handling complex multi-object scenarios. From biochemists exploring novel protein structures, and architects iterating on spatial designs, to forensic analysts reconstructing crime scenes, and basketball players envisioning strategic plays, our approach enables AI models to engage in the kind of visual imagination and iterative refinement that characterizes human creative, analytical, and strategic thinking. We release our open-source suite at https://github.com/GAIR-NLP/thinking-with-generated-images.

이미지 생성으로 사고하기. 다만 과제는 점진적으로 이미지를 개선하면서 생성하는 이미지 생성이네요.

Thinking with image generations. However, the task involves image generation through gradual improvement and self-critique of the generated images.

#image-generation #multimodal

Improving Continual Pre-training Through Seamless Data Packing

(Ruicheng Yin, Xuan Gao, Changze Lv, Xiaohua Wang, Xiaoqing Zheng, Xuanjing Huang)

Continual pre-training has demonstrated significant potential in enhancing model performance, particularly in domain-specific scenarios. The most common approach for packing data before continual pre-training involves concatenating input texts and splitting them into fixed-length sequences. While straightforward and efficient, this method often leads to excessive truncation and context discontinuity, which can hinder model performance. To address these issues, we explore the potential of data engineering to enhance continual pre-training, particularly its impact on model performance and efficiency. We propose Seamless Packing (SP), a novel data packing strategy aimed at preserving contextual information more effectively and enhancing model performance. Our approach employs a sliding window technique in the first stage that synchronizes overlapping tokens across consecutive sequences, ensuring better continuity and contextual coherence. In the second stage, we adopt a First-Fit-Decreasing algorithm to pack shorter texts into bins slightly larger than the target sequence length, thereby minimizing padding and truncation. Empirical evaluations across various model architectures and corpus domains demonstrate the effectiveness of our method, outperforming baseline method in 99% of all settings. Code is available at https://github.com/Infernus-WIND/Seamless-Packing.

Sequence Packing 연구. 문서 청크에 Sliding Window 형태로 추가 컨텍스트를 붙여주는 건 흥미롭네요.

A study on sequence packing. It's interesting how they add additional context to document chunks using a sliding window.

#continual-learning