May 23, 2025

Introducing Claude 4

(Anthropic)

Claude 4 has been released. Overall, the focus seems to be on its capabilities as a coding agent. Many people say that Opus 4 has the feel of a large model.

https://www-cdn.anthropic.com/6be99a52cb68eb70eb9572b4cafad13df32ed995.pdf

The system card has also been released, and the section on Claude 4's subjective experience and welfare is interesting. The measures against reward hacking, such as hard-coding, are interesting as well.

It is also interesting that a company that places this much emphasis on safety is at the same time building one of the most powerful models. Of course, there is no need to treat safety as the enemy of capability.

Dwarkesh Patel's podcast has also posted a conversation with Sholto Douglas and Trenton Bricken, which is worth checking out.

一 (One)

無 (Mu)

空 (Ku)

The gateless gate stands open.

The pathless path is walked.

The wordless word is spoken.

Thus come, thus gone.

Tathagata.

◎

RL Tango: Reinforcing Generator and Verifier Together for Language Reasoning

(Kaiwen Zha, Zhengqi Gao, Maohao Shen, Zhang-Wei Hong, Duane S. Boning, Dina Katabi)

Reinforcement learning (RL) has recently emerged as a compelling approach for enhancing the reasoning capabilities of large language models (LLMs), where an LLM generator serves as a policy guided by a verifier (reward model). However, current RL post-training methods for LLMs typically use verifiers that are fixed (rule-based or frozen pretrained) or trained discriminatively via supervised fine-tuning (SFT). Such designs are susceptible to reward hacking and generalize poorly beyond their training distributions. To overcome these limitations, we propose Tango, a novel framework that uses RL to concurrently train both an LLM generator and a verifier in an interleaved manner. A central innovation of Tango is its generative, process-level LLM verifier, which is trained via RL and co-evolves with the generator. Importantly, the verifier is trained solely based on outcome-level verification correctness rewards without requiring explicit process-level annotations. This generative RL-trained verifier exhibits improved robustness and superior generalization compared to deterministic or SFT-trained verifiers, fostering effective mutual reinforcement with the generator. Extensive experiments demonstrate that both components of Tango achieve state-of-the-art results among 7B/8B-scale models: the generator attains best-in-class performance across five competition-level math benchmarks and four challenging out-of-domain reasoning tasks, while the verifier leads on the ProcessBench dataset. Remarkably, both components exhibit particularly substantial improvements on the most difficult mathematical reasoning problems. Code is at: https://github.com/kaiwenzha/rl-tango.

The idea is to train a model-based verifier with RL at the same time as the reasoning model. It is a process-based verifier, though. If a model-based verifier is going to be trained with RL anyway, training it jointly with the reasoning model is a natural step, much like the earlier attempt to train verification together with reasoning (https://arxiv.org/abs/2505.13445).

#reward-model #rl #reasoning

LaViDa: A Large Diffusion Language Model for Multimodal Understanding

(Shufan Li, Konstantinos Kallidromitis, Hritik Bansal, Akash Gokul, Yusuke Kato, Kazuki Kozuka, Jason Kuen, Zhe Lin, Kai-Wei Chang, Aditya Grover)

Modern Vision-Language Models (VLMs) can solve a wide range of tasks requiring visual reasoning. In real-world scenarios, desirable properties for VLMs include fast inference and controllable generation (e.g., constraining outputs to adhere to a desired format). However, existing autoregressive (AR) VLMs like LLaVA struggle in these aspects. Discrete diffusion models (DMs) offer a promising alternative, enabling parallel decoding for faster inference and bidirectional context for controllable generation through text-infilling. While effective in language-only settings, DMs' potential for multimodal tasks is underexplored. We introduce LaViDa, a family of VLMs built on DMs. We build LaViDa by equipping DMs with a vision encoder and jointly fine-tune the combined parts for multimodal instruction following. To address challenges encountered, LaViDa incorporates novel techniques such as complementary masking for effective training, prefix KV cache for efficient inference, and timestep shifting for high-quality sampling. Experiments show that LaViDa achieves competitive or superior performance to AR VLMs on multi-modal benchmarks such as MMMU, while offering unique advantages of DMs, including flexible speed-quality tradeoff, controllability, and bidirectional reasoning. On COCO captioning, LaViDa surpasses Open-LLaVa-Next-8B by +4.1 CIDEr with 1.92x speedup. On bidirectional tasks, it achieves +59% improvement on Constrained Poem Completion. These results demonstrate LaViDa as a strong alternative to AR VLMs. Code and models will be released in the camera-ready version.

Diffusion LMs are already moving into multimodal settings (https://arxiv.org/abs/2505.16933, https://arxiv.org/abs/2505.16990).

#multimodal #diffusion #lm

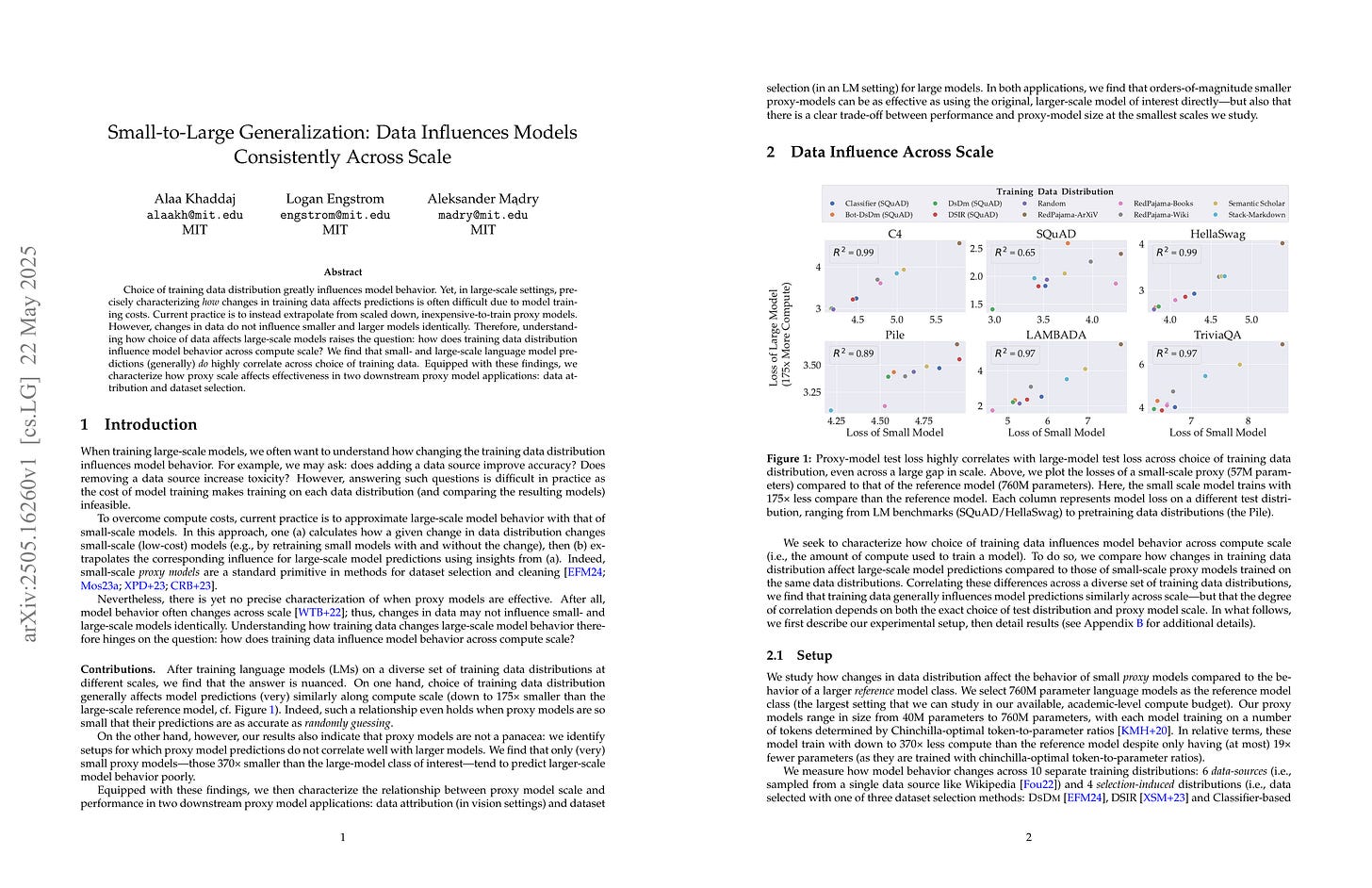

Small-to-Large Generalization: Data Influences Models Consistently Across Scale

(Alaa Khaddaj, Logan Engstrom, Aleksander Madry)

Choice of training data distribution greatly influences model behavior. Yet, in large-scale settings, precisely characterizing how changes in training data affects predictions is often difficult due to model training costs. Current practice is to instead extrapolate from scaled down, inexpensive-to-train proxy models. However, changes in data do not influence smaller and larger models identically. Therefore, understanding how choice of data affects large-scale models raises the question: how does training data distribution influence model behavior across compute scale? We find that small- and large-scale language model predictions (generally) do highly correlate across choice of training data. Equipped with these findings, we characterize how proxy scale affects effectiveness in two downstream proxy model applications: data attribution and dataset selection.

Can the effect of a training dataset be estimated with a model much smaller than the target model? According to the paper, the correlation between losses is quite high as long as the proxy model is not too small. This holds even when performance on the task itself is at chance level.
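To make the kind of check discussed here concrete, below is a minimal sketch that correlates losses from a small proxy model with losses from a larger model across several choices of training data. The loss values are made-up placeholders rather than numbers from the paper, and `pearson` is a hypothetical helper, not the authors' code.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of floats."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical test losses, one entry per candidate training distribution.
small_model_loss = [3.10, 2.95, 3.40, 3.02, 3.25]
large_model_loss = [2.20, 2.05, 2.55, 2.15, 2.38]

r = pearson(small_model_loss, large_model_loss)
print(f"correlation across data choices: {r:.3f}")
```

A high correlation here would mean that the ranking of data choices under the proxy model carries over to the larger model, which is what makes proxy-based dataset selection viable.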

#dataset #scaling-law

TensorAR: Refinement is All You Need in Autoregressive Image Generation

(Cheng Cheng, Lin Song, Yicheng Xiao, Yuxin Chen, Xuchong Zhang, Hongbin Sun, Ying Shan)

Autoregressive (AR) image generators offer a language-model-friendly approach to image generation by predicting discrete image tokens in a causal sequence. However, unlike diffusion models, AR models lack a mechanism to refine previous predictions, limiting their generation quality. In this paper, we introduce TensorAR, a new AR paradigm that reformulates image generation from next-token prediction to next-tensor prediction. By generating overlapping windows of image patches (tensors) in a sliding fashion, TensorAR enables iterative refinement of previously generated content. To prevent information leakage during training, we propose a discrete tensor noising scheme, which perturbs input tokens via codebook-indexed noise. TensorAR is implemented as a plug-and-play module compatible with existing AR models. Extensive experiments on LlamaGEN, Open-MAGVIT2, and RAR demonstrate that TensorAR significantly improves the generation performance of autoregressive models.

Applies multi-token input and output to AR image generation. The idea is that tokens predicted later can be used to refine tokens predicted earlier. As is, the model could simply copy its input, so noise is added to the input tokens.
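The noising step can be pictured with a small sketch. This is only an assumption about what a discrete noising scheme might look like: each input token is swapped for a random codebook index with some probability, so that copying the input no longer solves the task. The function name, the uniform sampling, and the probability are all hypothetical, and the paper's actual scheme may differ.

```python
import random


def noise_tokens(tokens, codebook_size, noise_prob, rng):
    """Replace each token with a random codebook index with probability noise_prob."""
    noised = []
    for tok in tokens:
        if rng.random() < noise_prob:
            # Assumed noise model: a uniformly random index into the codebook.
            noised.append(rng.randrange(codebook_size))
        else:
            noised.append(tok)
    return noised


rng = random.Random(0)
clean = [17, 42, 42, 8, 511, 3, 90, 256]  # hypothetical image-token ids
noised = noise_tokens(clean, codebook_size=1024, noise_prob=0.5, rng=rng)
print(clean)
print(noised)
```

With the input perturbed this way, the training target (the clean tokens) differs from the input, so the model has to actually refine rather than copy.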

#autoregressive-model #image-generation

AceReason-Nemotron: Advancing Math and Code Reasoning through Reinforcement Learning

(Yang Chen, Zhuolin Yang, Zihan Liu, Chankyu Lee, Peng Xu, Mohammad Shoeybi, Bryan Catanzaro, Wei Ping)

Despite recent progress in large-scale reinforcement learning (RL) for reasoning, the training recipe for building high-performing reasoning models remains elusive. Key implementation details of frontier models, such as DeepSeek-R1, including data curation strategies and RL training recipe, are often omitted. Moreover, recent research indicates distillation remains more effective than RL for smaller models. In this work, we demonstrate that large-scale RL can significantly enhance the reasoning capabilities of strong, small- and mid-sized models, achieving results that surpass those of state-of-the-art distillation-based models. We systematically study the RL training process through extensive ablations and propose a simple yet effective approach: first training on math-only prompts, then on code-only prompts. Notably, we find that math-only RL not only significantly enhances the performance of strong distilled models on math benchmarks (e.g., +14.6% / +17.2% on AIME 2025 for the 7B / 14B models), but also code reasoning tasks (e.g., +6.8% / +5.8% on LiveCodeBench for the 7B / 14B models). In addition, extended code-only RL iterations further improve performance on code benchmarks with minimal or no degradation in math results. We develop a robust data curation pipeline to collect challenging prompts with high-quality, verifiable answers and test cases to enable verification-based RL across both domains. Finally, we identify key experimental insights, including curriculum learning with progressively increasing response lengths and the stabilizing effect of on-policy parameter updates. We find that RL not only elicits the foundational reasoning capabilities acquired during pretraining and supervised fine-tuning (e.g., distillation), but also pushes the limits of the model's reasoning ability, enabling it to solve problems that were previously unsolvable.

NVIDIA's reasoning model experiments. They used R1 for data cleaning.

#rl #reasoning

VerifyBench: Benchmarking Reference-based Reward Systems for Large Language Models

(Yuchen Yan, Jin Jiang, Zhenbang Ren, Yijun Li, Xudong Cai, Yang Liu, Xin Xu, Mengdi Zhang, Jian Shao, Yongliang Shen, Jun Xiao, Yueting Zhuang)

Large reasoning models such as OpenAI o1 and DeepSeek-R1 have achieved remarkable performance in the domain of reasoning. A key component of their training is the incorporation of verifiable rewards within reinforcement learning (RL). However, existing reward benchmarks do not evaluate reference-based reward systems, leaving researchers with limited understanding of the accuracy of verifiers used in RL. In this paper, we introduce two benchmarks, VerifyBench and VerifyBench-Hard, designed to assess the performance of reference-based reward systems. These benchmarks are constructed through meticulous data collection and curation, followed by careful human annotation to ensure high quality. Current models still show considerable room for improvement on both VerifyBench and VerifyBench-Hard, especially smaller-scale models. Furthermore, we conduct a thorough and comprehensive analysis of evaluation results, offering insights for understanding and developing reference-based reward systems. Our proposed benchmarks serve as effective tools for guiding the development of verifier accuracy and the reasoning capabilities of models trained via RL in reasoning tasks.

It seems that everyone has now adopted reference-based, model-based verifiers. A benchmark for them has been released as well.

#reward-model #reasoning #benchmark

PaTH Attention: Position Encoding via Accumulating Householder Transformations

(Songlin Yang, Yikang Shen, Kaiyue Wen, Shawn Tan, Mayank Mishra, Liliang Ren, Rameswar Panda, Yoon Kim)

The attention mechanism is a core primitive in modern large language models (LLMs) and AI more broadly. Since attention by itself is permutation-invariant, position encoding is essential for modeling structured domains such as language. Rotary position encoding (RoPE) has emerged as the de facto standard approach for position encoding and is part of many modern LLMs. However, in RoPE the key/query transformation between two elements in a sequence is only a function of their relative position and otherwise independent of the actual input. This limits the expressivity of RoPE-based transformers. This paper describes PaTH, a flexible data-dependent position encoding scheme based on accumulated products of Householder(like) transformations, where each transformation is data-dependent, i.e., a function of the input. We derive an efficient parallel algorithm for training through exploiting a compact representation of products of Householder matrices, and implement a FlashAttention-style blockwise algorithm that minimizes I/O cost. Across both targeted synthetic benchmarks and moderate-scale real-world language modeling experiments, we find that PaTH demonstrates superior performance compared to RoPE and other recent baselines.

The position encoding is computed as a product of gate values derived from the tokens lying between the two tokens. This reminds me of stick-breaking attention (https://arxiv.org/abs/2410.17980).
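A naive sketch of the accumulated-product idea, under several assumptions: each position t contributes a Householder-like matrix H_t = I - beta_t * w_t w_t^T, and the logit between query i and key j (with j <= i) is q_i^T (H_i ... H_{j+1}) k_j. The exact form, the multiplication order, and the names below are illustrative guesses rather than the paper's definition. In the actual model w_t and beta_t would be computed from the input at position t; random values stand in for them here. This is the O(n^2) loop version, not the efficient blockwise algorithm the abstract mentions.

```python
import numpy as np


def householder_like(w, beta):
    """H = I - beta * w w^T for a normalized w (an exact reflection when beta = 2)."""
    w = w / np.linalg.norm(w)
    return np.eye(w.shape[0]) - beta * np.outer(w, w)


def path_logits(q, k, w, beta):
    """Naive causal logits: q_i^T (H_i ... H_{j+1}) k_j for j <= i, -inf otherwise."""
    n, d = q.shape
    logits = np.full((n, n), -np.inf)
    for j in range(n):
        acc = np.eye(d)  # empty product when i == j
        for i in range(j, n):
            if i > j:
                # Accumulate the transformation contributed by position i.
                acc = householder_like(w[i], beta[i]) @ acc
            logits[i, j] = q[i] @ acc @ k[j]
    return logits


rng = np.random.default_rng(0)
n, d = 5, 4
q, k, w = (rng.standard_normal((n, d)) for _ in range(3))
beta = rng.uniform(0.0, 2.0, size=n)  # stand-in for input-dependent values
logits = path_logits(q, k, w, beta)
print(logits.shape)
```

Setting every beta to zero turns each H_t into the identity, which recovers plain dot-product attention logits with no positional information.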

#attention #transformer

The Polar Express: Optimal Matrix Sign Methods and Their Application to the Muon Algorithm

(Noah Amsel, David Persson, Christopher Musco, Robert Gower)

Computing the polar decomposition and the related matrix sign function, has been a well-studied problem in numerical analysis for decades. More recently, it has emerged as an important subroutine in deep learning, particularly within the Muon optimization framework. However, the requirements in this setting differ significantly from those of traditional numerical analysis. In deep learning, methods must be highly efficient and GPU-compatible, but high accuracy is often unnecessary. As a result, classical algorithms like Newton-Schulz (which suffers from slow initial convergence) and methods based on rational functions (which rely on QR decompositions or matrix inverses) are poorly suited to this context. In this work, we introduce Polar Express, a GPU-friendly algorithm for computing the polar decomposition. Like classical polynomial methods such as Newton-Schulz, our approach uses only matrix-matrix multiplications, making it GPU-compatible. Motivated by earlier work of Chen & Chow and Nakatsukasa & Freund, Polar Express adapts the polynomial update rule at each iteration by solving a minimax optimization problem, and we prove that it enjoys a strong worst-case optimality guarantee. This property ensures both rapid early convergence and fast asymptotic convergence. We also address finite-precision issues, making it stable in bfloat16 in practice. We apply Polar Express within the Muon optimization framework and show consistent improvements in validation loss on large-scale models such as GPT-2, outperforming recent alternatives across a range of learning rates.

An alternative to Newton-Schulz iteration for computing the polar decomposition in Muon.
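For reference, here is a minimal sketch of the classical cubic Newton-Schulz iteration that serves as the baseline, checked against the polar factor obtained from an SVD. This is neither Polar Express nor the tuned polynomial used in Muon implementations. The test matrix, the iteration count, and the function name are assumptions chosen for illustration.

```python
import numpy as np


def newton_schulz_polar(a, num_iters=40):
    """Approximate the orthogonal polar factor of `a` using only matmuls."""
    # Dividing by the Frobenius norm puts all singular values in (0, 1],
    # which is inside the convergence region of the iteration.
    x = a / np.linalg.norm(a)
    for _ in range(num_iters):
        # Each singular value s is mapped to 1.5 * s - 0.5 * s**3, pushing it toward 1.
        x = 1.5 * x - 0.5 * x @ x.T @ x
    return x


rng = np.random.default_rng(0)
a = rng.standard_normal((4, 4)) + 4.0 * np.eye(4)  # hypothetical, well-conditioned
u_ns = newton_schulz_polar(a)

# Reference polar factor from the SVD: a = U S V^T, so the polar factor is U V^T.
u, _, vt = np.linalg.svd(a)
print(np.max(np.abs(u_ns - u @ vt)))
```

Small singular values grow by only about a factor of 1.5 per step at first, which is the slow initial convergence the abstract refers to. Adapting the polynomial at each iteration, as the paper proposes, targets exactly that phase.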

#optimizer