2025년 5월 16일

Parallel Scaling Law for Language Models

(Mouxiang Chen, Binyuan Hui, Zeyu Cui, Jiaxi Yang, Dayiheng Liu, Jianling Sun, Junyang Lin, Zhongxin Liu)

It is commonly believed that scaling language models should commit a significant space or time cost, by increasing the parameters (parameter scaling) or output tokens (inference-time scaling). We introduce the third and more inference-efficient scaling paradigm: increasing the model's parallel computation during both training and inference time. We apply P diverse and learnable transformations to the input, execute forward passes of the model in parallel, and dynamically aggregate the P outputs. This method, namely parallel scaling (ParScale), scales parallel computation by reusing existing parameters and can be applied to any model structure, optimization procedure, data, or task. We theoretically propose a new scaling law and validate it through large-scale pre-training, which shows that a model with P parallel streams is similar to scaling the parameters by O(log P) while showing superior inference efficiency. For example, ParScale can use up to 22× less memory increase and 6× less latency increase compared to parameter scaling that achieves the same performance improvement. It can also recycle an off-the-shelf pre-trained model into a parallelly scaled one by post-training on a small amount of tokens, further reducing the training budget. The new scaling law we discovered potentially facilitates the deployment of more powerful models in low-resource scenarios, and provides an alternative perspective for the role of computation in machine learning.

변환된 입력들을 Forward한 다음 결합해서 Scaling을 시도한 방법. 변환은 Attention 레이어 입력에 Prefix를 붙이는 방식으로 했습니다. Classifier-Free Guidance를 언급하고 있는데 Test-Time Augmentation도 생각이 나네요.

This method attempts scaling by forwarding transformed inputs and then aggregating them. The transformation was done by appending a prefix to the input of the attention layer. While they mention classifier-free guidance, it also reminds me of test-time augmentation.

#scaling-law #transformer

WorldPM: Scaling Human Preference Modeling

(Binghai Wang, Runji Lin, Keming Lu, Le Yu, Zhenru Zhang, Fei Huang, Chujie Zheng, Kai Dang, Yang Fan, Xingzhang Ren, An Yang, Binyuan Hui, Dayiheng Liu, Tao Gui, Qi Zhang, Xuanjing Huang, Yu-Gang Jiang, Bowen Yu, Jingren Zhou, Junyang Lin)

Motivated by scaling laws in language modeling that demonstrate how test loss scales as a power law with model and dataset sizes, we find that similar laws exist in preference modeling. We propose World Preference Modeling (WorldPM) to emphasize this scaling potential, where World Preference embodies a unified representation of human preferences. In this paper, we collect preference data from public forums covering diverse user communities, and conduct extensive training using 15M-scale data across models ranging from 1.5B to 72B parameters. We observe distinct patterns across different evaluation metrics: (1) Adversarial metrics (ability to identify deceptive features) consistently scale up with increased training data and base model size; (2) Objective metrics (objective knowledge with well-defined answers) show emergent behavior in larger language models, highlighting WorldPM's scalability potential; (3) Subjective metrics (subjective preferences from a limited number of humans or AI) do not demonstrate scaling trends. Further experiments validate the effectiveness of WorldPM as a foundation for preference fine-tuning. Through evaluations on 7 benchmarks with 20 subtasks, we find that WorldPM broadly improves the generalization performance across human preference datasets of varying sizes (7K, 100K and 800K samples), with performance gains exceeding 5% on many key subtasks. Integrating WorldPM into our internal RLHF pipeline, we observe significant improvements on both in-house and public evaluation sets, with notable gains of 4% to 8% in our in-house evaluations.

인터넷 포럼 데이터를 사용한 Reward Model 학습. 논문에서도 언급하지만 정확하게 Preference Model Pretraining이군요. 여기서는 PMP의 규모를 증대시켰을 때 나타나는 현상에 집중하고 있네요.

선호란 기준에 따라 다른 것이니 그 부분에서의 충돌이 있네요. 그리고 PMP 과정에서 Loss가 급격히 낮아지는 통찰의 순간이 발생했다는 이야기도 흥미롭습니다.

The study on training a reward model using internet forum data. As mentioned in the paper, it's accurately preference model pretraining. The study concentrates on the phenomena that occur when scaling up PMP.

Since preferences vary depending on criteria, there are conflicts in this aspect. Interestingly, the paper also reports on moments of epiphany during the PMP process, where there's a sudden decrease in loss.

#reward-model

End-to-End Vision Tokenizer Tuning

(Wenxuan Wang, Fan Zhang, Yufeng Cui, Haiwen Diao, Zhuoyan Luo, Huchuan Lu, Jing Liu, Xinlong Wang)

Existing vision tokenization isolates the optimization of vision tokenizers from downstream training, implicitly assuming the visual tokens can generalize well across various tasks, e.g., image generation and visual question answering. The vision tokenizer optimized for low-level reconstruction is agnostic to downstream tasks requiring varied representations and semantics. This decoupled paradigm introduces a critical misalignment: The loss of the vision tokenization can be the representation bottleneck for target tasks. For example, errors in tokenizing text in a given image lead to poor results when recognizing or generating them. To address this, we propose ETT, an end-to-end vision tokenizer tuning approach that enables joint optimization between vision tokenization and target autoregressive tasks. Unlike prior autoregressive models that use only discrete indices from a frozen vision tokenizer, ETT leverages the visual embeddings of the tokenizer codebook, and optimizes the vision tokenizers end-to-end with both reconstruction and caption objectives. ETT can be seamlessly integrated into existing training pipelines with minimal architecture modifications. Our ETT is simple to implement and integrate, without the need to adjust the original codebooks or architectures of the employed large language models. Extensive experiments demonstrate that our proposed end-to-end vision tokenizer tuning unlocks significant performance gains, i.e., 2-6% for multimodal understanding and visual generation tasks compared to frozen tokenizer baselines, while preserving the original reconstruction capability. We hope this very simple and strong method can empower multimodal foundation models besides image generation and understanding.

VQ 토크나이저를 VLM과 함께 학습시켰군요. VQ 토크나이저의 코드북 임베딩을 활용하는 형태를 채택했다고 합니다.

Training the VQ tokenizer together with the VLM. They adopted an approach that utilizes the codebook embeddings from the VQ tokenizer.

#tokenizer #vq

Superposition Yields Robust Neural Scaling

(Yizhou liu, Ziming Liu, Jeff Gore)

The success of today's large language models (LLMs) depends on the observation that larger models perform better. However, the origin of this neural scaling law -- the finding that loss decreases as a power law with model size -- remains unclear. Starting from two empirical principles -- that LLMs represent more things than the model dimensions (widths) they have (i.e., representations are superposed), and that words or concepts in language occur with varying frequencies -- we constructed a toy model to study the loss scaling with model size. We found that when superposition is weak, meaning only the most frequent features are represented without interference, the scaling of loss with model size depends on the underlying feature frequency; if feature frequencies follow a power law, so does the loss. In contrast, under strong superposition, where all features are represented but overlap with each other, the loss becomes inversely proportional to the model dimension across a wide range of feature frequency distributions. This robust scaling behavior is explained geometrically: when many more vectors are packed into a lower dimensional space, the interference (squared overlaps) between vectors scales inversely with that dimension. We then analyzed four families of open-sourced LLMs and found that they exhibit strong superposition and quantitatively match the predictions of our toy model. The Chinchilla scaling law turned out to also agree with our results. We conclude that representation superposition is an important mechanism underlying the observed neural scaling laws. We anticipate that these insights will inspire new training strategies and model architectures to achieve better performance with less computation and fewer parameters.

모델 Scaling에 의한 Loss의 감소가 Power Law를 따르는 이유가 Superposition 때문일 수 있다는 주장. Feature의 분포 자체도 Power Law를 따르지 않을까 싶긴 합니다.

This paper suggests that superposition may be the reason why loss decreases as a power law with model scaling. I also think that the distribution of features itself might follow a power law.

#mechanistic-interpretation #scaling-law

J1: Incentivizing Thinking in LLM-as-a-Judge via Reinforcement Learning

(Chenxi Whitehouse, Tianlu Wang, Ping Yu, Xian Li, Jason Weston, Ilia Kulikov, Swarnadeep Saha)

The progress of AI is bottlenecked by the quality of evaluation, and powerful LLM-as-a-Judge models have proved to be a core solution. Improved judgment ability is enabled by stronger chain-of-thought reasoning, motivating the need to find the best recipes for training such models to think. In this work we introduce J1, a reinforcement learning approach to training such models. Our method converts both verifiable and non-verifiable prompts to judgment tasks with verifiable rewards that incentivize thinking and mitigate judgment bias. In particular, our approach outperforms all other existing 8B or 70B models when trained at those sizes, including models distilled from DeepSeek-R1. J1 also outperforms o1-mini, and even R1 on some benchmarks, despite training a smaller model. We provide analysis and ablations comparing Pairwise-J1 vs Pointwise-J1 models, offline vs online training recipes, reward strategies, seed prompts, and variations in thought length and content. We find that our models make better judgments by learning to outline evaluation criteria, comparing against self-generated reference answers, and re-evaluating the correctness of model responses.

추론 Judge 모델.

A reasoning-based judge model.

#reward-model #reasoning #rl

Learning to Think: Information-Theoretic Reinforcement Fine-Tuning for LLMs

(Jingyao Wang, Wenwen Qiang, Zeen Song, Changwen Zheng, Hui Xiong)

Large language models (LLMs) excel at complex tasks thanks to advances in reasoning abilities. However, existing methods overlook the trade-off between reasoning effectiveness and computational efficiency, often encouraging unnecessarily long reasoning chains and wasting tokens. To address this, we propose Learning to Think (L2T), an information-theoretic reinforcement fine-tuning framework for LLMs to make the models achieve optimal reasoning with fewer tokens. Specifically, L2T treats each query-response interaction as a hierarchical session of multiple episodes and proposes a universal dense process reward, i.e., quantifies the episode-wise information gain in parameters, requiring no extra annotations or task-specific evaluators. We propose a method to quickly estimate this reward based on PAC-Bayes bounds and the Fisher information matrix. Theoretical analyses show that it significantly reduces computational complexity with high estimation accuracy. By immediately rewarding each episode's contribution and penalizing excessive updates, L2T optimizes the model via reinforcement learning to maximize the use of each episode and achieve effective updates. Empirical results on various reasoning benchmarks and base models demonstrate the advantage of L2T across different tasks, boosting both reasoning effectiveness and efficiency.

추론 과정을 에피소드들로 나누고 각 에피소드들이 정답률 향상에 얼마나 기여하는지를 통해 추론 효율성을 향상. 이전 연구와 비슷한데 (https://arxiv.org/abs/2503.07572) 파라미터에 대한 페널티를 추가로 사용하는군요.

The study improves reasoning efficiency by dividing the reasoning process into episodes and evaluating how each episode contributes to increasing accuracy. This approach is similar to previous research (https://arxiv.org/abs/2503.07572), but this study additionally incorporates a penalty on parameters.

#rl #reasoning #reward

MathCoder-VL: Bridging Vision and Code for Enhanced Multimodal Mathematical Reasoning

(Ke Wang, Junting Pan, Linda Wei, Aojun Zhou, Weikang Shi, Zimu Lu, Han Xiao, Yunqiao Yang, Houxing Ren, Mingjie Zhan, Hongsheng Li)

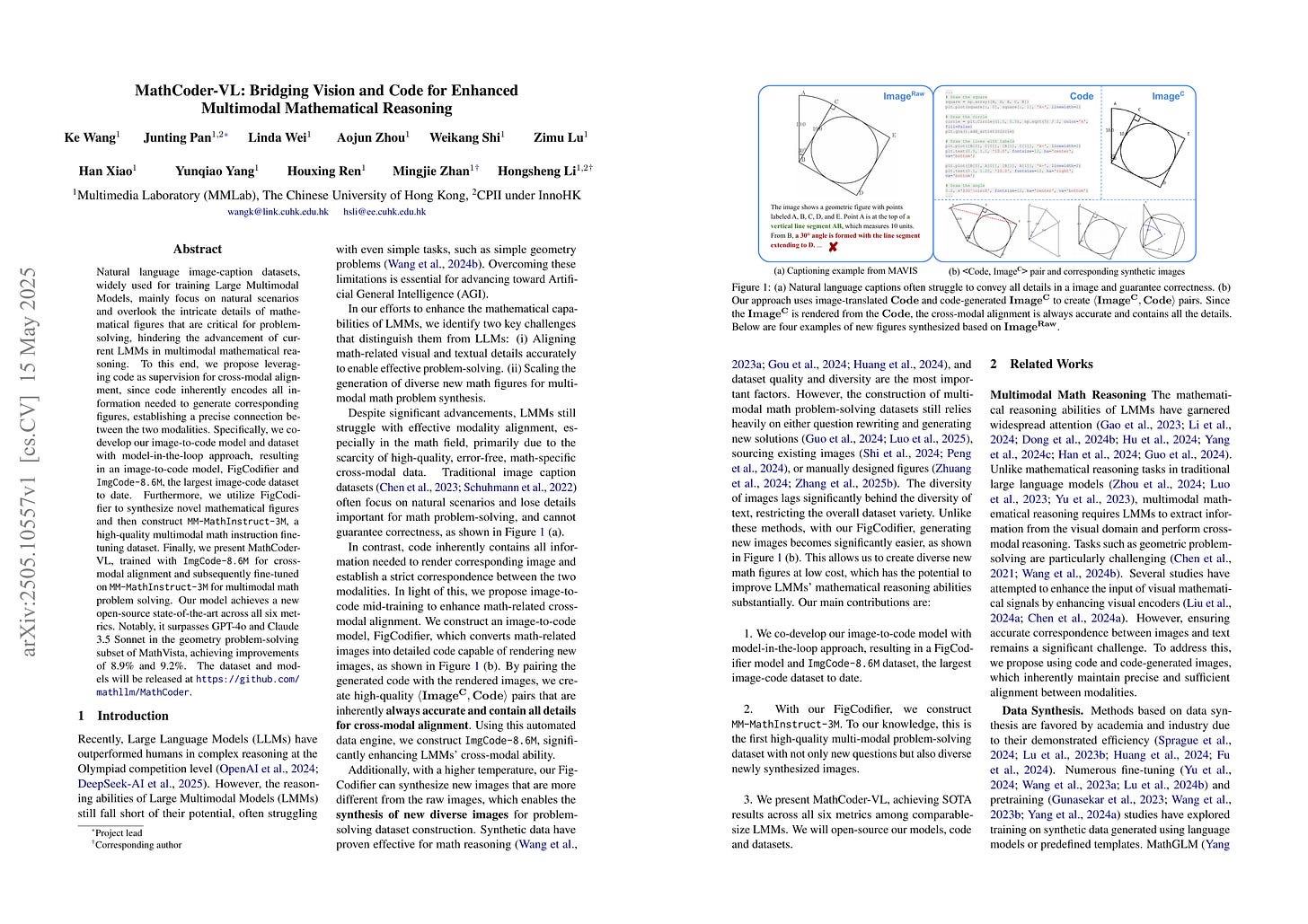

Natural language image-caption datasets, widely used for training Large Multimodal Models, mainly focus on natural scenarios and overlook the intricate details of mathematical figures that are critical for problem-solving, hindering the advancement of current LMMs in multimodal mathematical reasoning. To this end, we propose leveraging code as supervision for cross-modal alignment, since code inherently encodes all information needed to generate corresponding figures, establishing a precise connection between the two modalities. Specifically, we co-develop our image-to-code model and dataset with model-in-the-loop approach, resulting in an image-to-code model, FigCodifier and ImgCode-8.6M dataset, the largest image-code dataset to date. Furthermore, we utilize FigCodifier to synthesize novel mathematical figures and then construct MM-MathInstruct-3M, a high-quality multimodal math instruction fine-tuning dataset. Finally, we present MathCoder-VL, trained with ImgCode-8.6M for cross-modal alignment and subsequently fine-tuned on MM-MathInstruct-3M for multimodal math problem solving. Our model achieves a new open-source SOTA across all six metrics. Notably, it surpasses GPT-4o and Claude 3.5 Sonnet in the geometry problem-solving subset of MathVista, achieving improvements of 8.9% and 9.2%. The dataset and models will be released at https://github.com/mathllm/MathCoder.

이미지와 이미지를 생성하는 코드로 구성한 데이터셋. 코드로 이미지 생성이 가능하니 따라서 코드는 이미지에 대한 모든 정보를 포함하고 있다는 발상이네요.

This dataset consists of images and the corresponding codes that generate them. The key insight is that since images can be generated from code, the code inherently contains all the information about the image.

#vision-language #code