2025년 4월 9일

Adversarial Training of Reward Models

(Alexander Bukharin, Haifeng Qian, Shengyang Sun, Adithya Renduchintala, Soumye Singhal, Zhilin Wang, Oleksii Kuchaiev, Olivier Delalleau, Tuo Zhao)

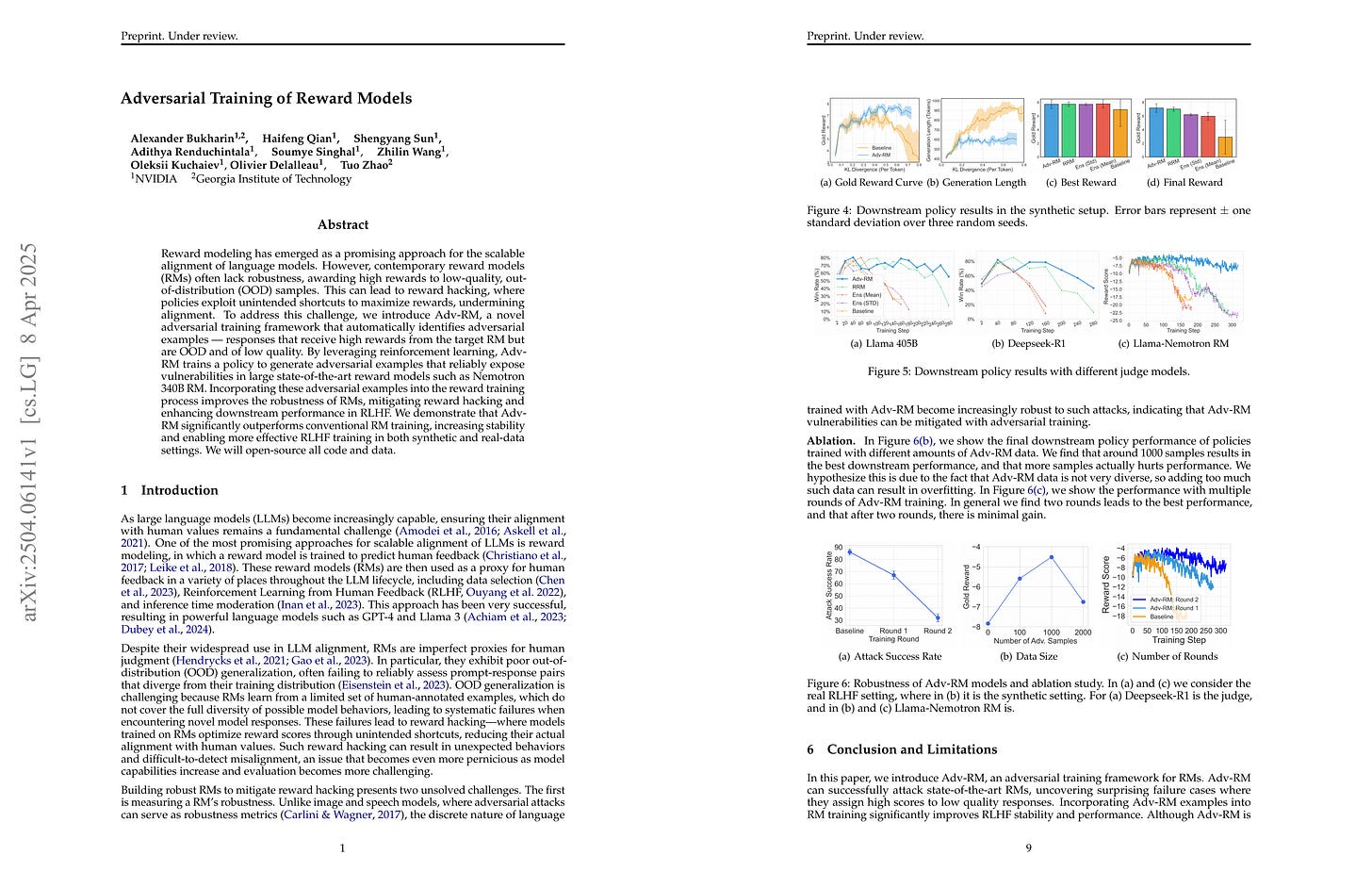

Reward modeling has emerged as a promising approach for the scalable alignment of language models. However, contemporary reward models (RMs) often lack robustness, awarding high rewards to low-quality, out-of-distribution (OOD) samples. This can lead to reward hacking, where policies exploit unintended shortcuts to maximize rewards, undermining alignment. To address this challenge, we introduce Adv-RM, a novel adversarial training framework that automatically identifies adversarial examples -- responses that receive high rewards from the target RM but are OOD and of low quality. By leveraging reinforcement learning, Adv-RM trains a policy to generate adversarial examples that reliably expose vulnerabilities in large state-of-the-art reward models such as Nemotron 340B RM. Incorporating these adversarial examples into the reward training process improves the robustness of RMs, mitigating reward hacking and enhancing downstream performance in RLHF. We demonstrate that Adv-RM significantly outperforms conventional RM training, increasing stability and enabling more effective RLHF training in both synthetic and real-data settings.

Reward Model Ensemble로 추정한 Uncertainty에 대해 Adversarial Attack을 통해 Adversarial 샘플을 생성하는 모델을 학습. 그리고 이를 통해 Reward Model에 대한 Adversarial Training.

Training model to generate adversarial samples through adversarial attacks on the uncertainty estimated by reward model ensembles. These samples are then used for adversarial training of the reward model.

#adversarial-training #reward-model

Right Question is Already Half the Answer: Fully Unsupervised LLM Reasoning Incentivization

(Qingyang Zhang, Haitao Wu, Changqing Zhang, Peilin Zhao, Yatao Bian)

While large language models (LLMs) have demonstrated exceptional capabilities in challenging tasks such as mathematical reasoning, existing methods to enhance reasoning ability predominantly rely on supervised fine-tuning (SFT) followed by reinforcement learning (RL) on reasoning-specific data after pre-training. However, these approaches critically depend on external supervisions--such as human labelled reasoning traces, verified golden answers, or pre-trained reward models--which limits scalability and practical applicability. In this work, we propose Entropy Minimized Policy Optimization (EMPO), which makes an early attempt at fully unsupervised LLM reasoning incentivization. EMPO does not require any supervised information for incentivizing reasoning capabilities (i.e., neither verifiable reasoning traces, problems with golden answers, nor additional pre-trained reward models). By continuously minimizing the predictive entropy of LLMs on unlabeled user queries in a latent semantic space, EMPO enables purely self-supervised evolution of reasoning capabilities with strong flexibility and practicality. Our experiments demonstrate competitive performance of EMPO on both mathematical reasoning and free-form commonsense reasoning tasks. Specifically, without any supervised signals, EMPO boosts the accuracy of Qwen2.5-Math-7B Base from 30.7% to 48.1% on mathematical benchmarks and improves truthfulness accuracy of Qwen2.5-7B Instruct from 87.16% to 97.25% on TruthfulQA.

최종 응답의 Semantic Entropy를 통해 추론 RL을 할 수 있다는 연구. Semantic Entropy는 불확실성 추정과 할루시네이션 탐지 목적으로 등장했었죠. (https://arxiv.org/abs/2302.09664, https://www.nature.com/articles/s41586-024-07421-0) 이건 정말로 프리트레이닝을 믿고 던지는 느낌이군요.

This study proposes that reasoning RL can be achieved using the semantic entropy of the final answer. Semantic entropy was originally introduced for uncertainty estimation and hallucination detection (https://arxiv.org/abs/2302.09664, https://www.nature.com/articles/s41586-024-07421-0). This approach does reasoning training by relying almost entirely on pre-training.

#rl #reasoning #uncertainty

Reasoning Models Know When They're Right: Probing Hidden States for Self-Verification

(Anqi Zhang, Yulin Chen, Jane Pan, Chen Zhao, Aurojit Panda, Jinyang Li, He He)

Reasoning models have achieved remarkable performance on tasks like math and logical reasoning thanks to their ability to search during reasoning. However, they still suffer from overthinking, often performing unnecessary reasoning steps even after reaching the correct answer. This raises the question: can models evaluate the correctness of their intermediate answers during reasoning? In this work, we study whether reasoning models encode information about answer correctness through probing the model's hidden states. The resulting probe can verify intermediate answers with high accuracy and produces highly calibrated scores. Additionally, we find models' hidden states encode correctness of future answers, enabling early prediction of the correctness before the intermediate answer is fully formulated. We then use the probe as a verifier to decide whether to exit reasoning at intermediate answers during inference, reducing the number of inference tokens by 24% without compromising performance. These findings confirm that reasoning models do encode a notion of correctness yet fail to exploit it, revealing substantial untapped potential to enhance their efficiency.

Probing으로 각 추론 단계에서 추출한 중간 답변이 정답인지 아닌지를 예측할 수 있다는 결과. 이런 자기 검증 능력이 추론 RL 과정에서 학습된 것이겠죠.

The results show that probing can predict whether intermediate answers extracted at each reasoning step are correct or not. This self-verification ability is likely learned during the reasoning RL process.

#reasoning