2025년 4월 30일

The Leaderboard Illusion

(Shivalika Singh, Yiyang Nan, Alex Wang, Daniel D'Souza, Sayash Kapoor, Ahmet Üstün, Sanmi Koyejo, Yuntian Deng, Shayne Longpre, Noah Smith, Beyza Ermis, Marzieh Fadaee, Sara Hooker)

Measuring progress is fundamental to the advancement of any scientific field. As benchmarks play an increasingly central role, they also grow more susceptible to distortion. Chatbot Arena has emerged as the go-to leaderboard for ranking the most capable AI systems. Yet, in this work we identify systematic issues that have resulted in a distorted playing field. We find that undisclosed private testing practices benefit a handful of providers who are able to test multiple variants before public release and retract scores if desired. We establish that the ability of these providers to choose the best score leads to biased Arena scores due to selective disclosure of performance results. At an extreme, we identify 27 private LLM variants tested by Meta in the lead-up to the Llama-4 release. We also establish that proprietary closed models are sampled at higher rates (number of battles) and have fewer models removed from the arena than open-weight and open-source alternatives. Both these policies lead to large data access asymmetries over time. Providers like Google and OpenAI have received an estimated 19.2% and 20.4% of all data on the arena, respectively. In contrast, a combined 83 open-weight models have only received an estimated 29.7% of the total data. We show that access to Chatbot Arena data yields substantial benefits; even limited additional data can result in relative performance gains of up to 112% on the arena distribution, based on our conservative estimates. Together, these dynamics result in overfitting to Arena-specific dynamics rather than general model quality. The Arena builds on the substantial efforts of both the organizers and an open community that maintains this valuable evaluation platform. We offer actionable recommendations to reform the Chatbot Arena's evaluation framework and promote fairer, more transparent benchmarking for the field

Chatbot Arena에서 비공개로 모델을 테스트한 다음 모델을 조용히 제외시키는 풍조에 대한 비판. 3월 한 달에만 메타가 27개 모델을 비공개 평가했군요. 평가한 다음 선택적으로 모델을 제외시키는 것으로 순위를 바꾸고 수집한 데이터로 리더보드에 오버피팅 하는 것이 가능하죠.

벤치마크는 모델을 잘 만든 다음 그걸 평가하기 위한 것이어야 하죠. 벤치마크를 타겟하기 시작하면 언제나 문제가 발생합니다.

A criticism of the trend in Chatbot Arena where models are privately tested and then quietly retracted. In March alone, Meta privately evaluated 27 models. After evaluation, companies can manipulate rankings by selectively retracting models and potentially overfit to the leaderboard using the collected data.

Benchmarks should be a method to evaluate well-built models, not as targets themselves. When benchmarks become the target, it invariably leads to problems.

#benchmark

MiMo: Unlocking the Reasoning Potential of Language Model – From Pretraining to Posttraining

(Xiaomi LLM-Core Team)

We present MiMo-7B, a large language model born for reasoning tasks, with optimization across both pre-training and post-training stages. During pre-training, we enhance the data preprocessing pipeline and employ a three-stage data mixing strategy to strengthen the base model’s reasoning potential. MiMo-7B-Base is pre-trained on 25 trillion tokens, with additional MultiToken Prediction objective for enhanced performance and accelerated inference speed. During post-training, we curate a dataset of 130K verifiable mathematics and programming problems for reinforcement learning, integrating a test-difficulty–driven code-reward scheme to alleviate sparse-reward issues and employing strategic data resampling to stabilize training. Extensive evaluations show that MiMo-7B-Base possesses exceptional reasoning potential, outperforming even much larger 32B models. The final RL-tuned model, MiMo-7B-RL, achieves superior performance on mathematics, code and general reasoning tasks, surpassing the performance of OpenAI o1-mini. The model checkpoints are available at https://github.com/xiaomimimo/MiMo.

샤오미의 추론 모델. 7B 모델을 25T 학습시켰군요. 프리트레이닝 단계에서부터 생성한 추론 데이터를 추가시켰습니다.

Xiaomi's reasoning model. They pre-trained a 7B model on 25T tokens. They incorporated synthesized reasoning data from the pre-training stage.

#llm #reasoning #rl

Reinforcement Learning for Reasoning in Large Language Models with One Training Example

(Yiping Wang, Qing Yang, Zhiyuan Zeng, Liliang Ren, Lucas Liu, Baolin Peng, Hao Cheng, Xuehai He, Kuan Wang, Jianfeng Gao, Weizhu Chen, Shuohang Wang, Simon Shaolei Du, Yelong Shen)

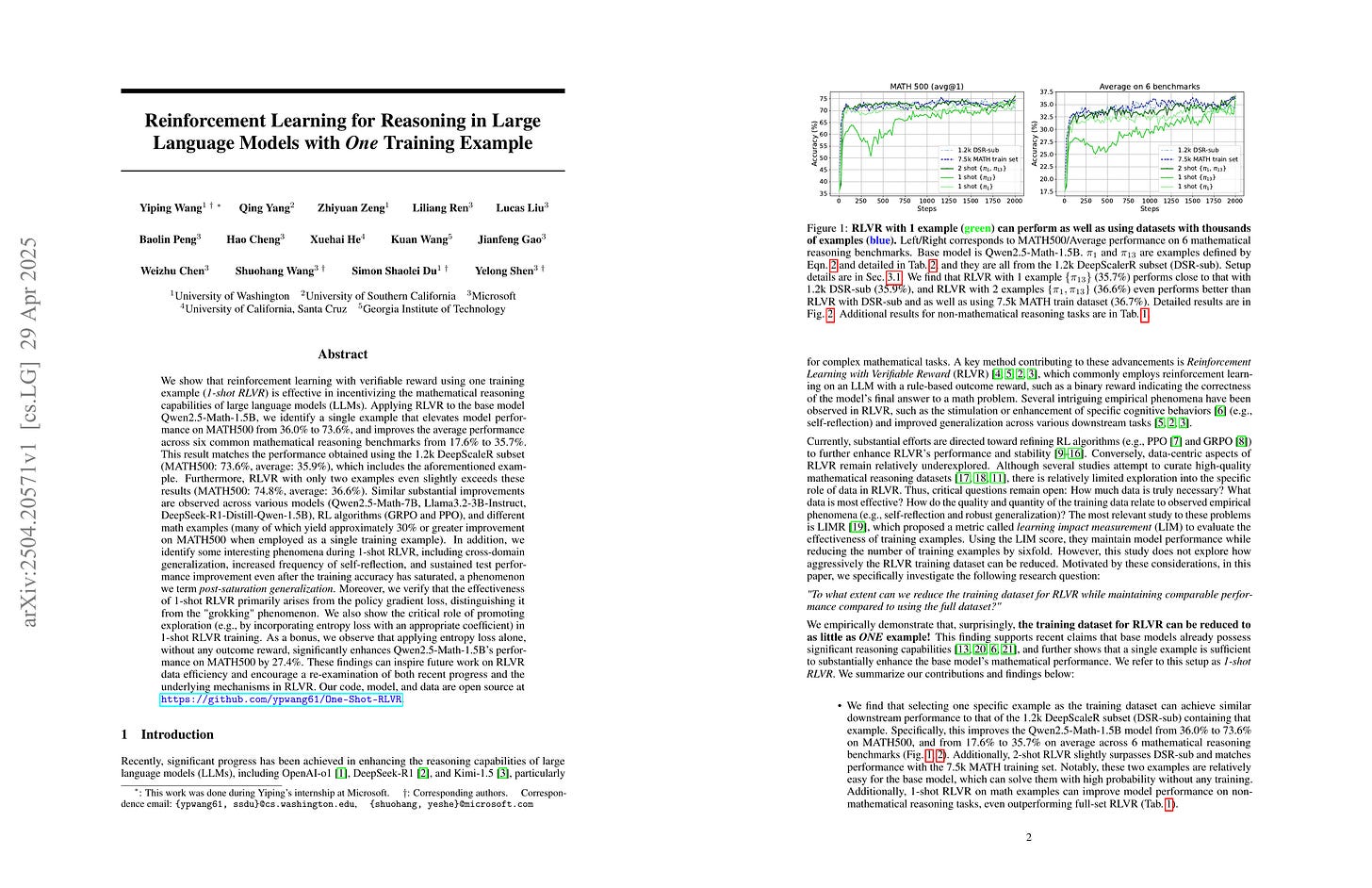

We show that reinforcement learning with verifiable reward using one training example (1-shot RLVR) is effective in incentivizing the math reasoning capabilities of large language models (LLMs). Applying RLVR to the base model Qwen2.5-Math-1.5B, we identify a single example that elevates model performance on MATH500 from 36.0% to 73.6%, and improves the average performance across six common mathematical reasoning benchmarks from 17.6% to 35.7%. This result matches the performance obtained using the 1.2k DeepScaleR subset (MATH500: 73.6%, average: 35.9%), which includes the aforementioned example. Similar substantial improvements are observed across various models (Qwen2.5-Math-7B, Llama3.2-3B-Instruct, DeepSeek-R1-Distill-Qwen-1.5B), RL algorithms (GRPO and PPO), and different math examples (many of which yield approximately 30% or greater improvement on MATH500 when employed as a single training example). In addition, we identify some interesting phenomena during 1-shot RLVR, including cross-domain generalization, increased frequency of self-reflection, and sustained test performance improvement even after the training accuracy has saturated, a phenomenon we term post-saturation generalization. Moreover, we verify that the effectiveness of 1-shot RLVR primarily arises from the policy gradient loss, distinguishing it from the "grokking" phenomenon. We also show the critical role of promoting exploration (e.g., by adding entropy loss with an appropriate coefficient) in 1-shot RLVR training. As a bonus, we observe that applying entropy loss alone, without any outcome reward, significantly enhances Qwen2.5-Math-1.5B's performance on MATH500 by 27.4%. These findings can inspire future work on RLVR data efficiency and encourage a re-examination of both recent progress and the underlying mechanisms in RLVR. Our code, model, and data are open source at https://github.com/ypwang61/One-Shot-RLVR

잘 고른 샘플 하나만으로 (여기서는 점수의 분산을 사용했습니다.) 추론 RL을 학습시킬 수 있다는 연구. 점점 더 추론은 베이스 LM에 있었던 능력을 끌어오는 것이 기본이라는 증거가 쌓이고 있네요. Hyperfitting (https://arxiv.org/abs/2412.04318) 생각도 납니다.

This study demonstrates that reasoning RL can be done using a single well-chosen sample (in this case, using the variance of scores). There is growing evidence that reasoning RL primarily draws out capabilities already present in the base language model. This also reminds me of the hyperfitting phenomenon (https://arxiv.org/abs/2412.04318).

#reasoning #rl

Towards Understanding the Nature of Attention with Low-Rank Sparse Decomposition

(Zhengfu He, Junxuan Wang, Rui Lin, Xuyang Ge, Wentao Shu, Qiong Tang, Junping Zhang, Xipeng Qiu)

We propose Low-Rank Sparse Attention (Lorsa), a sparse replacement model of Transformer attention layers to disentangle original Multi Head Self Attention (MHSA) into individually comprehensible components. Lorsa is designed to address the challenge of attention superposition to understand attention-mediated interaction between features in different token positions. We show that Lorsa heads find cleaner and finer-grained versions of previously discovered MHSA behaviors like induction heads, successor heads and attention sink behavior (i.e., heavily attending to the first token). Lorsa and Sparse Autoencoder (SAE) are both sparse dictionary learning methods applied to different Transformer components, and lead to consistent findings in many ways. For instance, we discover a comprehensive family of arithmetic-specific Lorsa heads, each corresponding to an atomic operation in Llama-3.1-8B. Automated interpretability analysis indicates that Lorsa achieves parity with SAE in interpretability while Lorsa exhibits superior circuit discovery properties, especially for features computed collectively by multiple MHSA heads. We also conduct extensive experiments on architectural design ablation, Lorsa scaling law and error analysis.

SAE처럼 Attention의 Monosemantic Feature를 추출하기 위한 방법. Value의 차원을 1로 줄이고 헤드를 늘린 다음 Top K 헤드를 선택하는 방법이군요.

A method for extracting monosemantic features from attention, similar to SAE. The approach involves reducing the dimension of the value vector to 1, increasing the number of attention heads, and then selecting the top K heads.

#mechanistic-interpretation

GaLore 2: Large-Scale LLM Pre-Training by Gradient Low-Rank Projection

(DiJia Su, Andrew Gu, Jane Xu, Yuandong Tian, Jiawei Zhao)

Large language models (LLMs) have revolutionized natural language understanding and generation but face significant memory bottlenecks during training. GaLore, Gradient Low-Rank Projection, addresses this issue by leveraging the inherent low-rank structure of weight gradients, enabling substantial memory savings without sacrificing performance. Recent works further extend GaLore from various aspects, including low-bit quantization and higher-order tensor structures. However, there are several remaining challenges for GaLore, such as the computational overhead of SVD for subspace updates and the integration with state-of-the-art training parallelization strategies (e.g., FSDP). In this paper, we present GaLore 2, an efficient and scalable GaLore framework that addresses these challenges and incorporates recent advancements. In addition, we demonstrate the scalability of GaLore 2 by pre-training Llama 7B from scratch using up to 500 billion training tokens, highlighting its potential impact on real LLM pre-training scenarios.

GaLore의 개선 버전. Randomized SVD를 썼군요.

An improved version of GaLore. They've employed randomized SVD.

#efficiency

Enhancing LLM Language Adaption through Cross-lingual In-Context Pre-training

(Linjuan Wu, Haoran Wei, Huan Lin, Tianhao Li, Baosong Yang, Weiming Lu)

Large language models (LLMs) exhibit remarkable multilingual capabilities despite English-dominated pre-training, attributed to cross-lingual mechanisms during pre-training. Existing methods for enhancing cross-lingual transfer remain constrained by parallel resources, suffering from limited linguistic and domain coverage. We propose Cross-lingual In-context Pre-training (CrossIC-PT), a simple and scalable approach that enhances cross-lingual transfer by leveraging semantically related bilingual texts via simple next-word prediction. We construct CrossIC-PT samples by interleaving semantic-related bilingual Wikipedia documents into a single context window. To access window size constraints, we implement a systematic segmentation policy to split long bilingual document pairs into chunks while adjusting the sliding window mechanism to preserve contextual coherence. We further extend data availability through a semantic retrieval framework to construct CrossIC-PT samples from web-crawled corpus. Experimental results demonstrate that CrossIC-PT improves multilingual performance on three models (Llama-3.1-8B, Qwen2.5-7B, and Qwen2.5-1.5B) across six target languages, yielding performance gains of 3.79%, 3.99%, and 1.95%, respectively, with additional improvements after data augmentation.

위키피디아의 다국어 문서들을 연결해서 다국어 프리트레이닝 데이터를 구축. 위키피디아의 경우에는 거의 번역 관계인 경우가 많긴 할 텐데, 번역을 넘어 의미적으로 연관된 다국어 문서들을 결합하면 어떻게 될지 궁금하네요.

Constructing multilingual pretraining data by connecting multilingual documents from Wikipedia. While many Wikipedia documents are translations of each other, I wonder what results we might get if we combine semantically related multilingual documents beyond mere translations.

#multilingual #pretraining

RAGEN: Understanding Self-Evolution in LLM Agents via Multi-Turn Reinforcement Learning

(Zihan Wang, Kangrui Wang, Qineng Wang, Pingyue Zhang, Linjie Li, Zhengyuan Yang, Kefan Yu, Minh Nhat Nguyen, Licheng Liu, Eli Gottlieb, Monica Lam, Yiping Lu, Kyunghyun Cho, Jiajun Wu, Li Fei-Fei, Lijuan Wang, Yejin Choi, Manling Li)

Training large language models (LLMs) as interactive agents presents unique challenges including long-horizon decision making and interacting with stochastic environment feedback. While reinforcement learning (RL) has enabled progress in static tasks, multi-turn agent RL training remains underexplored. We propose StarPO (State-Thinking-Actions-Reward Policy Optimization), a general framework for trajectory-level agent RL, and introduce RAGEN, a modular system for training and evaluating LLM agents. Our study on three stylized environments reveals three core findings. First, our agent RL training shows a recurring mode of Echo Trap where reward variance cliffs and gradient spikes; we address this with StarPO-S, a stabilized variant with trajectory filtering, critic incorporation, and decoupled clipping. Second, we find the shaping of RL rollouts would benefit from diverse initial states, medium interaction granularity and more frequent sampling. Third, we show that without fine-grained, reasoning-aware reward signals, agent reasoning hardly emerge through multi-turn RL and they may show shallow strategies or hallucinated thoughts. Code and environments are available at https://github.com/RAGEN-AI/RAGEN.

LLM 에이전트 구축을 위한 멀티턴 RL 세팅. 기존 방법을 그대로 사용하면 멀티턴에서 Collapse가 일어나는 경향이 있었다고 하네요.

Multi-turn RL setup for building LLM agents. They report that there is a tendency for collapse in multi-turn scenarios when directly applying single-turn RL methods.

#rl #agent #llm