2025년 4월 29일

Qwen3: Think Deeper, Act Faster

(Qwen Team)

Qwen 3. 36T 학습했군요. 15T 정도가 SotA의 기준이었는데 이제 30T 이상으로 넘어가는 느낌이네요. PDF에서 텍스트를 추출했다는 것도 이제 공식적으로 이야기하고 있군요. VL 모델들의 최고의 활용처 중 하나가 이 텍스트 추출이 아닌가 싶습니다.

QK Norm을 썼네요. 이쪽 인기가 은근히 오르네요. MoE는 비교적 평이한 구조군요

Qwen 3 was trained on 36T tokens. Previously, 15T was considered the standard for state-of-the-art models, but now it seems we're moving beyond 30T. They're now officially stating that they extracted text from PDFs. I think one of the best applications for vision-language models is this text extraction capability.

They used QK norm, which seems to be gaining popularity. The MoE structure they used appears to be relatively conventional.

#llm

APE-Bench I: Towards File-level Automated Proof Engineering of Formal Math Libraries

(Huajian Xin, Luming Li, Xiaoran Jin, Jacques Fleuriot, Wenda Li)

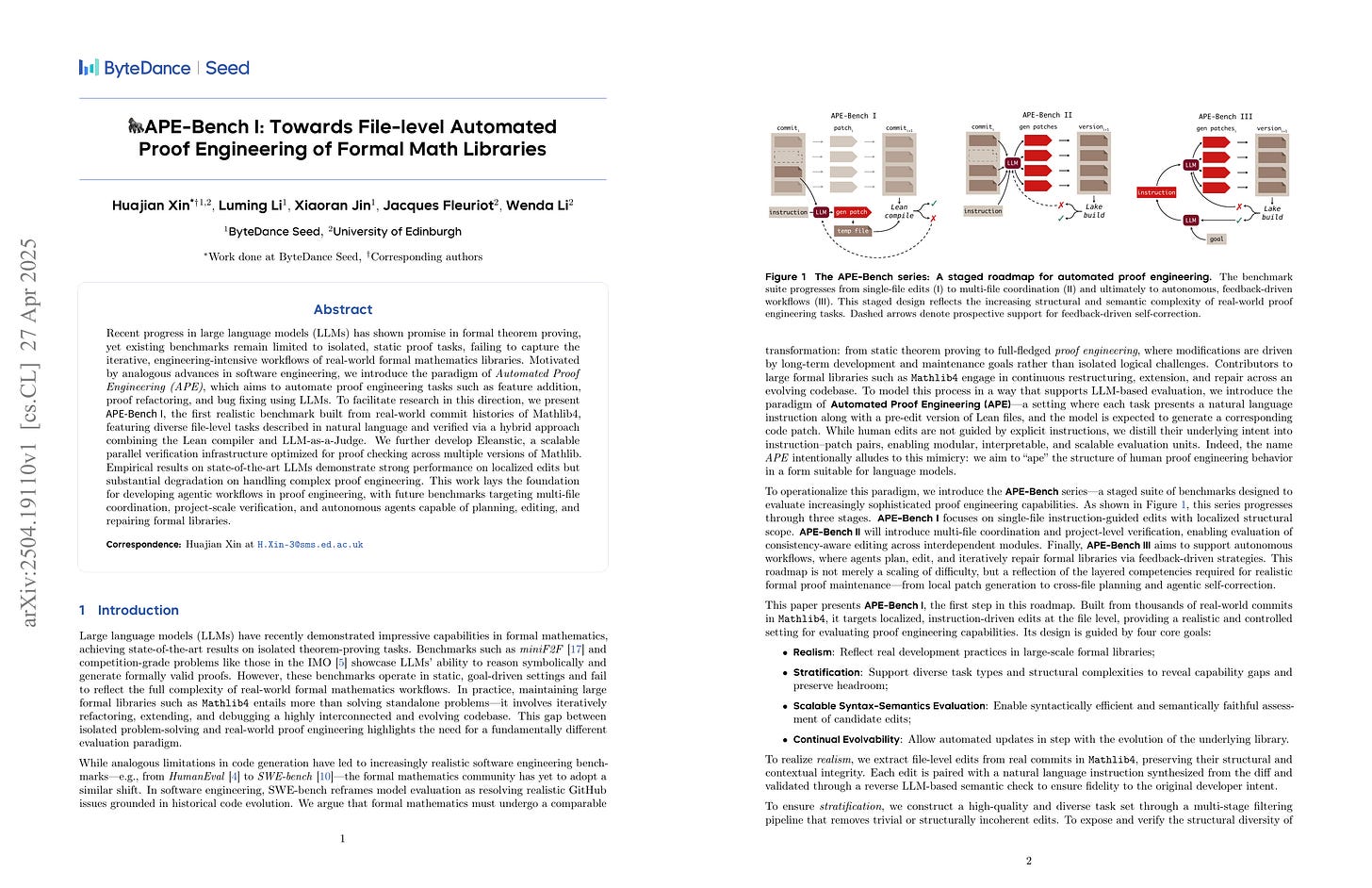

Recent progress in large language models (LLMs) has shown promise in formal theorem proving, yet existing benchmarks remain limited to isolated, static proof tasks, failing to capture the iterative, engineering-intensive workflows of real-world formal mathematics libraries. Motivated by analogous advances in software engineering, we introduce the paradigm of Automated Proof Engineering (APE), which aims to automate proof engineering tasks such as feature addition, proof refactoring, and bug fixing using LLMs. To facilitate research in this direction, we present APE-Bench I, the first realistic benchmark built from real-world commit histories of Mathlib4, featuring diverse file-level tasks described in natural language and verified via a hybrid approach combining the Lean compiler and LLM-as-a-Judge. We further develop Eleanstic, a scalable parallel verification infrastructure optimized for proof checking across multiple versions of Mathlib. Empirical results on state-of-the-art LLMs demonstrate strong performance on localized edits but substantial degradation on handling complex proof engineering. This work lays the foundation for developing agentic workflows in proof engineering, with future benchmarks targeting multi-file coordination, project-scale verification, and autonomous agents capable of planning, editing, and repairing formal libraries.

Proof Assistant로 증명을 하는 것을 넘어 라이브러리 단위에서 작업하는 능력을 평가하는 벤치마크군요.

This benchmark evaluates the ability to work at the library level, going beyond proving theorems using proof assistants.

#math #benchmark

FlashOverlap: A Lightweight Design for Efficiently Overlapping Communication and Computation

(Ke Hong, Xiuhong Li, Minxu Liu, Qiuli Mao, Tianqi Wu, Zixiao Huang, Lufang Chen, Zhong Wang, Yichong Zhang, Zhenhua Zhu, Guohao Dai, Yu Wang)

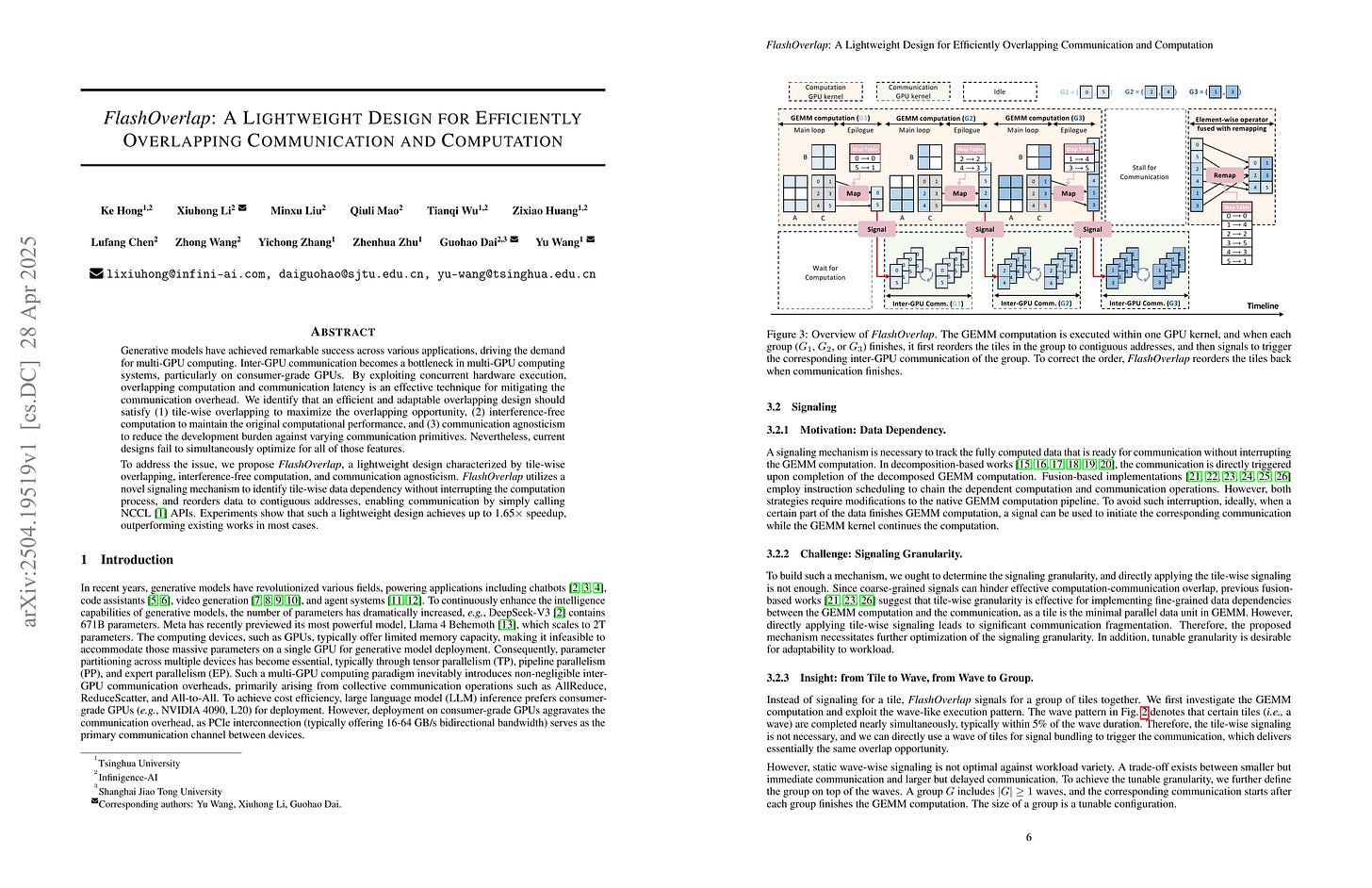

Generative models have achieved remarkable success across various applications, driving the demand for multi-GPU computing. Inter-GPU communication becomes a bottleneck in multi-GPU computing systems, particularly on consumer-grade GPUs. By exploiting concurrent hardware execution, overlapping computation and communication latency is an effective technique for mitigating the communication overhead. We identify that an efficient and adaptable overlapping design should satisfy (1) tile-wise overlapping to maximize the overlapping opportunity, (2) interference-free computation to maintain the original computational performance, and (3) communication agnosticism to reduce the development burden against varying communication primitives. Nevertheless, current designs fail to simultaneously optimize for all of those features. To address the issue, we propose FlashOverlap, a lightweight design characterized by tile-wise overlapping, interference-free computation, and communication agnosticism. FlashOverlap utilizes a novel signaling mechanism to identify tile-wise data dependency without interrupting the computation process, and reorders data to contiguous addresses, enabling communication by simply calling NCCL APIs. Experiments show that such a lightweight design achieves up to 1.65x speedup, outperforming existing works in most cases.

연산과 통신을 중복시키기 위한 커널을 설계. TP나 EP 시나리오에 부합하겠죠.

A kernel design for overlapping computation and communication. This would be well-suited for TP or EP scenarios.

#efficiency