2025년 3월 28일

4o Image Generation

Autoregressive Image Generation를 OpenAI가 어떻게 태클했는지에 대한 분석을 시도해봤습니다.

관찰

클라이언트에서 나타나는 블러 효과와는 별개로 실제로 전송되는 이미지는 전형적인 뉴럴넷의 Feature를 시각화하면 발생하는 것과 비슷한 패턴의 이미지들이다. 이러한 이미지들 몇 장이 jpeg 포맷으로 전송되고 최종 생성 결과가 png 파일로 전송된다.

타일/패치가 두드러지게 나타나는데 정확하게 8x8 픽셀이다.

생성은 클라이언트에서 나타나는 것과 같이 위에서 아래로 진행된다.

고해상도 이미지가 출력되는 스캔라인의 위쪽 부분과 아래쪽 부분은 생성 과정에서 모두 변화한다. 특히 스캔라인의 아래쪽 생성이 아직 진행되지 않은 부분은 상당히 크게 변화한다.

최종 생성 결과와 최종 생성 직전 이미지 사이에는 텍스처 등에서 꽤 큰 차이가 있다. 해상도의 차이라기보단 컨텐츠 자체가 다르다.

이미지를 입력으로 주고 그대로 생성하게 했을 때 생성 결과물이 입력과 꽤 큰 차이가 있다.

Representation에 대한 Autoregressive Prior, 그리고 Pixel Diffusion Decoder의 조합일 가능성이 있다. (https://x.com/ajabri/status/1904599427366739975)

생각

이미지 입력과 생성 사이의 갭이 있으니 입력을 위한 인코더/토크나이저와 생성을 위한 인코더/토크나이저가 분리되어 있을 가능성이 높다. Janus 같이. (https://arxiv.org/abs/2410.13848, https://arxiv.org/abs/2503.13436)

Autoregressive Prior와 Diffusion Decoder는 OpenAI의 unCLIP의 아이디어이도 하고 (https://arxiv.org/abs/2204.06125) 그 이후로도 꽤 나온 접근이다.

8x8 패치 패턴이 나타나는 걸 고려하면 어딘가에 8x8 패치에 대해 작동하는 모듈이 있다.

스캔라인이 지나가면서 Feature가 이미지로 전환되는 패턴은 왜 나타나는 것일까? VAR처럼 서로 다른 Scale에 대한 Autoregression이 포함되어 있을까? (https://arxiv.org/abs/2404.02905)

Random order 같은 트릭 (https://arxiv.org/abs/2411.00776, https://arxiv.org/abs/2412.01827) 대신 Left-to-Right, Top-to-Bottom이라는 래스터 스캔 순서를 그대로 따라가는 것 같다. 사실 그렇게 해야만 Autoregression에 의한 텍트 렌더링 성능 향상이 나타날 것 같기도 하다.

나타나지 않았던 텍스처가 최종 이미지에서 드러나는 것을 보면 최종 단계에 후처리를 하는 모듈이 있지 않을까 싶다. 어쩌면 Diffusion이 최종 디테일을 채우는 작업을 하는지도.

가능성

Discrete Token vs Continuous Token. Autoregressive 생성을 한다는 것은 분명한데 어떤 형태의 토큰을 사용하는지 불명. VQ 토큰이 Autoregressive 학습에 가장 자연스럽긴 하지만 VQ 토큰으로 생성될 수 있는 품질인지 의문. 다만 VQ 토큰을 예측한 다음 Pixel Diffusion이 위에 올라갈 가능성은 있을 듯.

Continuous Token이라면 어떤 Head로 예측하는 것인지도 문제. MSE 같은 것 외에 Mixture Density나 (https://arxiv.org/abs/2312.02116) Diffusion Head도 생각할 수 있다. (https://arxiv.org/abs/2406.11838)

Residual Quantization이나 Semantic Tokenizer를 사용했을 가능성은? 어쨌든 완전히 의미적이라기보단 위치적인 요소가 강한 토크나이저일 것 같긴 하다.

Multi Scale Prediction이 사용되고 있는가? 스캔라인 아래의 영역이 점진적으로 해상도가 높아지는 패턴이 나타난다. 이걸 그대로 생각하면 해상도가 높아지는 과정과 최종 해상도의 결과물이 생성되는 과정이 병렬적으로 일어난다고 할 수 있다. 그런데 Multi Scale Prediction은 보통 이전 Scale을 생성한 다음 그 다음 Scale을 생성하는 형태라서 조금 다르다. 결과물이 생성되는 과정은 순전히 중간 결과를 보여주기 위한 목적일지도.

어떤 Scale에서 Autoregressive 생성을 하고 있는가? 최종 출력 결과의 크기는 1024px 이상인데 1024x1024px라고 생각하면 패치 크기 8에서 16384 토큰이 필요. 생성 시간이 충분히 느리긴 하지만 그보다는 작은 해상도에서 생성되고 있을 가능성이 높다.

I tried to decode how OpenAI tackled the autoregressive image generation problem.

Observations

Unlike client-side effect of blurred images, the actual transferred images contain patterns similar to visualization results of features in neural nets. A few images of this kind are transferred in jpeg format, and the final generation result is transferred as a png image.

There are gridding effects, and they are precisely 8x8 pixels.

Generation progresses from top to bottom as shown in the client.

Both upper part of the scanline where high-resolution image is shown and the part below of it changes during generation processes. Especially bottom part that hasn't been rendered yet changes significantly.

The final image and the penultimate in-generation image have quite large differences, especially in textures. It seems that it's not just a resolution difference, but the content itself is different.

When given image inputs and instructed to reconstruct them as-is, the generation result is quite different from the input.

An OpenAI researcher suggest that it is maybe a combiation of autoregressive prior on representation and pixel diffusion decoder (https://x.com/ajabri/status/1904599427366739975).

Thoughts

As there is a gap between image inputs and generation, it is plausible that there are distinct encoder/tokenizers for inputs and generations, like Janus (https://arxiv.org/abs/2410.13848, https://arxiv.org/abs/2503.13436).

The combination of autoregressive prior and diffusion decoder was the idea of unCLIP from OpenAI (https://arxiv.org/abs/2204.06125) and similar approaches came out after it.

The 8x8 gridding effect suggests that a module working on 8x8 patches exists somewhere in the pipeline.

Why does the pattern of transforming features into images along with passing scanlines exists? Is there autoregression between scales like VAR exists? (https://arxiv.org/abs/2404.02905)

Instead of tricks like random ordering (https://arxiv.org/abs/2411.00776, https://arxiv.org/abs/2412.01827), it seems to follow raster scan order of left-to-right and top-to-bottom. I believe only in this way it is possible to get improvement on text rendering due to autoregression.

As new textures appears that didn't exist in the penultimate image, I think there is a postprocessing or decoding modules for final steps. Maybe diffusion handles the work of filling in final details.

Possibilities

Discrete vs Continuous token. It's evident that it performs autoregressive generation, but it's unknown to what type of tokens are used. VQ tokens are a natural choice for autoregressive training but it's not clear if the output quality can be achieved with VQ tokens. However, it's possible to have pixel diffusion on top of VQ tokens.

If they used continuous tokens, then what kind of heads are they using? Mixture density (https://arxiv.org/abs/2312.02116) or diffusion heads (https://arxiv.org/abs/2406.11838) can be used, beside of MSE.

Is it possible they have used residual quantizations or semantic tokenizers? I think the overall process is not done in completely semantic way, and the tokenizer has localized characteristics.

Are they using multi-scale prediction? There is a pattern where the resolution of the area below the scanline gradually increases. If we accept this at face value, then it means final outcome generation and resolution increases happen in parallel. But normally multi-scale prediction is done by generating the next scale after generating previous scales. Maybe the intermediate outcomes only meant to show the generation processes.

At what scale is autoregressive generation is done? The resolution of the final outcome is over 1024px, which would require 16384 tokens for 1024x1024px images with a patch size of 8. Although the overall generation process is slow, I think generation likely happens at much lower resolutions.

Understanding R1-Zero-Like Training: A Critical Perspective

(Zichen Liu, Changyu Chen, Wenjun Li, Penghui Qi, Tianyu Pang, Chao Du, Wee Sun Lee, Min Lin)

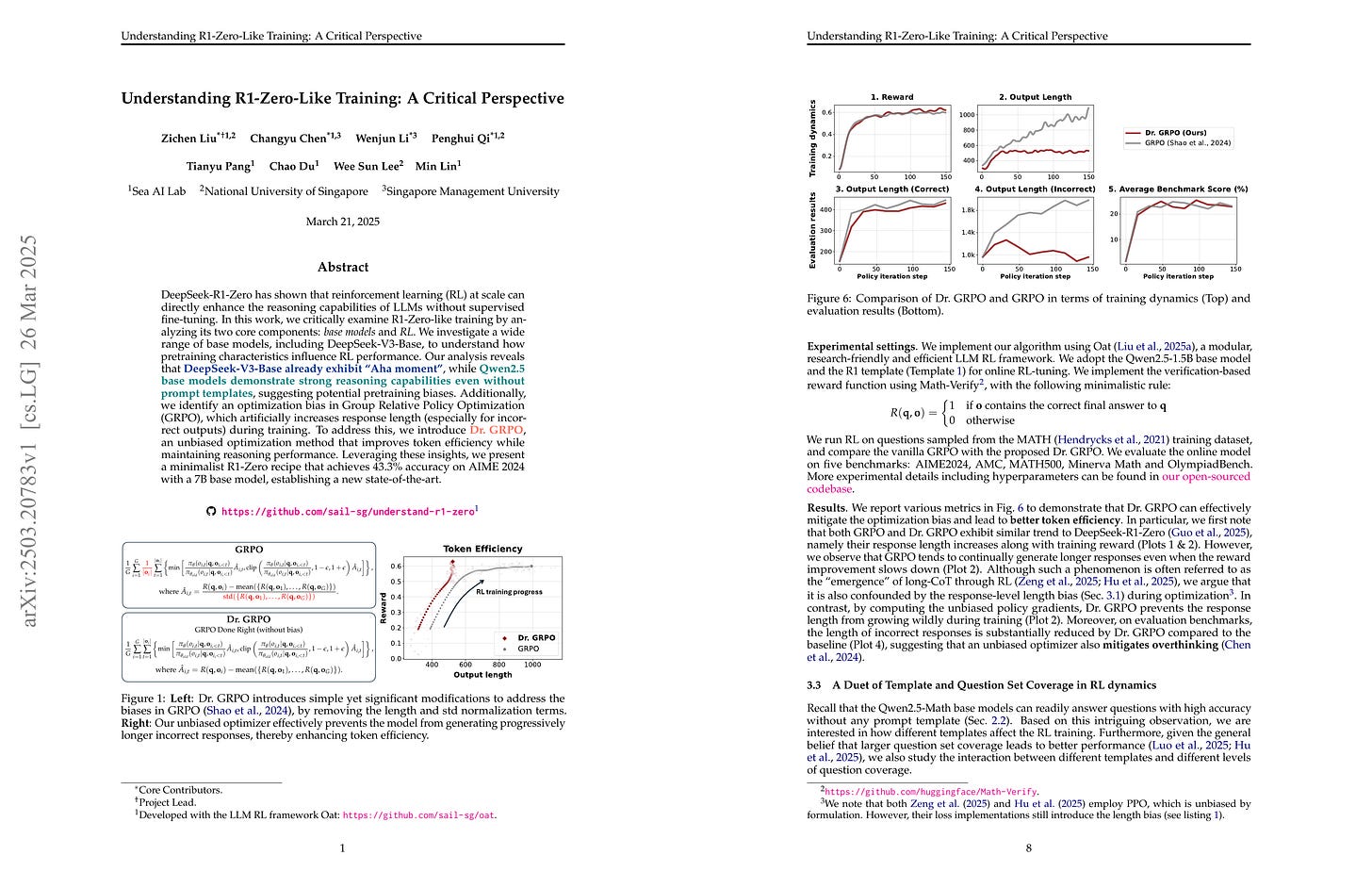

DeepSeek-R1-Zero has shown that reinforcement learning (RL) at scale can directly enhance the reasoning capabilities of LLMs without supervised fine-tuning. In this work, we critically examine R1-Zero-like training by analyzing its two core components: base models and RL. We investigate a wide range of base models, including DeepSeek-V3-Base, to understand how pretraining characteristics influence RL performance. Our analysis reveals that DeepSeek-V3-Base already exhibit ''Aha moment'', while Qwen2.5 base models demonstrate strong reasoning capabilities even without prompt templates, suggesting potential pretraining biases. Additionally, we identify an optimization bias in Group Relative Policy Optimization (GRPO), which artificially increases response length (especially for incorrect outputs) during training. To address this, we introduce Dr. GRPO, an unbiased optimization method that improves token efficiency while maintaining reasoning performance. Leveraging these insights, we present a minimalist R1-Zero recipe that achieves 43.3% accuracy on AIME 2024 with a 7B base model, establishing a new state-of-the-art. Our code is available at https://github.com/sail-sg/understand-r1-zero.

GRPO에서 Length와 Reward Normalization을 빼버렸네요. Reward Normalization은 GRPO에서 특징적인 부분이었지만 Length로 평균하는 것은 RLHF에서도 대부분 채택한 방식이었죠. DAPO에서도 비슷한 지적을 했었죠. (https://arxiv.org/abs/2503.14476)

이렇게 수정하니 오답에서도 응답이 길어지는 현상이 제거됐네요. 이 현상에 대한 연구가 여러 건 나왔는데 말이죠.

The authors removed length and reward normalization from GRPO. While reward normalization was a characteristic feature of GRPO, length averaging has been widely adopted in most RLHF implementations. DAPO also pointed out a similar issue (https://arxiv.org/abs/2503.14476).

With this modification, the tendency for responses to increase in length even for incorrect answers has been eliminated. There have been several studies targeted this phenomenon, though.

#rl #reasoning

Every Sample Matters: Leveraging Mixture-of-Experts and High-Quality Data for Efficient and Accurate Code LLM

(Codefuse, Ling Team: Wenting Cai, Yuchen Cao, Chaoyu Chen, Chen Chen, Siba Chen, Qing Cui, Peng Di, Junpeng Fang, Zi Gong, Ting Guo, Zhengyu He, Yang Huang, Cong Li, Jianguo Li, Zheng Li, Shijie Lian, BingChang Liu, Songshan Luo, Shuo Mao, Min Shen, Jian Wu, Jiaolong Yang, Wenjie Yang, Tong Ye, Hang Yu, Wei Zhang, Zhenduo Zhang, Hailin Zhao, Xunjin Zheng, Jun Zhou)

Recent advancements in code large language models (LLMs) have demonstrated remarkable capabilities in code generation and understanding. It is still challenging to build a code LLM with comprehensive performance yet ultimate efficiency. Many attempts have been released in the open source community to break the trade-off between performance and efficiency, such as the Qwen Coder series and the DeepSeek Coder series. This paper introduces yet another attempt in this area, namely Ling-Coder-Lite. We leverage the efficient Mixture-of-Experts (MoE) architecture along with a set of high-quality data curation methods (especially those based on program analytics) to build an efficient yet powerful code LLM. Ling-Coder-Lite exhibits on-par performance on 12 representative coding benchmarks compared to state-of-the-art models of similar size, such as Qwen2.5-Coder-7B and DeepSeek-Coder-V2-Lite, while offering competitive latency and throughput. In practice, we achieve a 50% reduction in deployment resources compared to the similar-sized dense model without performance loss. To facilitate further research and development in this area, we open-source our models as well as a substantial portion of high-quality data for the annealing and post-training stages. The models and data can be accessed at~\url{https://huggingface.co/inclusionAI/Ling-Coder-lite}.

MoE 코드 모델. 데이터 전처리에 Tree-sitter를 썼군요. 반갑네요.

MoE code model. They used Tree-sitter for data preprocessing. That's a glad choice.

#code #llm #moe

SimpleRL-Zoo: Investigating and Taming Zero Reinforcement Learning for Open Base Models in the Wild

(Weihao Zeng, Yuzhen Huang, Qian Liu, Wei Liu, Keqing He, Zejun Ma, Junxian He)

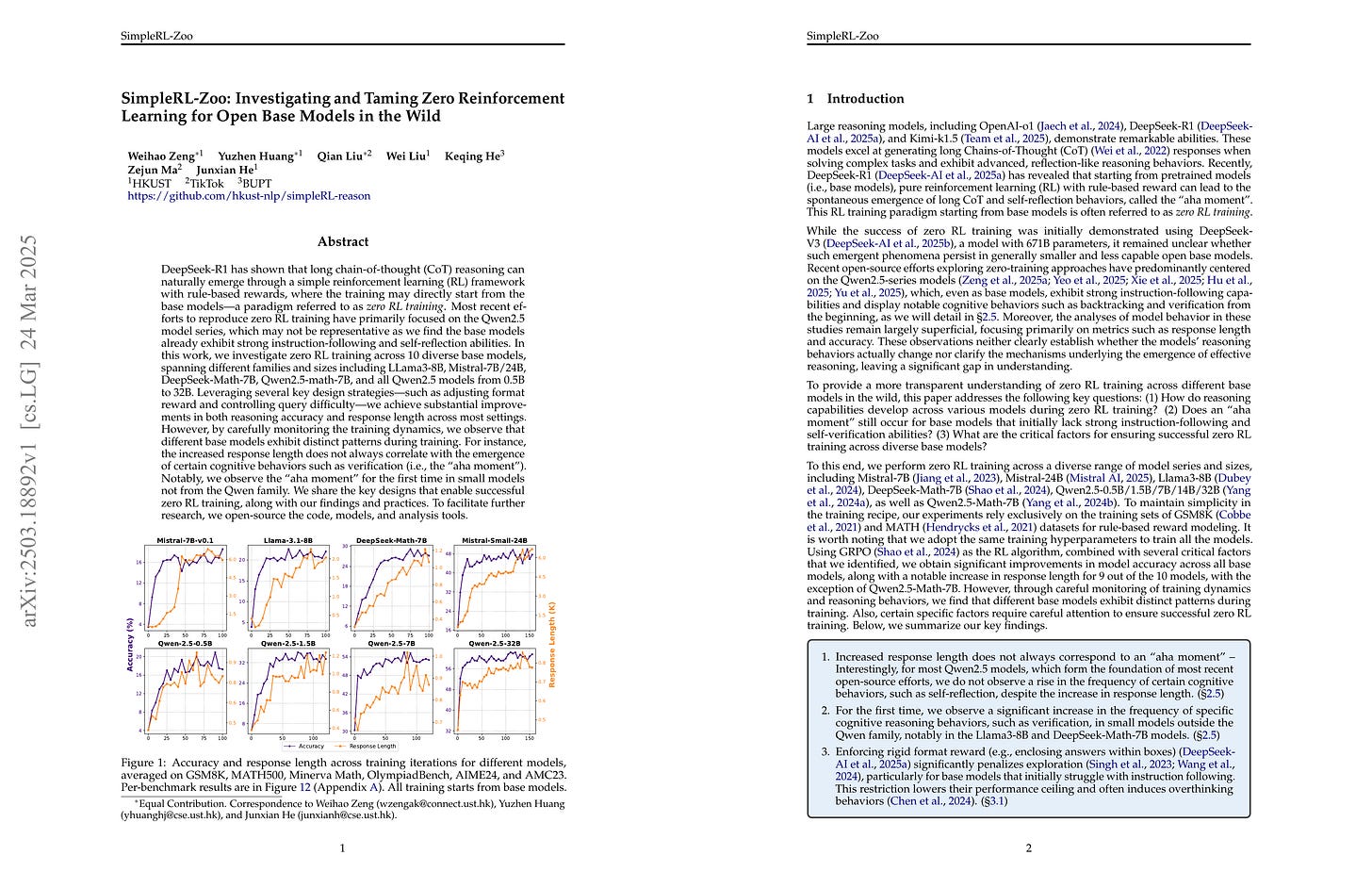

DeepSeek-R1 has shown that long chain-of-thought (CoT) reasoning can naturally emerge through a simple reinforcement learning (RL) framework with rule-based rewards, where the training may directly start from the base models-a paradigm referred to as zero RL training. Most recent efforts to reproduce zero RL training have primarily focused on the Qwen2.5 model series, which may not be representative as we find the base models already exhibit strong instruction-following and self-reflection abilities. In this work, we investigate zero RL training across 10 diverse base models, spanning different families and sizes including LLama3-8B, Mistral-7B/24B, DeepSeek-Math-7B, Qwen2.5-math-7B, and all Qwen2.5 models from 0.5B to 32B. Leveraging several key design strategies-such as adjusting format reward and controlling query difficulty-we achieve substantial improvements in both reasoning accuracy and response length across most settings. However, by carefully monitoring the training dynamics, we observe that different base models exhibit distinct patterns during training. For instance, the increased response length does not always correlate with the emergence of certain cognitive behaviors such as verification (i.e., the "aha moment"). Notably, we observe the "aha moment" for the first time in small models not from the Qwen family. We share the key designs that enable successful zero RL training, along with our findings and practices. To facilitate further research, we open-source the code, models, and analysis tools.

Qwen 외의 모델을 사용한 SFT 없는 추론 RL 실험. 포맷 Reward를 지나치게 강제하면 학습에 좋지 않다, 모델에 맞는 문제 난이도가 있다, 그리고 SFT에 지나치게 의존하면 고점이 낮아진다 등의 결론이네요.

Reasoning RL experiments without SFT using base models other than Qwen. The study concludes that overly strict format rewards are detrimental to learning, there's an appropriate difficulty level for each model, and excessive reliance on SFT lowers the performance ceiling.

#rl #reasoning

Reasoning to Learn from Latent Thoughts

(Yangjun Ruan, Neil Band, Chris J. Maddison, Tatsunori Hashimoto)

Compute scaling for language model (LM) pretraining has outpaced the growth of human-written texts, leading to concerns that data will become the bottleneck to LM scaling. To continue scaling pretraining in this data-constrained regime, we propose that explicitly modeling and inferring the latent thoughts that underlie the text generation process can significantly improve pretraining data efficiency. Intuitively, our approach views web text as the compressed final outcome of a verbose human thought process and that the latent thoughts contain important contextual knowledge and reasoning steps that are critical to data-efficient learning. We empirically demonstrate the effectiveness of our approach through data-constrained continued pretraining for math. We first show that synthetic data approaches to inferring latent thoughts significantly improve data efficiency, outperforming training on the same amount of raw data (5.7% →→ 25.4% on MATH). Furthermore, we demonstrate latent thought inference without a strong teacher, where an LM bootstraps its own performance by using an EM algorithm to iteratively improve the capability of the trained LM and the quality of thought-augmented pretraining data. We show that a 1B LM can bootstrap its performance across at least three iterations and significantly outperform baselines trained on raw data, with increasing gains from additional inference compute when performing the E-step. The gains from inference scaling and EM iterations suggest new opportunities for scaling data-constrained pretraining.

일반적인 텍스트에 대해 추론 텍스트를 생성해 데이터를 확장할 수 있는가 하는 연구. 프리트레이닝 데이터의 규모의 한계를 극복하기 위한 중요한 방향이겠죠. 더 중요한 것은 모델이 주어진 데이터를 통해 데이터의 증강 과정을 Bootstrapping 할 수 있는가일 텐데 Importance Sampling을 통해 그걸 시도해봤네요.

This research explores the possibility of expanding data by generating reasoning texts for general text inputs. It represents an important direction for overcoming the limitations of pretraining data scale. The more crucial question is whether a model can bootstrap its own data augmentation process using the given data. The authors attempted this using importance sampling.

#synthetic-data #reasoning #pretraining

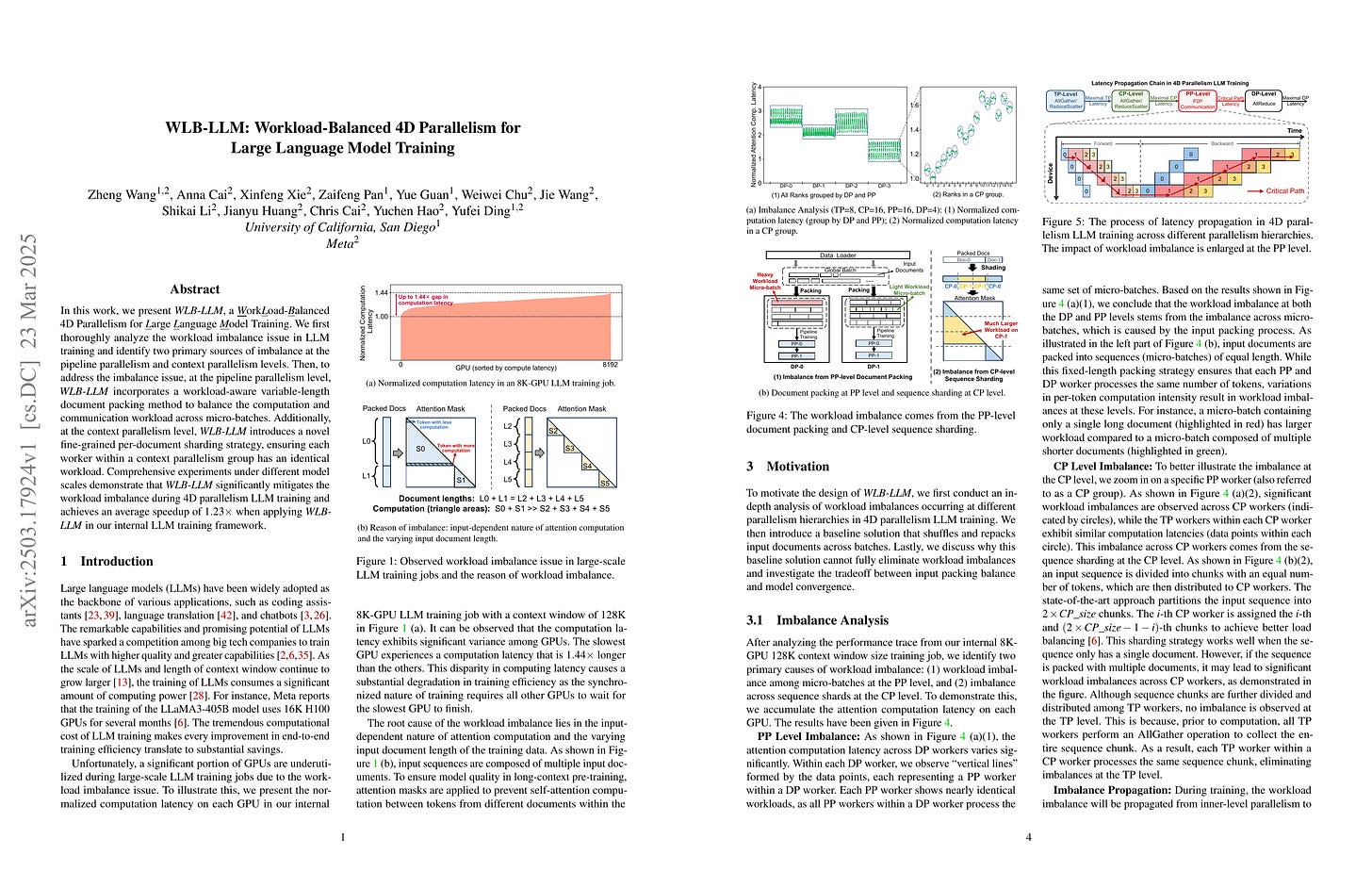

WLB-LLM: Workload-Balanced 4D Parallelism for Large Language Model Training

(Zheng Wang, Anna Cai, Xinfeng Xie, Zaifeng Pan, Yue Guan, Weiwei Chu, Jie Wang, Shikai Li, Jianyu Huang, Chris Cai, Yuchen Hao, Yufei Ding)

In this work, we present WLB-LLM, a workLoad-balanced 4D parallelism for large language model training. We first thoroughly analyze the workload imbalance issue in LLM training and identify two primary sources of imbalance at the pipeline parallelism and context parallelism levels. Then, to address the imbalance issue, at the pipeline parallelism level, WLB-LLM incorporates a workload-aware variable-length document packing method to balance the computation and communication workload across micro-batches. Additionally, at the context parallelism level, WLB-LLM introduces a novel fine-grained per-document sharding strategy, ensuring each worker within a context parallelism group has an identical workload. Comprehensive experiments under different model scales demonstrate that WLB-LLM significantly mitigates the workload imbalance during 4D parallelism LLM training and achieves an average speedup of 1.23x when applying WLB-LLM in our internal LLM training framework.

배치 내에 서로 다른 Context Length를 갖는 시퀀스들이 섞임으로 인해 발생하는 비효율성을 해소하려는 시도가 많이 나오네요. (https://arxiv.org/abs/2502.21231, https://arxiv.org/abs/2503.07680) 이쪽은 이전 시도들과 달리 짧은 시퀀스들을 잘 이어붙여서 해결해보자는 아이디어입니다.

Many attempts have been made to address the inefficiencies that arise when sequences with varying context lengths are packed into a single batch (https://arxiv.org/abs/2502.21231, https://arxiv.org/abs/2503.07680). Unlike previous approaches, this research proposes a solution by concatenating shorter sequences.

#long-context #efficiency #parallelism