SkyLadder: Better and Faster Pretraining via Context Window Scheduling

(Tongyao Zhu, Qian Liu, Haonan Wang, Shiqi Chen, Xiangming Gu, Tianyu Pang, Min-Yen Kan)

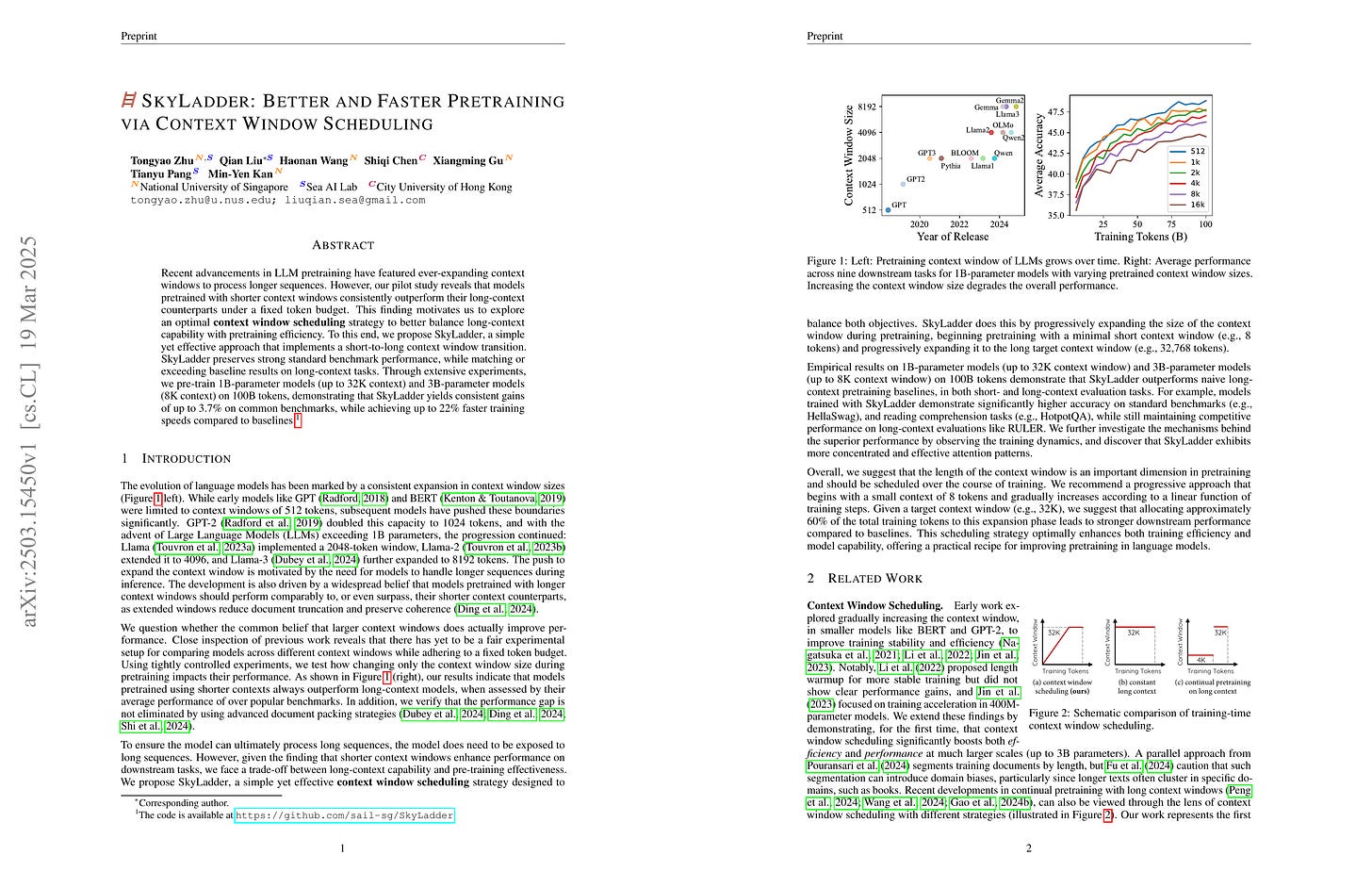

Recent advancements in LLM pretraining have featured ever-expanding context windows to process longer sequences. However, our pilot study reveals that models pretrained with shorter context windows consistently outperform their long-context counterparts under a fixed token budget. This finding motivates us to explore an optimal context window scheduling strategy to better balance long-context capability with pretraining efficiency. To this end, we propose SkyLadder, a simple yet effective approach that implements a short-to-long context window transition. SkyLadder preserves strong standard benchmark performance, while matching or exceeding baseline results on long context tasks. Through extensive experiments, we pre-train 1B-parameter models (up to 32K context) and 3B-parameter models (8K context) on 100B tokens, demonstrating that SkyLadder yields consistent gains of up to 3.7% on common benchmarks, while achieving up to 22% faster training speeds compared to baselines. The code is at https://github.com/sail-sg/SkyLadder.

The idea is to grow the context window gradually during pretraining, building on the observation that training is faster with a smaller context window. This is very much an idea from the Shortformer era (https://arxiv.org/abs/2012.15832). Ramping up the batch size over the course of training is a common trick these days, so this approach can be thought of along the same lines.
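To make the short-to-long idea concrete, here is a minimal sketch of a context window schedule. It is not the schedule from the paper: the linear ramp, the function names `context_window_at` and `sequences_per_batch`, and the parameters `start_len`, `ramp_fraction`, and `multiple_of` are all assumptions chosen for illustration. It also assumes a fixed number of tokens per batch, so a shorter window simply means more (shorter) sequences per step.

```python
def context_window_at(step: int, total_steps: int, start_len: int = 32,
                      final_len: int = 8192, ramp_fraction: float = 0.5,
                      multiple_of: int = 32) -> int:
    """Hypothetical linear short-to-long schedule (illustrative only).

    The window grows linearly from start_len to final_len over the first
    ramp_fraction of training, then stays at final_len.
    """
    ramp_steps = max(1, int(total_steps * ramp_fraction))
    if step >= ramp_steps:
        return final_len
    length = start_len + (final_len - start_len) * step / ramp_steps
    # Round down to a multiple of `multiple_of` (an assumed convenience
    # for hardware-friendly shapes), then clamp to the valid range.
    length = int(length) // multiple_of * multiple_of
    return max(start_len, min(length, final_len))


def sequences_per_batch(tokens_per_batch: int, context_len: int) -> int:
    """With a fixed token budget per step, shorter windows give more sequences."""
    return tokens_per_batch // context_len


if __name__ == "__main__":
    total = 1000
    for step in (0, 250, 500, 999):
        window = context_window_at(step, total)
        print(step, window, sequences_per_batch(2**20, window))
```

The analogy to batch size warmup in the commentary shows up here directly: both are a single scalar that starts small and is ramped up as a function of the training step, while the token budget per step can stay fixed.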

#pretraining #long-context

What Makes a Reward Model a Good Teacher? An Optimization Perspective

(Noam Razin, Zixuan Wang, Hubert Strauss, Stanley Wei, Jason D. Lee, Sanjeev Arora)

The success of Reinforcement Learning from Human Feedback (RLHF) critically depends on the quality of the reward model. While this quality is primarily evaluated through accuracy, it remains unclear whether accuracy fully captures what makes a reward model an effective teacher. We address this question from an optimization perspective. First, we prove that regardless of how accurate a reward model is, if it induces low reward variance, then the RLHF objective suffers from a flat landscape. Consequently, even a perfectly accurate reward model can lead to extremely slow optimization, underperforming less accurate models that induce higher reward variance. We additionally show that a reward model that works well for one language model can induce low reward variance, and thus a flat objective landscape, for another. These results establish a fundamental limitation of evaluating reward models solely based on accuracy or independently of the language model they guide. Experiments using models of up to 8B parameters corroborate our theory, demonstrating the interplay between reward variance, accuracy, and reward maximization rate. Overall, our findings highlight that beyond accuracy, a reward model needs to induce sufficient variance for efficient optimization.

This work argues that accuracy is not the only thing that matters for a reward model: the variance of its reward scores over samples generated by the policy matters too. Because that variance also depends on the policy, the properties of both the policy and the reward model come into play.
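A toy example can show how accuracy and reward variance come apart. The sketch below is an illustration only, not the paper's setup: the helper `pairwise_accuracy`, the hand-picked scores, and the use of population variance over four samples are assumptions. It scores the same four policy samples with two hypothetical reward models, one that ranks every pair correctly but barely separates the samples, and one that makes a ranking mistake but spreads its scores widely.

```python
from itertools import combinations
from statistics import pvariance


def pairwise_accuracy(rewards, true_quality):
    """Fraction of sample pairs that the reward model orders like the ground truth.

    Pairs with equal true quality are skipped. A tie in rewards is treated
    as "not greater", so it counts as correct only when the first sample
    is truly the worse one.
    """
    pairs = [(i, j) for i, j in combinations(range(len(rewards)), 2)
             if true_quality[i] != true_quality[j]]
    correct = sum((rewards[i] > rewards[j]) == (true_quality[i] > true_quality[j])
                  for i, j in pairs)
    return correct / len(pairs)


# Hypothetical ground-truth quality of four samples drawn from one policy.
true_quality = [1, 2, 3, 4]

# Reward model A: perfectly ordered, but the scores are almost identical.
rm_a = [0.50, 0.51, 0.52, 0.53]
# Reward model B: swaps the middle two samples, but separates scores widely.
rm_b = [0.0, 2.0, 1.0, 3.0]

for name, rewards in (("A", rm_a), ("B", rm_b)):
    print(name, pairwise_accuracy(rewards, true_quality), pvariance(rewards))
```

Here model A reaches an accuracy of 1.0 with a variance of about 0.000125, while model B reaches 5/6 accuracy with a variance of 1.25. Since the scores are computed over samples from the policy, a different policy that produces a different set of samples would change both variances, which is the dependence on the policy noted above.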

#reward-model #rlhf

Thanks to arXiv Daily, I get to read good papers every day.

Are there any other papers worth reading on training with a growing batch size, or on Shortformer-style training?