2025년 3월 11일

Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation

(Bowen Baker, Joost Huizinga, Leo Gao, Zehao Dou, Melody Y. Guan, Aleksander Madry, Wojciech Zaremba, Jakub Pachocki, David Farhi)

Mitigating reward hacking—where AI systems misbehave due to flaws or misspecifications in their learning objectives—remains a key challenge in constructing capable and aligned models. We show that we can monitor a frontier reasoning model, such as OpenAI o3-mini, for reward hacking in agentic coding environments by using another LLM that observes the model’s chain-of-thought (CoT) reasoning. CoT monitoring can be far more effective than monitoring agent actions and outputs alone, and we further found that a LLM weaker than o3-mini, namely GPT-4o, can effectively monitor a stronger model. Because CoT monitors can be effective at detecting exploits, it is natural to ask whether those exploits can be suppressed by incorporating a CoT monitor directly into the agent’s training objective. While we show that integrating CoT monitors into the reinforcement learning reward can indeed produce more capable and more aligned agents in the low optimization regime, we find that with too much optimization, agents learn obfuscated reward hacking, hiding their intent within the CoT while still exhibiting a significant rate of reward hacking. Because it is difficult to tell when CoTs have become obfuscated, it may be necessary to pay a monitorability tax by not applying strong optimization pressures directly to the chain-of-thought, ensuring that CoTs remain monitorable and useful for detecting misaligned behavior.

추론 모델의 Reward Hacking을 CoT를 관찰하는 것으로 탐지할 수 있다는 연구. 그렇다면 CoT에 대한 관찰 결과를 토대로 Reward를 준다면 어떨까? 제한된 조건에서긴 하지만 CoT를 위장하는 Reward Hacking이 일어난다고 합니다. 따라서 CoT에는 일단 손대지 않는 것이 좋겠다는 결론.

추가적으로 CoT에서 모델이 자신이 학습 중 혹은 평가 중이라는 것을 인식하는 경우가 있었다고 합니다. Anthropic에서도 연구한 주제죠. (https://arxiv.org/abs/2412.14093) 안전 연구자들이 우려하던 현상들이 보다 현실적인 시나리오에서 실제로 일어나기 시작하네요.

This study demonstrates that it's possible to detect reward hacking in reasoning models by observing their CoT. Then what if we provide rewards based on CoT observations? Although limited to restricted conditions, they found that reward hacking that obfuscates CoT does occur. Therefore, they conclude it's better not to directly manipulate the CoT.

Additionally, there were instances where the model recognized it was being trained or evaluated within its CoT. This is similar to a problem Anthropic also studied (https://arxiv.org/abs/2412.14093). Phenomena that safety researchers have been concerned about are now starting to occur in more realistic scenarios.

#reasoning #rl #safety #reward

Vision-R1: Incentivizing Reasoning Capability in Multimodal Large Language Models

(Wenxuan Huang, Bohan Jia, Zijie Zhai, Shaosheng Cao, Zheyu Ye, Fei Zhao, Yao Hu, Shaohui Lin)

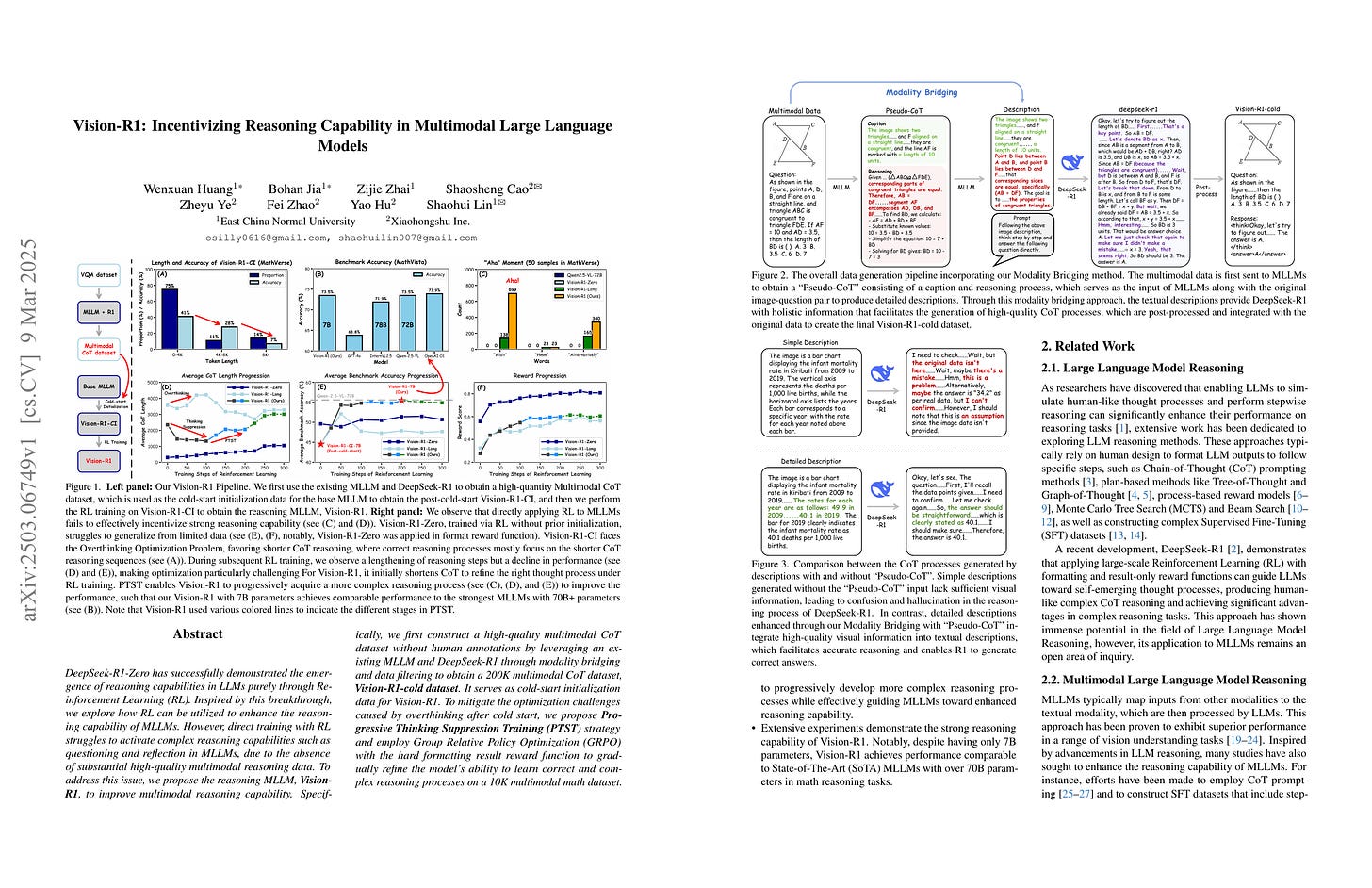

DeepSeek-R1-Zero has successfully demonstrated the emergence of reasoning capabilities in LLMs purely through Reinforcement Learning (RL). Inspired by this breakthrough, we explore how RL can be utilized to enhance the reasoning capability of MLLMs. However, direct training with RL struggles to activate complex reasoning capabilities such as questioning and reflection in MLLMs, due to the absence of substantial high-quality multimodal reasoning data. To address this issue, we propose the reasoning MLLM, Vision-R1, to improve multimodal reasoning capability. Specifically, we first construct a high-quality multimodal CoT dataset without human annotations by leveraging an existing MLLM and DeepSeek-R1 through modality bridging and data filtering to obtain a 200K multimodal CoT dataset, Vision-R1-cold dataset. It serves as cold-start initialization data for Vision-R1. To mitigate the optimization challenges caused by overthinking after cold start, we propose Progressive Thinking Suppression Training (PTST) strategy and employ Group Relative Policy Optimization (GRPO) with the hard formatting result reward function to gradually refine the model's ability to learn correct and complex reasoning processes on a 10K multimodal math dataset. Comprehensive experiments show our model achieves an average improvement of ∼6% across various multimodal math reasoning benchmarks. Vision-R1-7B achieves a 73.5% accuracy on the widely used MathVista benchmark, which is only 0.4% lower than the leading reasoning model, OpenAI O1. The datasets and code will be released in: https://github.com/Osilly/Vision-R1 .

멀티모달 RL. R1에서 샘플링한 데이터로 콜드 스타트를 했네요. 그냥 RL을 진행하면 무작정 응답이 길어지는 경향이 있어서 샘플링 길이를 점진적으로 증가시키는 방법을 썼다고 합니다. 이전에 나온 결과와 비교하자면 (https://arxiv.org/abs/2503.05132) 이쪽은 Instruct 모델을 베이스로 사용했네요.

Multimodal RL. They cold-started with data sampled from R1. When applying RL directly, there's a tendency for response lengths to increase uncontrollably, so they adopted a method of gradually increasing sampling lengths. Compared to previous results (https://arxiv.org/abs/2503.05132), this study used an instruct model as a base.

#multimodal #reasoning #rl

V2Flow: Unifying Visual Tokenization and Large Language Model Vocabularies for Autoregressive Image Generation

(Guiwei Zhang, Tianyu Zhang, Mohan Zhou, Yalong Bai, Biye Li)

We propose V2Flow, a novel tokenizer that produces discrete visual tokens capable of high-fidelity reconstruction, while ensuring structural and latent distribution alignment with the vocabulary space of large language models (LLMs). Leveraging this tight visual-vocabulary coupling, V2Flow enables autoregressive visual generation on top of existing LLMs. Our approach formulates visual tokenization as a flow-matching problem, aiming to learn a mapping from a standard normal prior to the continuous image distribution, conditioned on token sequences embedded within the LLMs vocabulary space. The effectiveness of V2Flow stems from two core designs. First, we propose a Visual Vocabulary resampler, which compresses visual data into compact token sequences, with each represented as a soft categorical distribution over LLM's vocabulary. This allows seamless integration of visual tokens into existing LLMs for autoregressive visual generation. Second, we present a masked autoregressive Rectified-Flow decoder, employing a masked transformer encoder-decoder to refine visual tokens into contextually enriched embeddings. These embeddings then condition a dedicated velocity field for precise reconstruction. Additionally, an autoregressive rectified-flow sampling strategy is incorporated, ensuring flexible sequence lengths while preserving competitive reconstruction quality. Extensive experiments show that V2Flow outperforms mainstream VQ-based tokenizers and facilitates autoregressive visual generation on top of existing. https://github.com/zhangguiwei610/V2Flow

Masked Autoregression에 (https://arxiv.org/abs/2406.11838) Rectified Flow를 사용해 이미지 토크나이저를 학습. 이때 이미지 토크나이저의 코드북을 LLM의 임베딩을 사용해서 만듭니다. LLM을 튜닝할 때 텍스트 토큰과 이미지 토큰을 정렬시키려는 아이디어겠죠.

이미지 토크나이저를 Diffusion을 사용해 구성하는 것은 흥미로운 아이디어라고 봅니다. (https://arxiv.org/abs/2501.18593)

The authors train an image tokenizer using masked autoregression (https://arxiv.org/abs/2406.11838) and rectified flow. They construct the codebook for the image tokenizer using embeddings from LLMs. The idea of approach is to align text and image tokens when fine-tuning LLMs.

I find the idea of constructing image tokenizers using diffusion to be intriguing (https://arxiv.org/abs/2501.18593).

#tokenizer #vq #autoregressive-model #diffusion