March 10, 2025

Every FLOP Counts: Scaling a 300B Mixture-of-Experts LING LLM without Premium GPUs

(Ling Team, Binwei Zeng, Chao Huang, Chao Zhang, Changxin Tian, Cong Chen, Dingnan Jin, Feng Yu, Feng Zhu, Feng Yuan, Fakang Wang, Gangshan Wang, Guangyao Zhai, Haitao Zhang, Huizhong Li, Jun Zhou, Jia Liu, Junpeng Fang, Junjie Ou, Jun Hu, Ji Luo, Ji Zhang, Jian Liu, Jian Sha, Jianxue Qian, Jiewei Wu, Junping Zhao, Jianguo Li, Jubao Feng, Jingchao Di, Junming Xu, Jinghua Yao, Kuan Xu, Kewei Du, Longfei Li, Lei Liang, Lu Yu, Li Tang, Lin Ju, Peng Xu, Qing Cui, Song Liu, Shicheng Li, Shun Song, Song Yan, Tengwei Cai, Tianyi Chen, Ting Guo, Ting Huang, Tao Feng, Tao Wu, Wei Wu, Xiaolu Zhang, Xueming Yang, Xin Zhao, Xiaobo Hu, Xin Lin, Yao Zhao, Yilong Wang, Yongzhen Guo, Yuanyuan Wang, Yue Yang, Yang Cao, Yuhao Fu, Yi Xiong, Yanzhe Li, Zhe Li, Zhiqiang Zhang, Ziqi Liu, Zhaoxin Huan, Zujie Wen, Zhenhang Sun, Zhuoxuan Du, Zhengyu He)

In this technical report, we tackle the challenges of training large-scale Mixture of Experts (MoE) models, focusing on overcoming cost inefficiency and resource limitations prevalent in such systems. To address these issues, we present two differently sized MoE large language models (LLMs), namely Ling-Lite and Ling-Plus (referred to as "Bailing" in Chinese, spelled Bǎilíng in Pinyin). Ling-Lite contains 16.8 billion parameters with 2.75 billion activated parameters, while Ling-Plus boasts 290 billion parameters with 28.8 billion activated parameters. Both models exhibit comparable performance to leading industry benchmarks. This report offers actionable insights to improve the efficiency and accessibility of AI development in resource-constrained settings, promoting more scalable and sustainable technologies. Specifically, to reduce training costs for large-scale MoE models, we propose innovative methods for (1) optimization of model architecture and training processes, (2) refinement of training anomaly handling, and (3) enhancement of model evaluation efficiency. Additionally, leveraging high-quality data generated from knowledge graphs, our models demonstrate superior capabilities in tool use compared to other models. Ultimately, our experimental findings demonstrate that a 300B MoE LLM can be effectively trained on lower-performance devices while achieving comparable performance to models of a similar scale, including dense and MoE models. Compared to high-performance devices, utilizing a lower-specification hardware system during the pre-training phase demonstrates significant cost savings, reducing computing costs by approximately 20%. The models can be accessed at https://huggingface.co/inclusionAI.
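The gap between total and activated parameters comes from sparse routing: each token is sent to only a few experts. Ling-Lite activates about 16% of its weights per token (2.75B / 16.8B) and Ling-Plus about 10% (28.8B / 290B). Below is a minimal, generic top-k gating sketch in plain Python, not Ling's router; the toy experts, the router weights, and renormalizing the gates with a softmax over only the selected experts are assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits, k):
    """Pick the k highest-scoring experts and renormalize their gates to sum to 1."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x, experts, router_weights, k):
    """Sparse MoE layer for one token vector x: only the k routed experts run."""
    # Router logit per expert: dot product of that expert's router row with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    out = [0.0] * len(x)
    for idx, gate in top_k_route(logits, k):
        y = experts[idx](x)  # experts that were not selected are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy example: four experts that scale their input by 1, 2, 3 and 4.
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 0.0], [2.0, 2.0], [-1.0, -1.0]]
print(moe_forward([1.0, 1.0], experts, router, k=1))  # [3.0, 3.0]
```

Compute per token scales with k rather than with the number of experts, which is why the activated count, not the total, drives training and inference FLOPs.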

An MoE model with 29B activated and 290B total parameters, trained on 9T tokens. Surprisingly, they migrated the training run between clusters built on different hardware and trained with a method based on local SGD (https://arxiv.org/abs/2412.07210). The report also has many interesting findings on MoE, including scaling law estimates.
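Local SGD reduces communication by letting each worker take several optimizer steps on its own before parameters are synchronized, which is why it suits training across clusters with slow links between them. Below is a minimal sketch of plain local SGD (periodic parameter averaging) on a one-parameter least-squares toy problem; the linked method and Ling's actual setup add machinery not modeled here, and the shard layout, learning rate, and step counts are illustrative assumptions.

```python
def local_sgd(shards, rounds, local_steps, lr, w0=0.0):
    """Plain local SGD on a 1-D least-squares model y ≈ w * x.

    Every round, each worker copies the shared weight, runs `local_steps`
    SGD updates on its own shard without communicating, and the round ends
    with a single synchronization: averaging the workers' weights.
    """
    w = w0
    for _ in range(rounds):
        local_ws = []
        for shard in shards:
            w_local = w
            for step in range(local_steps):
                x, y = shard[step % len(shard)]
                grad = 2.0 * (w_local * x - y) * x  # derivative of (w*x - y)**2
                w_local -= lr * grad
            local_ws.append(w_local)
        w = sum(local_ws) / len(local_ws)  # the only communication step
    return w


# Three workers whose shards cover different x ranges, all drawn from y = 3x.
shards = [[(x, 3.0 * x) for x in xs] for xs in ([0.5, 0.6, 0.7], [0.9, 1.0, 1.1], [1.3, 1.4, 1.5])]
print(round(local_sgd(shards, rounds=50, local_steps=10, lr=0.05), 4))  # 3.0
```

With `local_steps=1` this reduces to synchronous data-parallel SGD; larger values trade gradient freshness for fewer synchronizations.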

#scaling-law #distributed-training #moe

Frequency Autoregressive Image Generation with Continuous Tokens

(Hu Yu, Hao Luo, Hangjie Yuan, Yu Rong, Feng Zhao)

Autoregressive (AR) models for image generation typically adopt a two-stage paradigm of vector quantization and raster-scan "next-token prediction", inspired by its great success in language modeling. However, due to the huge modality gap, image autoregressive models may require a systematic reevaluation from two perspectives: tokenizer format and regression direction. In this paper, we introduce the frequency progressive autoregressive (FAR) paradigm and instantiate FAR with the continuous tokenizer. Specifically, we identify spectral dependency as the desirable regression direction for FAR, wherein higher-frequency components build upon the lower ones to progressively construct a complete image. This design seamlessly fits the causality requirement for autoregressive models and preserves the unique spatial locality of image data. Besides, we delve into the integration of FAR and the continuous tokenizer, introducing a series of techniques to address optimization challenges and improve the efficiency of training and inference processes. We demonstrate the efficacy of FAR through comprehensive experiments on the ImageNet dataset and verify its potential on text-to-image generation.
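The idea that higher-frequency components build on lower ones can be made concrete with a frequency decomposition. The sketch below uses an orthonormal 2-D DCT (an assumption; FAR's own definition of frequency levels may differ) and yields reconstructions that keep a growing k x k block of low-frequency coefficients. The first level is the image mean, the last reproduces the image exactly, and each level contains everything in the previous one, giving a causal coarse-to-fine order an autoregressive model could follow.

```python
import math


def dct_matrix(n):
    """Orthonormal DCT-II basis: row u holds the u-th cosine basis vector."""
    rows = []
    for u in range(n):
        scale = math.sqrt(1.0 / n) if u == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * u / (2 * n)) for i in range(n)])
    return rows


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def dct2(img):
    d = dct_matrix(len(img))
    return matmul(matmul(d, img), transpose(d))  # C = D X D^T


def idct2(coef):
    d = dct_matrix(len(coef))
    return matmul(matmul(transpose(d), coef), d)  # X = D^T C D (D is orthogonal)


def frequency_levels(img):
    """Yield reconstructions keeping the k x k lowest-frequency coefficients, k = 1..n."""
    coef = dct2(img)
    n = len(img)
    for k in range(1, n + 1):
        kept = [[coef[u][v] if u < k and v < k else 0.0 for v in range(n)] for u in range(n)]
        yield idct2(kept)


img = [[float(4 * i + j) for j in range(4)] for i in range(4)]
for k, level in enumerate(frequency_levels(img), start=1):
    print(k, [round(v, 2) for v in level[0]])  # first row of each reconstruction
```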

Autoregressive image generation in the frequency domain. They use a MAR-style (https://arxiv.org/abs/2406.11838) diffusion loss. According to the authors, continuous tokens did not work well with VAR (https://arxiv.org/abs/2404.02905), and fixing that seems to have been the main motivation of this work (independent of the final performance numbers).
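In MAR, the cross-entropy over a discrete codebook is replaced by a per-token diffusion loss: the autoregressive backbone produces a condition z, and a small denoising network (an MLP in MAR) is trained to predict the noise added to the continuous token. Below is a minimal sketch of that noise-prediction loss for one token; the cosine schedule, the 1000 steps, and the `denoiser(x_t, t, z)` signature are assumptions for illustration.

```python
import math
import random


def alpha_bar(t, num_steps, s=0.008):
    """Cosine schedule (assumed): fraction of signal variance left at step t."""
    def f(u):
        return math.cos((u / num_steps + s) / (1 + s) * math.pi / 2) ** 2
    return f(t) / f(0)


def diffusion_loss(x0, z, denoiser, num_steps=1000, rng=random):
    """Noise-prediction MSE for one continuous token x0 given the AR condition z."""
    t = rng.randint(1, num_steps)
    ab = alpha_bar(t, num_steps)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    # Forward process: x_t = sqrt(ab) * x0 + sqrt(1 - ab) * eps.
    xt = [math.sqrt(ab) * a + math.sqrt(1.0 - ab) * e for a, e in zip(x0, eps)]
    eps_hat = denoiser(xt, t, z)  # hypothetical small network conditioned on z
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat)) / len(x0)
```

At sampling time the same denoiser runs the reverse process, turning noise into a token conditioned on z, so each token is sampled from a continuous distribution rather than picked from a codebook.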

#autoregressive-model #diffusion

R1-Zero's "Aha Moment" in Visual Reasoning on a 2B Non-SFT Model

(Hengguang Zhou, Xirui Li, Ruochen Wang, Minhao Cheng, Tianyi Zhou, Cho-Jui Hsieh)

Recently, DeepSeek R1 demonstrated how reinforcement learning with simple rule-based incentives can enable autonomous development of complex reasoning in large language models, characterized by the "aha moment", in which the model manifests self-reflection and increased response length during training. However, attempts to extend this success to multimodal reasoning often failed to reproduce these key characteristics. In this report, we present the first successful replication of these emergent characteristics for multimodal reasoning on only a non-SFT 2B model. Starting with Qwen2-VL-2B and applying reinforcement learning directly on the SAT dataset, our model achieves 59.47% accuracy on CVBench, outperforming the base model by approximately 30% and exceeding both SFT settings by ~2%. In addition, we share our failed attempts and insights in attempting to achieve R1-like reasoning using RL with instruct models, aiming to shed light on the challenges involved. Our key observations include: (1) applying RL on instruct models often results in trivial reasoning trajectories, and (2) naive length rewards are ineffective in eliciting reasoning capabilities. The project code is available at https://github.com/turningpoint-ai/VisualThinker-R1-Zero
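"Simple rule-based incentives" means rewards a program can check without a learned reward model. Below is a minimal sketch of such a reward: a format term for wrapping reasoning and answer in tags, plus an exact-match accuracy term. The tag names and the equal 1.0/1.0 weighting are assumptions borrowed from the common R1 prompt template, not necessarily what this project uses.

```python
import re

# Assumed R1-style template: reasoning in <think>...</think>, then <answer>...</answer>.
TEMPLATE = re.compile(r"<think>.*?</think>\s*<answer>(.*?)</answer>", re.DOTALL)


def rule_based_reward(completion, gold):
    """Format reward (1.0) plus exact-match accuracy reward (1.0), both rule-checked."""
    match = TEMPLATE.fullmatch(completion.strip())
    if match is None:
        return 0.0  # malformed output earns nothing, even if it contains the answer
    answer = match.group(1).strip().lower()
    accuracy = 1.0 if answer == gold.strip().lower() else 0.0
    return 1.0 + accuracy


print(rule_based_reward("<think>The box is left of the cup.</think> <answer>A</answer>", "a"))  # 2.0
```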

The authors apply reasoning-oriented RL to multimodal tasks, starting from Qwen2-VL-2B. With GRPO and no SFT, they observed the model acquire reasoning abilities. Conversely, they report that the same approach did not work well when started from instruct models.
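GRPO drops PPO's learned value function: it samples a group of responses per prompt, scores each one, and uses the reward standardized within the group as that response's advantage. Below is a minimal sketch of the advantage computation and the PPO-style clipped term; the KL penalty toward a reference model is omitted, and the epsilon and clip values are illustrative assumptions.

```python
import math
import statistics


def group_advantages(rewards, eps=1e-6):
    """GRPO-style advantage: standardize each reward within its sampled group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_term(logp_new, logp_old, advantage, clip=0.2):
    """PPO-style clipped surrogate for one response (to be maximized)."""
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - clip), 1.0 + clip)
    return min(ratio * advantage, clipped * advantage)


# One prompt, four sampled responses with rule-based rewards.
print([round(a, 3) for a in group_advantages([2.0, 1.0, 0.0, 1.0])])  # [1.414, 0.0, -1.414, 0.0]
```

If every response in a group receives the same reward, all advantages are zero and that group contributes no policy gradient.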

#reasoning #rl #multimodal

R1-Searcher: Incentivizing the Search Capability in LLMs via Reinforcement Learning

(Huatong Song, Jinhao Jiang, Yingqian Min, Jie Chen, Zhipeng Chen, Wayne Xin Zhao, Lei Fang, Ji-Rong Wen)

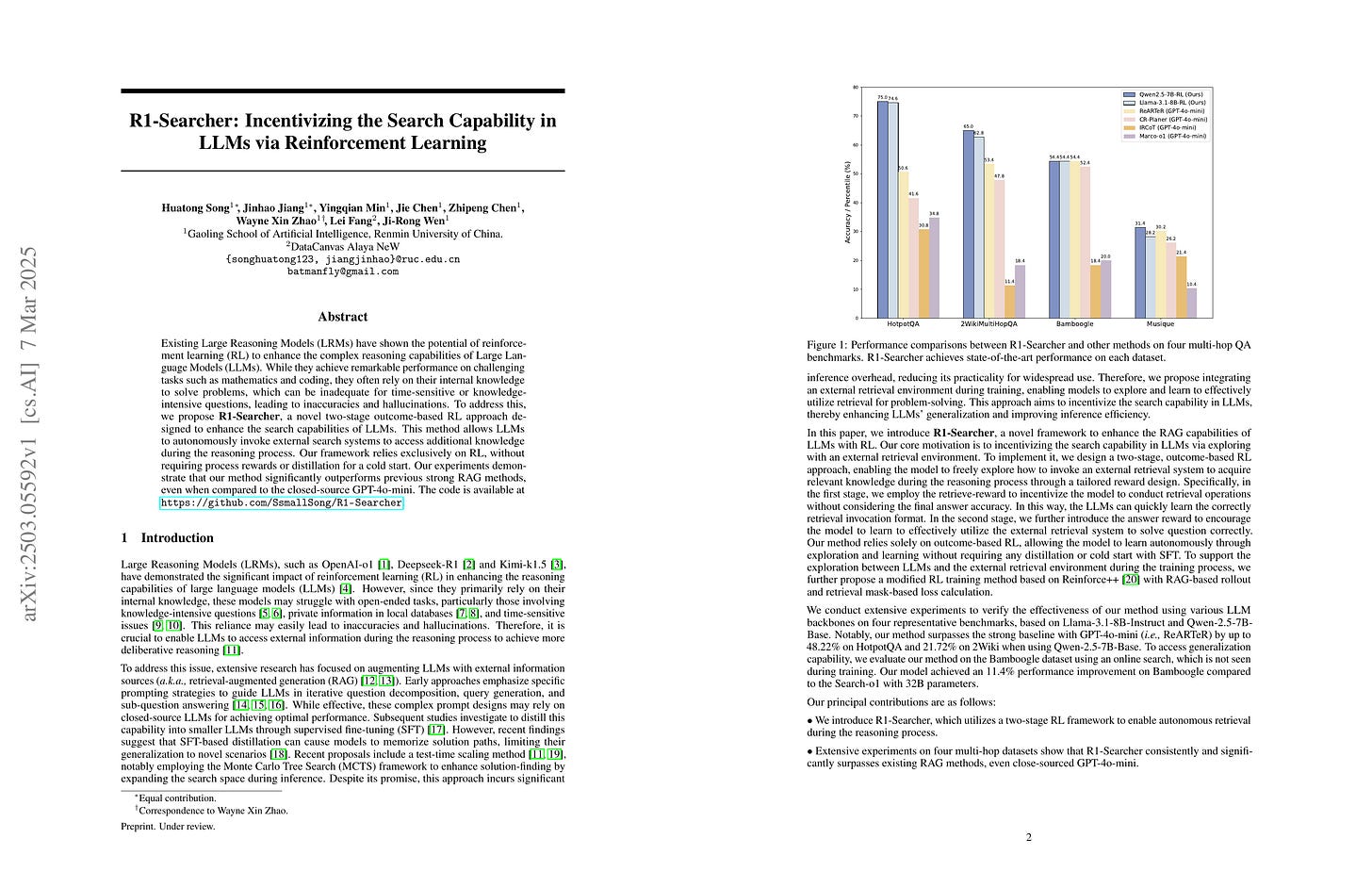

Existing Large Reasoning Models (LRMs) have shown the potential of reinforcement learning (RL) to enhance the complex reasoning capabilities of Large Language Models (LLMs). While they achieve remarkable performance on challenging tasks such as mathematics and coding, they often rely on their internal knowledge to solve problems, which can be inadequate for time-sensitive or knowledge-intensive questions, leading to inaccuracies and hallucinations. To address this, we propose R1-Searcher, a novel two-stage outcome-based RL approach designed to enhance the search capabilities of LLMs. This method allows LLMs to autonomously invoke external search systems to access additional knowledge during the reasoning process. Our framework relies exclusively on RL, without requiring process rewards or distillation for a cold start. Our experiments demonstrate that our method significantly outperforms previous strong RAG methods, even when compared to the closed-source GPT-4o-mini.
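Letting the model "autonomously invoke external search systems" during reasoning means generation pauses whenever the model emits a query, retrieved documents are spliced into the context, and generation resumes. Below is a minimal sketch of that rollout loop; the tag strings and the `generate`/`search` callables are hypothetical stand-ins, not R1-Searcher's actual interface.

```python
# Hypothetical special tags; the paper's actual markers may differ.
QUERY_OPEN, QUERY_CLOSE = "<begin_of_query>", "<end_of_query>"
DOCS_OPEN, DOCS_CLOSE = "<begin_of_documents>", "<end_of_documents>"


def rollout_with_search(generate, search, prompt, max_calls=4):
    """Interleave policy generation with retrieval calls.

    generate(text, stop) returns the continuation, ending with `stop` when the
    model issues a query; search(query) returns a list of document strings.
    Both are caller-supplied stand-ins for a real LLM and retriever.
    """
    trace = prompt
    for _ in range(max_calls):
        chunk = generate(trace, stop=QUERY_CLOSE)
        trace += chunk
        if not chunk.endswith(QUERY_CLOSE):
            return trace  # the model answered without requesting more retrieval
        query = chunk.rsplit(QUERY_OPEN, 1)[-1][: -len(QUERY_CLOSE)].strip()
        docs = "\n".join(search(query))
        trace += f"{DOCS_OPEN}\n{docs}\n{DOCS_CLOSE}"
    # Retrieval budget exhausted: let the model finish without another stop tag.
    return trace + generate(trace, stop=None)


def scripted_generate(text, stop):
    """Toy policy: query once, then answer after documents appear."""
    if DOCS_OPEN not in text:
        return f"<think>I need a fact.</think>{QUERY_OPEN}capital of France{QUERY_CLOSE}"
    return "<answer>Paris</answer>"


print(rollout_with_search(scripted_generate, lambda q: [f"Doc: {q} is Paris."], "Q: capital of France?"))
```

During RL training the retrieved text is not produced by the policy, so whether to exclude those tokens from the policy-gradient loss is a design choice this sketch leaves open.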

This paper attempts to instill search tool use through RL. Training models with RL to call tools repeatedly seems like a natural next step. (I suspect Deep Research used a similar approach.)
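Because training relies only on outcome-based rewards, the reward function carries most of the design. Below is a minimal sketch of a two-stage outcome reward: the first stage only rewards calling the retriever, and the second scores the final answer with token-level F1. The stage split, the 0.5 bonus, and the `<end_of_query>` tag are hypothetical choices for illustration, not the paper's exact reward.

```python
from collections import Counter


def f1_score(prediction, gold):
    """Token-level F1 between two whitespace-tokenized, lowercased strings."""
    pred, ref = prediction.lower().split(), gold.lower().split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def outcome_reward(trace, answer, gold, stage):
    """Hypothetical two-stage outcome reward for a search-augmented rollout."""
    used_search = "<end_of_query>" in trace  # hypothetical query-close tag
    if stage == 1:
        # Stage 1: teach the policy to call the retriever at all.
        return 0.5 if used_search else 0.0
    # Stage 2: reward only the quality of the final answer.
    return f1_score(answer, gold)


print(f1_score("the Eiffel Tower", "Eiffel Tower"))  # 0.8
```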

#reasoning #lm