2025년 2월 7일

BOLT: Bootstrap Long Chain-of-Thought in Language Models without Distillation

(Bo Pang, Hanze Dong, Jiacheng Xu, Silvio Savarese, Yingbo Zhou, Caiming Xiong)

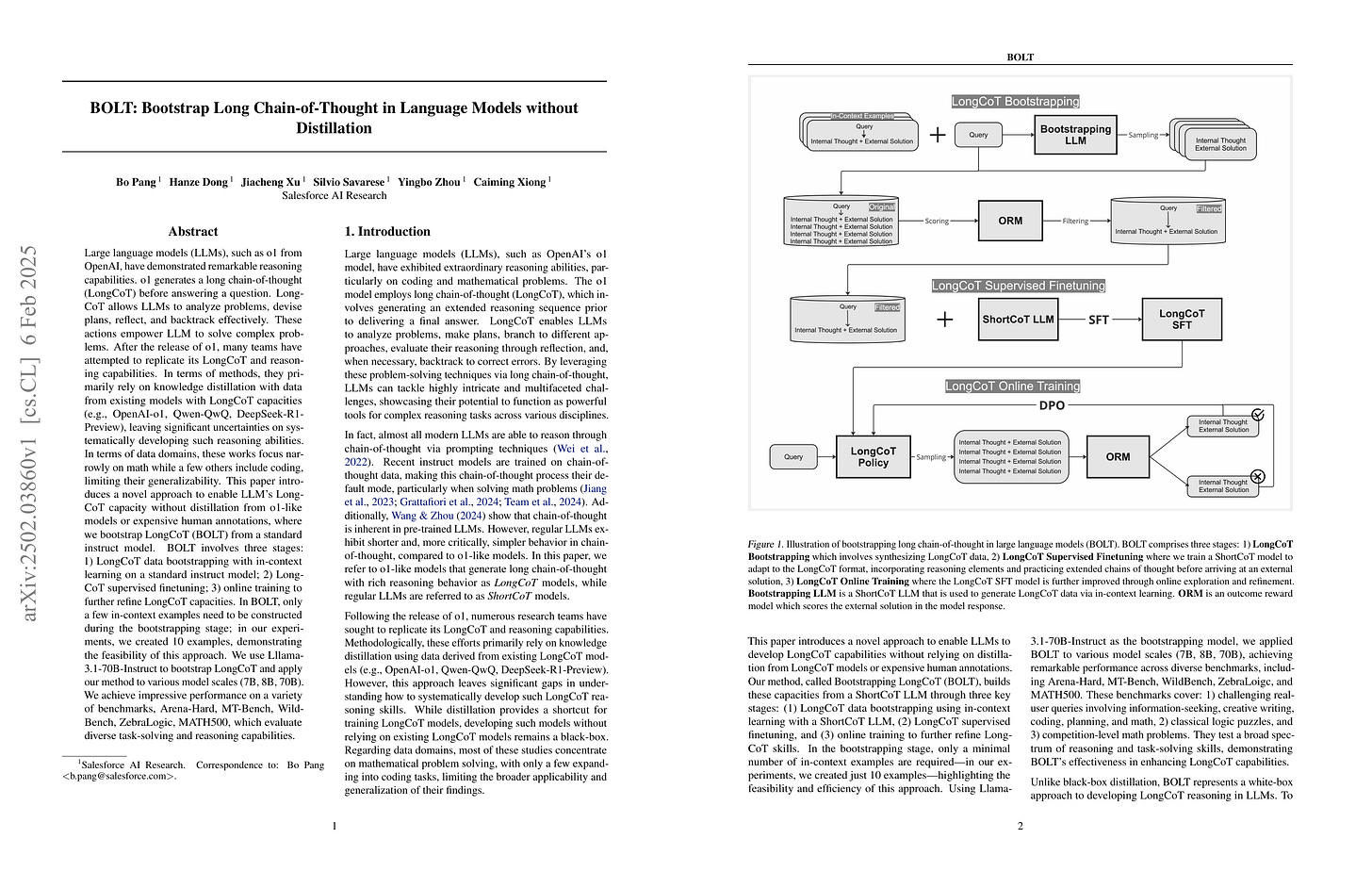

Large language models (LLMs), such as o1 from OpenAI, have demonstrated remarkable reasoning capabilities. o1 generates a long chain-of-thought (LongCoT) before answering a question. LongCoT allows LLMs to analyze problems, devise plans, reflect, and backtrack effectively. These actions empower LLM to solve complex problems. After the release of o1, many teams have attempted to replicate its LongCoT and reasoning capabilities. In terms of methods, they primarily rely on knowledge distillation with data from existing models with LongCoT capacities (e.g., OpenAI-o1, Qwen-QwQ, DeepSeek-R1-Preview), leaving significant uncertainties on systematically developing such reasoning abilities. In terms of data domains, these works focus narrowly on math while a few others include coding, limiting their generalizability. This paper introduces a novel approach to enable LLM's LongCoT capacity without distillation from o1-like models or expensive human annotations, where we bootstrap LongCoT (BOLT) from a standard instruct model. BOLT involves three stages: 1) LongCoT data bootstrapping with in-context learning on a standard instruct model; 2) LongCoT supervised finetuning; 3) online training to further refine LongCoT capacities. In BOLT, only a few in-context examples need to be constructed during the bootstrapping stage; in our experiments, we created 10 examples, demonstrating the feasibility of this approach. We use Llama-3.1-70B-Instruct to bootstrap LongCoT and apply our method to various model scales (7B, 8B, 70B). We achieve impressive performance on a variety of benchmarks, Arena-Hard, MT-Bench, WildBench, ZebraLogic, MATH500, which evaluate diverse task-solving and reasoning capabilities.

Distillation 대신 In-context Learning과 필터링으로 Long CoT 샘플을 생성한 시도. DeepSeek-R1이나 Kimi k1.5가 SFT 데이터셋을 구축하는데 사용한 방법 중 하나이기도 하겠죠.

An attempt to generate long CoT samples using in-context learning and filtering instead of distillation. It would be the one of the methods used by DeepSeek-R1 and Kimi k1.5 to construct their SFT datasets.

#reasoning #synthetic-data

Éclair -- Extracting Content and Layout with Integrated Reading Order for Documents

(Ilia Karmanov, Amala Sanjay Deshmukh, Lukas Voegtle, Philipp Fischer, Kateryna Chumachenko, Timo Roman, Jarno Seppänen, Jupinder Parmar, Joseph Jennings, Andrew Tao, Karan Sapra)

Optical Character Recognition (OCR) technology is widely used to extract text from images of documents, facilitating efficient digitization and data retrieval. However, merely extracting text is insufficient when dealing with complex documents. Fully comprehending such documents requires an understanding of their structure -- including formatting, formulas, tables, and the reading order of multiple blocks and columns across multiple pages -- as well as semantic information for detecting elements like footnotes and image captions. This comprehensive understanding is crucial for downstream tasks such as retrieval, document question answering, and data curation for training Large Language Models (LLMs) and Vision Language Models (VLMs). To address this, we introduce Éclair, a general-purpose text-extraction tool specifically designed to process a wide range of document types. Given an image, Éclair is able to extract formatted text in reading order, along with bounding boxes and their corresponding semantic classes. To thoroughly evaluate these novel capabilities, we introduce our diverse human-annotated benchmark for document-level OCR and semantic classification. Éclair achieves state-of-the-art accuracy on this benchmark, outperforming other methods across key metrics. Additionally, we evaluate Éclair on established benchmarks, demonstrating its versatility and strength across several evaluation standards.

레이아웃 정보를 보존하는 OCR 모델. 논문에서도 언급하고 있지만 LLM 프리트레이닝을 위해선 이제 좋은 OCR 파이프라인을 갖고 있는 것도 중요한 문제가 아닐까 싶습니다.

An OCR model that preserves layout information. As mentioned in the paper, having a good OCR pipeline might become an important factor for LLM pretraining.

#ocr

MAGA: MAssive Genre-Audience Reformulation to Pretraining Corpus Expansion

(Xintong Hao, Ke Shen, Chenggang Li)

Despite the remarkable capabilities of large language models across various tasks, their continued scaling faces a critical challenge: the scarcity of high-quality pretraining data. While model architectures continue to evolve, the natural language data struggles to scale up. To tackle this bottleneck, we propose MAssive Genre-Audience (MAGA) reformulation method, which systematic synthesizes diverse, contextually-rich pretraining data from existing corpus. This work makes three main contributions: (1) We propose MAGA reformulation method, a lightweight and scalable approach for pretraining corpus expansion, and build a 770B tokens MAGACorpus. (2) We evaluate MAGACorpus with different data budget scaling strategies, demonstrating consistent improvements across various model sizes (134M-13B), establishing the necessity for next-generation large-scale synthetic pretraining language models. (3) Through comprehensive analysis, we investigate prompt engineering's impact on synthetic training collapse and reveal limitations in conventional collapse detection metrics using validation losses. Our work shows that MAGA can substantially expand training datasets while maintaining quality, offering a reliably pathway for scaling models beyond data limitations.

재작성으로 프리트레이닝 코퍼스의 증폭을 시도. LLM을 사용해 프리트레이닝 코퍼스를 확장하는 것은 꽤 흥미로운 방향이라고 생각합니다. 그렇지만 이 논문에서 지적하는 것처럼 성공적인 레시피를 찾기 위해선 상당히 조심스러워야 하겠죠.

An attempt to expand the pretraining corpus through rewriting. I think using LLMs to expand the pretraining corpus is quite an interesting direction. However, as pointed out in this paper, we need to be very careful in finding successful recipes for this approach.

#synthetic-data #pretraining

UltraIF: Advancing Instruction Following from the Wild

(Kaikai An, Li Sheng, Ganqu Cui, Shuzheng Si, Ning Ding, Yu Cheng, Baobao Chang)

Instruction-following made modern large language models (LLMs) helpful assistants. However, the key to taming LLMs on complex instructions remains mysterious, for that there are huge gaps between models trained by open-source community and those trained by leading companies. To bridge the gap, we propose a simple and scalable approach UltraIF for building LLMs that can follow complex instructions with open-source data. UltraIF first decomposes real-world user prompts into simpler queries, constraints, and corresponding evaluation questions for the constraints. Then, we train an UltraComposer to compose constraint-associated prompts with evaluation questions. This prompt composer allows us to synthesize complicated instructions as well as filter responses with evaluation questions. In our experiment, for the first time, we successfully align LLaMA-3.1-8B-Base to catch up with its instruct version on 5 instruction-following benchmarks without any benchmark information, using only 8B model as response generator and evaluator. The aligned model also achieved competitive scores on other benchmarks. Moreover, we also show that UltraIF could further improve LLaMA-3.1-8B-Instruct through self-alignment, motivating broader use cases for the method. Our code will be available at https://github.com/kkk-an/UltraIF.

Instruction Following 능력을 위한 데이터 구축. 사용자의 지시를 단순화된 지시와 제약 조건으로 분해하고 평가를 위한 질문을 생성. 그리고 단순화된 지시에서 사용자의 지시와 제약 조건을 생성하는 것을 반복하는 것으로 복잡도를 높이는군요.

Data construction for enhancing instruction following capability. The approach decomposes user instructions into simplified instructions and constraints, then generates questions for evaluation. Complexity is increased by iteratively generating user inputs and constraints from simplified instructions.

#synthetic-data #instruction-tuning

Scaling Laws in Patchification: An Image Is Worth 50,176 Tokens And More

(Feng Wang, Yaodong Yu, Guoyizhe Wei, Wei Shao, Yuyin Zhou, Alan Yuille, Cihang Xie)

Since the introduction of Vision Transformer (ViT), patchification has long been regarded as a de facto image tokenization approach for plain visual architectures. By compressing the spatial size of images, this approach can effectively shorten the token sequence and reduce the computational cost of ViT-like plain architectures. In this work, we aim to thoroughly examine the information loss caused by this patchification-based compressive encoding paradigm and how it affects visual understanding. We conduct extensive patch size scaling experiments and excitedly observe an intriguing scaling law in patchification: the models can consistently benefit from decreased patch sizes and attain improved predictive performance, until it reaches the minimum patch size of 1x1, i.e., pixel tokenization. This conclusion is broadly applicable across different vision tasks, various input scales, and diverse architectures such as ViT and the recent Mamba models. Moreover, as a by-product, we discover that with smaller patches, task-specific decoder heads become less critical for dense prediction. In the experiments, we successfully scale up the visual sequence to an exceptional length of 50,176 tokens, achieving a competitive test accuracy of 84.6% with a base-sized model on the ImageNet-1k benchmark. We hope this study can provide insights and theoretical foundations for future works of building non-compressive vision models. Code is available at https://github.com/wangf3014/Patch_Scaling.

패치 크기에 대한 Scaling Law. 기본적으로 패치 크기가 작을수록 더 고성능입니다. 다만 최적점을 찾고자 한다면 해상도와 패치 크기, 모델 크기, Vision Language 모델이라면 LLM의 크기까지 같이 고려해야 하겠죠.

A scaling law for patch sizes. Generally, smaller patch sizes lead to better performance. However, if we want to find the optimal configuration, we should consider multiple factors like image resolution, patch size, model size, and for vision-language models, the size of the LLMs as well.

#scaling-law #vit