2025년 2월 6일

Gemini 2.0 is now available to everyone

(Google)

Gemini 2.0 프로가 나왔군요. 벤치마크 결과를 보면 프리트레이닝 결과가 실망스러웠다는 소문이 사실일지도 모르겠습니다. 물론 벤치마크 점수와는 별개로 큰 모델의 냄새는 맡을 수 있으리라고 생각합니다만 어쨌든 기대 이하라는 반응은 나올만 합니다.

구글 레벨에서도 데이터가 부족한지, 혹은 멀티 모달 데이터 등이 성능 향상에 큰 도움이 되지 않은 것인지, 혹은 Multi Epoch로 학습량을 끌어올려도 부족했는지 등등. 가능성은 여럿 생각해볼 수 있지만 무엇이 사실인지는 물론 알기 어렵습니다.

(원칙적으로는 학습 이전에 결과를 추정하는 것이 가능하고 따라서 "기대 이하"라고 표현하는 것은 조금 이상할 수는 있겠습니다.)

어쨌든 한동안 프리트레이닝 레벨에서 유의미한 향상이 나타나기 어려울 것 같네요. 후발주자에게는 좋은 일이죠. GPT-4 수준에 도달하면 한동안 프리트레이닝은 건드리지 않아도 된다는 의미니까요. 그리고 GPT-4 수준에는 H100 2천 개 정도면 충분하죠.

따라서 지금도 그렇지만 앞으로도 최소한 얼마동안은 Inference-time Scaling과 추론 능력이 주요한 주제가 되겠네요. 추론 능력 또한 점근선을 만날지도 모르겠지만 어쨌든 한동안은 할 일이 있을 겁니다.

추론 능력을 통해 프리트레이닝의 점근선을 돌파하는 일이 생길지도 모르겠지만 (그리고 되도록 그러기를 바라지만) 어떻게 될지는 모르겠습니다. 이미 OpenAI 오리온에 o1 생성 데이터가 들어갔지만 프리트레이닝이 성공적이지 않았다는 루머도 있긴 했으니까요.

(벤치마크 단위에서는 큰 차이가 없지만 추론 능력을 주입하기 시작하면 차이가 증가하는 가능성도 떠오르긴 합니다만 어떨지 모르겠네요.)

Google has released Gemini 2.0 Pro. Looking at the benchmark results, the rumors about disappointing pretraining results might be true. While we can smell of large models regardless benchmark scores, it's understandable why some are calling it below expectations.

There could be various reasons. Perhaps even Google ran out of data, or multimodal data didn't contribute significantly for performance, or increasing amount of training using multi epoch weren't sufficient, and so on. While we can speculate about various possibilities, it's difficult to know the true cause.

(In principle, since results can be estimated before training, it might seem odd to say it's "below expectations".)

Anyway, it is seems reasonable to expect it would be hard to get significant improvements in pretraining for a while. This is actually good news for those following behnind - once they reach GPT-4 level, they won't need to focus on pretraining for some time. And reaching GPT-4 level requires mere 2000 H100s.

Therefore, as is currently the case, inference-time scaling and reasoning capabilities will remain key focus areas for some time. While reasoning capabilities might also reach an asymptote, there's still work to be done in this area for now.

While it's possible that improved reasoning capabilities might help break through the asymptote of pretraining (and I hope so), it's uncertain. Actually there was rumors that OpenAI's Orion wasn't particularly successful despite incorporating o1 generated data.

(While benchmark scores might not show significant differences, it's possible that differences more apparent once reasoning capabilities are induced, but we don't know about this yet.)

#lm #pretraining

Demystifying Long Chain-of-Thought Reasoning in LLMs

(Edward Yeo, Yuxuan Tong, Morry Niu, Graham Neubig, Xiang Yue)

Scaling inference compute enhances reasoning in large language models (LLMs), with long chains-of-thought (CoTs) enabling strategies like backtracking and error correction. Reinforcement learning (RL) has emerged as a crucial method for developing these capabilities, yet the conditions under which long CoTs emerge remain unclear, and RL training requires careful design choices. In this study, we systematically investigate the mechanics of long CoT reasoning, identifying the key factors that enable models to generate long CoT trajectories. Through extensive supervised fine-tuning (SFT) and RL experiments, we present four main findings: (1) While SFT is not strictly necessary, it simplifies training and improves efficiency; (2) Reasoning capabilities tend to emerge with increased training compute, but their development is not guaranteed, making reward shaping crucial for stabilizing CoT length growth; (3) Scaling verifiable reward signals is critical for RL. We find that leveraging noisy, web-extracted solutions with filtering mechanisms shows strong potential, particularly for out-of-distribution (OOD) tasks such as STEM reasoning; and (4) Core abilities like error correction are inherently present in base models, but incentivizing these skills effectively for complex tasks via RL demands significant compute, and measuring their emergence requires a nuanced approach. These insights provide practical guidance for optimizing training strategies to enhance long CoT reasoning in LLMs. Our code is available at: https://github.com/eddycmu/demystify-long-cot.

SFT와 RL을 통한 추론 능력 획득에 대한 분석. 분석들이 많은데 KL Penalty와 생성 길이가 관계가 있을 가능성과 베이스 모델이 프리트레이닝에서 추론 과정에 필요한 능력들을 획득하는 원천이 되었을 가능성이 높은 문서들에 대한 분석이 재미있네요.

특히 포럼 쓰레드들에서 이런 문서를 발견할 가능성이 높다는 것이 흥미롭네요. 한 사람이 작성한 글에서는 사고의 과정이 명시적으로 드러나지 않는 경우가 많지만 여러 사람이 상호작용하는 상황 자체가 하나의 사고의 과정이 될 수 있다는 의미로 생각할 수 있겠습니다. 실용적으로는 이런 포럼 형태의 문서들을 잘 추출하는 것이 추론 능력에 도움이 된다고 생각할 수 있겠네요.

Analysis of acquiring reasoning capabilities through SFT and RL. Among the various analyses, I find particularly interesting the potential relationship between KL penalty and generation length, as well as the analysis of documents that likely served as sources for the base model to acquire abilities necessary for reasoning during pre-training.

Especially intriguing is the possibility of finding such documents in forum threads. While it's often difficult to find explicit thought processes in writings by individuals, the collective interactions among multiple people can themselves constitute a thought process. Practically, this suggests that effectively extracting these types of forum documents could be beneficial for enhancing reasoning abilities.

#reasoning #rl

LIMO: Less is More for Reasoning

(Yixin Ye, Zhen Huang, Yang Xiao, Ethan Chern, Shijie Xia, Pengfei Liu)

We present a fundamental discovery that challenges our understanding of how complex reasoning emerges in large language models. While conventional wisdom suggests that sophisticated reasoning tasks demand extensive training data (>100,000 examples), we demonstrate that complex mathematical reasoning abilities can be effectively elicited with surprisingly few examples. Through comprehensive experiments, our proposed model LIMO demonstrates unprecedented performance in mathematical reasoning. With merely 817 curated training samples, LIMO achieves 57.1% accuracy on AIME and 94.8% on MATH, improving from previous SFT-based models' 6.5% and 59.2% respectively, while only using 1% of the training data required by previous approaches. LIMO demonstrates exceptional out-of-distribution generalization, achieving 40.5% absolute improvement across 10 diverse benchmarks, outperforming models trained on 100x more data, challenging the notion that SFT leads to memorization rather than generalization. Based on these results, we propose the Less-Is-More Reasoning Hypothesis (LIMO Hypothesis): In foundation models where domain knowledge has been comprehensively encoded during pre-training, sophisticated reasoning capabilities can emerge through minimal but precisely orchestrated demonstrations of cognitive processes. This hypothesis posits that the elicitation threshold for complex reasoning is determined by two key factors: (1) the completeness of the model's encoded knowledge foundation during pre-training, and (2) the effectiveness of post-training examples as "cognitive templates" that show the model how to utilize its knowledge base to solve complex reasoning tasks. To facilitate reproducibility and future research in data-efficient reasoning, we release LIMO as a comprehensive open-source suite at https://github.com/GAIR-NLP/LIMO.

어려운 문제에 좋은 사고 과정이 포함된 소규모 데이터로 학습시키는 것으로 추론 능력을 끌어낼 수 있다는 결과. 베이스 모델이 충분한 능력을 갖추고 있다면 그걸 사용하도록 학습시키는 것으로 충분하다는 것.

This study suggests that reasoning abilities can be induced by training on a small-scale dataset containing difficult problems with high-quality reasoning processes. It indicates that if the base model has sufficient capabilities, then training it to utilize those capabilities is enough.

#reasoning

Masked Autoencoders Are Effective Tokenizers for Diffusion Models

(Hao Chen, Yujin Han, Fangyi Chen, Xiang Li, Yidong Wang, Jindong Wang, Ze Wang, Zicheng Liu, Difan Zou, Bhiksha Raj)

Recent advances in latent diffusion models have demonstrated their effectiveness for high-resolution image synthesis. However, the properties of the latent space from tokenizer for better learning and generation of diffusion models remain under-explored. Theoretically and empirically, we find that improved generation quality is closely tied to the latent distributions with better structure, such as the ones with fewer Gaussian Mixture modes and more discriminative features. Motivated by these insights, we propose MAETok, an autoencoder (AE) leveraging mask modeling to learn semantically rich latent space while maintaining reconstruction fidelity. Extensive experiments validate our analysis, demonstrating that the variational form of autoencoders is not necessary, and a discriminative latent space from AE alone enables state-of-the-art performance on ImageNet generation using only 128 tokens. MAETok achieves significant practical improvements, enabling a gFID of 1.69 with 76x faster training and 31x higher inference throughput for 512x512 generation. Our findings show that the structure of the latent space, rather than variational constraints, is crucial for effective diffusion models. Code and trained models are released.

MAE를 사용한 이미지 Semantic Tokenizer. Latent Code의 모드의 수가 생성 퀄리티와 반비례한다는 가설이 흥미롭네요.

An semantic image tokenizer using MAE. The hypothesis that the number of modes in latent codes is inversely proportional to generation quality is intriguing.

#tokenizer

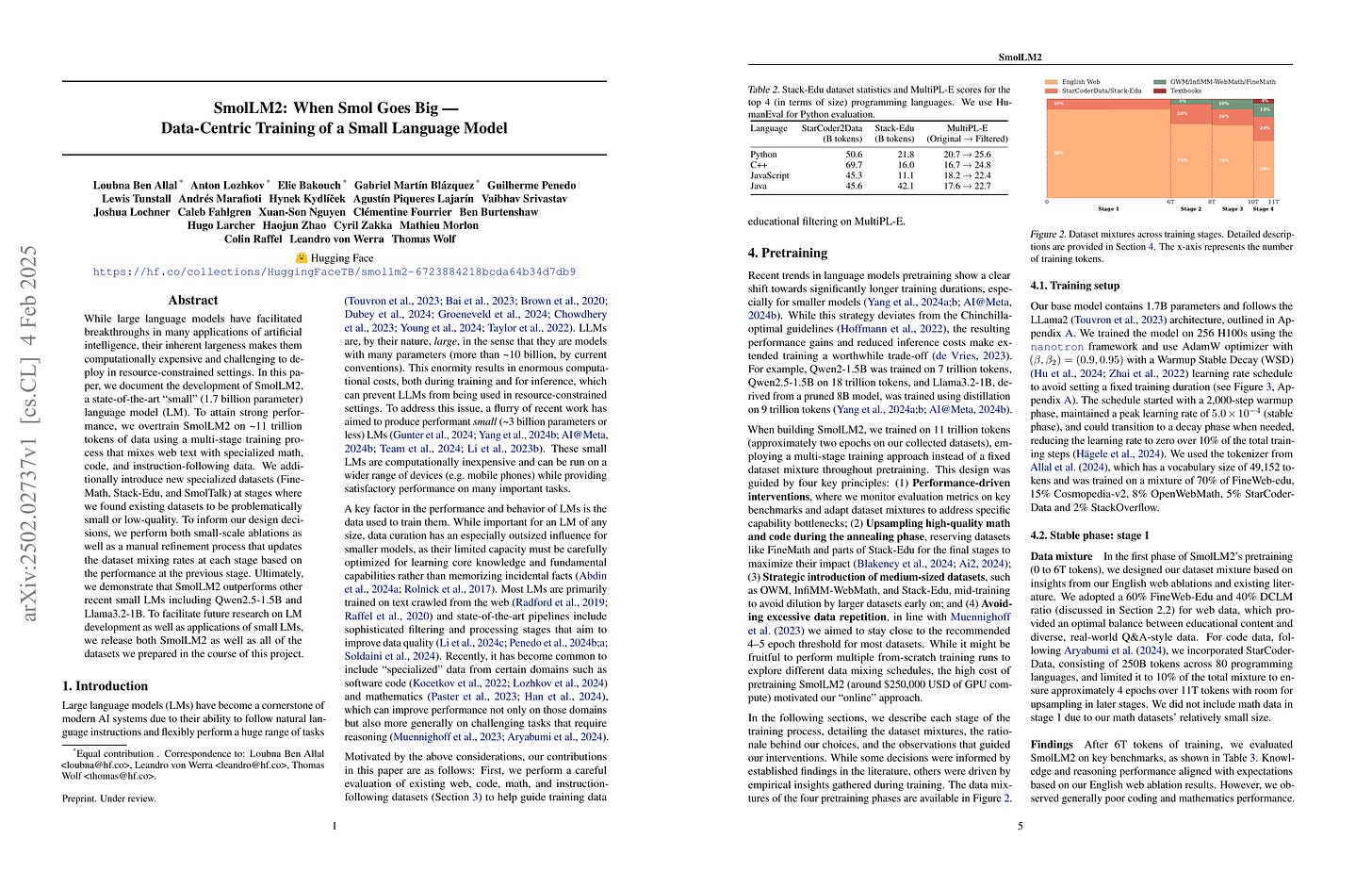

SmolLM2: When Smol Goes Big -- Data-Centric Training of a Small Language Model

(Loubna Ben Allal, Anton Lozhkov, Elie Bakouch, Gabriel Martín Blázquez, Guilherme Penedo, Lewis Tunstall, Andrés Marafioti, Hynek Kydlíček, Agustín Piqueres Lajarín, Vaibhav Srivastav, Joshua Lochner, Caleb Fahlgren, Xuan-Son Nguyen, Clémentine Fourrier, Ben Burtenshaw, Hugo Larcher, Haojun Zhao, Cyril Zakka, Mathieu Morlon, Colin Raffel, Leandro von Werra, Thomas Wolf)

While large language models have facilitated breakthroughs in many applications of artificial intelligence, their inherent largeness makes them computationally expensive and challenging to deploy in resource-constrained settings. In this paper, we document the development of SmolLM2, a state-of-the-art "small" (1.7 billion parameter) language model (LM). To attain strong performance, we overtrain SmolLM2 on ~11 trillion tokens of data using a multi-stage training process that mixes web text with specialized math, code, and instruction-following data. We additionally introduce new specialized datasets (FineMath, Stack-Edu, and SmolTalk) at stages where we found existing datasets to be problematically small or low-quality. To inform our design decisions, we perform both small-scale ablations as well as a manual refinement process that updates the dataset mixing rates at each stage based on the performance at the previous stage. Ultimately, we demonstrate that SmolLM2 outperforms other recent small LMs including Qwen2.5-1.5B and Llama3.2-1B. To facilitate future research on LM development as well as applications of small LMs, we release both SmolLM2 as well as all of the datasets we prepared in the course of this project.

SmolLM2의 리포트가 나왔군요. 수학 데이터를 추가 수집하고 코드 데이터를 필터링한 데이터셋을 새로 구축했네요.

The report on SmolLM2 has been released. They have constructed a new dataset by collecting additional mathematics data and filtering code data.

#lm #pretraining