February 4, 2025

The Surprising Agreement Between Convex Optimization Theory and Learning-Rate Scheduling for Large Model Training

(Fabian Schaipp, Alexander Hägele, Adrien Taylor, Umut Simsekli, Francis Bach)

We show that learning-rate schedules for large model training behave surprisingly similarly to a performance bound from non-smooth convex optimization theory. We provide a bound for the constant schedule with linear cooldown; in particular, the practical benefit of cooldown is reflected in the bound due to the absence of logarithmic terms. Further, we show that this surprisingly close match between optimization theory and practice can be exploited for learning-rate tuning: we achieve noticeable improvements for training 124M and 210M Llama-type models by (i) extending the schedule for continued training with the optimal learning rate, and (ii) transferring the optimal learning rate across schedules.

This analysis shows that the last-iterate bound (https://arxiv.org/abs/2310.07831) has a shape similar to the loss curve observed during model training. Building on this, the authors analyze questions such as what fraction of training the cooldown should occupy.

It reminds me of the scaling law for LR annealing (https://arxiv.org/abs/2408.11029).
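For reference, here is a minimal sketch of the schedule the abstract analyzes: a constant learning rate followed by a linear cooldown to zero. The function name `wsd_lr` and the `cooldown_frac` parameter are my own illustrative choices, not the paper's code, and warmup is left out for simplicity.

```python
def wsd_lr(step, total_steps, base_lr, cooldown_frac=0.2):
    """Constant learning rate, then a linear cooldown to zero.

    cooldown_frac is the share of total_steps spent cooling down
    (a hypothetical parameter name chosen for this sketch).
    """
    cooldown_steps = round(total_steps * cooldown_frac)
    cooldown_start = total_steps - cooldown_steps
    # Constant phase (also covers the no-cooldown case).
    if step < cooldown_start or cooldown_steps == 0:
        return base_lr
    # Linear decay from base_lr at cooldown_start to 0 at total_steps.
    remaining = max(total_steps - step, 0)
    return base_lr * remaining / cooldown_steps


if __name__ == "__main__":
    for step in (0, 50, 80, 90, 100):
        print(step, wsd_lr(step, total_steps=100, base_lr=3e-4))
```

Varying `cooldown_frac` is the knob that corresponds to the cooldown-proportion question discussed above.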

#optimizer

Process Reinforcement through Implicit Rewards

(Ganqu Cui, Lifan Yuan, Zefan Wang, Hanbin Wang, Wendi Li, Bingxiang He, Yuchen Fan, Tianyu Yu, Qixin Xu, Weize Chen, Jiarui Yuan, Huayu Chen, Kaiyan Zhang, Xingtai Lv, Shuo Wang, Yuan Yao, Xu Han, Hao Peng, Yu Cheng, Zhiyuan Liu, Maosong Sun, Bowen Zhou, Ning Ding)

Dense process rewards have proven a more effective alternative to the sparse outcome-level rewards in the inference-time scaling of large language models (LLMs), particularly in tasks requiring complex multi-step reasoning. While dense rewards also offer an appealing choice for the reinforcement learning (RL) of LLMs since their fine-grained rewards have the potential to address some inherent issues of outcome rewards, such as training efficiency and credit assignment, this potential remains largely unrealized. This can be primarily attributed to the challenges of training process reward models (PRMs) online, where collecting high-quality process labels is prohibitively expensive, making them particularly vulnerable to reward hacking. To address these challenges, we propose PRIME (Process Reinforcement through IMplicit rEwards), which enables online PRM updates using only policy rollouts and outcome labels through implicit process rewards. PRIME combines well with various advantage functions and forgoes the dedicated reward model training phase that existing approaches require, substantially reducing the development overhead. We demonstrate PRIME's effectiveness on competition-level math and coding. Starting from Qwen2.5-Math-7B-Base, PRIME achieves a 15.1% average improvement across several key reasoning benchmarks over the SFT model. Notably, our resulting model, Eurus-2-7B-PRIME, surpasses Qwen2.5-Math-7B-Instruct on seven reasoning benchmarks with 10% of its training data.

A report on PRIME, a project that trains models with an implicit PRM (https://arxiv.org/abs/2412.01981). The key point is that an implicit PRM can be updated online, which helps prevent reward hacking.

It is often said that mistakes are not a problem as long as the model can correct them. In that light, I'm curious what rewards an implicit PRM would assign to such mistakes.
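To make "implicit process reward" concrete, here is a rough sketch based on my understanding of the implicit PRM formulation: the reward for each token is a scaled log-ratio between the reward model and a reference model, so token-level rewards fall out of a model trained only on outcome labels. The function name, the `beta` value, and the example log-probabilities are all illustrative assumptions, not taken from the PRIME code.

```python
def implicit_process_rewards(logp_prm, logp_ref, beta=0.05):
    """Per-token rewards r_t = beta * (log p_prm(y_t | y_<t) - log p_ref(y_t | y_<t)).

    logp_prm / logp_ref: per-token log-probabilities of the same response
    under the implicit PRM and under the reference model (assumed inputs).
    """
    if len(logp_prm) != len(logp_ref):
        raise ValueError("both models must score the same tokens")
    return [beta * (a - b) for a, b in zip(logp_prm, logp_ref)]


if __name__ == "__main__":
    # Made-up log-probabilities for a three-token response.
    rewards = implicit_process_rewards([-0.2, -1.0, -0.1], [-0.5, -0.7, -0.9])
    print(rewards)       # one dense reward per token
    print(sum(rewards))  # equals beta times the sequence-level log-ratio
```

Under this formulation, a token the PRM finds less likely than the reference model does (the second token here) receives a negative reward, which is where a reward for a "mistake" would show up.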

#reward-model #reasoning #rl

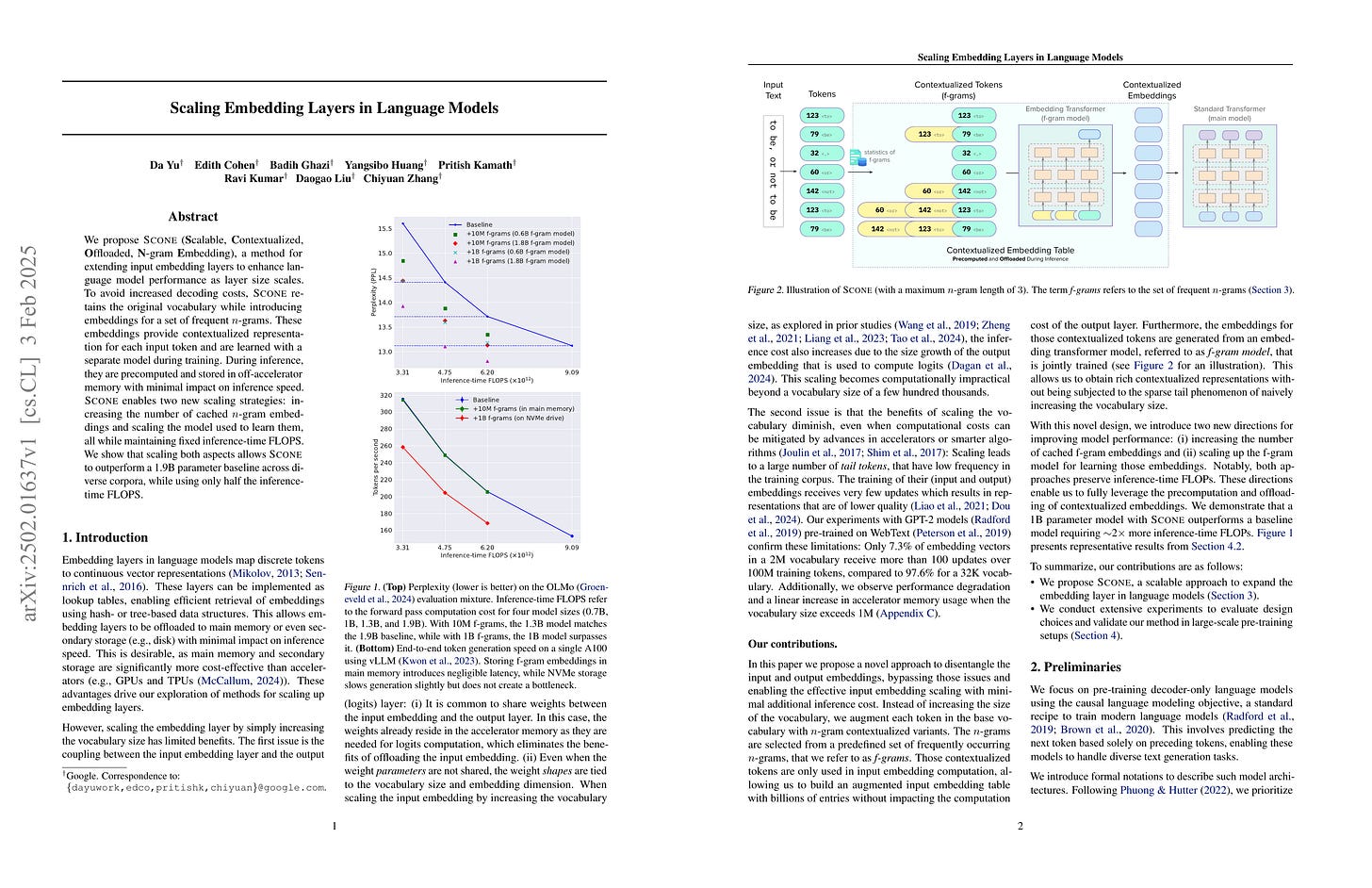

Scaling Embedding Layers in Language Models

(Da Yu, Edith Cohen, Badih Ghazi, Yangsibo Huang, Pritish Kamath, Ravi Kumar, Daogao Liu, Chiyuan Zhang)

We propose SCONE (Scalable, Contextualized, Offloaded, N-gram Embedding), a method for extending input embedding layers to enhance language model performance as layer size scales. To avoid increased decoding costs, SCONE retains the original vocabulary while introducing embeddings for a set of frequent n-grams. These embeddings provide contextualized representation for each input token and are learned with a separate model during training. During inference, they are precomputed and stored in off-accelerator memory with minimal impact on inference speed. SCONE enables two new scaling strategies: increasing the number of cached n-gram embeddings and scaling the model used to learn them, all while maintaining fixed inference-time FLOPS. We show that scaling both aspects allows SCONE to outperform a 1.9B parameter baseline across diverse corpora, while using only half the inference-time FLOPS.

A model that uses n-gram embeddings. A similar idea appeared recently (https://arxiv.org/abs/2501.16975). This approach has a transformer produce the n-gram embeddings. The idea is that this is acceptable at inference time because the n-gram embeddings can be precomputed and cached.
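The inference-time side of this idea can be sketched as a table lookup: for each position, find the longest cached n-gram ending at that token and use its precomputed embedding, falling back to the ordinary token embedding otherwise. This is a simplified illustration, not SCONE's actual implementation; in particular, the cached vector simply replaces the token embedding here, and all names (`cache`, `token_emb`, `max_n`) are hypothetical.

```python
def longest_cached_ngram(tokens, pos, cache, max_n=3):
    """Return the longest n-gram (n >= 2) ending at pos that is in the cache."""
    for n in range(min(max_n, pos + 1), 1, -1):
        key = tuple(tokens[pos - n + 1 : pos + 1])
        if key in cache:
            return key
    return None


def input_embeddings(tokens, token_emb, cache, max_n=3):
    """Pick a cached n-gram embedding per position, else the token embedding."""
    out = []
    for pos, tok in enumerate(tokens):
        key = longest_cached_ngram(tokens, pos, cache, max_n)
        out.append(cache[key] if key is not None else token_emb[tok])
    return out


if __name__ == "__main__":
    token_emb = {"new": [1.0], "york": [2.0], "city": [3.0], "is": [4.0]}
    # In the real method these vectors would be produced offline by a
    # separate model; here they are made-up placeholders.
    cache = {("new", "york"): [10.0], ("new", "york", "city"): [20.0]}
    print(input_embeddings(["new", "york", "city", "is"], token_emb, cache))
```

Because the lookup is a dictionary access, growing the cache adds memory but no accelerator FLOPs, which matches the trade-off the abstract describes.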

#embedding #lm

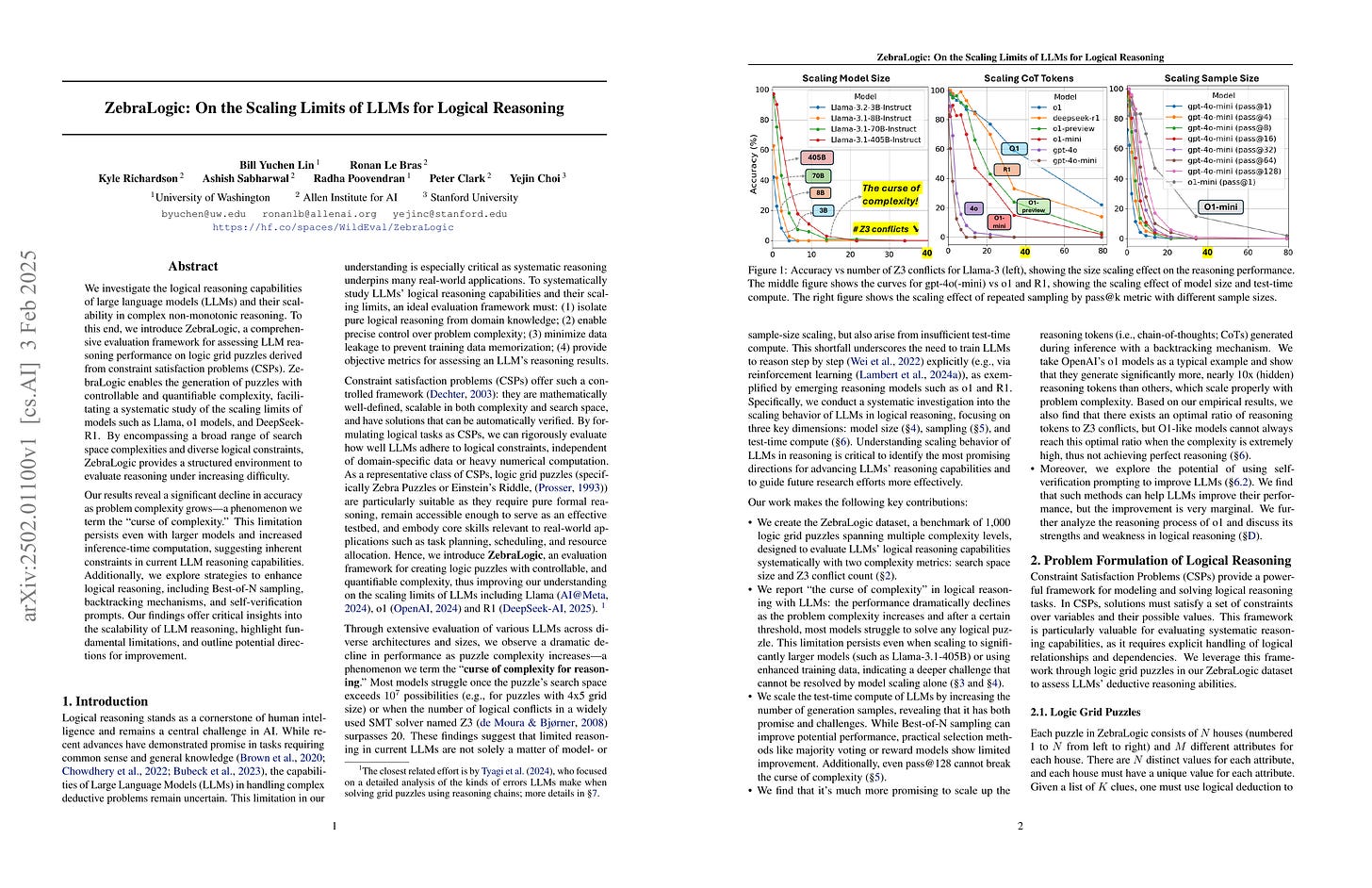

ZebraLogic: On the Scaling Limits of LLMs for Logical Reasoning

(Bill Yuchen Lin, Ronan Le Bras, Kyle Richardson, Ashish Sabharwal, Radha Poovendran, Peter Clark, Yejin Choi)

We investigate the logical reasoning capabilities of large language models (LLMs) and their scalability in complex non-monotonic reasoning. To this end, we introduce ZebraLogic, a comprehensive evaluation framework for assessing LLM reasoning performance on logic grid puzzles derived from constraint satisfaction problems (CSPs). ZebraLogic enables the generation of puzzles with controllable and quantifiable complexity, facilitating a systematic study of the scaling limits of models such as Llama, o1 models, and DeepSeek-R1. By encompassing a broad range of search space complexities and diverse logical constraints, ZebraLogic provides a structured environment to evaluate reasoning under increasing difficulty. Our results reveal a significant decline in accuracy as problem complexity grows -- a phenomenon we term the curse of complexity. This limitation persists even with larger models and increased inference-time computation, suggesting inherent constraints in current LLM reasoning capabilities. Additionally, we explore strategies to enhance logical reasoning, including Best-of-N sampling, backtracking mechanisms, and self-verification prompts. Our findings offer critical insights into the scalability of LLM reasoning, highlight fundamental limitations, and outline potential directions for improvement.

A benchmark built from generated logic puzzles. Logic problems are a domain where generation can be considered in addition to evaluation (https://arxiv.org/abs/2411.12498). It might also be usable for training reasoning models.
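As a toy illustration of why such puzzles are easy to generate and grade automatically, the sketch below treats a logic grid puzzle as a constraint satisfaction problem and brute-forces it. The puzzle, the helper names, and the use of (N!)^M as the search-space size for N houses and M attributes are my own illustrative choices rather than the benchmark's code.

```python
from itertools import permutations, product
from math import factorial


def search_space_size(n_houses, n_attributes):
    """Number of candidate grids: one permutation of houses per attribute."""
    return factorial(n_houses) ** n_attributes


def solve(attributes, constraints):
    """Enumerate all assignments (value -> house index) meeting every constraint."""
    names = list(attributes)
    n = len(attributes[names[0]])
    solutions = []
    for perms in product(*(permutations(range(n)) for _ in names)):
        house_of = {}
        for name, perm in zip(names, perms):
            for value, house in zip(attributes[name], perm):
                house_of[value] = house
        if all(check(house_of) for check in constraints):
            solutions.append(house_of)
    return solutions


if __name__ == "__main__":
    attributes = {
        "color": ["red", "green", "blue"],
        "pet": ["cat", "dog", "fish"],
    }
    constraints = [
        lambda h: h["red"] == 0,                # the red house is first
        lambda h: h["green"] + 1 == h["blue"],  # green is directly left of blue
        lambda h: h["cat"] == h["green"],       # the cat lives in the green house
        lambda h: h["dog"] != 0,                # the dog is not in the first house
    ]
    print(search_space_size(3, 2))
    print(solve(attributes, constraints))
```

A generator can keep adding clues until `solve` returns exactly one solution, and grading a model's answer is then a plain equality check against that solution.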

#reasoning #synthetic-data #benchmark

Self-Improving Transformers Overcome Easy-to-Hard and Length Generalization Challenges

(Nayoung Lee, Ziyang Cai, Avi Schwarzschild, Kangwook Lee, Dimitris Papailiopoulos)

Large language models often struggle with length generalization and solving complex problem instances beyond their training distribution. We present a self-improvement approach where models iteratively generate and learn from their own solutions, progressively tackling harder problems while maintaining a standard transformer architecture. Across diverse tasks including arithmetic, string manipulation, and maze solving, self-improving enables models to solve problems far beyond their initial training distribution, for instance generalizing from 10-digit to 100-digit addition without apparent saturation. We observe that in some cases filtering for correct self-generated examples leads to exponential improvements in out-of-distribution performance across training rounds. Additionally, starting from pretrained models significantly accelerates this self-improvement process for several tasks. Our results demonstrate how controlled weak-to-strong curricula can systematically teach a model logical extrapolation without any changes to the positional embeddings, or the model architecture.

A method that attempts length generalization on problems such as arithmetic. The model is first trained on short problems; its generated solutions for longer problems are then filtered by length and majority voting and used for further training. It reminds me of an earlier study (https://arxiv.org/abs/2309.08589) that progressively increased length using chain of thought.
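The filtering step can be sketched roughly as follows. This is a hedged illustration of length filtering plus majority voting, not the authors' implementation: the thresholds, the function names, and the choice to compute agreement only over candidates that pass the length filter are all assumptions of mine.

```python
from collections import Counter


def majority_vote(candidates, min_agreement=0.6):
    """Return the most common answer if enough candidates agree, else None."""
    answer, count = Counter(candidates).most_common(1)[0]
    return answer if count / len(candidates) >= min_agreement else None


def filter_self_generated(samples, min_length, min_agreement=0.6):
    """Keep one pseudo-label per prompt that passes both filters.

    samples: dict mapping a prompt to a list of model-generated answers.
    min_length: answers shorter than this are discarded as likely truncated.
    """
    kept = {}
    for prompt, candidates in samples.items():
        long_enough = [c for c in candidates if len(c) >= min_length]
        if not long_enough:
            continue
        answer = majority_vote(long_enough, min_agreement)
        if answer is not None:
            kept[prompt] = answer
    return kept


if __name__ == "__main__":
    samples = {
        "a": ["12345", "12345", "12345", "123"],  # agreement after length filter
        "b": ["11111", "22222", "33333"],         # no agreement
        "c": ["1", "2"],                          # everything too short
    }
    print(filter_self_generated(samples, min_length=5))
```

The surviving prompt and answer pairs would then be added to the training set for the next round, with the problem length increased slightly each time.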

#extrapolation #synthetic-data #self-improvement