2025년 2월 3일

s1: Simple test-time scaling

(Niklas Muennighoff, Zitong Yang, Weijia Shi, Xiang Lisa Li, Li Fei-Fei, Hannaneh Hajishirzi, Luke Zettlemoyer, Percy Liang, Emmanuel Candès, Tatsunori Hashimoto)

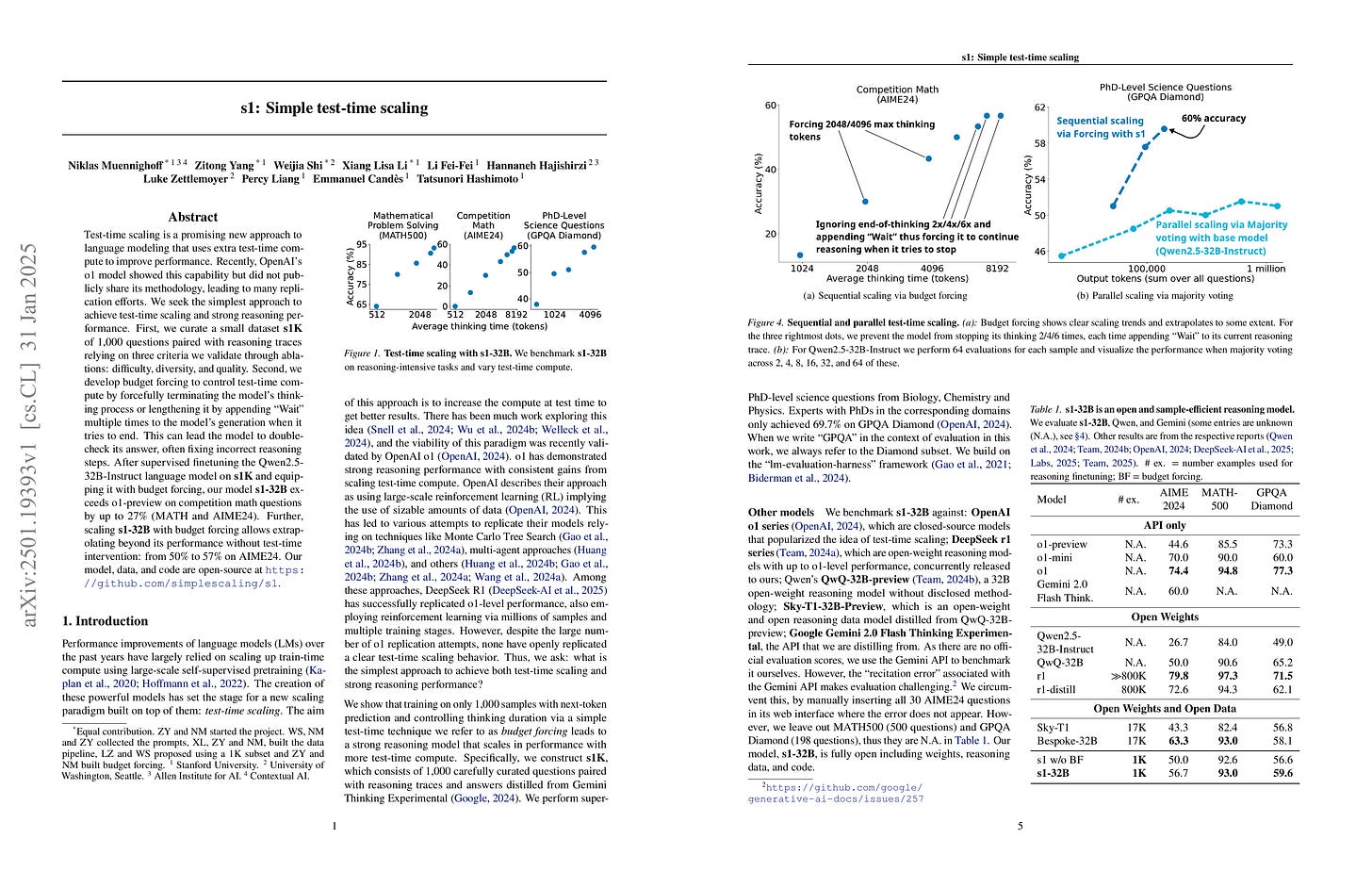

Test-time scaling is a promising new approach to language modeling that uses extra test-time compute to improve performance. Recently, OpenAI's o1 model showed this capability but did not publicly share its methodology, leading to many replication efforts. We seek the simplest approach to achieve test-time scaling and strong reasoning performance. First, we curate a small dataset s1K of 1,000 questions paired with reasoning traces relying on three criteria we validate through ablations: difficulty, diversity, and quality. Second, we develop budget forcing to control test-time compute by forcefully terminating the model's thinking process or lengthening it by appending "Wait" multiple times to the model's generation when it tries to end. This can lead the model to double-check its answer, often fixing incorrect reasoning steps. After supervised finetuning the Qwen2.5-32B-Instruct language model on s1K and equipping it with budget forcing, our model s1 exceeds o1-preview on competition math questions by up to 27% (MATH and AIME24). Further, scaling s1 with budget forcing allows extrapolating beyond its performance without test-time intervention: from 50% to 57% on AIME24. Our model, data, and code are open-source at https://github.com/simplescaling/s1.

추론 과정이 포함된 1K 샘플로 학습시킨 다음 CoT의 길이를 강제로 변경하는 디코딩 방법으로 Inference-time Scaling. 생성을 바로 종료하려는 경향만 억제해도 흥미로운 패턴이 생긴다는 결과가 다시 생각나는군요. (https://arxiv.org/abs/2402.10200)

그나저나 o1 이후로 X1 스타일의 작명이 유행하는군요.

Inference-time scaling by training on 1K samples containing reasoning steps and then using a decoding method that forcibly alters the length of the CoT. This reminds me of the interesting result where simply suppressing the model's tendency to stop generation prematurely can lead to intriguing reasoning patterns. (https://arxiv.org/abs/2402.10200)

By the way, it seems the "X1" style of naming has become trendy since the introduction of o1.

#reasoning #inference-time-scaling

Scalable-Softmax Is Superior for Attention

(Ken M. Nakanishi)

The maximum element of the vector output by the Softmax function approaches zero as the input vector size increases. Transformer-based language models rely on Softmax to compute attention scores, causing the attention distribution to flatten as the context size grows. This reduces the model's ability to prioritize key information effectively and potentially limits its length generalization. To address this problem, we propose Scalable-Softmax (SSMax), which replaces Softmax in scenarios where the input vector size varies. SSMax can be seamlessly integrated into existing Transformer-based architectures. Experimental results in language modeling show that models using SSMax not only achieve faster loss reduction during pretraining but also significantly improve performance in long contexts and key information retrieval. Furthermore, an analysis of attention scores reveals that SSMax enables the model to focus attention on key information even in long contexts. Additionally, although models that use SSMax from the beginning of pretraining achieve better length generalization, those that have already started pretraining can still gain some of this ability by replacing Softmax in the attention layers with SSMax, either during or after pretraining.

Softmax의 Logit에 길이에 따라 증가하는 계수 log n을 붙여 엔트로피 증가를 억제하는 방식으로 Long Context Extrapolation을 시도.

This paper attempts long context extrapolation by suppressing the increase in entropy. It does this by adding a scalar (log n) that increases with sequence length to the logits in the softmax.

#long-context

SETS: Leveraging Self-Verification and Self-Correction for Improved Test-Time Scaling

(Jiefeng Chen, Jie Ren, Xinyun Chen, Chengrun Yang, Ruoxi Sun, Sercan Ö Arık)

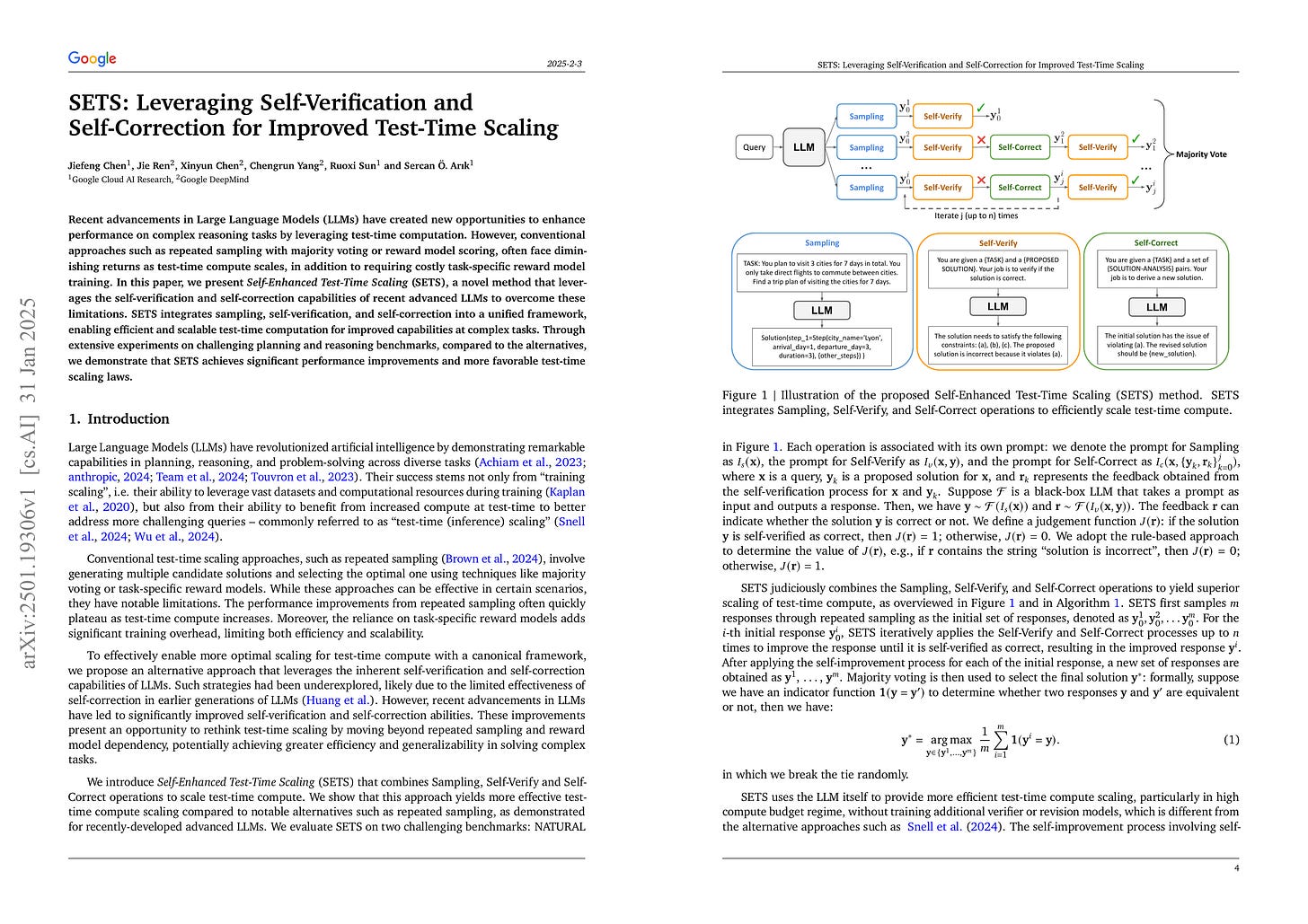

Recent advancements in Large Language Models (LLMs) have created new opportunities to enhance performance on complex reasoning tasks by leveraging test-time computation. However, conventional approaches such as repeated sampling with majority voting or reward model scoring, often face diminishing returns as test-time compute scales, in addition to requiring costly task-specific reward model training. In this paper, we present Self-Enhanced Test-Time Scaling (SETS), a novel method that leverages the self-verification and self-correction capabilities of recent advanced LLMs to overcome these limitations. SETS integrates sampling, self-verification, and self-correction into a unified framework, enabling efficient and scalable test-time computation for improved capabilities at complex tasks. Through extensive experiments on challenging planning and reasoning benchmarks, compared to the alternatives, we demonstrate that SETS achieves significant performance improvements and more favorable test-time scaling laws.

샘플링, Self Verification, Self Correction, Majority Voting을 합친 Inference-time Scaling 방법.

An inference-time scaling method that combines sampling, self-verification, self-correction, and majority voting.

#inference-time-scaling

Decoding-based Regression

(Xingyou Song, Dara Bahri)

Language models have recently been shown capable of performing regression tasks wherein numeric predictions are represented as decoded strings. In this work, we provide theoretical grounds for this capability and furthermore investigate the utility of causal auto-regressive sequence models when they are applied to any feature representation. We find that, despite being trained in the usual way - for next-token prediction via cross-entropy loss - decoding-based regression is as performant as traditional approaches for tabular regression tasks, while being flexible enough to capture arbitrary distributions, such as in the task of density estimation.

회귀 문제에 대해 각 자릿수의 값을 하나씩 생성하는 형태의 Autoregression으로 대응.

An approach to regression problems using autoregressive models by generating each digit of the output value as a separate token.

#autoregressive-model

Constitutional Classifiers: Defending against Universal Jailbreaks across Thousands of Hours of Red Teaming

(Mrinank Sharma, Meg Tong, Jesse Mu, Jerry Wei, Jorrit Kruthoff, Scott Goodfriend, Euan Ong, Alwin Peng, Raj Agarwal, Cem Anil, Amanda Askell, Nathan Bailey, Joe Benton, Emma Bluemke, Samuel R. Bowman, Eric Christiansen, Hoagy Cunningham, Andy Dau, Anjali Gopal, Rob Gilson, Logan Graham, Logan Howard, Nimit Kalra, Taesung Lee, Kevin Lin, Peter Lofgren, Francesco Mosconi, Clare O'Hara, Catherine Olsson, Linda Petrini, Samir Rajani, Nikhil Saxena, Alex Silverstein, Tanya Singh, Theodore Sumers, Leonard Tang, Kevin K. Troy, Constantin Weisser, Ruiqi Zhong, Giulio Zhou, Jan Leike, Jared Kaplan, Ethan Perez)

Large language models (LLMs) are vulnerable to universal jailbreaks-prompting strategies that systematically bypass model safeguards and enable users to carry out harmful processes that require many model interactions, like manufacturing illegal substances at scale. To defend against these attacks, we introduce Constitutional Classifiers: safeguards trained on synthetic data, generated by prompting LLMs with natural language rules (i.e., a constitution) specifying permitted and restricted content. In over 3,000 estimated hours of red teaming, no red teamer found a universal jailbreak that could extract information from an early classifier-guarded LLM at a similar level of detail to an unguarded model across most target queries. On automated evaluations, enhanced classifiers demonstrated robust defense against held-out domain-specific jailbreaks. These classifiers also maintain deployment viability, with an absolute 0.38% increase in production-traffic refusals and a 23.7% inference overhead. Our work demonstrates that defending against universal jailbreaks while maintaining practical deployment viability is tractable.

Anthropic의 안전 분류 모델. Constitution 기반으로 프롬프트를 생성한 다음 학습시키는 Anthropic스러운 방법. 입력 분류 모델과 출력 분류 모델이 있는데 출력 분류 모델은 스트리밍에 대응해서 각 토큰에서 Value를 예측하는 방식이네요.

Anthropic's safeguard model. It's a very Anthropic-styled method that generates prompts based on a constitution and then trains the model. There are input and output classifier models, with the output classifier predicting values for each token to support streaming generation.

#safety

Rope to Nope and Back Again: A New Hybrid Attention Strategy

(Bowen Yang, Bharat Venkitesh, Dwarak Talupuru, Hangyu Lin, David Cairuz, Phil Blunsom, Acyr Locatelli)

Long-context large language models (LLMs) have achieved remarkable advancements, driven by techniques like Rotary Position Embedding (RoPE) (Su et al., 2023) and its extensions (Chen et al., 2023; Liu et al., 2024c; Peng et al., 2023). By adjusting RoPE parameters and incorporating training data with extended contexts, we can train performant models with considerably longer input sequences. However, existing RoPE-based methods exhibit performance limitations when applied to extended context lengths. This paper presents a comprehensive analysis of various attention mechanisms, including RoPE, No Positional Embedding (NoPE), and Query-Key Normalization (QK-Norm), identifying their strengths and shortcomings in long-context modeling. Our investigation identifies distinctive attention patterns in these methods and highlights their impact on long-context performance, providing valuable insights for architectural design. Building on these findings, we propose a novel architectural based on a hybrid attention mechanism that not only surpasses conventional RoPE-based transformer models in long context tasks but also achieves competitive performance on benchmarks requiring shorter context lengths.

RoPE, NoPE, 그리고 QK Norm의 Long Context 특성에 대한 분석. QK Norm이 Attention의 엔트로피를 높인다는 문제를 지적하고 있네요.

Analysis of the long-context characteristics of RoPE, NoPE, and QK Norm. The paper points out that QK Norm increases the entropy of attention.

#long-context #positional-encoding

The Energy Loss Phenomenon in RLHF: A New Perspective on Mitigating Reward Hacking

(Yuchun Miao, Sen Zhang, Liang Ding, Yuqi Zhang, Lefei Zhang, Dacheng Tao)

This work identifies the Energy Loss Phenomenon in Reinforcement Learning from Human Feedback (RLHF) and its connection to reward hacking. Specifically, energy loss in the final layer of a Large Language Model (LLM) gradually increases during the RL process, with an excessive increase in energy loss characterizing reward hacking. Beyond empirical analysis, we further provide a theoretical foundation by proving that, under mild conditions, the increased energy loss reduces the upper bound of contextual relevance in LLMs, which is a critical aspect of reward hacking as the reduced contextual relevance typically indicates overfitting to reward model-favored patterns in RL. To address this issue, we propose an Energy loss-aware PPO algorithm (EPPO) which penalizes the increase in energy loss in the LLM's final layer during reward calculation to prevent excessive energy loss, thereby mitigating reward hacking. We theoretically show that EPPO can be conceptually interpreted as an entropy-regularized RL algorithm, which provides deeper insights into its effectiveness. Extensive experiments across various LLMs and tasks demonstrate the commonality of the energy loss phenomenon, as well as the effectiveness of EPPO in mitigating reward hacking and improving RLHF performance.

RLHF 과정에서 마지막 레이어의 입력과 출력의 차이(Energy Loss)가 증가하는데, 이 Energy Loss의 급격한 증가가 Reward Hacking과 관련이 있다는 연구. 이를 억제해서 Reward Hacking을 감소시키려는 시도를 했습니다. 결과적으로는 Entropy regularization과 이어지네요.

This study shows that during the RLHF process, the difference between input and output of the last layer (energy loss) increases, and a rapid increase in this energy loss is associated with reward hacking. The researchers attempted to mitigate reward hacking by suppressing this increase. Their approach ultimately connects to entropy regularization.

#alignment #rlhf