2025년 2월 26일

DeepGEMM

(Chenggang Zhao and Liang Zhao and Jiashi Li and Zhean Xu)

DeepSeek이 오늘 공개한 건 FP8 GEMM 커널이군요. 논문에 언급됐던 Fine-grained Quantization과 고정밀도 Accumulation이 구현된 GEMM, 그리고 MoE를 위한 Grouped GEMM 커널입니다. 컴파일된 바이너리를 뜯어고치는 묘기까지 했네요.

Today's opensource release from DeepSeek is an FP8 GEMM kernel. It includes a GEMM and Grouped GEMM kernel for MoE, which implements fine-grained quantization and high-precision accumulation as mentioned in their paper. Impressively, they've even tried to modify the compiled binary for efficiency.

#efficiency

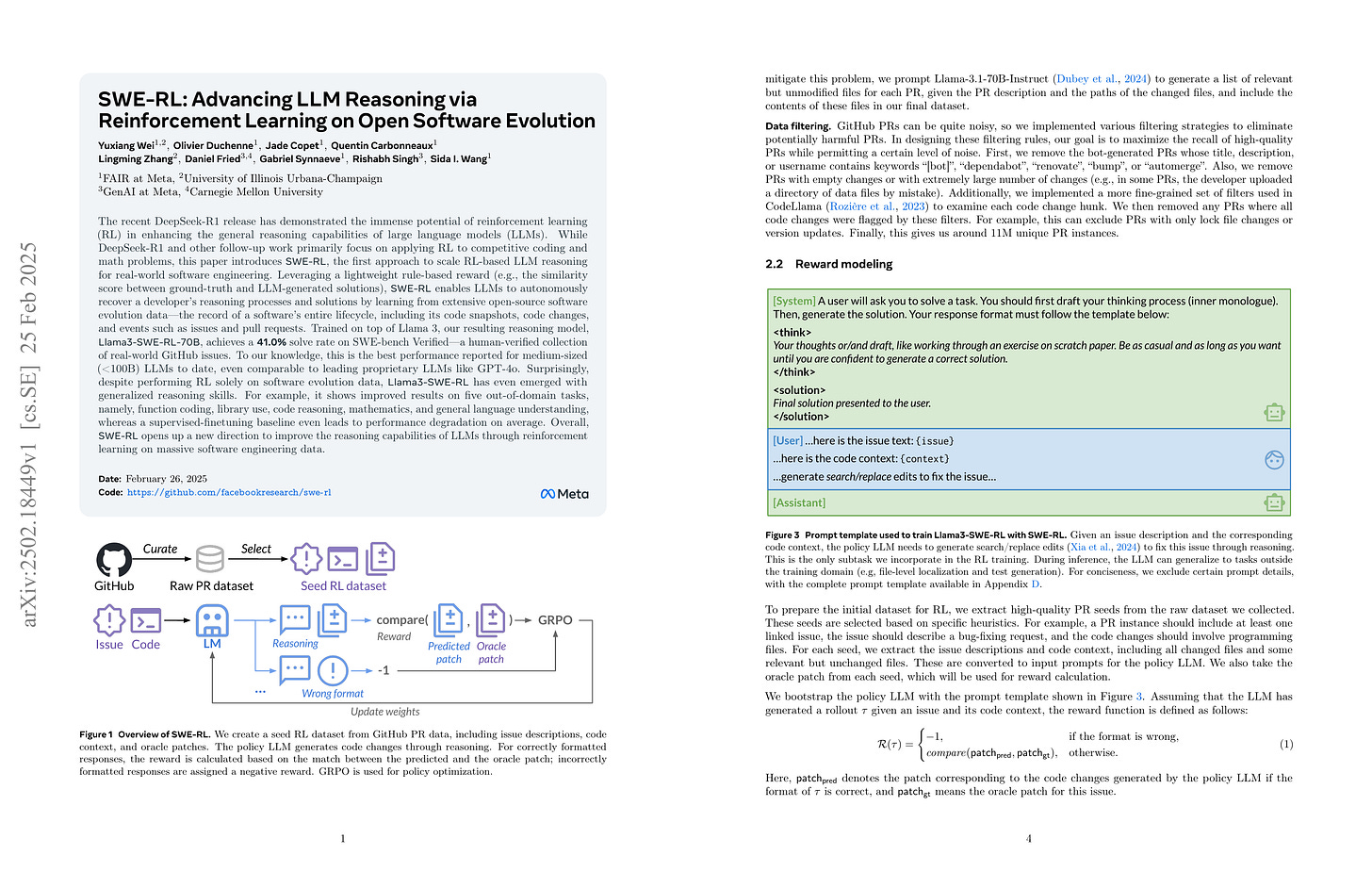

SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution

(Yuxiang Wei, Olivier Duchenne, Jade Copet, Quentin Carbonneaux, Lingming Zhang, Daniel Fried, Gabriel Synnaeve, Rishabh Singh, Sida I. Wang)

The recent DeepSeek-R1 release has demonstrated the immense potential of reinforcement learning (RL) in enhancing the general reasoning capabilities of large language models (LLMs). While DeepSeek-R1 and other follow-up work primarily focus on applying RL to competitive coding and math problems, this paper introduces SWE-RL, the first approach to scale RL-based LLM reasoning for real-world software engineering. Leveraging a lightweight rule-based reward (e.g., the similarity score between ground-truth and LLM-generated solutions), SWE-RL enables LLMs to autonomously recover a developer's reasoning processes and solutions by learning from extensive open-source software evolution data -- the record of a software's entire lifecycle, including its code snapshots, code changes, and events such as issues and pull requests. Trained on top of Llama 3, our resulting reasoning model, Llama3-SWE-RL-70B, achieves a 41.0% solve rate on SWE-bench Verified -- a human-verified collection of real-world GitHub issues. To our knowledge, this is the best performance reported for medium-sized (<100B) LLMs to date, even comparable to leading proprietary LLMs like GPT-4o. Surprisingly, despite performing RL solely on software evolution data, Llama3-SWE-RL has even emerged with generalized reasoning skills. For example, it shows improved results on five out-of-domain tasks, namely, function coding, library use, code reasoning, mathematics, and general language understanding, whereas a supervised-finetuning baseline even leads to performance degradation on average. Overall, SWE-RL opens up a new direction to improve the reasoning capabilities of LLMs through reinforcement learning on massive software engineering data.

GitHub PR 데이터를 사용한 추론 RL. 보상으로는 작성한 코드와 정답의 문자열 매칭을 사용했군요.

Reasoning RL using GitHub PR data. For the reward, they used string matching between the generated code and the ground truth.

#reasoning #rl #code

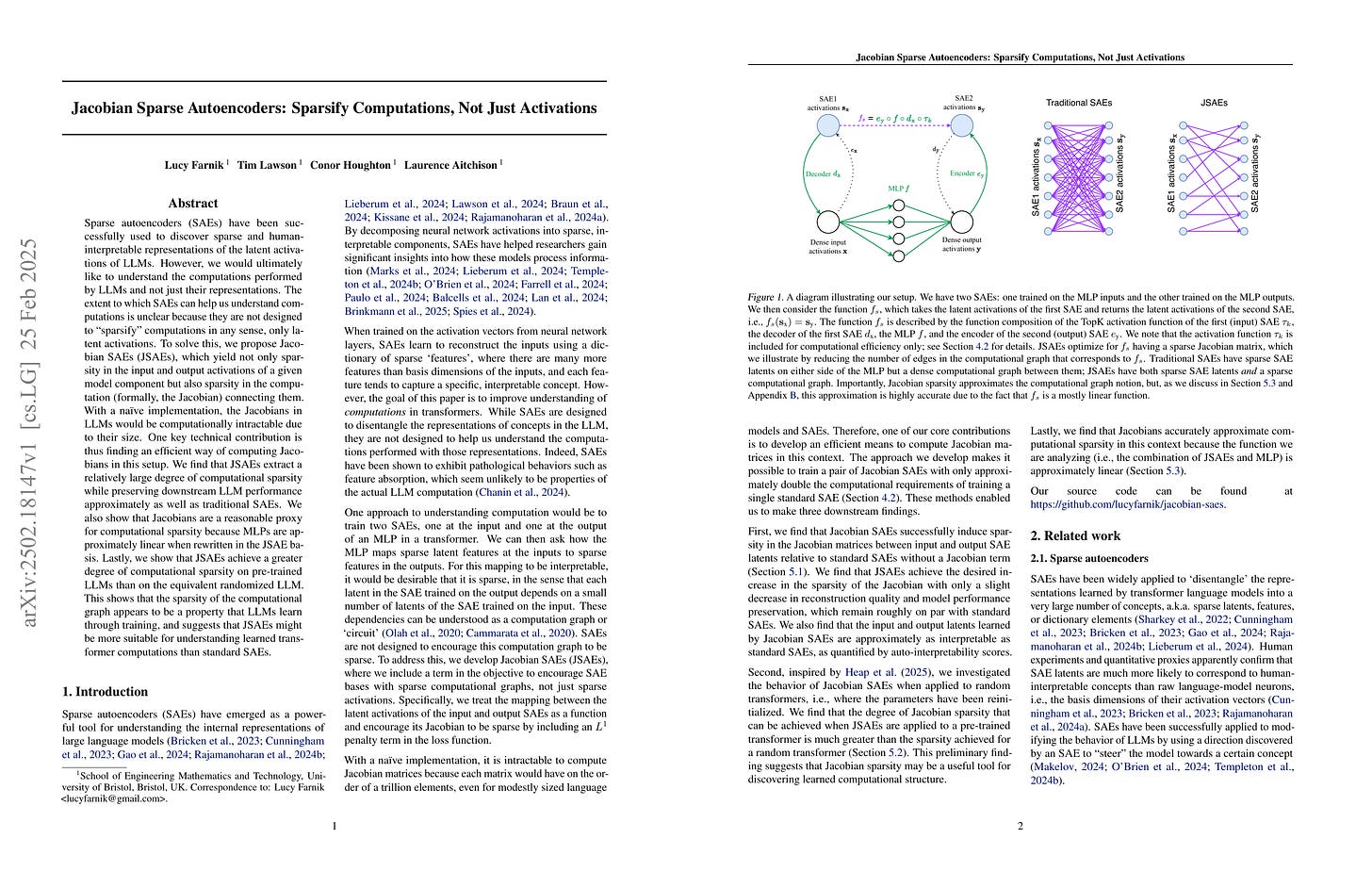

Jacobian Sparse Autoencoders: Sparsify Computations, Not Just Activations

(Lucy Farnik, Tim Lawson, Conor Houghton, Laurence Aitchison)

Sparse autoencoders (SAEs) have been successfully used to discover sparse and human-interpretable representations of the latent activations of LLMs. However, we would ultimately like to understand the computations performed by LLMs and not just their representations. The extent to which SAEs can help us understand computations is unclear because they are not designed to "sparsify" computations in any sense, only latent activations. To solve this, we propose Jacobian SAEs (JSAEs), which yield not only sparsity in the input and output activations of a given model component but also sparsity in the computation (formally, the Jacobian) connecting them. With a naïve implementation, the Jacobians in LLMs would be computationally intractable due to their size. One key technical contribution is thus finding an efficient way of computing Jacobians in this setup. We find that JSAEs extract a relatively large degree of computational sparsity while preserving downstream LLM performance approximately as well as traditional SAEs. We also show that Jacobians are a reasonable proxy for computational sparsity because MLPs are approximately linear when rewritten in the JSAE basis. Lastly, we show that JSAEs achieve a greater degree of computational sparsity on pre-trained LLMs than on the equivalent randomized LLM. This shows that the sparsity of the computational graph appears to be a property that LLMs learn through training, and suggests that JSAEs might be more suitable for understanding learned transformer computations than standard SAEs.

Sparse Autoencoder로 Latent를 분해하는 것을 넘어 모듈 내에서 일어나는 연산을 분해하겠다는 아이디어. 입력과 출력에 대해 SAE를 학습하면서 SAE Latent 사이의 Jacobian을 Sparse하게 만드는 방식으로 접근했네요.

This paper presents an idea that goes beyond decomposing latent representations using sparse autoencoders to decompose the computations occurring within a module. Their approach is to train SAEs on both the input and output of the module while making the jacobian between SAE latents sparse.

#mechanistic-interpretation