February 25, 2025

DeepEP

(Chenggang Zhao, Shangyan Zhou, Liyue Zhang, Chengqi Deng, Zhean Xu, Yuxuan Liu, Kuai Yu, Jiashi Li, Liang Zhao)

Day 2 of DeepSeek's open-source release is DeepEP, a communication library for expert parallelism, which was one of the most talked-about parts of DeepSeek V3.

#efficiency

Fractal Generative Models

(Tianhong Li, Qinyi Sun, Lijie Fan, Kaiming He)

Modularization is a cornerstone of computer science, abstracting complex functions into atomic building blocks. In this paper, we introduce a new level of modularization by abstracting generative models into atomic generative modules. Analogous to fractals in mathematics, our method constructs a new type of generative model by recursively invoking atomic generative modules, resulting in self-similar fractal architectures that we call fractal generative models. As a running example, we instantiate our fractal framework using autoregressive models as the atomic generative modules and examine it on the challenging task of pixel-by-pixel image generation, demonstrating strong performance in both likelihood estimation and generation quality. We hope this work could open a new paradigm in generative modeling and provide a fertile ground for future research. Code is available at https://github.com/LTH14/fractalgen.

A generative model that recursively splits a sequence and trains autoregressively within each split sub-sequence. Even the implementation is recursive.
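To make the "recursive even in implementation" point concrete, here is a minimal sketch of the control flow only. It assumes a toy setup: a sequence of length `length` is split into `branch` chunks, chunks are produced in order (autoregressively), and each chunk is produced by calling the same generator on a shorter sequence. The `leaf` function is a hypothetical stand-in for a real network; in the paper the modules are neural autoregressive models, which this sketch does not reproduce.

```python
from typing import Callable, List

# Hypothetical leaf "model": returns one value given everything generated so far.
# In a real fractal model this would be a small autoregressive network.
LeafModel = Callable[[List[int]], int]


def fractal_generate(length: int, branch: int, context: List[int], leaf: LeafModel) -> List[int]:
    """Generate `length` values by recursively splitting into `branch` chunks.

    Each level is autoregressive over its chunks: chunk i is generated after,
    and conditioned on, chunks 0..i-1 plus the outer context.
    """
    if length == 1:
        return [leaf(context)]
    assert length % branch == 0, "toy sketch assumes length is divisible by branch"
    chunk = length // branch
    out: List[int] = []
    for _ in range(branch):
        # Self-similar step: the same generator, applied to a shorter sub-sequence.
        out += fractal_generate(chunk, branch, context + out, leaf)
    return out
```

With a leaf that just returns the current context length, `fractal_generate(16, 4, [], lambda ctx: len(ctx))` walks the 16 positions in order through two levels of recursion, which shows that every value still sees all earlier values.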

#generative-model #autoregressive-model

Reasoning with Latent Thoughts: On the Power of Looped Transformers

(Nikunj Saunshi, Nishanth Dikkala, Zhiyuan Li, Sanjiv Kumar, Sashank J. Reddi)

Large language models have shown remarkable reasoning abilities and scaling laws suggest that large parameter count, especially along the depth axis, is the primary driver. In this work, we make a stronger claim -- many reasoning problems require a large depth but not necessarily many parameters. This unlocks a novel application of looped models for reasoning. Firstly, we show that for many synthetic reasoning problems like addition, p-hop induction, and math problems, a k-layer transformer looped L times nearly matches the performance of a kL-layer non-looped model, and is significantly better than a k-layer model. This is further corroborated by theoretical results showing that many such reasoning problems can be solved via iterative algorithms, and thus, can be solved effectively using looped models with nearly optimal depth. Perhaps surprisingly, these benefits also translate to practical settings of language modeling -- on many downstream reasoning tasks, a language model with k-layers looped L times can be competitive to, if not better than, a kL-layer language model. In fact, our empirical analysis reveals an intriguing phenomenon: looped and non-looped models exhibit scaling behavior that depends on their effective depth, akin to the inference-time scaling of chain-of-thought (CoT) reasoning. We further elucidate the connection to CoT reasoning by proving that looped models implicitly generate latent thoughts and can simulate T steps of CoT with T loops. Inspired by these findings, we also present an interesting dichotomy between reasoning and memorization, and design a looping-based regularization that is effective on both fronts.

Another study on looped transformers. The results show that, independently of perplexity, looping gives an advantage in reasoning ability. A regularization that pushes the weights of different layers to be similar was also reported to produce a similar effect.

Looped transformers keep coming up in connection with the idea of continuous thought. Personally, I suspect discrete thought may have the edge, since it can receive stronger guidance from pretraining. Still, it remains an intriguing topic.
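A minimal NumPy sketch of the two ideas above, under toy assumptions: each "layer" is a residual `x + tanh(x @ W)` standing in for a transformer block, a looped model reuses the same k layers L times, and the regularizer is assumed to be the mean cosine similarity between layer j of each group of k layers (my reading of "make the weights similar"; the paper's exact form may differ). When a kL-layer model's groups are identical, it computes exactly what the looped model computes and the regularizer reaches its maximum of 1.

```python
import numpy as np


def layer(x, W):
    # Toy residual "layer"; stands in for a full transformer block.
    return x + np.tanh(x @ W)


def looped_forward(x, block, loops):
    """Apply the same k-layer block `loops` times (effective depth k * loops)."""
    for _ in range(loops):
        for W in block:
            x = layer(x, W)
    return x


def deep_forward(x, layers):
    """Non-looped baseline with k * L distinct layers."""
    for W in layers:
        x = layer(x, W)
    return x


def looping_regularizer(layers, k):
    """Assumed form: mean cosine similarity between layer j of every group of k layers.

    Adding -strength * this value to the loss nudges a kL-layer model toward a looped one.
    """
    assert len(layers) % k == 0 and len(layers) >= 2 * k, "need at least two full groups"
    groups = [layers[i:i + k] for i in range(0, len(layers), k)]
    sims = []
    for j in range(k):
        flat = [g[j].ravel() for g in groups]
        for a in range(len(flat)):
            for b in range(a + 1, len(flat)):
                sims.append(flat[a] @ flat[b] / (np.linalg.norm(flat[a]) * np.linalg.norm(flat[b])))
    return float(np.mean(sims))
```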

#transformer #reasoning

Distributional Scaling Laws for Emergent Capabilities

(Rosie Zhao, Tian Qin, David Alvarez-Melis, Sham Kakade, Naomi Saphra)

In this paper, we explore the nature of sudden breakthroughs in language model performance at scale, which stands in contrast to smooth improvements governed by scaling laws. While advocates of "emergence" view abrupt performance gains as capabilities unlocking at specific scales, others have suggested that they are produced by thresholding effects and alleviated by continuous metrics. We propose that breakthroughs are instead driven by continuous changes in the probability distribution of training outcomes, particularly when performance is bimodally distributed across random seeds. In synthetic length generalization tasks, we show that different random seeds can produce either highly linear or emergent scaling trends. We reveal that sharp breakthroughs in metrics are produced by underlying continuous changes in their distribution across seeds. Furthermore, we provide a case study of inverse scaling and show that even as the probability of a successful run declines, the average performance of a successful run continues to increase monotonically. We validate our distributional scaling framework on realistic settings by measuring MMLU performance in LLM populations. These insights emphasize the role of random variation in the effect of scale on LLM capabilities.

An analysis suggesting that the apparently emergent capabilities of models can arise because model performance across random seeds is bimodally distributed. In other words, what matters is the distribution over trained models. An interesting insight.
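A toy simulation of the mechanism, not the paper's experiment: assume each seed ends up in one of two modes (accuracy 0.1 or 0.9, values chosen arbitrarily) and that the probability of landing in the good mode rises smoothly with scale (an assumed sigmoid in log-scale). The fraction of successful seeds and the mean move smoothly, while the median, which is what a single typical run looks like, jumps from the low mode to the high mode around p = 0.5.

```python
import numpy as np


def seed_population(p_success, n_seeds=1000, lo=0.1, hi=0.9, noise=0.02, seed=0):
    """Per-seed accuracies drawn from an assumed two-mode mixture."""
    rng = np.random.default_rng(seed)
    success = rng.random(n_seeds) < p_success  # which seeds land in the good mode
    return np.where(success, hi, lo) + noise * rng.normal(size=n_seeds)


if __name__ == "__main__":
    for log_scale in np.linspace(-3, 3, 7):
        p = 1 / (1 + np.exp(-log_scale))  # smooth, assumed success probability vs. scale
        acc = seed_population(p)
        print(f"p={p:.2f}  mean={acc.mean():.2f}  median={np.median(acc):.2f}")
```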

#scaling-law #llm

Function-Space Learning Rates

(Edward Milsom, Ben Anson, Laurence Aitchison)

We consider layerwise function-space learning rates, which measure the magnitude of the change in a neural network's output function in response to an update to a parameter tensor. This contrasts with traditional learning rates, which describe the magnitude of changes in parameter space. We develop efficient methods to measure and set function-space learning rates in arbitrary neural networks, requiring only minimal computational overhead through a few additional backward passes that can be performed at the start of, or periodically during, training. We demonstrate two key applications: (1) analysing the dynamics of standard neural network optimisers in function space, rather than parameter space, and (2) introducing FLeRM (Function-space Learning Rate Matching), a novel approach to hyperparameter transfer across model scales. FLeRM records function-space learning rates while training a small, cheap base model, then automatically adjusts parameter-space layerwise learning rates when training larger models to maintain consistent function-space updates. FLeRM gives hyperparameter transfer across model width, depth, initialisation scale, and LoRA rank in various architectures including MLPs with residual connections and transformers with different layer normalisation schemes.

The idea is to record how much each layer's update changes the output of a small model, and then use these records to adjust the layerwise learning rates when training larger models.
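A minimal sketch of the idea on a toy MLP, with simplifying assumptions. The function-space learning rate of layer l is measured here by directly re-evaluating the network with only layer l stepped and taking the RMS change in output; the paper instead uses a few extra backward passes, which this sketch does not implement. The matching step assumes the output change is roughly linear in the step size, so each parameter-space LR is rescaled by target / measured. All names are hypothetical.

```python
import numpy as np


def mlp(x, weights):
    # Toy MLP: tanh hidden layers, linear output layer.
    for W in weights[:-1]:
        x = np.tanh(x @ W)
    return x @ weights[-1]


def function_space_lr(x, weights, updates, lr):
    """RMS output change when only layer l takes its step W - lr[l] * U (direct re-evaluation)."""
    base = mlp(x, weights)
    out = []
    for l, (W, U) in enumerate(zip(weights, updates)):
        perturbed = list(weights)
        perturbed[l] = W - lr[l] * U
        out.append(float(np.sqrt(np.mean((mlp(x, perturbed) - base) ** 2))))
    return out


def match_lrs(lr, measured, target):
    """FLeRM-style rescaling, assuming output change is ~linear in the step size."""
    return [a * t / m for a, t, m in zip(lr, target, measured)]
```

In a FLeRM-like workflow, `target` would come from `function_space_lr` on the small base model, and `measured` from the larger model at its initial learning rates.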

#hyperparameter

Unveiling Downstream Performance Scaling of LLMs: A Clustering-Based Perspective

(Chengyin Xu, Kaiyuan Chen, Xiao Li, Ke Shen, Chenggang Li)

The rapid advancements in computing dramatically increase the scale and cost of training Large Language Models (LLMs). Accurately predicting downstream task performance prior to model training is crucial for efficient resource allocation, yet remains challenging due to two primary constraints: (1) the "emergence phenomenon", wherein downstream performance metrics become meaningful only after extensive training, which limits the ability to use smaller models for prediction; (2) Uneven task difficulty distributions and the absence of consistent scaling laws, resulting in substantial metric variability. Existing performance prediction methods suffer from limited accuracy and reliability, thereby impeding the assessment of potential LLM capabilities. To address these challenges, we propose a Clustering-On-Difficulty (COD) downstream performance prediction framework. COD first constructs a predictable support subset by clustering tasks based on difficulty features, strategically excluding non-emergent and non-scalable clusters. The scores on the selected subset serve as effective intermediate predictors of downstream performance on the full evaluation set. With theoretical support, we derive a mapping function that transforms performance metrics from the predictable subset to the full evaluation set, thereby ensuring accurate extrapolation of LLM downstream performance. The proposed method has been applied to predict performance scaling for a 70B LLM, providing actionable insights for training resource allocation and assisting in monitoring the training process. Notably, COD achieves remarkable predictive accuracy on the 70B LLM by leveraging an ensemble of small models, demonstrating an absolute mean deviation of 1.36% across eight important LLM evaluation benchmarks.

A task scaling law. The method clusters problems of similar difficulty, selects the clusters whose scores can be predicted well, and then extrapolates performance on the whole benchmark from the predictions on this subset.
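A rough sketch of the pipeline, with assumptions labeled: each sample's difficulty feature is taken to be its pass rate under a series of small models ordered by size (my assumption), clusters are kept only if their mean pass rate rises monotonically by at least `min_gain` (a simple stand-in for excluding non-emergent and non-scalable clusters), and the subset-to-full mapping is a plain linear fit rather than the mapping function the paper derives. Function names and thresholds are hypothetical.

```python
import numpy as np


def kmeans_by_difficulty(X, k, iters=50):
    """Lloyd's k-means, initialized with samples spread evenly by mean pass rate."""
    X = np.asarray(X, dtype=float)
    order = np.argsort(X.mean(axis=1))
    centers = X[order[np.linspace(0, len(X) - 1, k).astype(int)]]  # fancy indexing copies
    for _ in range(iters):
        labels = np.argmin(((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1), axis=1)
        for c in range(k):
            if np.any(labels == c):
                centers[c] = X[labels == c].mean(axis=0)
    return labels


def select_predictable(pass_rates, k=3, min_gain=0.05):
    """pass_rates: (n_samples, n_small_models), columns ordered by increasing model size."""
    pass_rates = np.asarray(pass_rates, dtype=float)
    labels = kmeans_by_difficulty(pass_rates, k)
    keep = np.zeros(len(pass_rates), dtype=bool)
    for c in np.unique(labels):
        curve = pass_rates[labels == c].mean(axis=0)
        # Drop clusters that are flat (never solved, or already saturated).
        if np.all(np.diff(curve) >= 0) and curve[-1] - curve[0] >= min_gain:
            keep |= labels == c
    return keep


def fit_subset_to_full(subset_scores, full_scores):
    """Assumed linear map from subset score to full-benchmark score."""
    slope, intercept = np.polyfit(subset_scores, full_scores, 1)
    return lambda s: slope * s + intercept
```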

#scaling-law

Compression Scaling Laws: Unifying Sparsity and Quantization

(Elias Frantar, Utku Evci, Wonpyo Park, Neil Houlsby, Dan Alistarh)

We investigate how different compression techniques -- such as weight and activation quantization, and weight sparsity -- affect the scaling behavior of large language models (LLMs) during pretraining. Building on previous work showing that weight sparsity acts as a constant multiplier on model size in scaling laws, we demonstrate that this "effective parameter" scaling pattern extends to quantization as well. Specifically, we establish that weight-only quantization achieves strong parameter efficiency multipliers, while full quantization of both weights and activations shows diminishing returns at lower bitwidths. Our results suggest that different compression techniques can be unified under a common scaling law framework, enabling principled comparison and combination of these methods.

A scaling law for quantization and sparsity. The paper identifies roughly 4-bit as the Pareto frontier. However, earlier work argued that the amount of training should also be taken into account for quantization. (https://arxiv.org/abs/2411.17691)
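A small sketch of the "effective parameter" framing: compression is modeled as multiplying the parameter count N by a constant `eff` inside a Chinchilla-form loss. The default constants are the Chinchilla fit from Hoffmann et al. (2022), used only as an example, not the constants fitted in this paper. `best_bitwidth` compares formats by dense-equivalent parameters per bit of weight storage; any `eff` values you pass in (like those in the usage below) are placeholders, not the paper's measured multipliers.

```python
def scaling_loss(n_params, n_tokens, eff=1.0, E=1.69, A=406.4, B=410.7, alpha=0.34, beta=0.28):
    """Chinchilla-form loss where compression only rescales parameters: N -> eff * N."""
    return E + A / (eff * n_params) ** alpha + B / n_tokens ** beta


def best_bitwidth(eff_by_bits):
    """Bitwidth with the most dense-equivalent parameters per bit of weight storage."""
    return max(eff_by_bits, key=lambda bits: eff_by_bits[bits] / bits)


if __name__ == "__main__":
    placeholder = {16: 1.0, 8: 0.9, 4: 0.3}  # made-up multipliers for illustration only
    print(best_bitwidth(placeholder))
```

Under this framing, a 1B-parameter model with `eff=0.5` has the same predicted loss as a dense 500M model trained on the same tokens.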

#scaling-law #quantization #sparsity

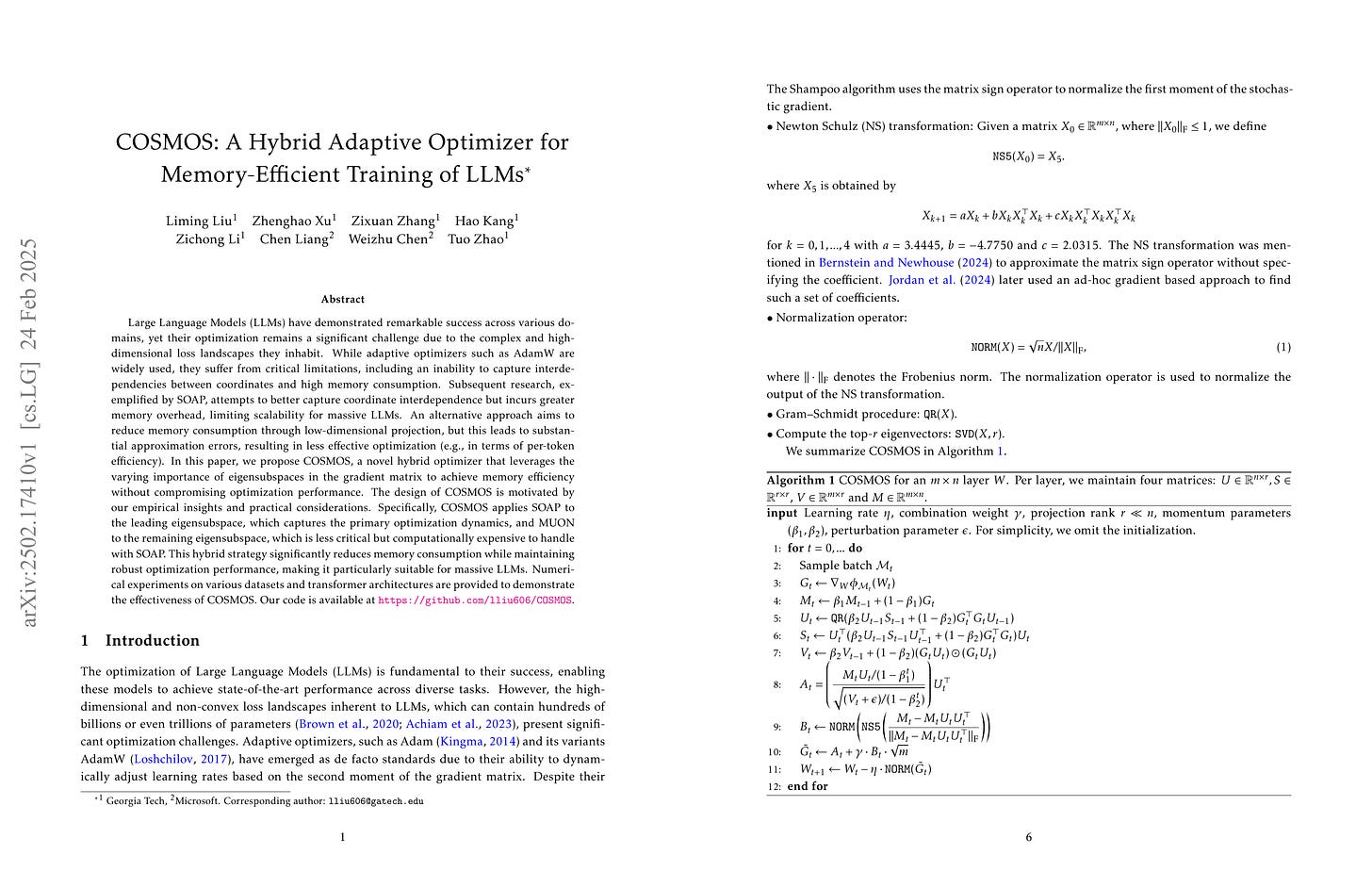

COSMOS: A Hybrid Adaptive Optimizer for Memory-Efficient Training of LLMs

(Liming Liu, Zhenghao Xu, Zixuan Zhang, Hao Kang, Zichong Li, Chen Liang, Weizhu Chen, Tuo Zhao)

Large Language Models (LLMs) have demonstrated remarkable success across various domains, yet their optimization remains a significant challenge due to the complex and high-dimensional loss landscapes they inhabit. While adaptive optimizers such as AdamW are widely used, they suffer from critical limitations, including an inability to capture interdependencies between coordinates and high memory consumption. Subsequent research, exemplified by SOAP, attempts to better capture coordinate interdependence but incurs greater memory overhead, limiting scalability for massive LLMs. An alternative approach aims to reduce memory consumption through low-dimensional projection, but this leads to substantial approximation errors, resulting in less effective optimization (e.g., in terms of per-token efficiency). In this paper, we propose COSMOS, a novel hybrid optimizer that leverages the varying importance of eigensubspaces in the gradient matrix to achieve memory efficiency without compromising optimization performance. The design of COSMOS is motivated by our empirical insights and practical considerations. Specifically, COSMOS applies SOAP to the leading eigensubspace, which captures the primary optimization dynamics, and MUON to the remaining eigensubspace, which is less critical but computationally expensive to handle with SOAP. This hybrid strategy significantly reduces memory consumption while maintaining robust optimization performance, making it particularly suitable for massive LLMs. Numerical experiments on various datasets and transformer architectures are provided to demonstrate the effectiveness of COSMOS. Our code is available at https://github.com/lliu606/COSMOS.

An optimizer that combines SOAP and Muon. We'll likely see another wave of optimizer research for a while.
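A heavily simplified sketch of the hybrid split for one weight matrix. Assumptions: the leading subspace is given by the top `rank` eigenvectors of an estimate of G G^T; in that subspace an Adam-style second-moment scaling is applied as a stand-in for SOAP (real SOAP is more involved); the remaining subspace gets an orthogonalized update U V^T, computed here with an exact SVD where Muon uses Newton-Schulz iterations. The point is the memory shape: per-coordinate state `v` is only `rank x n`, not `m x n`. Function names are hypothetical.

```python
import numpy as np


def orthogonalize(X):
    """Polar factor U V^T of X (Muon approximates this with Newton-Schulz iterations)."""
    U, _, Vt = np.linalg.svd(X, full_matrices=False)
    return U @ Vt


def split_basis(GG, rank):
    """Top-`rank` eigenvectors of a G G^T estimate (leading subspace) and the rest."""
    _, vecs = np.linalg.eigh(GG)  # eigenvalues in ascending order
    vecs = vecs[:, ::-1]          # largest eigenvalue first
    return vecs[:, :rank], vecs[:, rank:]


def hybrid_update(G, Q_lead, Q_rest, v, beta2=0.95, eps=1e-8):
    """Adam-style scaling in the leading subspace, orthogonalized update elsewhere.

    v is the running second moment (rank x n) kept only for the small leading subspace.
    Returns (update, new_v).
    """
    P = Q_lead.T @ G                        # coordinates in the leading subspace
    v = beta2 * v + (1 - beta2) * P ** 2
    lead = Q_lead @ (P / (np.sqrt(v) + eps))
    rest = Q_rest @ orthogonalize(Q_rest.T @ G)  # no per-coordinate state here
    return lead + rest, v
```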

#optimizer

Straight to Zero: Why Linearly Decaying the Learning Rate to Zero Works Best for LLMs

(Shane Bergsma, Nolan Dey, Gurpreet Gosal, Gavia Gray, Daria Soboleva, Joel Hestness)

LLMs are commonly trained with a learning rate (LR) warmup, followed by cosine decay to 10% of the maximum (10x decay). In a large-scale empirical study, we show that under an optimal peak LR, a simple linear decay-to-zero (D2Z) schedule consistently outperforms other schedules when training at compute-optimal dataset sizes. D2Z is superior across a range of model sizes, batch sizes, datasets, and vocabularies. Benefits increase as dataset size increases. Leveraging a novel interpretation of AdamW as an exponential moving average of weight updates, we show how linear D2Z optimally balances the demands of early training (moving away from initial conditions) and late training (averaging over more updates in order to mitigate gradient noise). In experiments, a 610M-parameter model trained for 80 tokens-per-parameter (TPP) using D2Z achieves lower loss than when trained for 200 TPP using 10x decay, corresponding to an astonishing 60% compute savings. Models such as Llama2-7B, trained for 286 TPP with 10x decay, could likely have saved a majority of compute by training with D2Z.

The result: a learning rate schedule that decays linearly to zero worked best, compared with the common cosine decay to 10% of the peak.
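For reference, the two schedules being compared can be written as below. This is a sketch assuming a linear warmup and a step counter that runs up to `total`; `lr_at` and its parameters are illustrative names, not from the paper.

```python
import math


def lr_at(step, total, peak, warmup, schedule="d2z", min_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by the chosen decay."""
    if step < warmup:
        return peak * (step + 1) / warmup  # assumed linear warmup
    frac = (step - warmup) / max(1, total - warmup)  # 0 at end of warmup, 1 at `total`
    if schedule == "d2z":
        return peak * (1.0 - frac)  # linear decay to zero
    if schedule == "cosine10x":
        floor = peak * min_ratio  # cosine decay to 10% of the peak (10x decay)
        return floor + 0.5 * (peak - floor) * (1 + math.cos(math.pi * frac))
    raise ValueError(f"unknown schedule: {schedule}")
```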

#optimization

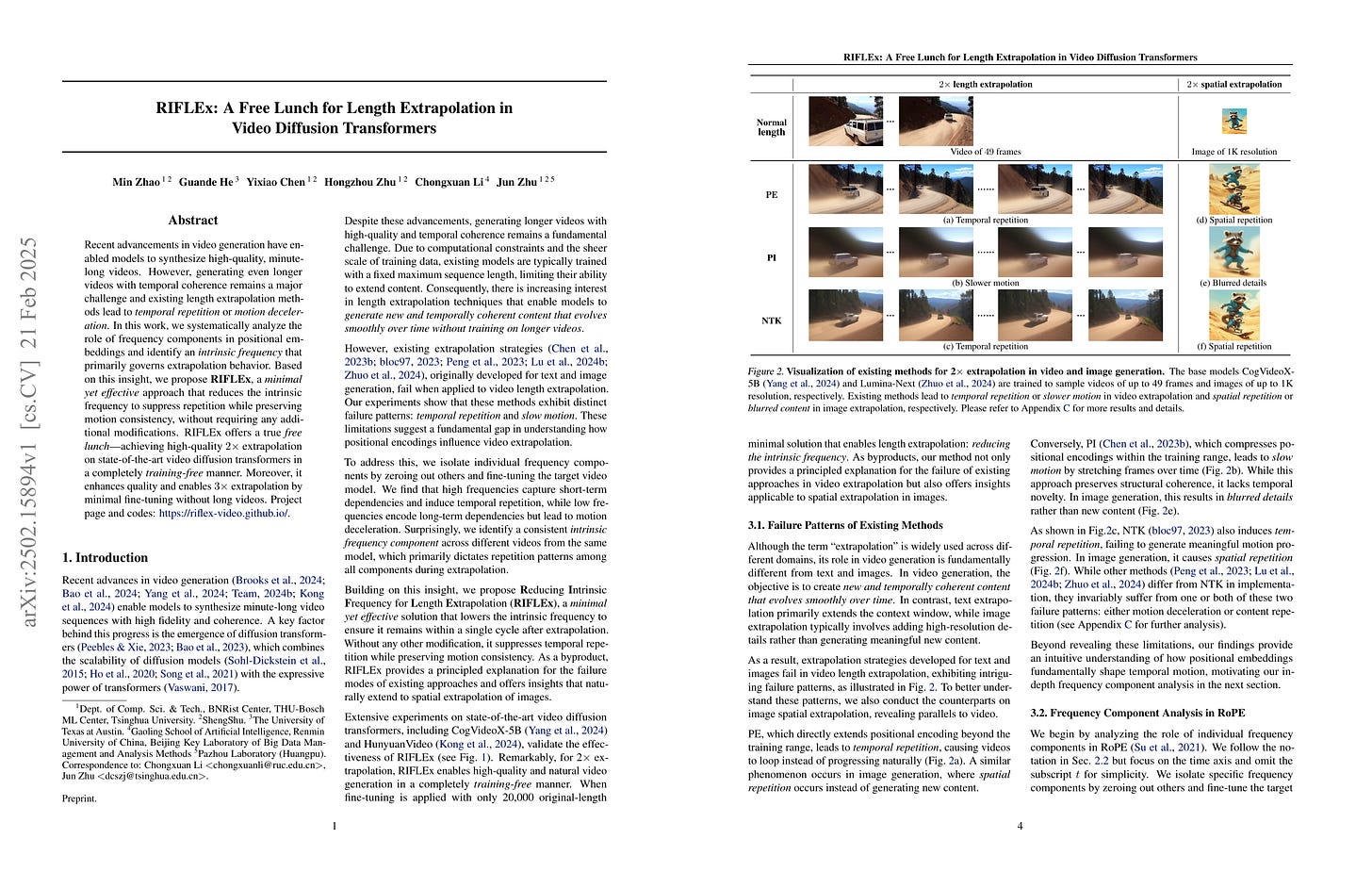

RIFLEx: A Free Lunch for Length Extrapolation in Video Diffusion Transformers

(Min Zhao, Guande He, Yixiao Chen, Hongzhou Zhu, Chongxuan Li, Jun Zhu)

Recent advancements in video generation have enabled models to synthesize high-quality, minute-long videos. However, generating even longer videos with temporal coherence remains a major challenge, and existing length extrapolation methods lead to temporal repetition or motion deceleration. In this work, we systematically analyze the role of frequency components in positional embeddings and identify an intrinsic frequency that primarily governs extrapolation behavior. Based on this insight, we propose RIFLEx, a minimal yet effective approach that reduces the intrinsic frequency to suppress repetition while preserving motion consistency, without requiring any additional modifications. RIFLEx offers a true free lunch--achieving high-quality 2× extrapolation on state-of-the-art video diffusion transformers in a completely training-free manner. Moreover, it enhances quality and enables 3× extrapolation by minimal fine-tuning without long videos. Project page and codes: https://riflex-video.github.io/.

Length extrapolation for video generation models. The method finds the frames where repetition occurs, identifies the RoPE component whose period matches that repetition, and lowers its frequency.
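A minimal sketch of the procedure described above, applied to a standard set of RoPE frequencies along the time axis. Assumptions: frequencies follow theta_j = base^(-2j/dim); the intrinsic component is the one whose period 2*pi/theta is closest to the observed repetition interval (in frames); and the adjustment simply lowers that frequency so its period covers the target length. The exact rule RIFLEx uses may differ, and all names are hypothetical.

```python
import numpy as np


def rope_freqs(dim, base=10000.0):
    """Standard RoPE angular frequencies theta_j = base^(-2j/dim), j = 0..dim/2 - 1."""
    return base ** (-np.arange(0, dim, 2) / dim)


def find_intrinsic(freqs, repeat_frames):
    """Index of the component whose period is closest to the observed repetition interval."""
    periods = 2 * np.pi / freqs
    return int(np.argmin(np.abs(periods - repeat_frames)))


def lower_intrinsic(freqs, k, target_len):
    """Assumed adjustment: reduce component k's frequency so its period spans target_len frames."""
    out = freqs.copy()
    out[k] = min(out[k], 2 * np.pi / target_len)
    return out
```

Only one component changes, which is why this kind of fix can be applied without retraining.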

#video-generation #long-context #positional-encoding

Optimizing Pre-Training Data Mixtures with Mixtures of Data Expert Models

(Lior Belenki, Alekh Agarwal, Tianze Shi, Kristina Toutanova)

We propose a method to optimize language model pre-training data mixtures through efficient approximation of the cross-entropy loss corresponding to each candidate mixture via a Mixture of Data Experts (MDE). We use this approximation as a source of additional features in a regression model, trained from observations of model loss for a small number of mixtures. Experiments with Transformer decoder-only language models in the range of 70M to 1B parameters on the SlimPajama dataset show that our method achieves significantly better performance than approaches that train regression models using only the mixture rates as input features. Combining this improved optimization method with an objective that takes into account cross-entropy on end task data leads to superior performance on few-shot downstream evaluations. We also provide theoretical insights on why aggregation of data expert predictions can provide good approximations to model losses for data mixtures.

Choosing the mixture ratios of pretraining data domains. The idea is to train a model on each domain, ensemble these models with candidate mixture weights, and use the mixture whose ensemble performs best.
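A minimal sketch of the Mixture of Data Experts approximation, assuming the ensemble mixes the experts' next-token probabilities with the candidate mixture weights and scores the result with cross-entropy on held-out tokens. The paper feeds this approximation into a regression model fitted on a few real mixture runs; the direct grid search over the simplex below is a simplification, and all names are hypothetical.

```python
import itertools

import numpy as np


def mde_loss(expert_probs, weights):
    """expert_probs: (n_domains, n_tokens), prob each domain expert gives the true next token."""
    expert_probs = np.asarray(expert_probs, dtype=float)
    mix = np.asarray(weights, dtype=float) @ expert_probs  # mixture-weighted ensemble
    return float(-np.mean(np.log(mix)))


def simplex_grid(n, steps):
    """All mixture-weight vectors on the simplex with resolution 1/steps."""
    for c in itertools.product(range(steps + 1), repeat=n):
        if sum(c) == steps:
            yield np.array(c) / steps


def best_mixture(expert_probs, steps=10):
    expert_probs = np.asarray(expert_probs, dtype=float)
    return min(simplex_grid(len(expert_probs), steps), key=lambda w: mde_loss(expert_probs, w))
```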

#pretraining