February 18, 2025

Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention

(Jingyang Yuan, Huazuo Gao, Damai Dai, Junyu Luo, Liang Zhao, Zhengyan Zhang, Zhenda Xie, Y. X. Wei, Lean Wang, Zhiping Xiao, Yuqing Wang, Chong Ruan, Ming Zhang, Wenfeng Liang, Wangding Zeng)

Long-context modeling is crucial for next-generation language models, yet the high computational cost of standard attention mechanisms poses significant computational challenges. Sparse attention offers a promising direction for improving efficiency while maintaining model capabilities. We present NSA, a Natively trainable Sparse Attention mechanism that integrates algorithmic innovations with hardware-aligned optimizations to achieve efficient long-context modeling. NSA employs a dynamic hierarchical sparse strategy, combining coarse-grained token compression with fine-grained token selection to preserve both global context awareness and local precision. Our approach advances sparse attention design with two key innovations: (1) We achieve substantial speedups through arithmetic intensity-balanced algorithm design, with implementation optimizations for modern hardware. (2) We enable end-to-end training, reducing pretraining computation without sacrificing model performance. As shown in Figure 1, experiments show the model pretrained with NSA maintains or exceeds Full Attention models across general benchmarks, long-context tasks, and instruction-based reasoning. Meanwhile, NSA achieves substantial speedups over Full Attention on 64k-length sequences across decoding, forward propagation, and backward propagation, validating its efficiency throughout the model lifecycle.

DeepSeek has been tackling sparse attention. NSA combines token pooling, top-K block selection, and a sliding window. The DeepSeek V3 paper said the team would address long-context problems in the future, but this is not the direction I expected. It might be worth reconsidering sparse attention. (https://arxiv.org/abs/2502.12082)
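To make the combination concrete, here is a rough sketch of which keys a single query would see under top-K block selection plus a sliding window. It is not the paper's implementation: mean pooling is my stand-in for the compression step, the query is assumed to sit at the last position so every key is in the past, and the names `mean_pool_blocks`, `select_key_indices`, `block_size`, `top_k`, and `window` are all made up for illustration.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def mean_pool_blocks(keys: List[Vector], block_size: int) -> List[List[float]]:
    """Compress each block of keys into one vector by averaging.

    Assumption: mean pooling stands in for the compression step.
    """
    pooled = []
    for start in range(0, len(keys), block_size):
        block = keys[start:start + block_size]
        dim = len(block[0])
        pooled.append([sum(k[d] for k in block) / len(block) for d in range(dim)])
    return pooled


def select_key_indices(
    query: Vector,
    keys: List[Vector],
    block_size: int,
    top_k: int,
    window: int,
) -> List[int]:
    """Return the sorted key indices visible to one query at the last position."""
    # Coarse pass: score every compressed block against the query.
    pooled = mean_pool_blocks(keys, block_size)
    scores = [dot(query, p) for p in pooled]

    # Fine pass: keep all tokens of the top_k highest-scoring blocks.
    top_blocks = sorted(range(len(pooled)), key=lambda b: scores[b], reverse=True)[:top_k]
    selected = set()
    for b in top_blocks:
        selected.update(range(b * block_size, min((b + 1) * block_size, len(keys))))

    # Local pass: always keep the most recent `window` tokens.
    selected.update(range(max(0, len(keys) - window), len(keys)))
    return sorted(selected)
```

The point of the sketch is only that the block scores from the coarse pass decide where the fine-grained attention is spent, so the number of keys touched per query stays roughly `top_k * block_size + window` instead of growing with the sequence length.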

#sparsity #efficiency #efficient-training

LLMs on the Line: Data Determines Loss-to-Loss Scaling Laws

(Prasanna Mayilvahanan, Thaddäus Wiedemer, Sayak Mallick, Matthias Bethge, Wieland Brendel)

Scaling laws guide the development of large language models (LLMs) by offering estimates for the optimal balance of model size, tokens, and compute. More recently, loss-to-loss scaling laws that relate losses across pretraining datasets and downstream tasks have emerged as a powerful tool for understanding and improving LLM performance. In this work, we investigate which factors most strongly influence loss-to-loss scaling. Our experiments reveal that the pretraining data and tokenizer determine the scaling trend. In contrast, model size, optimization hyperparameters, and even significant architectural differences, such as between transformer-based models like Llama and state-space models like Mamba, have limited impact. Consequently, practitioners should carefully curate suitable pretraining datasets for optimal downstream performance, while architectures and other settings can be freely optimized for training efficiency.

The authors use loss-to-loss scaling laws (https://arxiv.org/abs/2411.12925) to compare pretraining data and architectures. As expected, differences in pretraining data matter most. However, I suspect the differences attributed to tokenizers involve several confounding factors that need to be considered.
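For readers who have not seen a loss-to-loss fit, a minimal sketch follows. It assumes a shifted power law of the form `L_down - e_down = K * (L_train - e_train) ** kappa` and that the irreducible losses `e_train` and `e_down` are already known. The functional form, the function name `fit_loss_to_loss`, and all parameter names are my assumptions for illustration, not something taken from either paper's code.

```python
import math
from typing import Sequence, Tuple


def fit_loss_to_loss(
    train_losses: Sequence[float],
    downstream_losses: Sequence[float],
    e_train: float = 0.0,
    e_down: float = 0.0,
) -> Tuple[float, float]:
    """Fit L_down - e_down = K * (L_train - e_train) ** kappa.

    Uses ordinary least squares on the log-log transformed points.
    Assumes every loss is strictly above its irreducible term.
    Returns (K, kappa).
    """
    xs = [math.log(loss - e_train) for loss in train_losses]
    ys = [math.log(loss - e_down) for loss in downstream_losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    kappa = sxy / sxx                       # slope in log-log space
    k = math.exp(mean_y - kappa * mean_x)   # intercept mapped back from log space
    return k, kappa
```

Fitting one such curve per pretraining dataset, tokenizer, or architecture and comparing the resulting lines is the kind of comparison the paper describes: settings that fall on the same line share a scaling trend.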

#scaling-law

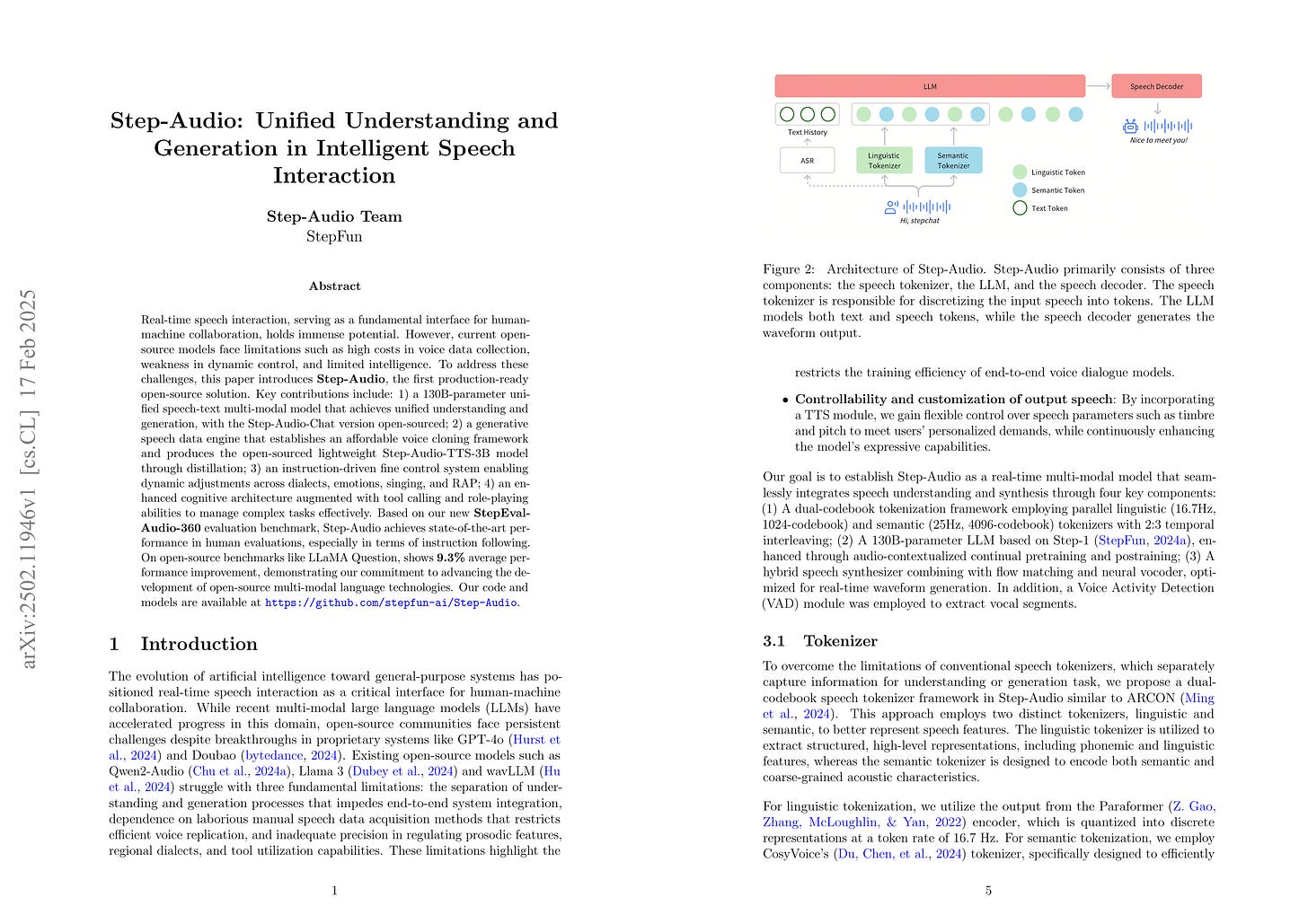

Step-Audio: Unified Understanding and Generation in Intelligent Speech Interaction

(Ailin Huang, Boyong Wu, Bruce Wang, Chao Yan, Chen Hu, Chengli Feng, Fei Tian, Feiyu Shen, Jingbei Li, Mingrui Chen, Peng Liu, Ruihang Miao, Wang You, Xi Chen, Xuerui Yang, Yechang Huang, Yuxiang Zhang, Zheng Gong, Zixin Zhang, Brian Li, Changyi Wan, Hanpeng Hu, Ranchen Ming, Song Yuan, Xuelin Zhang, Yu Zhou, Bingxin Li, Buyun Ma, Kang An, Wei Ji, Wen Li, Xuan Wen, Yuankai Ma, Yuanwei Liang, Yun Mou, Bahtiyar Ahmidi, Bin Wang, Bo Li, Changxin Miao, Chen Xu, Chengting Feng, Chenrun Wang, Dapeng Shi, Deshan Sun, Dingyuan Hu, Dula Sai, Enle Liu, Guanzhe Huang, Gulin Yan, Heng Wang, Haonan Jia, Haoyang Zhang, Jiahao Gong, Jianchang Wu, Jiahong Liu, Jianjian Sun, Jiangjie Zhen, Jie Feng, Jie Wu, Jiaoren Wu, Jie Yang, Jinguo Wang, Jingyang Zhang, Junzhe Lin, Kaixiang Li, Lei Xia, Li Zhou, Longlong Gu, Mei Chen, Menglin Wu, Ming Li, Mingxiao Li, Mingyao Liang, Na Wang, Nie Hao, Qiling Wu, Qinyuan Tan, Shaoliang Pang, Shiliang Yang, Shuli Gao, Siqi Liu, Sitong Liu, Tiancheng Cao, Tianyu Wang, Wenjin Deng, Wenqing He, Wen Sun, Xin Han, Xiaomin Deng, Xiaojia Liu, Xu Zhao, Yanan Wei, Yanbo Yu, Yang Cao, Yangguang Li, Yangzhen Ma, Yanming Xu, Yaqiang Shi, Yilei Wang, Yinmin Zhong, Yu Luo, Yuanwei Lu, Yuhe Yin, Yuting Yan, Yuxiang Yang, Zhe Xie, Zheng Ge, Zheng Sun, Zhewei Huang, Zhichao Chang, Zidong Yang, Zili Zhang, Binxing Jiao, Daxin Jiang, Heung-Yeung Shum, Jiansheng Chen, Jing Li, Shuchang Zhou, Xiangyu Zhang, Xinhao Zhang, Yibo Zhu)

Real-time speech interaction, serving as a fundamental interface for human-machine collaboration, holds immense potential. However, current open-source models face limitations such as high costs in voice data collection, weakness in dynamic control, and limited intelligence. To address these challenges, this paper introduces Step-Audio, the first production-ready open-source solution. Key contributions include: 1) a 130B-parameter unified speech-text multi-modal model that achieves unified understanding and generation, with the Step-Audio-Chat version open-sourced; 2) a generative speech data engine that establishes an affordable voice cloning framework and produces the open-sourced lightweight Step-Audio-TTS-3B model through distillation; 3) an instruction-driven fine control system enabling dynamic adjustments across dialects, emotions, singing, and RAP; 4) an enhanced cognitive architecture augmented with tool calling and role-playing abilities to manage complex tasks effectively. Based on our new StepEval-Audio-360 evaluation benchmark, Step-Audio achieves state-of-the-art performance in human evaluations, especially in terms of instruction following. On open-source benchmarks like LLaMA Question, it shows a 9.3% average performance improvement, demonstrating our commitment to advancing the development of open-source multi-modal language technologies. Our code and models are available at https://github.com/stepfun-ai/Step-Audio.

StepFun, which released a video generation model yesterday (https://arxiv.org/abs/2502.10248), has now released a 130B-scale speech model. I'm not familiar with the company, but the training infrastructure they describe is impressive on its own.

#speech

LIMR: Less is More for RL Scaling

(Xuefeng Li, Haoyang Zou, Pengfei Liu)

In this paper, we ask: what truly determines the effectiveness of RL training data for enhancing language models' reasoning capabilities? While recent advances like o1, DeepSeek R1, and Kimi1.5 demonstrate RL's potential, the lack of transparency about training data requirements has hindered systematic progress. Starting directly from base models without distillation, we challenge the assumption that scaling up RL training data inherently improves performance. We demonstrate that a strategically selected subset of just 1,389 samples can outperform the full 8,523-sample dataset. We introduce Learning Impact Measurement (LIM), an automated method to evaluate and prioritize training samples based on their alignment with model learning trajectories, enabling efficient resource utilization and scalable implementation. Our method achieves comparable or even superior performance using only 1,389 samples versus the full 8,523-sample dataset. Notably, while recent data-efficient approaches (e.g., LIMO and s1) show promise with 32B-scale models, we find they significantly underperform at 7B-scale through supervised fine-tuning (SFT). In contrast, our RL-based LIMR achieves 16.7% higher accuracy on AIME24 and outperforms LIMO and s1 by 13.0% and 22.2% on MATH500. These results fundamentally reshape our understanding of RL scaling in LLMs, demonstrating that precise sample selection, rather than data scale, may be the key to unlocking enhanced reasoning capabilities. For reproducible research and future innovation, we are open-sourcing LIMR, including implementation of LIM, training and evaluation code, curated datasets, and trained models at https://github.com/GAIR-NLP/LIMR.

Following similar findings for SFT (https://arxiv.org/abs/2502.03387), this paper shows that RL does not need many samples either. The method picks samples whose learning trends resemble the model's overall learning trajectory. It reminds me of the curriculum in Kimi k1.5 (https://arxiv.org/abs/2501.12599).

The authors also report that RL was more sample-efficient than SFT.
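The idea of selecting samples whose trend matches the model's overall trajectory can be sketched roughly as below. Each sample has a reward curve over training epochs, the average curve serves as the reference, and a sample is kept when its curve stays close to that reference. The normalized score used here is one plausible choice and should be read as my assumption; the paper's exact formula and threshold may differ, and the names `average_curve`, `alignment_score`, and `select_samples` are hypothetical.

```python
from typing import List, Sequence


def average_curve(reward_curves: Sequence[Sequence[float]]) -> List[float]:
    """Mean reward per epoch across all samples (the reference trajectory)."""
    n_epochs = len(reward_curves[0])
    n_samples = len(reward_curves)
    return [sum(curve[k] for curve in reward_curves) / n_samples for k in range(n_epochs)]


def alignment_score(curve: Sequence[float], avg: Sequence[float]) -> float:
    """Score how closely one sample's reward curve tracks the average curve.

    Assumed form: 1 - sum((r - avg)^2) / sum((1 - avg)^2), with rewards in [0, 1].
    A score of 1.0 means the sample follows the average curve exactly.
    """
    deviation = sum((r - a) ** 2 for r, a in zip(curve, avg))
    scale = sum((1.0 - a) ** 2 for a in avg)
    if scale == 0.0:
        # The average curve is already at the maximum reward everywhere,
        # so there is no learning signal to align with.
        return 0.0
    return 1.0 - deviation / scale


def select_samples(reward_curves: Sequence[Sequence[float]], threshold: float) -> List[int]:
    """Return indices of samples whose alignment score reaches the threshold."""
    avg = average_curve(reward_curves)
    return [i for i, curve in enumerate(reward_curves) if alignment_score(curve, avg) >= threshold]
```

Under this score, samples that are always solved or never solved deviate from the rising average curve and get filtered out, which matches the intuition that they contribute little to learning.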

#rl #reasoning

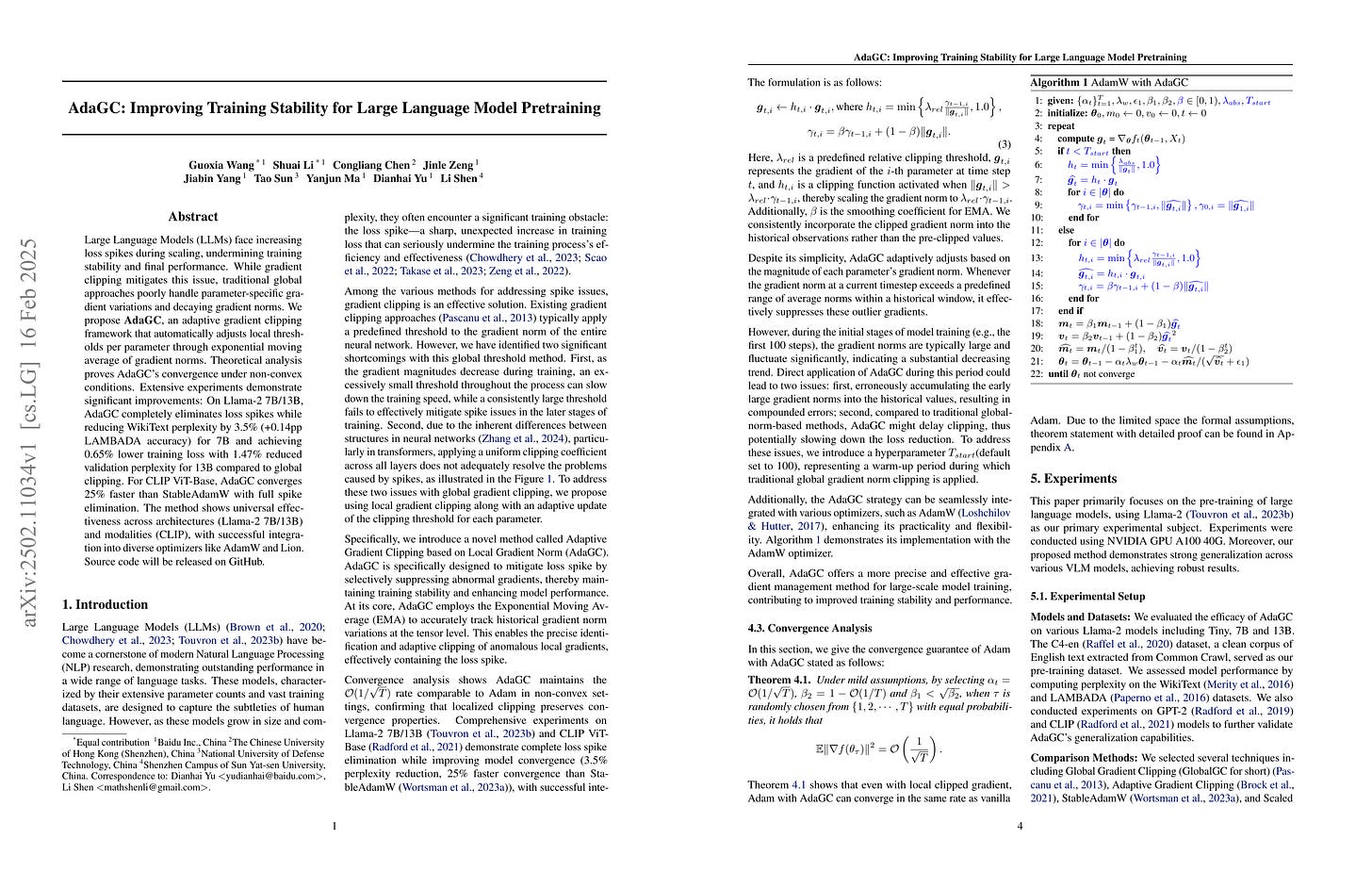

AdaGC: Improving Training Stability for Large Language Model Pretraining

(Guoxia Wang, Shuai Li, Congliang Chen, Jinle Zeng, Jiabin Yang, Tao Sun, Yanjun Ma, Dianhai Yu, Li Shen)

Large Language Models (LLMs) face increasing loss spikes during scaling, undermining training stability and final performance. While gradient clipping mitigates this issue, traditional global approaches poorly handle parameter-specific gradient variations and decaying gradient norms. We propose AdaGC, an adaptive gradient clipping framework that automatically adjusts local thresholds per parameter through exponential moving average of gradient norms. Theoretical analysis proves AdaGC's convergence under non-convex conditions. Extensive experiments demonstrate significant improvements: On Llama-2 7B/13B, AdaGC completely eliminates loss spikes while reducing WikiText perplexity by 3.5% (+0.14pp LAMBADA accuracy) for 7B and achieving 0.65% lower training loss with 1.47% reduced validation perplexity for 13B compared to global clipping. For CLIP ViT-Base, AdaGC converges 25% faster than StableAdamW with full spike elimination. The method shows universal effectiveness across architectures (Llama-2 7B/13B) and modalities (CLIP), with successful integration into diverse optimizers like AdamW and Lion. Source code will be released on GitHub.

A training stabilization technique based on adaptive gradient clipping. I wonder whether similar variants of Adam existed before, apart from cases like Adafactor.
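As a rough illustration of per-parameter clipping against an exponential moving average of gradient norms, here is a minimal sketch. It follows the abstract's description only loosely: the class name `AdaptiveClipper`, the parameters `rel_threshold` and `beta`, their default values, and the choice to leave the very first gradient unclipped are all my assumptions, not the released AdaGC code.

```python
import math
from typing import Dict, List, Sequence


class AdaptiveClipper:
    """Clip each parameter's gradient relative to its own running norm."""

    def __init__(self, rel_threshold: float = 1.05, beta: float = 0.98) -> None:
        self.rel_threshold = rel_threshold  # allowed ratio over the running norm (illustrative default)
        self.beta = beta                    # EMA decay (illustrative default)
        self.ema_norm: Dict[str, float] = {}

    def clip(self, name: str, grad: Sequence[float]) -> List[float]:
        """Return the (possibly rescaled) gradient for the parameter `name`."""
        norm = math.sqrt(sum(g * g for g in grad))
        ema = self.ema_norm.get(name)
        if ema is None:
            # No history yet: seed the running norm and leave the gradient as is.
            self.ema_norm[name] = norm
            return list(grad)

        limit = self.rel_threshold * ema
        clipped = list(grad)
        if norm > limit:
            # Rescale so the gradient norm equals the local threshold.
            scale = limit / norm
            clipped = [g * scale for g in grad]
            norm = limit

        # Update the running norm with the norm of the clipped gradient.
        self.ema_norm[name] = self.beta * ema + (1.0 - self.beta) * norm
        return clipped
```

Because the threshold follows each parameter's own history, it shrinks along with decaying gradient norms, which is where a single fixed global threshold stops being effective.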

#optimizer