2025년 2월 14일

EQ-VAE: Equivariance Regularized Latent Space for Improved Generative Image Modeling

(Theodoros Kouzelis, Ioannis Kakogeorgiou, Spyros Gidaris, Nikos Komodakis)

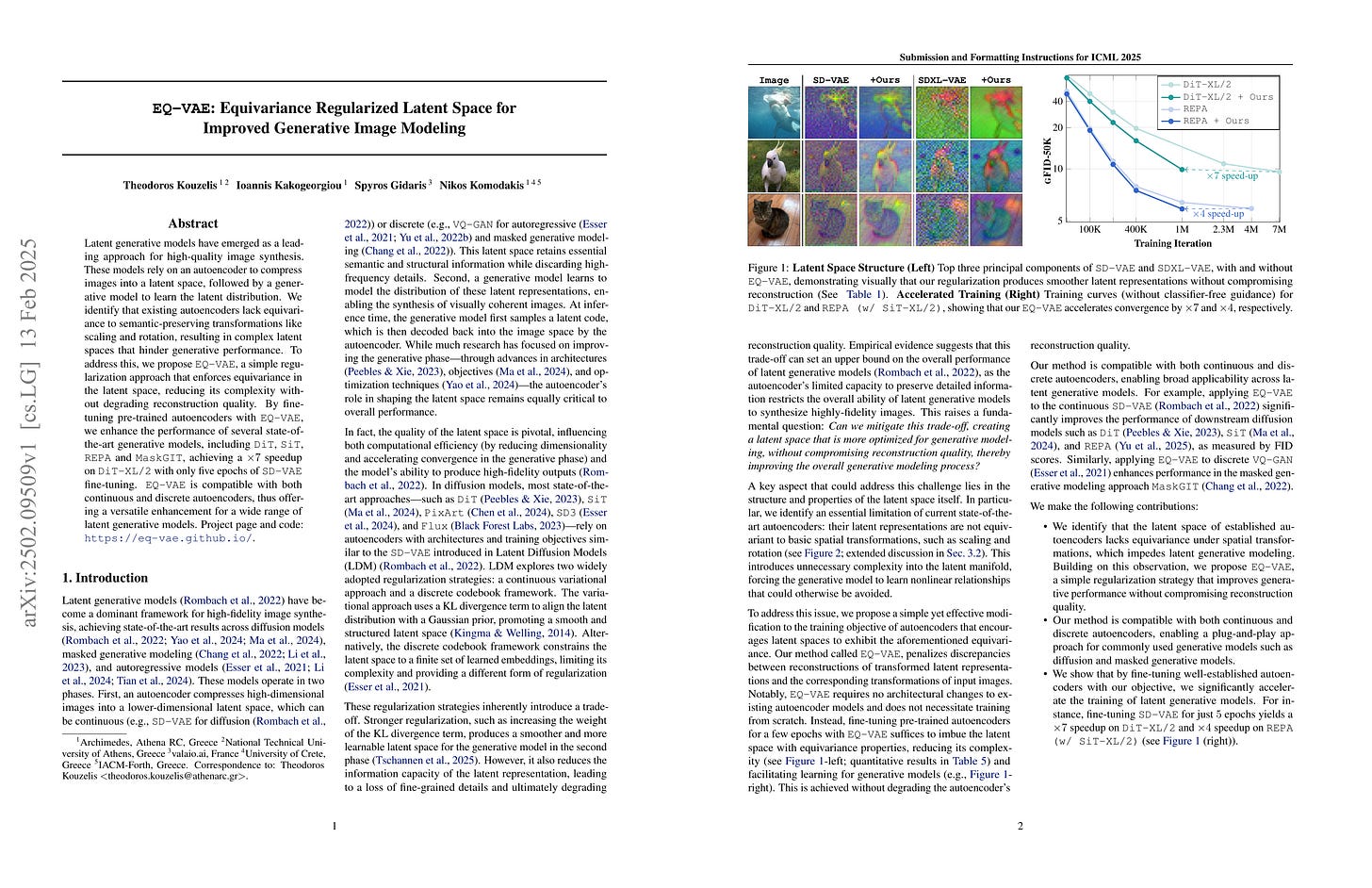

Latent generative models have emerged as a leading approach for high-quality image synthesis. These models rely on an autoencoder to compress images into a latent space, followed by a generative model to learn the latent distribution. We identify that existing autoencoders lack equivariance to semantic-preserving transformations like scaling and rotation, resulting in complex latent spaces that hinder generative performance. To address this, we propose EQ-VAE, a simple regularization approach that enforces equivariance in the latent space, reducing its complexity without degrading reconstruction quality. By finetuning pre-trained autoencoders with EQ-VAE, we enhance the performance of several state-of-the-art generative models, including DiT, SiT, REPA and MaskGIT, achieving a 7 speedup on DiT-XL/2 with only five epochs of SD-VAE fine-tuning. EQ-VAE is compatible with both continuous and discrete autoencoders, thus offering a versatile enhancement for a wide range of latent generative models. Project page and code:

https://eq-vae.github.io/

.

Augmentation으로 VAE의 Latent의 구조를 개선하자는 아이디어. Latent의 구조를 개선하자는 것은 최근 많이 나오는 아이디어죠. (https://arxiv.org/abs/2501.09755, https://arxiv.org/abs/2502.03444) Semantic한 정보를 결합하는 형태의 접근들이 사실 Semantic한 정보 이전에 Equivariance 같은 보다 기본적인 측면에서도 개선해주는 효과가 있었던 것이 아닌가 싶네요.

This paper presents the idea of improving the latent structure of VAEs using augmentation. Improving the structure of latent spaces has been a popular approach recently (https://arxiv.org/abs/2501.09755, https://arxiv.org/abs/2502.03444). Maybe approaches incorporating semantic information have had the effect of improving more basic aspects like equivariance, even before adding semantic information itself.

#vae #tokenizer

Score-of-Mixture Training: Training One-Step Generative Models Made Simple

(Tejas Jayashankar, J. Jon Ryu, Gregory Wornell)

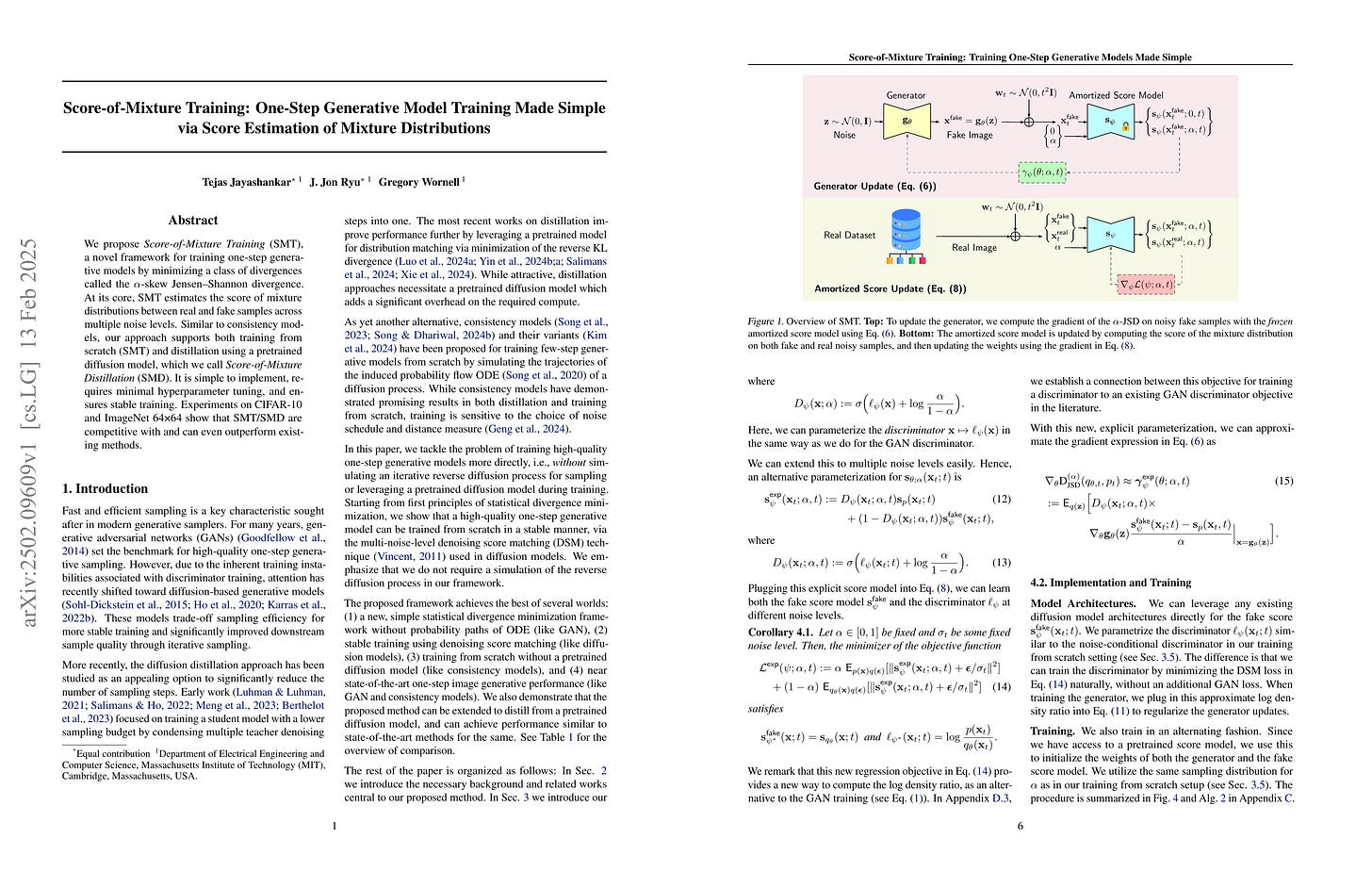

We propose Score-of-Mixture Training (SMT), a novel framework for training one-step generative models by minimizing a class of divergences called the αα-skew Jensen-Shannon divergence. At its core, SMT estimates the score of mixture distributions between real and fake samples across multiple noise levels. Similar to consistency models, our approach supports both training from scratch (SMT) and distillation using a pretrained diffusion model, which we call Score-of-Mixture Distillation (SMD). It is simple to implement, requires minimal hyperparameter tuning, and ensures stable training. Experiments on CIFAR-10 and ImageNet 64x64 show that SMT/SMD are competitive with and can even outperform existing methods.

Forward와 Reverse KL을 Interpolate하는 Divergence를 Objective로 하여 학습. 이 Objective에 대한 학습을 위해서는 Score Model을 사용.

Training one-step generative models using a divergence that interpolates between forward and reverse KL as an objective. To train a model with this objective, an amortized score model is used.

#image-generation #diffusion #gan