2025년 1월 6일

Process Reinforcement through Implicit Rewards

(Ganqu Cui, Lifan Yuan, Zefan Wang, Hanbin Wang, Wendi Li, Bingxiang He, Yuchen Fan, Tianyu Yu, Qixin Xu, Weize Chen, Jiarui Yuan, Huayu Chen, Kaiyan Zhang, Xingtai Lv, Shuo Wang, Yuan Yao, Xu Han, Hao Peng, Yu Cheng, Zhiyuan Liu, Maosong Sun, Bowen Zhou, Ning Ding)

Implicit PRM으로 (https://arxiv.org/abs/2412.01981) RL을 진행한 결과가 나왔군요.

Result of RL with implicit PRM (https://arxiv.org/abs/2412.01981).

#reward-model #reasoning #rl

Result of RL using implicit PRM (https://arxiv.org/abs/2412.01981).

2 OLMo 2 Furious

(Team OLMo, Pete Walsh, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Shane Arora, Akshita Bhagia, Yuling Gu, Shengyi Huang, Matt Jordan, Nathan Lambert, Dustin Schwenk, Oyvind Tafjord, Taira Anderson, David Atkinson, Faeze Brahman, Christopher Clark, Pradeep Dasigi, Nouha Dziri, Michal Guerquin, Hamish Ivison, Pang Wei Koh, Jiacheng Liu, Saumya Malik, William Merrill, Lester James V. Miranda, Jacob Morrison, Tyler Murray, Crystal Nam, Valentina Pyatkin, Aman Rangapur, Michael Schmitz, Sam Skjonsberg, David Wadden, Christopher Wilhelm, Michael Wilson, Luke Zettlemoyer, Ali Farhadi, Noah A. Smith, Hannaneh Hajishirzi)

We present OLMo 2, the next generation of our fully open language models. OLMo 2 includes dense autoregressive models with improved architecture and training recipe, pretraining data mixtures, and instruction tuning recipes. Our modified model architecture and training recipe achieve both better training stability and improved per-token efficiency. Our updated pretraining data mixture introduces a new, specialized data mix called Dolmino Mix 1124, which significantly improves model capabilities across many downstream task benchmarks when introduced via late-stage curriculum training (i.e. specialized data during the annealing phase of pretraining). Finally, we incorporate best practices from Tülu 3 to develop OLMo 2-Instruct, focusing on permissive data and extending our final-stage reinforcement learning with verifiable rewards (RLVR). Our OLMo 2 base models sit at the Pareto frontier of performance to compute, often matching or outperforming open-weight only models like Llama 3.1 and Qwen 2.5 while using fewer FLOPs and with fully transparent training data, code, and recipe. Our fully open OLMo 2-Instruct models are competitive with or surpassing open-weight only models of comparable size, including Qwen 2.5, Llama 3.1 and Gemma 2. We release all OLMo 2 artifacts openly -- models at 7B and 13B scales, both pretrained and post-trained, including their full training data, training code and recipes, training logs and thousands of intermediate checkpoints. The final instruction model is available on the Ai2 Playground as a free research demo.

OLMo 2의 리포트가 나왔군요. OLMo 팀이 학습 안정성에 관심이 많아서인지 QK norm, Post Norm 같은 학습 안정성 테크닉을 적극적으로 사용했네요. 포스트트레이닝은 Tülu 3 레시피입니다.

The technical report for OLMo 2 has been released. It appears that the OLMo team is highly focused on training stability, as they have actively incorporated training stabilization techniques such as QK norm and post norm. The post-training recipe follows that of Tülu 3.

#llm #pre-training #post-training

LTX-Video: Realtime Video Latent Diffusion

(Yoav HaCohen, Nisan Chiprut, Benny Brazowski, Daniel Shalem, Dudu Moshe, Eitan Richardson, Eran Levin, Guy Shiran, Nir Zabari, Ori Gordon, Poriya Panet, Sapir Weissbuch, Victor Kulikov, Yaki Bitterman, Zeev Melumian, Ofir Bibi)

We introduce LTX-Video, a transformer-based latent diffusion model that adopts a holistic approach to video generation by seamlessly integrating the responsibilities of the Video-VAE and the denoising transformer. Unlike existing methods, which treat these components as independent, LTX-Video aims to optimize their interaction for improved efficiency and quality. At its core is a carefully designed Video-VAE that achieves a high compression ratio of 1:192, with spatiotemporal downscaling of 32 x 32 x 8 pixels per token, enabled by relocating the patchifying operation from the transformer's input to the VAE's input. Operating in this highly compressed latent space enables the transformer to efficiently perform full spatiotemporal self-attention, which is essential for generating high-resolution videos with temporal consistency. However, the high compression inherently limits the representation of fine details. To address this, our VAE decoder is tasked with both latent-to-pixel conversion and the final denoising step, producing the clean result directly in pixel space. This approach preserves the ability to generate fine details without incurring the runtime cost of a separate upsampling module. Our model supports diverse use cases, including text-to-video and image-to-video generation, with both capabilities trained simultaneously. It achieves faster-than-real-time generation, producing 5 seconds of 24 fps video at 768x512 resolution in just 2 seconds on an Nvidia H100 GPU, outperforming all existing models of similar scale. The source code and pre-trained models are publicly available, setting a new benchmark for accessible and scalable video generation.

비디오 생성 모델도 경쟁이 치열하군요. Patchify를 뺀 대신 32x32x8이라는 상당한 고압축을 사용했네요. 대신 생성 과정에서 발생하는 문제를 해소하기 위해 마지막 디노이징 스텝은 VAE 디코더를 사용해서 Latent에서 픽셀로 디노이징을 했네요.

The competition in video generation models is becoming fierce. Instead of using patchify, they employed a high compression ratio of 32x32x8. To address issues arising during the generation process, they performed the final denoising step using the VAE decoder on latent to pixel space.

#video-generation #vae

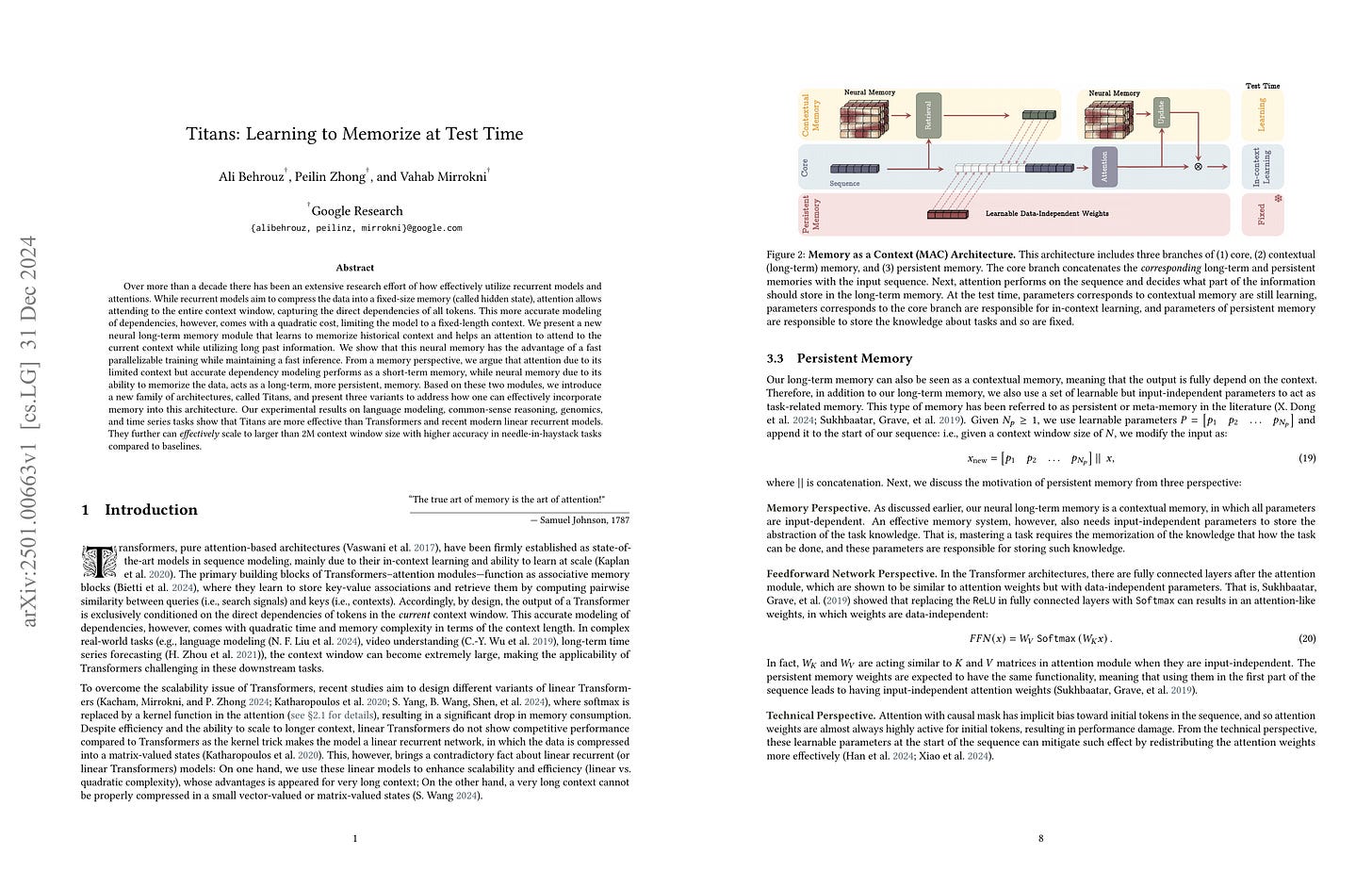

Titans: Learning to Memorize at Test Time

(Ali Behrouz, Peilin Zhong, Vahab Mirrokni)

Over more than a decade there has been an extensive research effort on how to effectively utilize recurrent models and attention. While recurrent models aim to compress the data into a fixed-size memory (called hidden state), attention allows attending to the entire context window, capturing the direct dependencies of all tokens. This more accurate modeling of dependencies, however, comes with a quadratic cost, limiting the model to a fixed-length context. We present a new neural long-term memory module that learns to memorize historical context and helps attention to attend to the current context while utilizing long past information. We show that this neural memory has the advantage of fast parallelizable training while maintaining a fast inference. From a memory perspective, we argue that attention due to its limited context but accurate dependency modeling performs as a short-term memory, while neural memory due to its ability to memorize the data, acts as a long-term, more persistent, memory. Based on these two modules, we introduce a new family of architectures, called Titans, and present three variants to address how one can effectively incorporate memory into this architecture. Our experimental results on language modeling, common-sense reasoning, genomics, and time series tasks show that Titans are more effective than Transformers and recent modern linear recurrent models. They further can effectively scale to larger than 2M context window size with higher accuracy in needle-in-haystack tasks compared to baselines.

모델에 테스트 시점에 저장 가능한 메모리를 추가하는 방법. Test Time Train과 (https://arxiv.org/abs/2407.04620) 비슷하다고 할 수 있겠네요.

학습 시점에 모델을 수정할 수 있는 능력도 중요한 방향이라고 봅니다. 다만 단순히 추가적인 정보를 저장하는 것을 넘어 능력을 수정할 수 있는 것이 필요할 것 같네요. 그건 좀 더 어려운 문제겠죠.

A method for adding memory that can store information at test time. This is similar to Test Time Train (https://arxiv.org/abs/2407.04620).

I believe it's an important research direction to add the ability to modify the model at test time. However, I think we need to go beyond merely storing additional information and develop the ability to modify the model's capabilities. That would be a more challenging problem.

#transformer

Reconstruction vs. Generation: Taming Optimization Dilemma in Latent Diffusion Models

(Jingfeng Yao, Xinggang Wang)

Latent diffusion models with Transformer architectures excel at generating high-fidelity images. However, recent studies reveal an optimization dilemma in this two-stage design: while increasing the per-token feature dimension in visual tokenizers improves reconstruction quality, it requires substantially larger diffusion models and more training iterations to achieve comparable generation performance. Consequently, existing systems often settle for sub-optimal solutions, either producing visual artifacts due to information loss within tokenizers or failing to converge fully due to expensive computation costs. We argue that this dilemma stems from the inherent difficulty in learning unconstrained high-dimensional latent spaces. To address this, we propose aligning the latent space with pre-trained vision foundation models when training the visual tokenizers. Our proposed VA-VAE (Vision foundation model Aligned Variational AutoEncoder) significantly expands the reconstruction-generation frontier of latent diffusion models, enabling faster convergence of Diffusion Transformers (DiT) in high-dimensional latent spaces. To exploit the full potential of VA-VAE, we build an enhanced DiT baseline with improved training strategies and architecture designs, termed LightningDiT. The integrated system achieves state-of-the-art (SOTA) performance on ImageNet 256x256 generation with an FID score of 1.35 while demonstrating remarkable training efficiency by reaching an FID score of 2.11 in just 64 epochs--representing an over 21 times convergence speedup compared to the original DiT. Models and codes are available at: https://github.com/hustvl/LightningDiT.

VAE의 차원을 증가시켜 Reconstruction 성능을 높였을 때 Generation 모델을 학습시키는 것이 어려워지는 문제에 대한 해결. VAE의 인코딩 결과에 이미지 백본의 출력으로 제약을 걸어 학습을 쉽게 만든다는 아이디어. 요즘 많이 나오는 문제 의식이죠. (https://arxiv.org/abs/2412.16326, https://arxiv.org/abs/2412.03069)

본질적으로는 VAE와 Diffusion의 학습이 분리되어 있다는 것이 문제이긴 하겠습니다.

This paper addresses the problem of increased difficulty in training generation models when enhancing reconstruction performance by increasing the dimensions of VAE. The key idea is to make training easier by constraining the encoded features from VAE using the outputs of image backbone models. This is a frequently appearing research these days (https://arxiv.org/abs/2412.16326, https://arxiv.org/abs/2412.03069).

Essentially, the root of the problem lies in the separation of VAE and diffusion training.

#vq #vae #diffusion

2.5 Years in Class: A Multimodal Textbook for Vision-Language Pretraining

(Wenqi Zhang, Hang Zhang, Xin Li, Jiashuo Sun, Yongliang Shen, Weiming Lu, Deli Zhao, Yueting Zhuang, Lidong Bing)

Compared to image-text pair data, interleaved corpora enable Vision-Language Models (VLMs) to understand the world more naturally like humans. However, such existing datasets are crawled from webpage, facing challenges like low knowledge density, loose image-text relations, and poor logical coherence between images. On the other hand, the internet hosts vast instructional videos (e.g., online geometry courses) that are widely used by humans to learn foundational subjects, yet these valuable resources remain underexplored in VLM training. In this paper, we introduce a high-quality multimodal textbook corpus with richer foundational knowledge for VLM pretraining. It collects over 2.5 years of instructional videos, totaling 22,000 class hours. We first use an LLM-proposed taxonomy to systematically gather instructional videos. Then we progressively extract and refine visual (keyframes), audio (ASR), and textual knowledge (OCR) from the videos, and organize as an image-text interleaved corpus based on temporal order. Compared to its counterparts, our video-centric textbook offers more coherent context, richer knowledge, and better image-text alignment. Experiments demonstrate its superb pretraining performance, particularly in knowledge- and reasoning-intensive tasks like ScienceQA and MathVista. Moreover, VLMs pre-trained on our textbook exhibit outstanding interleaved context awareness, leveraging visual and textual cues in their few-shot context for task solving. Our code are available at https://github.com/DAMO-NLP-SG/multimodal_textbook.

교육 영상에서 정보를 추출해서 Vision Language 모델을 위한 데이터를 구축한 시도. 굉장히 흥미롭네요. 퀄리티에서 우수하면서도 독특한 데이터를 추출할 수 있는 방법이지 않을까 싶습니다.

This is an interesting attempt to construct data for vision-language models by extracting information from instructional videos. I think it could be an effective method for obtaining high-quality and unique data.

#dataset #vision-language #video

Understanding and Mitigating Bottlenecks of State Space Models through the Lens of Recency and Over-smoothing

(Peihao Wang, Ruisi Cai, Yuehao Wang, Jiajun Zhu, Pragya Srivastava, Zhangyang Wang, Pan Li)

Structured State Space Models (SSMs) have emerged as alternatives to transformers. While SSMs are often regarded as effective in capturing long-sequence dependencies, we rigorously demonstrate that they are inherently limited by strong recency bias. Our empirical studies also reveal that this bias impairs the models' ability to recall distant information and introduces robustness issues. Our scaling experiments then discovered that deeper structures in SSMs can facilitate the learning of long contexts. However, subsequent theoretical analysis reveals that as SSMs increase in depth, they exhibit another inevitable tendency toward over-smoothing, e.g., token representations becoming increasingly indistinguishable. This fundamental dilemma between recency and over-smoothing hinders the scalability of existing SSMs. Inspired by our theoretical findings, we propose to polarize two channels of the state transition matrices in SSMs, setting them to zero and one, respectively, simultaneously addressing recency bias and over-smoothing. Experiments demonstrate that our polarization technique consistently enhances the associative recall accuracy of long-range tokens and unlocks SSMs to benefit further from deeper architectures. All source codes are released at https://github.com/VITA-Group/SSM-Bottleneck.

State Space Model의 Time decay로 인한 Long range dependency 모델링에서의 한계, 그리고 그것을 해결하기 위해 레이어를 쌓았을 때 SSM이 Low pass filter라는 것에 의해 발생하는 Over smoothing, 즉 토큰 임베딩이 서로 비슷해지는 문제에 대한 분석.

이에 대해 Transition 행렬의 최대/최소를 1과 0으로 고정하기 위해 일부를 상수로 고정해버리는 방법을 시도했네요.

Analysis of the limitations of state space models in modeling long-range dependencies due to time decay, and the over-smoothing problem which means token embeddings became similar to each other that occurs when stacking layers to address this issue, because of SSMs act as low-pass filters.

To address these issues, the authors attempted to fix the maximum and minimum values of the transition matrix to 1 and 0 by setting some elements as constants.

#state-space-model

Metadata Conditioning Accelerates Language Model Pre-training

(Tianyu Gao, Alexander Wettig, Luxi He, Yihe Dong, Sadhika Malladi, Danqi Chen)

The vast diversity of styles, domains, and quality levels present in language model pre-training corpora is essential in developing general model capabilities, but efficiently learning and deploying the correct behaviors exemplified in each of these heterogeneous data sources is challenging. To address this, we propose a new method, termed Metadata Conditioning then Cooldown (MeCo), to incorporate additional learning cues during pre-training. MeCo first provides metadata (e.g., URLs like en.wikipedia.org) alongside the text during training and later uses a cooldown phase with only the standard text, thereby enabling the model to function normally even without metadata. MeCo significantly accelerates pre-training across different model scales (600M to 8B parameters) and training sources (C4, RefinedWeb, and DCLM). For instance, a 1.6B language model trained with MeCo matches the downstream task performance of standard pre-training while using 33% less data. Additionally, MeCo enables us to steer language models by conditioning the inference prompt on either real or fabricated metadata that encodes the desired properties of the output: for example, prepending wikipedia.org to reduce harmful generations or factquizmaster.com (fabricated) to improve common knowledge task performance. We also demonstrate that MeCo is compatible with different types of metadata, such as model-generated topics. MeCo is remarkably simple, adds no computational overhead, and demonstrates promise in producing more capable and steerable language models.

프리트레이닝 문서 앞에 문서 URL을 붙이는 방법으로 학습 속도 가속. 물론 문서 URL이 주어지지 않으면 성능이 깎이기에 학습 후반에는 URL 없이 학습을 시킵니다.

Accelerating pre-training speed by prepending URLs to documents. Naturally, performance decreases when URLs are not provided, so the model is trained without URLs in the later stages of training.

#pre-training