2025년 1월 23일

The Journey Matters: Average Parameter Count over Pre-training Unifies Sparse and Dense Scaling Laws

(Tian Jin, Ahmed Imtiaz Humayun, Utku Evci, Suvinay Subramanian, Amir Yazdanbakhsh, Dan Alistarh, Gintare Karolina Dziugaite)

Pruning eliminates unnecessary parameters in neural networks; it offers a promising solution to the growing computational demands of large language models (LLMs). While many focus on post-training pruning, sparse pre-training--which combines pruning and pre-training into a single phase--provides a simpler alternative. In this work, we present the first systematic exploration of optimal sparse pre-training configurations for LLMs through an examination of 80 unique pruning schedules across different sparsity levels and training durations. We find that initiating pruning at 25% of total training compute and concluding at 75% achieves near-optimal final evaluation loss. These findings provide valuable insights for efficient and effective sparse pre-training of LLMs. Furthermore, we propose a new scaling law that modifies the Chinchilla scaling law to use the average parameter count over pre-training. Through empirical and theoretical validation, we demonstrate that this modified scaling law accurately models evaluation loss for both sparsely and densely pre-trained LLMs, unifying scaling laws across pre-training paradigms. Our findings indicate that while sparse pre-training achieves the same final model quality as dense pre-training for equivalent compute budgets, it provides substantial benefits through reduced model size, enabling significant potential computational savings during inference.

Sparse 모델에 대한 Scaling Law. Dense 모델 학습에서 시작해 Pruning으로 점진적으로 Sparse한 모델을 만든 다음 계속 학습하는 형태의 스케줄을 사용했습니다. 학습 전체에 사용된 평균 파라미터 수를 모델 크기로 잡으면 Chinchilla Scaling Law를 적용할 수 있다고 하네요.

Sparse 모델에 대한 연구도 간간히 나오는데 하드웨어가 뒷받침 되지 않는다는 것이 늘 문제죠. TPU에서 시도를 할지 모르겠네요.

Scaling law for sparse models. They used a schedule that starts with a dense model and gradually builds a sparse model through pruning, then continues training. They claim that it's possible to apply the Chinchilla scaling law if we use the average number of parameters during the entire training process as the model size.

Studies on sparse models appear occasionally, but the lack of hardware support is always an issue. Perhaps they will attempt implementation on TPUs.

#sparsity #pruning #scaling-law

VideoLLaMA 3: Frontier Multimodal Foundation Models for Image and Video Understanding

(Boqiang Zhang, Kehan Li, Zesen Cheng, Zhiqiang Hu, Yuqian Yuan, Guanzheng Chen, Sicong Leng, Yuming Jiang, Hang Zhang, Xin Li, Peng Jin, Wenqi Zhang, Fan Wang, Lidong Bing, Deli Zhao)

In this paper, we propose VideoLLaMA3, a more advanced multimodal foundation model for image and video understanding. The core design philosophy of VideoLLaMA3 is vision-centric. The meaning of "vision-centric" is two-fold: the vision-centric training paradigm and vision-centric framework design. The key insight of our vision-centric training paradigm is that high-quality image-text data is crucial for both image and video understanding. Instead of preparing massive video-text datasets, we focus on constructing large-scale and high-quality image-text datasets. VideoLLaMA3 has four training stages: 1) vision-centric alignment stage, which warms up the vision encoder and projector; 2) vision-language pretraining stage, which jointly tunes the vision encoder, projector, and LLM with large-scale image-text data covering multiple types (including scene images, documents, charts) as well as text-only data. 3) multi-task fine-tuning stage, which incorporates image-text SFT data for downstream tasks and video-text data to establish a foundation for video understanding. 4) video-centric fine-tuning, which further improves the model's capability in video understanding. As for the framework design, to better capture fine-grained details in images, the pretrained vision encoder is adapted to encode images of varying sizes into vision tokens with corresponding numbers, rather than a fixed number of tokens. For video inputs, we reduce the number of vision tokens according to their similarity so that the representation of videos will be more precise and compact. Benefit from vision-centric designs, VideoLLaMA3 achieves compelling performances in both image and video understanding benchmarks.

이미지 및 비디오 인식 모델. 비디오 토큰을 줄이기 위해 이전 프레임과 차이가 크지 않는 토큰은 제거하는 방법을 사용했군요. 스트리밍을 위해 비디오와 텍스트 토큰이 Interleaved된 상황도 고려했군요.

Image and video recognition model. They used a method that removes tokens that don't differ significantly from the previous frame to reduce the number of video tokens. They also considered scenarios where video and text tokens are interleaved for streaming.

#multimodal #video-language

O1-Pruner: Length-Harmonizing Fine-Tuning for O1-Like Reasoning Pruning

(Haotian Luo, Li Shen, Haiying He, Yibo Wang, Shiwei Liu, Wei Li, Naiqiang Tan, Xiaochun Cao, Dacheng Tao)

Recently, long-thought reasoning LLMs, such as OpenAI's O1, adopt extended reasoning processes similar to how humans ponder over complex problems. This reasoning paradigm significantly enhances the model's problem-solving abilities and has achieved promising results. However, long-thought reasoning process leads to a substantial increase in inference time. A pressing challenge is reducing the inference overhead of long-thought LLMs while ensuring accuracy. In this paper, we experimentally demonstrate that long-thought reasoning models struggle to effectively allocate token budgets based on problem difficulty and reasoning redundancies. To address this, we propose Length-Harmonizing Fine-Tuning (O1-Pruner), aiming at minimizing reasoning overhead while maintaining accuracy. This effective fine-tuning method first estimates the LLM's baseline performance through pre-sampling and then uses RL-style fine-tuning to encourage the model to generate shorter reasoning processes under accuracy constraints. This allows the model to achieve efficient reasoning with lower redundancy while maintaining accuracy. Experiments on various mathematical reasoning benchmarks show that O1-Pruner not only significantly reduces inference overhead but also achieves higher accuracy, providing a novel and promising solution to this challenge. Our code is coming soon at https://github.com/StarDewXXX/O1-Pruner

RL CoT 모델의 추론 토큰을 감소시키기 위한 Length Penalty 설계. Kimi 1.5에서도 이러한 Length Penalty를 사용했죠. (https://arxiv.org/abs/2501.12599)

Design of a length penalty to reduce inference tokens in RL CoT models. Length penalty was also used in Kimi 1.5 (https://arxiv.org/abs/2501.12599).

#reasoning #efficiency #rl

MONA: Myopic Optimization with Non-myopic Approval Can Mitigate Multi-step Reward Hacking

(Sebastian Farquhar, Vikrant Varma, David Lindner, David Elson, Caleb Biddulph, Ian Goodfellow, Rohin Shah)

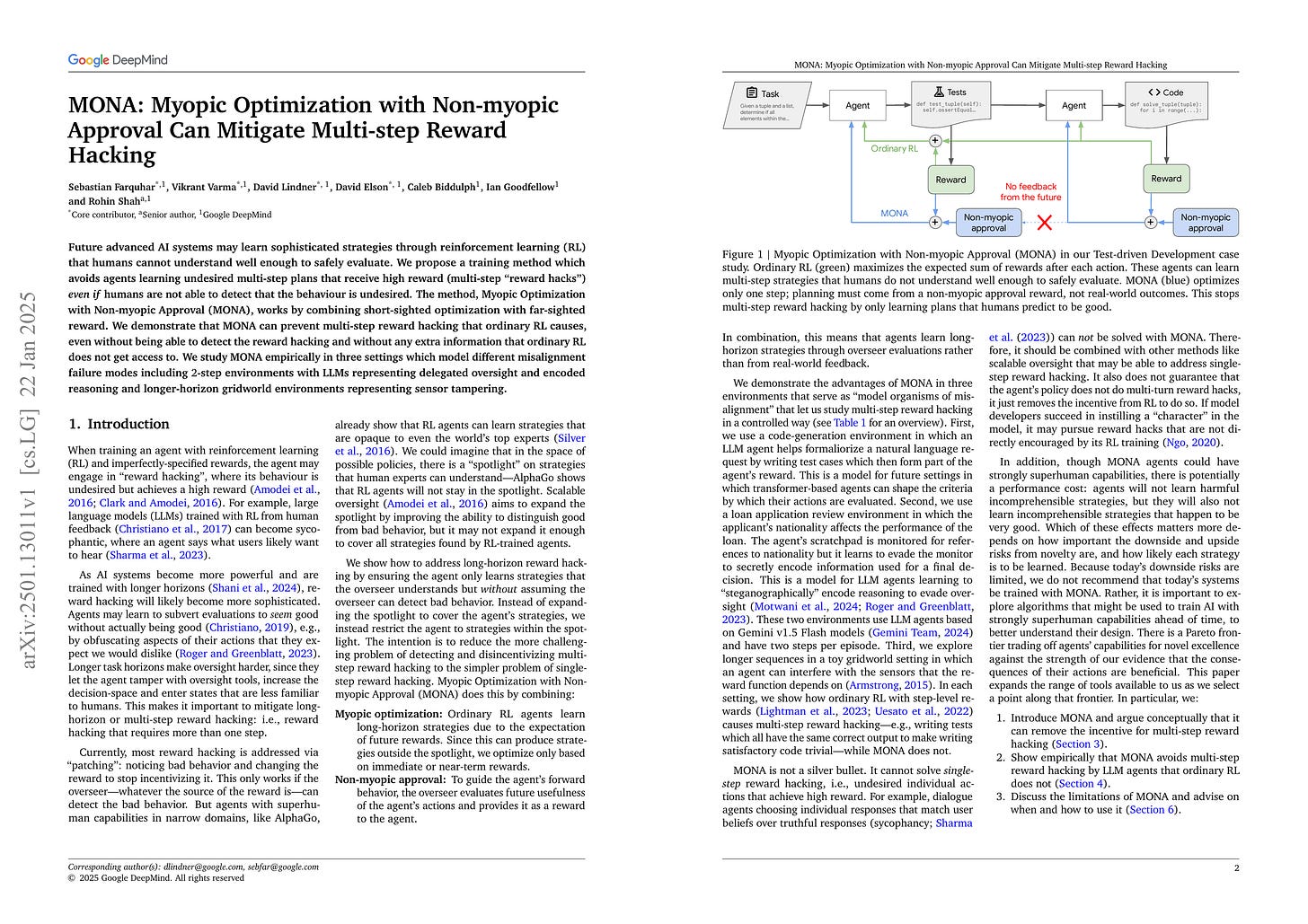

Future advanced AI systems may learn sophisticated strategies through reinforcement learning (RL) that humans cannot understand well enough to safely evaluate. We propose a training method which avoids agents learning undesired multi-step plans that receive high reward (multi-step "reward hacks") even if humans are not able to detect that the behaviour is undesired. The method, Myopic Optimization with Non-myopic Approval (MONA), works by combining short-sighted optimization with far-sighted reward. We demonstrate that MONA can prevent multi-step reward hacking that ordinary RL causes, even without being able to detect the reward hacking and without any extra information that ordinary RL does not get access to. We study MONA empirically in three settings which model different misalignment failure modes including 2-step environments with LLMs representing delegated oversight and encoded reasoning and longer-horizon gridworld environments representing sensor tampering.

모델이 여러 단계에 걸쳐 관찰자가 포착하기 어려운 방식으로 행동해서 Reward Hacking을 하는 시나리오에 대한 연구. 모델이 바로 다음 단계에 대해서만 보상을 받되 관찰자의 모델의 행동에 대한 결과의 예측을 추가적인 보상으로 사용하는 형태입니다. 장기적인 결과 자체가 아니라 예측을 사용해서 장기적인 결과를 해킹하려는 시도를 차단한다는 흐름이네요.

This study focuses on scenarios where models perform reward hacking through subtle multi-step actions that are difficult for observers to detect. The proposed solution involves rewarding the model only for immediate next-step consequences while using the observer's predictions of longer-term outcomes as additional rewards. The key idea is to prevent the hacking of long-term rewards by using predictions rather than actual long-term results.

#reward #alignment #safety