2025년 1월 22일

Advancing Language Model Reasoning through Reinforcement Learning and Inference Scaling

(Zhenyu Hou, Xin Lv, Rui Lu, Jiajie Zhang, Yujiang Li, Zijun Yao, Juanzi Li, Jie Tang, Yuxiao Dong)

Large language models (LLMs) have demonstrated remarkable capabilities in complex reasoning tasks. However, existing approaches mainly rely on imitation learning and struggle to achieve effective test-time scaling. While reinforcement learning (RL) holds promise for enabling self-exploration and learning from feedback, recent attempts yield only modest improvements in complex reasoning. In this paper, we present T1 to scale RL by encouraging exploration and understand inference scaling. We first initialize the LLM using synthesized chain-of-thought data that integrates trial-and-error and self-verification. To scale RL training, we promote increased sampling diversity through oversampling. We further employ an entropy bonus as an auxiliary loss, alongside a dynamic anchor for regularization to facilitate reward optimization. We demonstrate that T1 with open LLMs as its base exhibits inference scaling behavior and achieves superior performance on challenging math reasoning benchmarks. For example, T1 with Qwen2.5-32B as the base model outperforms the recent Qwen QwQ-32B-Preview model on MATH500, AIME2024, and Omni-math-500. More importantly, we present a simple strategy to examine inference scaling, where increased inference budgets directly lead to T1's better performance without any additional verification. We will open-source the T1 models and the data used to train them at https://github.com/THUDM/T1.

정답 기반 RL CoT. 높은 Temperature와 엔트로피 보너스를 사용해서 더 다양한 샘플을 많이 생성하는 것이 효과적이라는 결론. R1이나 Kimi 1.5도 방법상 여러 샘플을 사용하긴 하죠.

RL CoT based on answer correctness. The conclusion is that generating more diverse samples using high temperature and entropy bonus is effective. R1 and Kimi 1.5 also use multiple samples per prompt due to their methods.

#rl #reasoning

Parameters vs FLOPs: Scaling Laws for Optimal Sparsity for Mixture-of-Experts Language Models

(Samira Abnar, Harshay Shah, Dan Busbridge, Alaaeldin Mohamed Elnouby Ali, Josh Susskind, Vimal Thilak)

Scaling the capacity of language models has consistently proven to be a reliable approach for improving performance and unlocking new capabilities. Capacity can be primarily defined by two dimensions: the number of model parameters and the compute per example. While scaling typically involves increasing both, the precise interplay between these factors and their combined contribution to overall capacity remains not fully understood. We explore this relationship in the context of sparse Mixture-of-Expert models (MoEs), which allow scaling the number of parameters without proportionally increasing the FLOPs per example. We investigate how varying the sparsity level, i.e., the ratio of non-active to total parameters, affects model performance in terms of both pretraining and downstream performance. We find that under different constraints (e.g. parameter size and total training compute), there is an optimal level of sparsity that improves both training efficiency and model performance. These results provide a better understanding of the impact of sparsity in scaling laws for MoEs and complement existing works in this area, offering insights for designing more efficient architectures.

MoE의 Sparsity에 대한 Scaling Law. 모델 크기에 따라 Sparsity가 증가하는 것이 Compute Optimal이라는 결론입니다. 사실 최근 MoE 모델들은 이 논문에서 정의한 Sparsity가 (Routed Expert의 수) 꽤 높은 편이긴 하죠. 물론 FLOPs만 고려했을 때의 이야기이긴 합니다.

Scaling law for sparsity in MoE. The study concludes that increasing sparsity as model size grows is computationally optimal. In fact, recent MoE models have quite high sparsity as defined in this paper (in terms of the number of routed experts). Of course, this conclusion is valid when considering FLOPs alone.

#moe #scaling-law #sparsity

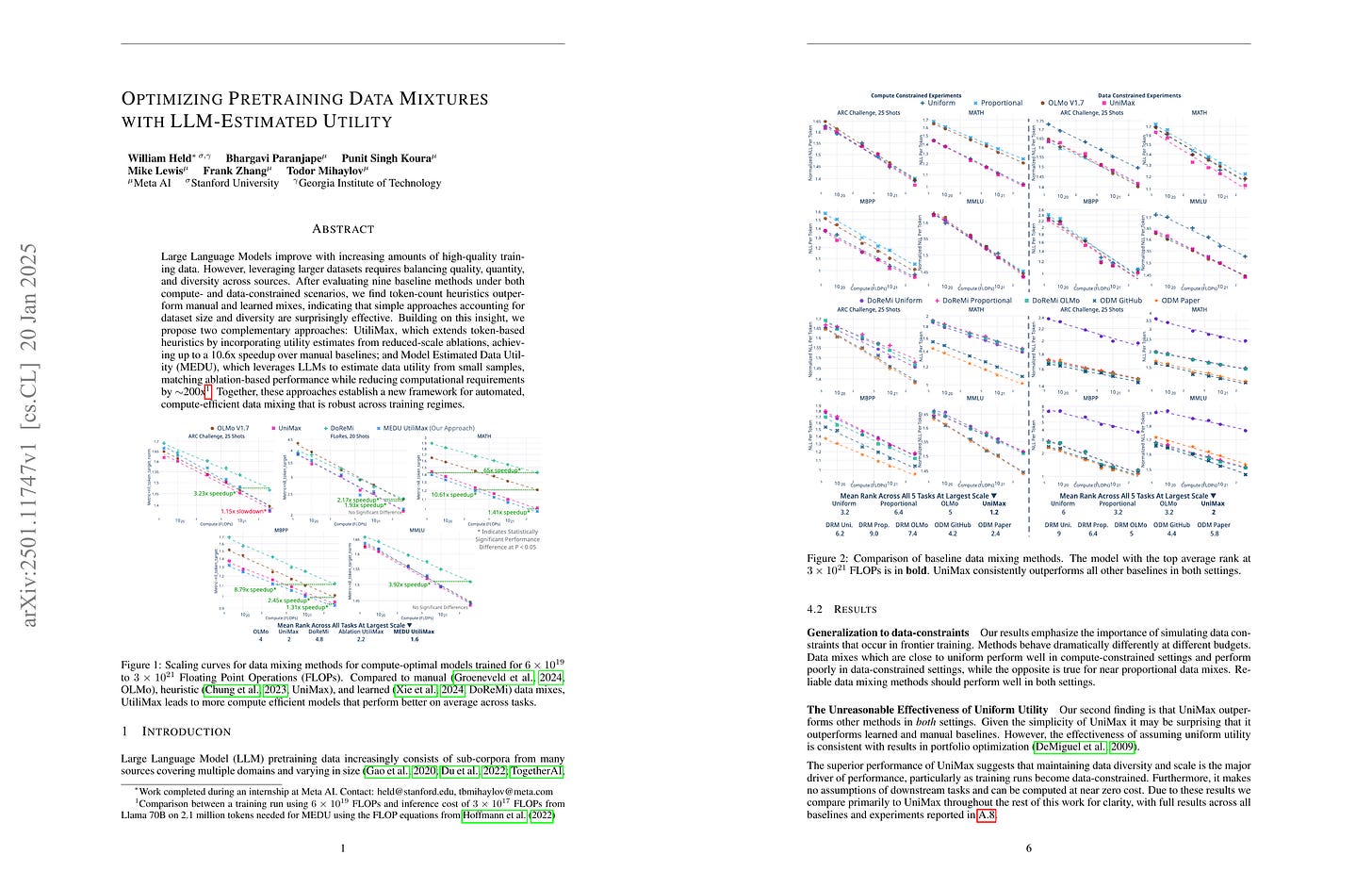

Optimizing Pretraining Data Mixtures with LLM-Estimated Utility

(William Held, Bhargavi Paranjape, Punit Singh Koura, Mike Lewis, Frank Zhang, Todor Mihaylov)

Large Language Models improve with increasing amounts of high-quality training data. However, leveraging larger datasets requires balancing quality, quantity, and diversity across sources. After evaluating nine baseline methods under both compute- and data-constrained scenarios, we find token-count heuristics outperform manual and learned mixes, indicating that simple approaches accounting for dataset size and diversity are surprisingly effective. Building on this insight, we propose two complementary approaches: UtiliMax, which extends token-based heuristics by incorporating utility estimates from reduced-scale ablations, achieving up to a 10.6x speedup over manual baselines; and Model Estimated Data Utility (MEDU), which leverages LLMs to estimate data utility from small samples, matching ablation-based performance while reducing computational requirements by \sim200x. Together, these approaches establish a new framework for automated, compute-efficient data mixing that is robust across training regimes.

프리트레이닝 데이터셋 비율 결정 방법. 흥미롭게도 언어 비율 설정을 위해 개발된 방법인 UniMax가 (https://arxiv.org/abs/2304.09151) 꽤 좋은 방법이었다고 하네요. 그래서 UniMax에 평가 데이터셋에 대한 Loss를 Utility로 결합한 방법을 설계했습니다.

A method for determining dataset ratios for pretraining. Interestingly, UniMax, which was developed for specifying language ratios (https://arxiv.org/abs/2304.09151), apparently worked quite well. Based on this, they designed a method that combines UniMax with the loss on evaluation datasets as a measure of utility.

#pretraining #dataset

Demons in the Detail: On Implementing Load Balancing Loss for Training Specialized Mixture-of-Expert Models

(Zihan Qiu, Zeyu Huang, Bo Zheng, Kaiyue Wen, Zekun Wang, Rui Men, Ivan Titov, Dayiheng Liu, Jingren Zhou, Junyang Lin)

This paper revisits the implementation of Load-balancing Loss (LBL) when training Mixture-of-Experts (MoEs) models. Specifically, LBL for MoEs is defined as N_E sum_i=1^N_E f_i p_i, where N_E is the total number of experts, f_i represents the frequency of expert i being selected, and p_i denotes the average gating score of the expert i. Existing MoE training frameworks usually employ the parallel training strategy so that f_i and the LBL are calculated within a micro-batch and then averaged across parallel groups. In essence, a micro-batch for training billion-scale LLMs normally contains very few sequences. So, the micro-batch LBL is almost at the sequence level, and the router is pushed to distribute the token evenly within each sequence. Under this strict constraint, even tokens from a domain-specific sequence (e.g., code) are uniformly routed to all experts, thereby inhibiting expert specialization. In this work, we propose calculating LBL using a global-batch to loose this constraint. Because a global-batch contains much more diverse sequences than a micro-batch, which will encourage load balance at the corpus level. Specifically, we introduce an extra communication step to synchronize f_i across micro-batches and then use it to calculate the LBL. Through experiments on training MoEs-based LLMs (up to 42.8B total parameters and 400B tokens), we surprisingly find that the global-batch LBL strategy yields excellent performance gains in both pre-training perplexity and downstream tasks. Our analysis reveals that the global-batch LBL also greatly improves the domain specialization of MoE experts.

분산 학습으로 인해 마이크로 배치 크기가 줄어든 상태에서 Load Balancing Loss를 마이크로 배치 내에서 계산하면 사실상 시퀀스 내에서 Expert가 균등하게 할당되도록 강제하는 효과가 발생한다는 발견. 따라서 전체 배치에 대해서 Load Balancing을 하자는 결론. 이건 일종의 버그라고 해야겠네요. 물론 DeepSeek V3에서 사용한 것처럼 시퀀스 내의 Load Balancing도 유용하긴 하겠습니다만 명시적인 통제 하에서 해야겠죠.

This paper discovers that when load balancing loss is calculated within micro-batches (which have become smaller due to distributed training), it effectively forces experts to be uniformly assigned within sequences. Therefore, the conclusion is to perform load balancing across the global batch. This can be considered a kind of bug. While intra-sequence load balancing, as used in DeepSeek V3, can be useful, it should be done under explicit control.

#moe #parallelism