January 21, 2025

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

(DeepSeek-AI)

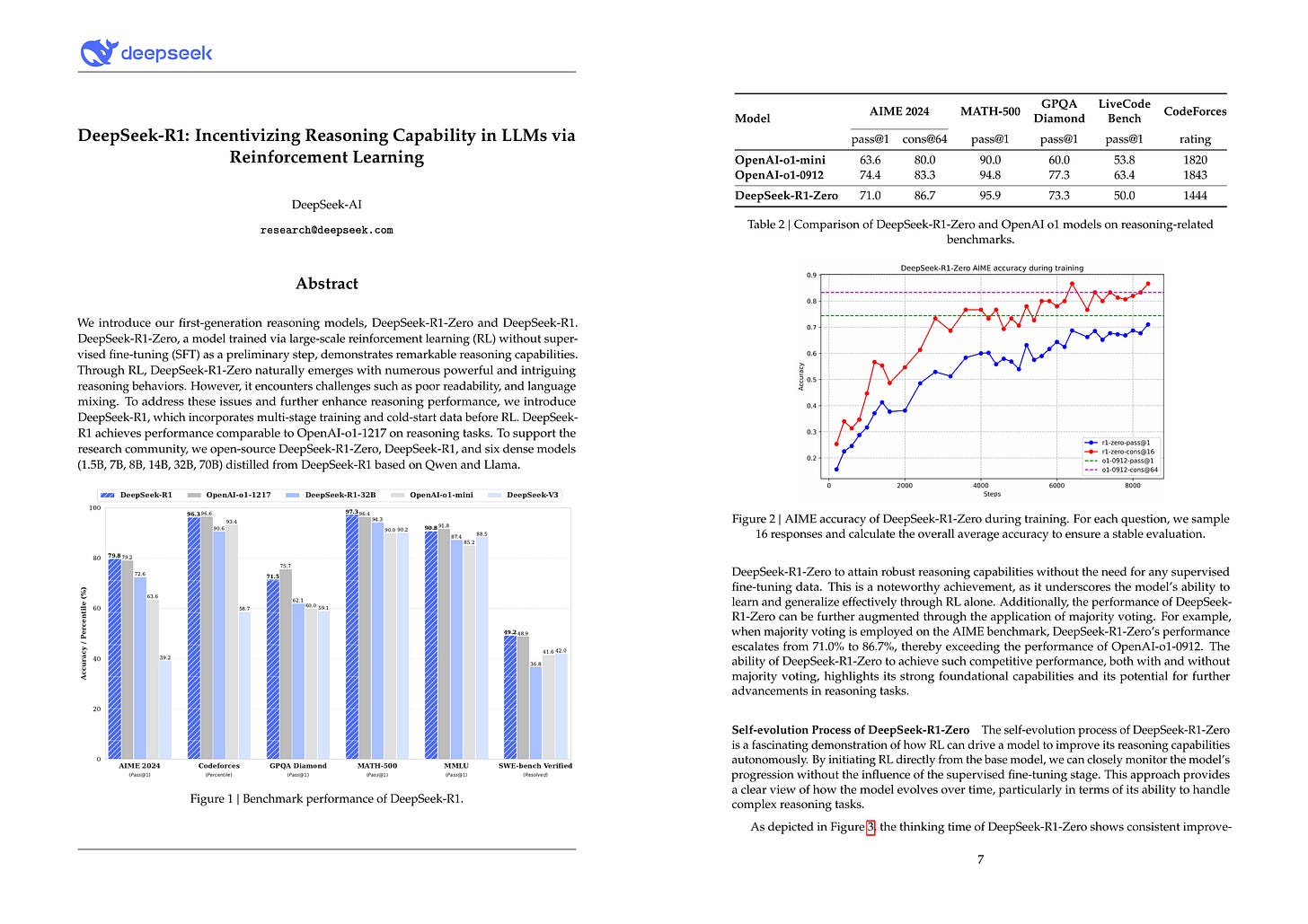

We introduce our first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, demonstrates remarkable reasoning capabilities. Through RL, DeepSeek-R1-Zero naturally emerges with numerous powerful and intriguing reasoning behaviors. However, it encounters challenges such as poor readability, and language mixing. To address these issues and further enhance reasoning performance, we introduce DeepSeek-R1, which incorporates multi-stage training and cold-start data before RL. DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks. To support the research community, we open-source DeepSeek-R1-Zero, DeepSeek-R1, and six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 based on Qwen and Llama.

DeepSeek's reasoning model. It is trained with GRPO on rule-based (answer-correctness) rewards, with no PRM, ORM, or MCTS at all. This is similar to RLVR in TÜLU 3 (https://arxiv.org/abs/2411.15124). They report that new abilities emerged from this training, such as the model re-examining its own earlier reasoning (the "aha moment"). Nathan Lambert mentioned that with RLVR, outcome rewards alone can elicit new abilities, and this looks like an example of that.
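To make the reward setup concrete, here is a minimal sketch of a correctness-only reward combined with GRPO-style group-relative advantages, where each sampled answer is scored against the other samples for the same prompt. The `\boxed{...}` answer convention, the function names, and the use of the population standard deviation are all assumptions for illustration, not details taken from the paper.

```python
import re
import statistics


def correctness_reward(completion: str, reference: str) -> float:
    """Hypothetical rule-based reward: 1.0 if the last \\boxed{...} answer
    matches the reference string exactly, otherwise 0.0."""
    answers = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    if not answers:
        return 0.0
    return 1.0 if answers[-1].strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward by the mean and std of its own sampling group.
    Assumption: population std plus a small eps to avoid division by zero."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# One prompt, a group of four sampled completions (toy strings).
completions = [
    "... so the answer is \\boxed{42}",
    "... therefore \\boxed{41}",
    "wait, let me recheck ... \\boxed{42}",
    "no final answer given",
]
rewards = [correctness_reward(c, "42") for c in completions]
advantages = group_relative_advantages(rewards)
```

No learned reward model or value function appears anywhere: the only signal is whether the final answer is right, and the baseline comes from the group itself. If every sample in a group gets the same reward, all advantages are zero and that prompt contributes no gradient.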

After confirming that the most basic form of RL is enough to impart reasoning ability, they moved on to building a more general model. First, they used a small amount of CoT data so that the model generates clean CoT, then ran correctness-based reasoning RL. Next, they built an SFT dataset by combining existing SFT data with reasoning data obtained through rejection sampling, filtered by answer correctness or by a generative reward model. Finally, they ran RLHF and reasoning RL on top of the model trained on this data.
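The rejection-sampling step can be sketched roughly as follows: draw several completions per prompt and keep only those an acceptance check approves. Everything here is hypothetical scaffolding (`rejection_sample`, `toy_generate`, `toy_accept`, and the `Answer:` convention); in practice `generate` would call the RL-trained model and `accept` would be either a correctness rule or a judgment from a generative reward model.

```python
from itertools import cycle
from typing import Callable

Sample = dict[str, str]


def rejection_sample(
    prompt: str,
    reference: str,
    generate: Callable[[str], str],
    accept: Callable[[str, str], bool],
    num_samples: int = 4,
) -> list[Sample]:
    """Sample num_samples completions and keep only the accepted ones."""
    kept: list[Sample] = []
    for _ in range(num_samples):
        completion = generate(prompt)
        if accept(completion, reference):
            kept.append({"prompt": prompt, "completion": completion})
    return kept


# Toy stand-ins so the sketch runs without a model.
_canned = cycle(["2 + 2 = 4. Answer: 4", "2 + 2 = 5. Answer: 5"])


def toy_generate(prompt: str) -> str:
    """Pretend model: alternates between a correct and a wrong completion."""
    return next(_canned)


def toy_accept(completion: str, reference: str) -> bool:
    """Rule-based check: compare the text after the last 'Answer:' marker."""
    return completion.rsplit("Answer:", 1)[-1].strip() == reference


sft_rows = rejection_sample("What is 2 + 2?", "4", toy_generate, toy_accept)
```

The surviving rows would then be merged with the existing SFT data. Passing `accept` in as a callable lets the same loop cover both cases: verifiable problems use a rule, and other prompts can swap in a reward-model-based check.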

They also used this SFT data to distill into smaller models. Several recent cases have shown that reasoning ability can be imparted through distillation. For small models, they report that distillation gave better results than training with RL directly, which suggests that RL needs a sufficiently strong base model to pay off.

Because distillation gets results far more easily, interest in it will likely stay high, as it has so far. However, as the paper points out, going further will still require stronger base models and large-scale RL.

#rl #reasoning

Kimi k1.5: Scaling Reinforcement Learning with LLMs

(Kimi Team)

Language model pretraining with next token prediction has proved effective for scaling compute but is limited to the amount of available training data. Scaling reinforcement learning (RL) unlocks a new axis for the continued improvement of artificial intelligence, with the promise that large language models (LLMs) can scale their training data by learning to explore with rewards. However, prior published work has not produced competitive results. In light of this, we report on the training practice of Kimi k1.5, our latest multi-modal LLM trained with RL, including its RL training techniques, multi-modal data recipes, and infrastructure optimization. Long context scaling and improved policy optimization methods are key ingredients of our approach, which establishes a simplistic, effective RL framework without relying on more complex techniques such as Monte Carlo tree search, value functions, and process reward models. Notably, our system achieves state-of-the-art reasoning performance across multiple benchmarks and modalities—e.g., 77.5 on AIME, 96.2 on MATH 500, 94-th percentile on Codeforces, 74.9 on MathVista—matching OpenAI’s o1. Moreover, we present effective long2short methods that use long-CoT techniques to improve short-CoT models, yielding state-of-the-art short-CoT reasoning results—e.g., 60.8 on AIME, 94.6 on MATH500, 47.3 on LiveCodeBench—outperforming existing short-CoT models such as GPT-4o and Claude Sonnet 3.5 by a large margin (up to +550%). The service of Kimi k1.5 on kimi.ai will be available soon.

Moonshot's RL CoT model. Like DeepSeek, they use a correctness-based RL method that does not rely on PRMs or tree search. This paper discloses somewhat more about the data they used and related details. They also trained the model on multimodal problems.

Approaches to reasoning problems have long been drawn to tree search and PRMs. Both impose a fixed structure on the CoT, and that structure likely brings limitations of its own. Of course, we can't say for certain that these two methods don't help at all. Still, the simpler method should be tried first.

#reasoning #rl #multimodal