2025년 1월 13일

Enabling Scalable Oversight via Self-Evolving Critic

(Zhengyang Tang, Ziniu Li, Zhenyang Xiao, Tian Ding, Ruoyu Sun, Benyou Wang, Dayiheng Liu, Fei Huang, Tianyu Liu, Bowen Yu, Junyang Lin)

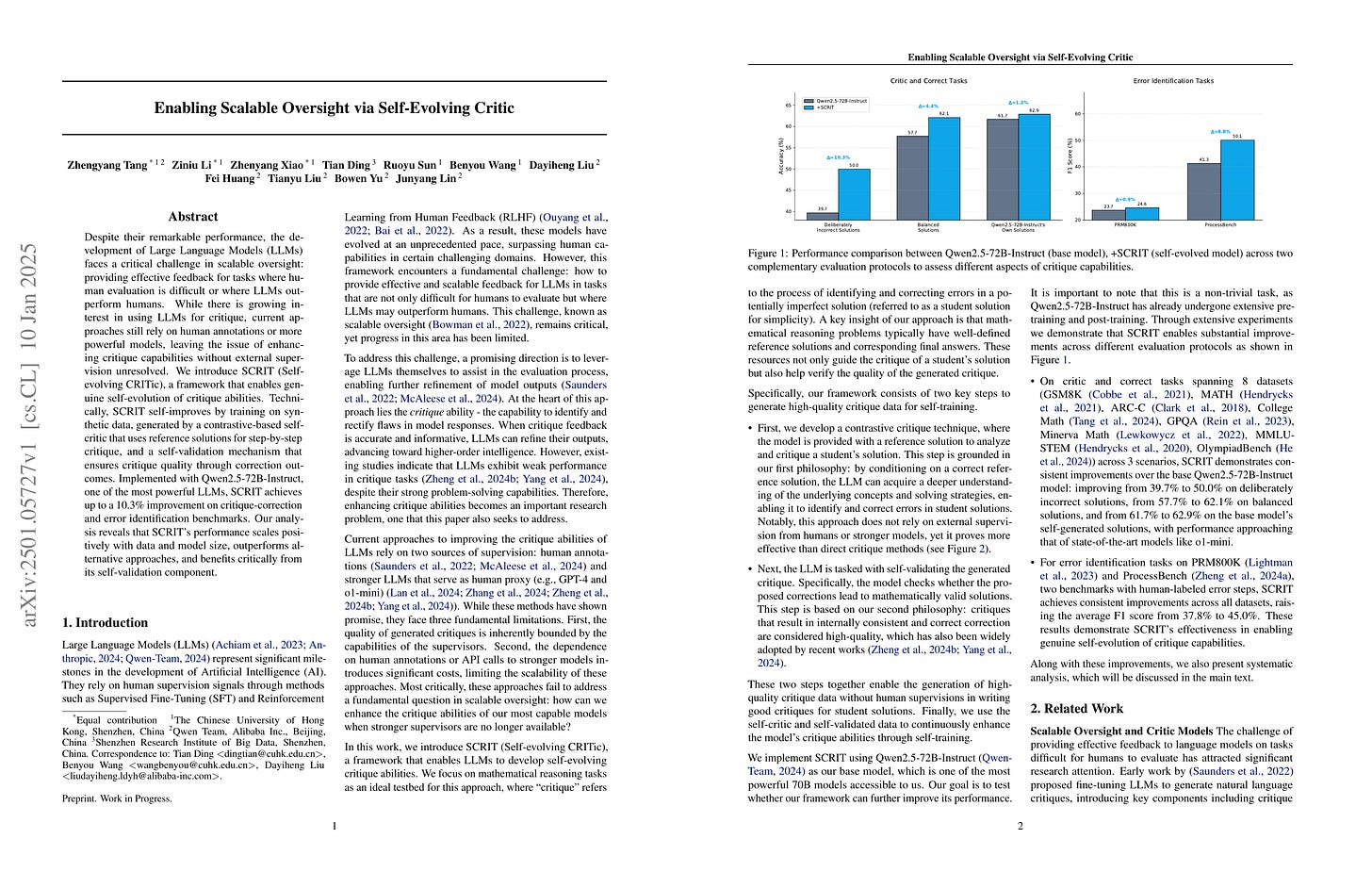

Despite their remarkable performance, the development of Large Language Models (LLMs) faces a critical challenge in scalable oversight: providing effective feedback for tasks where human evaluation is difficult or where LLMs outperform humans. While there is growing interest in using LLMs for critique, current approaches still rely on human annotations or more powerful models, leaving the issue of enhancing critique capabilities without external supervision unresolved. We introduce SCRIT (Self-evolving CRITic), a framework that enables genuine self-evolution of critique abilities. Technically, SCRIT self-improves by training on synthetic data, generated by a contrastive-based self-critic that uses reference solutions for step-by-step critique, and a self-validation mechanism that ensures critique quality through correction outcomes. Implemented with Qwen2.5-72B-Instruct, one of the most powerful LLMs, SCRIT achieves up to a 10.3% improvement on critique-correction and error identification benchmarks. Our analysis reveals that SCRIT's performance scales positively with data and model size, outperforms alternative approaches, and benefits critically from its self-validation component.

Critic 모델을 학습하기 위한 방법. 정답인 응답을 레퍼런스로 삼고 레퍼런스를 기준으로 응답을 평가하게 하는 방법입니다.

A method for training a critic model. This approach uses correct responses as reference answers and let the critic to evaluate other responses in comparison to these references.

#reward-model

Scalable Vision Language Model Training via High Quality Data Curation

(Hongyuan Dong, Zijian Kang, Weijie Yin, Xiao Liang, Chao Feng, Jiao Ran)

In this paper, we introduce SAIL-VL (ScAlable Vision Language Model TraIning via High QuaLity Data Curation), an open-source vision language model (VLM) of state-of-the-art (SOTA) performance with 2B parameters. We introduce three key improvements that contribute to SAIL-VL's leading performance: (1) Scalable high-quality visual understanding data construction: We implement a visual understanding data construction pipeline, which enables hundred-million-scale high-quality recaption data annotation. Equipped with this pipeline, we curate SAIL-Caption, a large-scale caption dataset with large quantity and the highest data quality compared with opensource caption datasets. (2) Scalable Pretraining with High-Quality Visual Understanding Data: We scale SAIL-VL's pretraining budget up to 131B tokens and show that even a 2B VLM benefits from scaled up training data sizes, exhibiting expected data size scaling laws in visual understanding and instruction following performance. (3) Scalable SFT via quantity and quality scaling: We introduce general guidance for instruction data curation to scale up instruction data continuously, allowing us to construct a large SFT dataset with the highest quality. To further improve SAIL-VL's performance, we propose quality scaling, a multi-stage training recipe with curriculum learning, to improve model performance scaling curves w.r.t. data sizes from logarithmic to be near-linear. SAIL-VL obtains the highest average score in 19 commonly used benchmarks in our evaluation and achieves top1 performance among VLMs of comparable sizes on OpenCompass (https://rank.opencompass.org.cn/leaderboard-multimodal). We release our SAIL-VL-2B model at HuggingFace (https://huggingface.co/BytedanceDouyinContent/SAIL-VL-2B).

Vision Language 프리트레이닝과 SFT의 규모 증대를 통한 성능 향상. 프리트레이닝은 Recaptioning을 통한 데이터 구축이 중심이고 SFT는 각 데이터소스에 대해서 소규모 실험으로 퀄리티를 평가해나가는 것이 중심이군요.

Improving performance of vision-language models through scaling up pretraining and SFT. The pretraining primarily focuses on data construction through recaptioning, while the SFT approach centers on evaluating the quality of each data source through small-scale experiments.

#vision-language #captioning #scaling-law