September 19, 2024

Moshi: a speech-text foundation model for real-time dialogue

(Alexandre Défossez, Laurent Mazaré, Manu Orsini, Amélie Royer, Patrick Pérez, Hervé Jégou, Edouard Grave, Neil Zeghidour)

We introduce Moshi, a speech-text foundation model and full-duplex spoken dialogue framework. Current systems for spoken dialogue rely on pipelines of independent components, namely voice activity detection, speech recognition, textual dialogue and text-to-speech. Such frameworks cannot emulate the experience of real conversations. First, their complexity induces a latency of several seconds between interactions. Second, text being the intermediate modality for dialogue, non-linguistic information that modifies meaning— such as emotion or non-speech sounds— is lost in the interaction. Finally, they rely on a segmentation into speaker turns, which does not take into account overlapping speech, interruptions and interjections. Moshi solves these independent issues altogether by casting spoken dialogue as speech-to-speech generation. Starting from a text language model backbone, Moshi generates speech as tokens from the residual quantizer of a neural audio codec, while modeling separately its own speech and that of the user into parallel streams. This allows for the removal of explicit speaker turns, and the modeling of arbitrary conversational dynamics. We moreover extend the hierarchical semantic-to-acoustic token generation of previous work to first predict time-aligned text tokens as a prefix to audio tokens. Not only this “Inner Monologue” method significantly improves the linguistic quality of generated speech, but we also illustrate how it can provide streaming speech recognition and text-to-speech. Our resulting model is the first real-time full-duplex spoken large language model, with a theoretical latency of 160ms, 200ms in practice, and is available at github.com/kyutai-labs/moshi.

Kyutai (https://kyutai.org/) has released its speech-text model, along with a technical report.

It consists of Helium, a language model, and Mimi, an audio codec model.

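Helium uses a setup similar to Llama. Starting from WET files, they apply line-level dedup, then fuzzy dedup by training fastText to classify duplicate vs. non-duplicate. That part is a bit unusual. On top of that comes line-based quality filtering with a fastText classifier over 9 categories, which is also somewhat unusual.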
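To make the line-level dedup step concrete, here is a minimal sketch. It is not Kyutai's pipeline: the normalization (strip and lowercase), the SHA-1 hashing, and the keep-first-occurrence policy are all assumptions chosen for illustration, and the fastText-based fuzzy dedup and quality filtering are not shown.

```python
import hashlib


def line_dedup(documents):
    """Keep only the first occurrence of each line across the whole corpus.

    Assumptions (not from the report): lines are compared after stripping
    whitespace and lowercasing, and blank lines are dropped.
    """
    seen = set()
    cleaned = []
    for doc in documents:
        kept = []
        for line in doc.splitlines():
            normalized = line.strip().lower()
            if not normalized:
                continue
            digest = hashlib.sha1(normalized.encode("utf-8")).hexdigest()
            if digest in seen:
                continue  # this exact line already appeared somewhere earlier
            seen.add(digest)
            kept.append(line)
        cleaned.append("\n".join(kept))
    return cleaned
```

Repeated navigation bars or footers that show up on every page are the typical target: they survive once and disappear from every later document.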

The audio codec model Mimi is an RQ-VAE based autoregressive model, with semantic information distilled from WavLM.
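The core idea behind residual quantization can be sketched in a few lines of plain Python. This is a generic illustration of residual vector quantization, not Mimi's implementation: the codebooks here are tiny hand-written lists, whereas a real codec learns them and quantizes encoder outputs frame by frame.

```python
def nearest(codebook, vector):
    """Index of the codebook entry closest to vector (squared Euclidean distance)."""
    def dist(entry):
        return sum((a - b) ** 2 for a, b in zip(entry, vector))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))


def rvq_encode(vector, codebooks):
    """Quantize with a stack of codebooks; each level encodes the previous residual."""
    residual = list(vector)
    codes = []
    for codebook in codebooks:
        index = nearest(codebook, residual)
        codes.append(index)
        # Whatever this level failed to capture is passed on to the next level.
        residual = [r - c for r, c in zip(residual, codebook[index])]
    return codes


def rvq_decode(codes, codebooks):
    """Reconstruct by summing the selected entry from every level."""
    out = [0.0] * len(codebooks[0][0])
    for index, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[index])]
    return out
```

Each frame thus becomes one token per codebook level, which is why a model on top of the codec has to predict several tokens per time step.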

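Next, they attach a Depth Transformer to Helium, turning it into an RQ-Transformer, and train it. Stage 1 trains on a single stream, while stage 2 trains multi-stream to account for both the user's and Moshi's audio streams. They also align text with audio so that text tokens act as a prefix to the audio tokens.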
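One way to picture the multi-stream layout with a text prefix is the sketch below. The function name, the padding token, and the exact ordering (text, then Moshi's audio codes, then the user's audio codes) are illustrative assumptions, and the example uses two codebook levels per stream only to stay small.

```python
def build_frames(text_tokens, moshi_audio, user_audio, pad="<pad>"):
    """Build one flat token list per time step: text first, then both audio streams.

    text_tokens[t] is None when no text token is aligned to step t
    (an assumption about how alignment gaps are represented).
    """
    if not (len(text_tokens) == len(moshi_audio) == len(user_audio)):
        raise ValueError("all streams must cover the same number of time steps")
    frames = []
    for text, moshi_codes, user_codes in zip(text_tokens, moshi_audio, user_audio):
        prefix = pad if text is None else text
        frames.append([prefix] + list(moshi_codes) + list(user_codes))
    return frames
```

Because both speakers occupy every time step, overlapping speech and interruptions need no special turn-taking logic in this layout.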

Interesting. That said, it would also be fun to think about whether this could be done more simply.

#speech-text

GRIN: GRadient-INformed MoE

(Liyuan Liu, Young Jin Kim, Shuohang Wang, Chen Liang, Yelong Shen, Hao Cheng, Xiaodong Liu, Masahiro Tanaka, Xiaoxia Wu, Wenxiang Hu, Vishrav Chaudhary, Zeqi Lin, Chenruidong Zhang, Jilong Xue, Hany Awadalla, Jianfeng Gao, Weizhu Chen)

Mixture-of-Experts (MoE) models scale more effectively than dense models due to sparse computation through expert routing, selectively activating only a small subset of expert modules. However, sparse computation challenges traditional training practices, as discrete expert routing hinders standard backpropagation and thus gradient-based optimization, which are the cornerstone of deep learning. To better pursue the scaling power of MoE, we introduce GRIN (GRadient-INformed MoE training), which incorporates sparse gradient estimation for expert routing and configures model parallelism to avoid token dropping. Applying GRIN to autoregressive language modeling, we develop a top-2 16×3.8B MoE model. Our model, with only 6.6B activated parameters, outperforms a 7B dense model and matches the performance of a 14B dense model trained on the same data. Extensive evaluations across diverse tasks demonstrate the potential of GRIN to significantly enhance MoE efficacy, achieving 79.4 on MMLU, 83.7 on HellaSwag, 74.4 on HumanEval, and 58.9 on MATH.

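This improves SparseMixer (https://arxiv.org/abs/2310.00811) and applies it to autoregressive LMs. Put simply, SparseMixer is a method for estimating gradients for the MoE router, in the same spirit as applying a Straight-Through Estimator or REINFORCE. I had been curious whether it would work well at larger scale, and it seems there are no major problems.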

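Sparse MoE essentially has no choice but to apply a workaround to router training, which is arguably its most important part. Depending on how this part is improved, I think potentially quite meaningful gains could be expected. Attempts along these lines have been appearing recently (https://arxiv.org/abs/2408.15664), and they look like they would be fun to experiment with.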
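To see where the difficulty comes from, here is a forward-pass-only sketch of top-2 routing. It does not implement SparseMixer or GRIN's gradient estimator. Renormalizing the two selected gate weights is an assumption, since implementations differ on that point.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_route(router_logits):
    """Return [(expert_index, gate_weight), ...] for the two highest-scoring experts."""
    probs = softmax(router_logits)
    # The discrete selection below is the non-differentiable step: nudging a
    # logit slightly usually leaves the chosen set of experts unchanged.
    top2 = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    norm = sum(probs[i] for i in top2)
    return [(i, probs[i] / norm) for i in top2]


def moe_forward(x, router_logits, experts):
    """Weighted sum of the outputs of the two selected experts only."""
    return sum(weight * experts[i](x) for i, weight in top2_route(router_logits))
```

Plain backpropagation only sees the gate weights of the selected experts, so the router gets no direct signal about experts it did not pick. Estimators such as Straight-Through, REINFORCE, or SparseMixer are ways to approximate that missing signal.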

#moe

A Controlled Study on Long Context Extension and Generalization in LLMs

(Yi Lu, Jing Nathan Yan, Songlin Yang, Justin T. Chiu, Siyu Ren, Fei Yuan, Wenting Zhao, Zhiyong Wu, Alexander M. Rush)

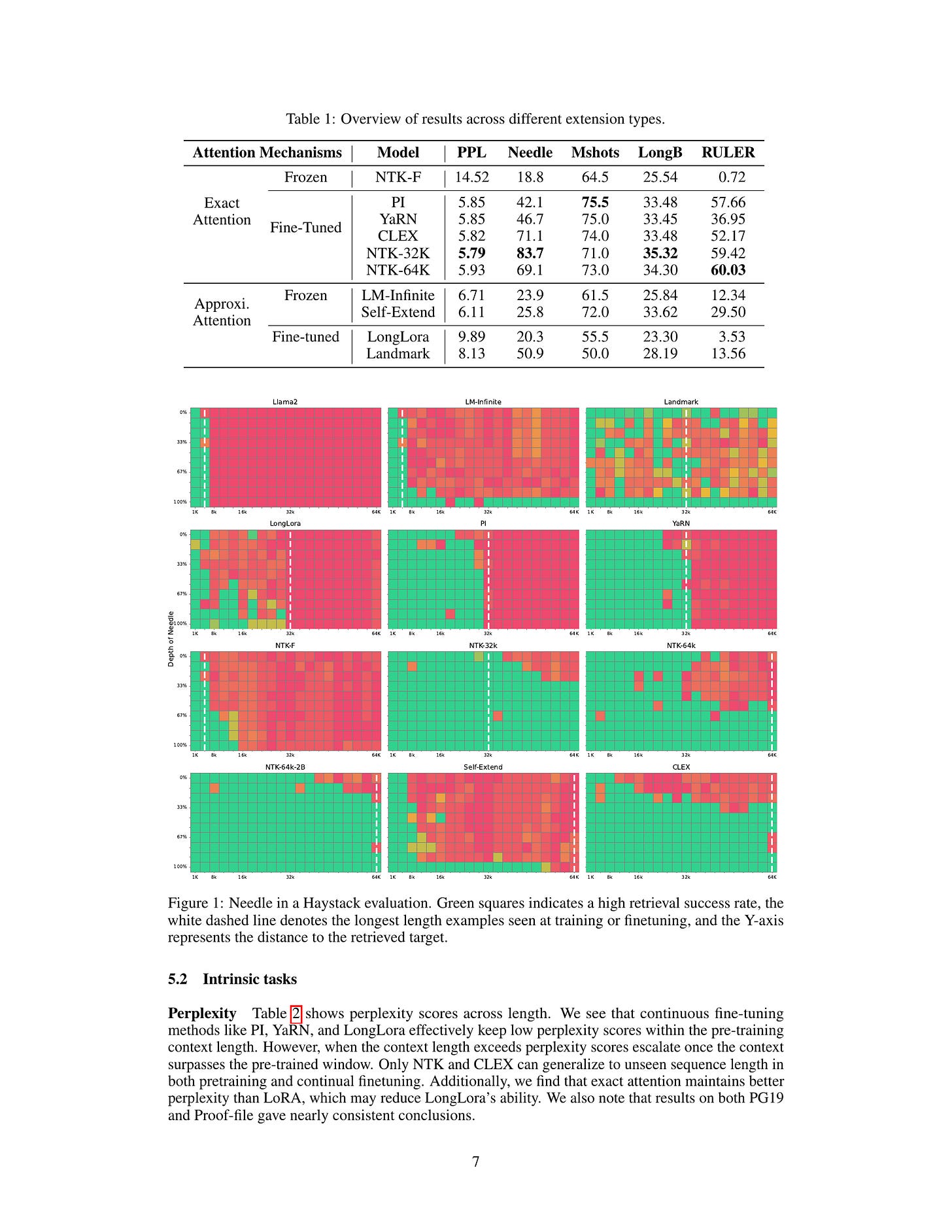

Broad textual understanding and in-context learning require language models that utilize full document contexts. Due to the implementation challenges associated with directly training long-context models, many methods have been proposed for extending models to handle long contexts. However, owing to differences in data and model classes, it has been challenging to compare these approaches, leading to uncertainty as to how to evaluate long-context performance and whether it differs from standard evaluation. We implement a controlled protocol for extension methods with a standardized evaluation, utilizing consistent base models and extension data. Our study yields several insights into long-context behavior. First, we reaffirm the critical role of perplexity as a general-purpose performance indicator even in longer-context tasks. Second, we find that current approximate attention methods systematically underperform across long-context tasks. Finally, we confirm that exact fine-tuning based methods are generally effective within the range of their extension, whereas extrapolation remains challenging. All codebases, models, and checkpoints will be made available open-source, promoting transparency and facilitating further research in this critical area of AI development.

An evaluation of long context extension methods. Could the takeaway be read as: don't bother with anything else, just use a method like YaRN?

#long-context

To CoT or not to CoT? Chain-of-thought helps mainly on math and symbolic reasoning

(Zayne Sprague, Fangcong Yin, Juan Diego Rodriguez, Dongwei Jiang, Manya Wadhwa, Prasann Singhal, Xinyu Zhao, Xi Ye, Kyle Mahowald, Greg Durrett)

Chain-of-thought (CoT) via prompting is the de facto method for eliciting reasoning capabilities from large language models (LLMs). But for what kinds of tasks is this extra "thinking" really helpful? To analyze this, we conducted a quantitative meta-analysis covering over 100 papers using CoT and ran our own evaluations of 20 datasets across 14 models. Our results show that CoT gives strong performance benefits primarily on tasks involving math or logic, with much smaller gains on other types of tasks. On MMLU, directly generating the answer without CoT leads to almost identical accuracy as CoT unless the question or model's response contains an equals sign, indicating symbolic operations and reasoning. Following this finding, we analyze the behavior of CoT on these problems by separating planning and execution and comparing against tool-augmented LLMs. Much of CoT's gain comes from improving symbolic execution, but it underperforms relative to using a symbolic solver. Our results indicate that CoT can be applied selectively, maintaining performance while saving inference costs. Furthermore, they suggest a need to move beyond prompt-based CoT to new paradigms that better leverage intermediate computation across the whole range of LLM applications.

A study suggesting that the cases where Chain of Thought helps are limited to problems that require math or logical reasoning. It also argues that in many of those cases, Chain of Thought could be replaced by tool use.

As Quiet-STaR (https://arxiv.org/abs/2403.09629) pointed out, not every token needs a lot of reasoning to generate. I think this can be viewed in a similar way.
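The "apply CoT selectively" idea can be sketched as a trivial prompt router. The equals-sign check comes from the correlation reported in the abstract. Using it as an actual routing rule, along with the function names and prompt suffixes, is purely an assumption for illustration and not something the paper proposes as a method.

```python
def needs_cot(question):
    """Crude heuristic: treat an equals sign as a hint of symbolic reasoning."""
    return "=" in question


def build_prompt(question):
    """Ask for step-by-step reasoning only when the heuristic fires."""
    if needs_cot(question):
        return question + "\nLet's think step by step."
    return question + "\nAnswer directly."
```

A real system would want a better signal than a single character, but even this shows where the inference savings would come from: most non-symbolic questions skip the long reasoning trace.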

#reasoning

Qwen2.5-Math Technical Report: Toward Mathematical Expert Model via Self-Improvement

(An Yang, Beichen Zhang, Binyuan Hui, Bofei Gao, Bowen Yu, Chengpeng Li, Dayiheng Liu, Jianhong Tu, Jingren Zhou, Junyang Lin, Keming Lu, Mingfeng Xue, Runji Lin, Tianyu Liu, Xingzhang Ren, Zhenru Zhang)

In this report, we present a series of math-specific large language models: Qwen2.5-Math and Qwen2.5-Math-Instruct-1.5B/7B/72B. The core innovation of the Qwen2.5 series lies in integrating the philosophy of self-improvement throughout the entire pipeline, from pre-training and post-training to inference: (1) During the pre-training phase, Qwen2-Math-Instruct is utilized to generate large-scale, high-quality mathematical data. (2) In the post-training phase, we develop a reward model (RM) by conducting massive sampling from Qwen2-Math-Instruct. This RM is then applied to the iterative evolution of data in supervised fine-tuning (SFT). With a stronger SFT model, it's possible to iteratively train and update the RM, which in turn guides the next round of SFT data iteration. On the final SFT model, we employ the ultimate RM for reinforcement learning, resulting in the Qwen2.5-Math-Instruct. (3) Furthermore, during the inference stage, the RM is used to guide sampling, optimizing the model's performance. Qwen2.5-Math-Instruct supports both Chinese and English, and possess advanced mathematical reasoning capabilities, including Chain-of-Thought (CoT) and Tool-Integrated Reasoning (TIR). We evaluate our models on 10 mathematics datasets in both English and Chinese, such as GSM8K, MATH, GaoKao, AMC23, and AIME24, covering a range of difficulties from grade school level to math competition problems.

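Qwen 2.5 Math. Additional data is collected from Common Crawl in the style of DeepSeekMath (https://arxiv.org/abs/2402.03300), and that data is then used to generate QA data with the Qwen 2 72B model. A model trained on the resulting 700B tokens was used to generate more new data, and with further data collection they built a 1T-token dataset.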

Yes, that is 1T of math-related data alone.

For SFT and reward model training, they used rejection sampling based on the reference answer or a Python interpreter.
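Answer-based rejection sampling boils down to "sample many solutions, keep the ones whose final answer matches". The sketch below assumes the final answer is the last number in the text and compares it as a string. Both are simplifications for illustration, not the report's actual answer extraction, and the interpreter-based variant for tool-integrated solutions is not shown.

```python
import re


def extract_final_answer(solution):
    """Return the last number that appears in the solution text, or None."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", solution)
    return numbers[-1] if numbers else None


def rejection_sample(candidates, reference_answer):
    """Keep only sampled solutions whose extracted answer equals the reference."""
    return [c for c in candidates if extract_final_answer(c) == reference_answer]
```

The kept solutions can serve as SFT data, and the accepted and rejected samples together give positive and negative examples for a reward model.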

#math #llm

Qwen2.5-Coder Technical Report

(Binyuan Hui, Jian Yang, Zeyu Cui, Jiaxi Yang, Dayiheng Liu, Lei Zhang, Tianyu Liu, Jiajun Zhang, Bowen Yu, Kai Dang, An Yang, Rui Men, Fei Huang, Xingzhang Ren, Xuancheng Ren, Jingren Zhou, Junyang Lin)

In this report, we introduce the Qwen2.5-Coder series, a significant upgrade from its predecessor, CodeQwen1.5. This series includes two models: Qwen2.5-Coder-1.5B and Qwen2.5-Coder-7B. As a code-specific model, Qwen2.5-Coder is built upon the Qwen2.5 architecture and continues pretrained on a vast corpus of over 5.5 trillion tokens. Through meticulous data cleaning, scalable synthetic data generation, and balanced data mixing, Qwen2.5-Coder demonstrates impressive code generation capabilities while retaining general versatility. The model has been evaluated on a wide range of code-related tasks, achieving state-of-the-art (SOTA) performance across more than 10 benchmarks, including code generation, completion, reasoning, and repair, consistently outperforming larger models of the same model size. We believe that the release of the Qwen2.5-Coder series will not only push the boundaries of research in code intelligence but also, through its permissive licensing, encourage broader adoption by developers in real-world applications.

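Qwen 2.5 Coder. They collect code and code-related data, generate synthetic data, and also use math data. They also collected data from Common Crawl, and unlike DeepSeekMath they say they used coarse-to-fine filtering rather than a URL-based approach... but no details are given. My impression is that they first loosely collected data, including data potentially unrelated to code, and then progressively narrowed it down to data with high code relevance.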

They then trained on 5.5T tokens of the resulting data. 5.2T were trained at the file level, and 300B were trained at the repo level after long context extension.

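For instruction data, they generate an instruction from a code snippet, then generate code and filter by comparing it against the snippet; generate multilingual data in a multi-agent setup; build a checklist for the instruction data (is the code correct, is it clear, and so on) and score against it; and generate unit tests and run them inside a sandbox. That really is a lot of data work.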
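The unit-test filtering step can be illustrated with a toy checker. This is only a sketch under strong assumptions: `passes_tests` is a hypothetical helper, the tests are plain `assert` statements, and `exec` in the current process is not a sandbox at all. A real pipeline would run untrusted code in an isolated environment with time and resource limits.

```python
def passes_tests(solution_code, test_code):
    """Run generated code and then its tests; any exception counts as failure.

    WARNING: exec() offers no isolation. It stands in for a real sandbox
    purely to keep this example self-contained.
    """
    namespace = {}
    try:
        exec(solution_code, namespace)
        exec(test_code, namespace)
    except Exception:
        return False
    return True


def filter_samples(samples):
    """Keep (solution, tests) pairs where the solution passes its tests."""
    return [(code, tests) for code, tests in samples if passes_tests(code, tests)]
```

Only samples that survive execution would move on to instruction tuning, which is one way to keep incorrect synthetic code out of the training set.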

#code #llm