September 18, 2024

CPL: Critical Planning Step Learning Boosts LLM Generalization in Reasoning Tasks

(Tianlong Wang, Xueting Han, Jing Bai)

Post-training large language models (LLMs) to develop reasoning capabilities has proven effective across diverse domains, such as mathematical reasoning and code generation. However, existing methods primarily focus on improving task-specific reasoning but have not adequately addressed the model's generalization capabilities across a broader range of reasoning tasks. To tackle this challenge, we introduce Critical Planning Step Learning (CPL), which leverages Monte Carlo Tree Search (MCTS) to explore diverse planning steps in multi-step reasoning tasks. Based on long-term outcomes, CPL learns step-level planning preferences to improve the model's planning capabilities and, consequently, its general reasoning capabilities. Furthermore, while effective in many scenarios for aligning LLMs, existing preference learning approaches like Direct Preference Optimization (DPO) struggle with complex multi-step reasoning tasks due to their inability to capture fine-grained supervision at each step. We propose Step-level Advantage Preference Optimization (Step-APO), which integrates an advantage estimate for step-level preference pairs obtained via MCTS into the DPO. This enables the model to more effectively learn critical intermediate planning steps, thereby further improving its generalization in reasoning tasks. Experimental results demonstrate that our method, trained exclusively on GSM8K and MATH, not only significantly improves performance on GSM8K (+10.5%) and MATH (+6.5%), but also enhances out-of-domain reasoning benchmarks, such as ARC-C (+4.0%), BBH (+1.8%), MMLU-STEM (+2.2%), and MMLU (+0.9%).

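Reasoning traces are generated with MCTS, and the model is then trained with a DPO variant that accounts for step-level advantages. The authors say that separating plans from solutions during MCTS, so that the diversity of plans is taken into account, is what promoted generalization to tasks beyond the training tasks.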
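This is a minimal sketch of a step-level preference loss, for intuition only. It computes the standard DPO loss on one (preferred, dispreferred) step pair. It then shows one plausible way to inject an MCTS advantage gap: as an ODPO-style offset subtracted from the DPO margin. That offset form is an assumption and is not necessarily the exact Step-APO objective. `step_pair_loss`, `adv_w` and `adv_l` are hypothetical names.

```python
import math

def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)).
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))

def step_pair_loss(policy_w: float, ref_w: float,
                   policy_l: float, ref_l: float,
                   adv_w: float = 0.0, adv_l: float = 0.0,
                   beta: float = 0.1) -> float:
    """Preference loss for one (preferred, dispreferred) reasoning step.

    policy_* / ref_*: summed token log-probs of the step under the policy
    and the frozen reference model. adv_*: hypothetical MCTS advantage
    estimates of each step. With adv_w == adv_l this is plain DPO.
    """
    # DPO implicit-reward margin between the two steps.
    margin = beta * ((policy_w - ref_w) - (policy_l - ref_l))
    # Assumption: the advantage gap acts as an offset the margin must exceed.
    offset = adv_w - adv_l
    return -log_sigmoid(margin - offset)
```

With this offset form, a pair whose MCTS advantages differ a lot demands a larger policy margin before the loss becomes small. That is one way of emphasizing "critical" steps.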

#mcts #search #rl

Introducing Async Tensor Parallelism in PyTorch

(Yifu Wang, Horace He, Less Wright, Luca Wehrstedt, Tianyu Liu, Wanchao Liang)

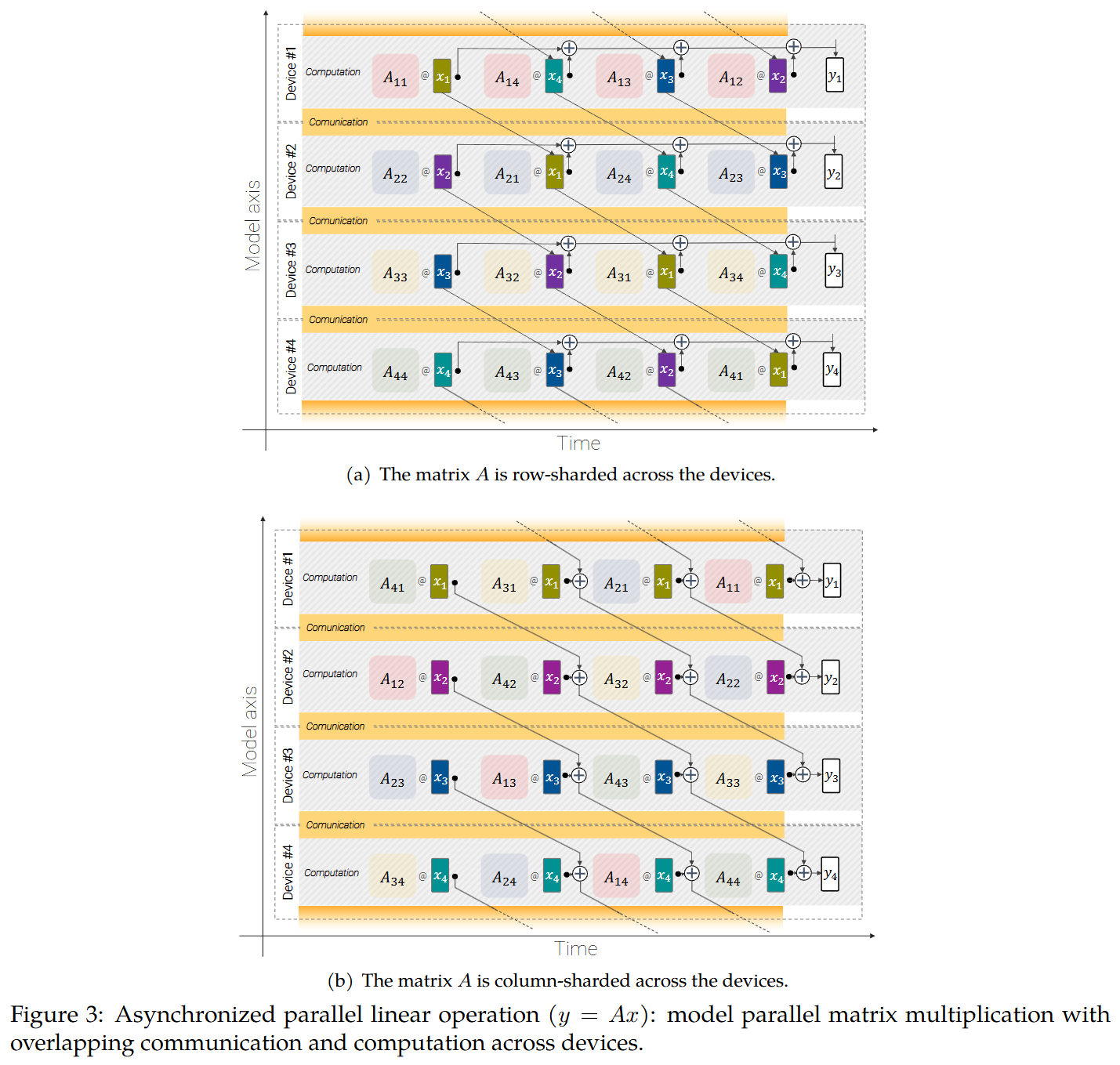

PyTorch now has an Async Tensor Parallel implementation. A representative model that used this approach is ViT-22B. (https://arxiv.org/abs/2302.05442)

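The key to this kind of method is overlapping computation with communication, which apparently was not easy to implement in a straightforward way. It is built on an abstraction called SymmetricMemory, which looks like it would be interesting to dig into.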
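This toy single-process sketch shows the decomposition that makes the overlap possible. An all-gather followed by a matmul is split into per-shard matmuls:

- The local shard is computed right away.
- Each remote shard is multiplied as soon as it arrives, while the remaining shards are still "in flight".

A thread and a queue stand in for the real communication layer. `fake_comm` and `overlapped_allgather_matmul` are hypothetical helpers, not PyTorch or SymmetricMemory APIs.

```python
import queue
import threading
import time

def matmul(a, b):
    # Plain-Python matrix multiply: rows of a times columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def fake_comm(remote_shards, out_q, delay):
    # Simulated communication: remote shards arrive one at a time.
    for idx, shard in remote_shards:
        time.sleep(delay)
        out_q.put((idx, shard))

def overlapped_allgather_matmul(shards, w, rank=0, delay=0.0):
    """Compute concat(shards) @ w without waiting for the full all-gather."""
    remote = [(i, s) for i, s in enumerate(shards) if i != rank]
    q = queue.Queue()
    comm = threading.Thread(target=fake_comm, args=(remote, q, delay))
    comm.start()  # "communication" for remote shards starts immediately
    out = [None] * len(shards)
    out[rank] = matmul(shards[rank], w)  # local compute overlaps the transfers
    for _ in remote:
        i, shard = q.get()
        out[i] = matmul(shard, w)  # consume each shard as soon as it lands
    comm.join()
    # Reassemble row blocks in global order, matching an all-gather layout.
    return [row for block in out for row in block]
```

The result is identical to gathering first and multiplying once. Only the scheduling changes, which is why the hard part in practice is the communication primitive rather than the math.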

#parallelism #efficiency

Rethinking KenLM: Good and Bad Model Ensembles for Efficient Text Quality Filtering in Large Web Corpora

(Yungi Kim, Hyunsoo Ha, Sukyung Lee, Jihoo Kim, Seonghoon Yang, Chanjun Park)

With the increasing demand for substantial amounts of high-quality data to train large language models (LLMs), efficiently filtering large web corpora has become a critical challenge. For this purpose, KenLM, a lightweight n-gram-based language model that operates on CPUs, is widely used. However, the traditional method of training KenLM utilizes only high-quality data and, consequently, does not explicitly learn the linguistic patterns of low-quality data. To address this issue, we propose an ensemble approach that leverages two contrasting KenLMs: (i) Good KenLM, trained on high-quality data; and (ii) Bad KenLM, trained on low-quality data. Experimental results demonstrate that our approach significantly reduces noisy content while preserving high-quality content compared to the traditional KenLM training method. This indicates that our method can be a practical solution with minimal computational overhead for resource-constrained environments.

Filtering with an n-gram LM usually means training the LM on a positive set and then filtering by perplexity. Here, an LM is also explicitly trained on a negative set, and the difference in perplexity between the two models is measured.

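Since the score is a difference in LM loss, I wonder whether it could be connected to learnability (https://arxiv.org/abs/2404.07965, https://arxiv.org/abs/2408.08310). Those methods usually use the LM being trained or a small LM. This work could be seen as using an n-gram LM in that role.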
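This toy sketch illustrates the Good/Bad ensemble idea under two assumptions:

- Add-one-smoothed bigram models replace KenLM's Kneser-Ney n-gram models.
- The ensemble score is simply the difference of log-perplexities.

The paper's actual scoring and combination may differ. `BigramLM` and `quality_score` are hypothetical names.

```python
import math
from collections import Counter

class BigramLM:
    """Tiny add-one-smoothed bigram LM (stand-in for a KenLM model)."""

    def __init__(self, sentences):
        self.unigrams = Counter()
        self.bigrams = Counter()
        for s in sentences:
            toks = ["<s>"] + s.split() + ["</s>"]
            self.unigrams.update(toks)
            self.bigrams.update(zip(toks, toks[1:]))
        self.vocab_size = len(self.unigrams) + 1  # +1 slot for unknown words

    def log_prob(self, prev, tok):
        # Laplace smoothing so unseen bigrams get nonzero probability.
        num = self.bigrams[(prev, tok)] + 1
        den = self.unigrams[prev] + self.vocab_size
        return math.log(num / den)

    def perplexity(self, sentence):
        toks = ["<s>"] + sentence.split() + ["</s>"]
        lp = sum(self.log_prob(p, t) for p, t in zip(toks, toks[1:]))
        return math.exp(-lp / (len(toks) - 1))

def quality_score(text, good_lm, bad_lm):
    # Assumption: higher = looks more like the good data than the bad data.
    return math.log(bad_lm.perplexity(text)) - math.log(good_lm.perplexity(text))
```

A single positive-only model can only say "unlike good text". The second model adds an explicit "like bad text" signal, so spam-like text that is merely short or generic gets pushed down more decisively.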

#corpus

OmniGen: Unified Image Generation

(Shitao Xiao, Yueze Wang, Junjie Zhou, Huaying Yuan, Xingrun Xing, Ruiran Yan, Shuting Wang, Tiejun Huang, Zheng Liu)

In this work, we introduce OmniGen, a new diffusion model for unified image generation. Unlike popular diffusion models (e.g., Stable Diffusion), OmniGen no longer requires additional modules such as ControlNet or IP-Adapter to process diverse control conditions. OmniGen is characterized by the following features: 1) Unification: OmniGen not only demonstrates text-to-image generation capabilities but also inherently supports other downstream tasks, such as image editing, subject-driven generation, and visual-conditional generation. Additionally, OmniGen can handle classical computer vision tasks by transforming them into image generation tasks, such as edge detection and human pose recognition. 2) Simplicity: The architecture of OmniGen is highly simplified, eliminating the need for additional text encoders. Moreover, it is more user-friendly compared to existing diffusion models, enabling complex tasks to be accomplished through instructions without the need for extra preprocessing steps (e.g., human pose estimation), thereby significantly simplifying the workflow of image generation. 3) Knowledge Transfer: Through learning in a unified format, OmniGen effectively transfers knowledge across different tasks, manages unseen tasks and domains, and exhibits novel capabilities. We also explore the model's reasoning capabilities and potential applications of chain-of-thought mechanism. This work represents the first attempt at a general-purpose image generation model, and there remain several unresolved issues. We will open-source the related resources at https://github.com/VectorSpaceLab/OmniGen to foster advancements in this field.

Image generation by combining a language model with diffusion, similar to Transfusion (https://www.arxiv.org/abs/2408.11039). Here they also consider computer vision tasks that can be cast as image generation.
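In Transfusion-style models, text and image patches share one transformer. The usual masking scheme is causal attention over the whole sequence, with bidirectional attention among the patches of the same image. My understanding is that OmniGen uses a similar scheme, but treat that as an assumption. This sketch builds such a mask. `hybrid_attention_mask` and the `("text", n)` / `("image", n)` segment format are hypothetical.

```python
def hybrid_attention_mask(segments):
    """Boolean mask[i][j] = True if position i may attend to position j.

    segments: list of (kind, length) with kind in {"text", "image"}.
    Causal everywhere, plus full (bidirectional) attention inside each image.
    """
    owner = []  # image block id per position, None for text positions
    for block_id, (kind, n) in enumerate(segments):
        owner.extend([block_id if kind == "image" else None] * n)
    size = len(owner)
    mask = [[False] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            causal = j <= i
            same_image = owner[i] is not None and owner[i] == owner[j]
            mask[i][j] = causal or same_image
    return mask
```

For example, `hybrid_attention_mask([("text", 2), ("image", 2), ("text", 1)])` lets the first image patch see the second one. The text tokens before the image still cannot see it.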

#image-generation #diffusion #autoregressive-model

SOAP: Improving and Stabilizing Shampoo using Adam

(Nikhil Vyas, Depen Morwani, Rosie Zhao, Itai Shapira, David Brandfonbrener, Lucas Janson, Sham Kakade)

There is growing evidence of the effectiveness of Shampoo, a higher-order preconditioning method, over Adam in deep learning optimization tasks. However, Shampoo's drawbacks include additional hyperparameters and computational overhead when compared to Adam, which only updates running averages of first- and second-moment quantities. This work establishes a formal connection between Shampoo (implemented with the 1/2 power) and Adafactor -- a memory-efficient approximation of Adam -- showing that Shampoo is equivalent to running Adafactor in the eigenbasis of Shampoo's preconditioner. This insight leads to the design of a simpler and computationally efficient algorithm: ShampoO with Adam in the Preconditioner's eigenbasis (SOAP). With regards to improving Shampoo's computational efficiency, the most straightforward approach would be to simply compute Shampoo's eigendecomposition less frequently. Unfortunately, as our empirical results show, this leads to performance degradation that worsens with this frequency. SOAP mitigates this degradation by continually updating the running average of the second moment, just as Adam does, but in the current (slowly changing) coordinate basis. Furthermore, since SOAP is equivalent to running Adam in a rotated space, it introduces only one additional hyperparameter (the preconditioning frequency) compared to Adam. We empirically evaluate SOAP on language model pre-training with 360m and 660m sized models. In the large batch regime, SOAP reduces the number of iterations by over 40% and wall clock time by over 35% compared to AdamW, with approximately 20% improvements in both metrics compared to Shampoo. An implementation of SOAP is available at https://github.com/nikhilvyas/SOAP.

The idea is that Shampoo is equivalent to running Adafactor in the eigenbasis of Shampoo's own preconditioner. If so, one can just as well run Adam instead of Adafactor in that eigenbasis. Interesting.
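Below is a minimal NumPy sketch of the idea for a single 2-D weight. It keeps Shampoo's left/right statistics, refreshes their eigenbases only every `precondition_frequency` steps, and runs an Adam-style update in the rotated coordinates. This is a simplified illustration under assumptions (no bias correction, exact `eigh` instead of the cheaper iterative updates a real implementation would use, second moment not re-projected when the basis changes). It is not the reference implementation at https://github.com/nikhilvyas/SOAP, and `SOAPSketch` is a hypothetical name.

```python
import numpy as np

class SOAPSketch:
    """Toy SOAP-style optimizer for one 2-D weight (simplified, no bias correction)."""

    def __init__(self, shape, lr=0.05, betas=(0.9, 0.95), eps=1e-8,
                 precondition_frequency=10):
        m, n = shape
        self.lr, self.eps, self.freq = lr, eps, precondition_frequency
        self.b1, self.b2 = betas
        self.L = np.zeros((m, m))  # Shampoo left statistic: EMA of G @ G.T
        self.R = np.zeros((n, n))  # Shampoo right statistic: EMA of G.T @ G
        self.QL = np.eye(m)        # eigenbasis of L (identity until first refresh)
        self.QR = np.eye(n)        # eigenbasis of R
        self.M = np.zeros(shape)   # first moment, kept in the original space
        self.V = np.zeros(shape)   # second moment, kept in the rotated space
        self.t = 0

    def step(self, W, G):
        self.t += 1
        G_rot = self.QL.T @ G @ self.QR                  # gradient in the eigenbasis
        self.M = self.b1 * self.M + (1 - self.b1) * G
        M_rot = self.QL.T @ self.M @ self.QR
        self.V = self.b2 * self.V + (1 - self.b2) * G_rot ** 2  # Adam 2nd moment, rotated
        N_rot = M_rot / (np.sqrt(self.V) + self.eps)    # Adam direction in rotated space
        W = W - self.lr * (self.QL @ N_rot @ self.QR.T)  # rotate the update back
        self.L = self.b2 * self.L + (1 - self.b2) * (G @ G.T)
        self.R = self.b2 * self.R + (1 - self.b2) * (G.T @ G)
        if self.t % self.freq == 0:                     # infrequent eigendecomposition
            self.QL = np.linalg.eigh(self.L)[1]
            self.QR = np.linalg.eigh(self.R)[1]
        return W

# Usage on a toy quadratic: minimize 0.5 * ||W - target||^2.
rng = np.random.default_rng(0)
target = rng.normal(size=(3, 2))
W = np.zeros((3, 2))
opt = SOAPSketch(W.shape)
initial_loss = 0.5 * np.sum((W - target) ** 2)
for _ in range(200):
    W = opt.step(W, W - target)
final_loss = 0.5 * np.sum((W - target) ** 2)
```

Between eigenbasis refreshes, the second moment keeps updating every step in the current (slowly changing) basis. Per the abstract, this is what lets SOAP refresh the basis infrequently without the degradation seen when plain Shampoo does so.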

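Faster training, in the sense of reaching a lower loss in the same number of steps, means higher sample efficiency. Sample efficiency can in turn be thought of as effectively increasing the amount of data you have. That makes it all the more meaningful at a point where the amount of data one can secure matters.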
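The "more effective data" intuition can be made concrete with simple arithmetic. Suppose an optimizer reaches the same loss in a fraction (1 - r) of the iterations at the same batch size. It then consumes (1 - r) times as many tokens, so a fixed data budget goes 1/(1 - r) times as far. Plugging in the "over 40%" iteration reduction reported for SOAP in the large-batch regime is an illustration only: it assumes the reduction holds at the target loss and carries over to other settings.

```latex
\text{effective data multiplier} = \frac{N_{\text{AdamW}}}{N_{\text{SOAP}}} = \frac{1}{1 - r},
\qquad r = 0.4 \;\Rightarrow\; \frac{1}{0.6} \approx 1.67
```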

#optimizer

Announcing Pixtral 12B

(Mistral AI)

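A blog post is out on Pixtral, whose checkpoint was released a little while ago. It uses a vision encoder but keeps each image's native resolution and aspect ratio, and inserts a line-break token after each line (row?) of image tokens.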
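This sketch shows the resulting image-token layout for a native-resolution image: one placeholder token per patch, a break token at the end of every patch row, and an end token closing the image. The token strings `[IMG]`, `[IMG_BREAK]`, `[IMG_END]` and the 16-pixel patch size are assumptions for illustration. `pixtral_style_image_tokens` is a hypothetical helper, not a Mistral API.

```python
def pixtral_style_image_tokens(height, width, patch=16):
    """Placeholder token layout for one image at its native size."""
    rows = -(-height // patch)  # ceil division: partial patches still count
    cols = -(-width // patch)
    tokens = []
    for r in range(rows):
        tokens.extend(["[IMG]"] * cols)  # one token per patch in this row
        # Row separator; the last row is closed by an end-of-image token instead.
        tokens.append("[IMG_END]" if r == rows - 1 else "[IMG_BREAK]")
    return tokens
```

The break tokens tell a 1-D decoder where each row ends. Images with different aspect ratios therefore produce unambiguous layouts without resizing to a fixed grid.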

Support for varying resolutions, aspect-ratio preservation, interleaved input support, and, though not strictly required, a decoder-only architecture. Designs seem to be converging.

#vision-language

NVLM: Open Frontier-Class Multimodal LLMs

(Wenliang Dai, Nayeon Lee, Boxin Wang, Zhuoling Yang, Zihan Liu, Jon Barker, Tuomas Rintamaki, Mohammad Shoeybi, Bryan Catanzaro, Wei Ping)

We introduce NVLM 1.0, a family of frontier-class multimodal large language models (LLMs) that achieve state-of-the-art results on vision-language tasks, rivaling the leading proprietary models (e.g., GPT-4o) and open-access models (e.g., Llama 3-V 405B and InternVL 2). Remarkably, NVLM 1.0 shows improved text-only performance over its LLM backbone after multimodal training. In terms of model design, we perform a comprehensive comparison between decoder-only multimodal LLMs (e.g., LLaVA) and cross-attention-based models (e.g., Flamingo). Based on the strengths and weaknesses of both approaches, we propose a novel architecture that enhances both training efficiency and multimodal reasoning capabilities. Furthermore, we introduce a 1-D tile-tagging design for tile-based dynamic high-resolution images, which significantly boosts performance on multimodal reasoning and OCR-related tasks. Regarding training data, we meticulously curate and provide detailed information on our multimodal pretraining and supervised fine-tuning datasets. Our findings indicate that dataset quality and task diversity are more important than scale, even during the pretraining phase, across all architectures. Notably, we develop production-grade multimodality for the NVLM-1.0 models, enabling them to excel in vision-language tasks while maintaining and even improving text-only performance compared to their LLM backbones. To achieve this, we craft and integrate a high-quality text-only dataset into multimodal training, alongside a substantial amount of multimodal math and reasoning data, leading to enhanced math and coding capabilities across modalities. To advance research in the field, we are releasing the model weights and will open-source the code for the community:

https://nvlm-project.github.io/.

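A multimodal model built on Qwen2. Here they compare a decoder-only architecture, which feeds image tokens into the decoder as input, with an encoder-decoder cross-attention architecture. The resulting hybrid aims to combine the strengths of both:

- From decoder-only: parameter efficiency (no cross-attention layers) and the effectiveness of unified modality processing.
- From cross-attention: computational efficiency.

In the hybrid, a thumbnail image goes through the decoder-only path and the high-resolution image is integrated via cross-attention.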
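This schematic sketch shows why routing high-resolution tiles through cross-attention saves compute. Only the thumbnail tokens join the decoder's own sequence. The tile tokens appear only as cross-attention keys/values. The cost functions count query-key scores for a single layer and ignore constants and FFN cost. The token counts in the usage line (256 tokens per thumbnail/tile, 6 tiles) are hypothetical. All function names are illustrative, not NVLM code.

```python
def route_hybrid_inputs(text_tokens, thumbnail_tokens, tiles):
    """Split visual inputs between the two paths of a hybrid model (schematic).

    thumbnail_tokens: low-res global view, placed in the decoder sequence.
    tiles: list of per-tile token lists from the high-res image,
           exposed only as cross-attention memory.
    """
    decoder_sequence = list(thumbnail_tokens) + list(text_tokens)
    cross_attention_memory = [tok for tile in tiles for tok in tile]
    return decoder_sequence, cross_attention_memory

def hybrid_attention_scores(n_dec, n_mem):
    # Self-attention over the decoder sequence + cross-attention into the tiles.
    return n_dec * n_dec + n_dec * n_mem

def decoder_only_attention_scores(n_dec, n_mem):
    # All tile tokens placed in the decoder sequence instead.
    return (n_dec + n_mem) ** 2

# Hypothetical sizes: 50 text tokens, 256-token thumbnail, 6 tiles of 256 tokens.
dec, mem = route_hybrid_inputs(["t"] * 50, ["thumb"] * 256, [["tile"] * 256] * 6)
```

Here the decoder sequence stays at 306 tokens while 1,536 tile tokens are reachable only through cross-attention. This is the efficiency side of the trade-off. The thumbnail in the decoder keeps some of the modality integration that decoder-only models benefit from.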

#multimodal #vision-language