2024년 8월 6일

Language Model Can Listen While Speaking

(Ziyang Ma, Yakun Song, Chenpeng Du, Jian Cong, Zhuo Chen, Yuping Wang, Yuxuan Wang, Xie Chen)

Dialogue serves as the most natural manner of human-computer interaction (HCI). Recent advancements in speech language models (SLM) have significantly enhanced speech-based conversational AI. However, these models are limited to turn-based conversation, lacking the ability to interact with humans in real-time spoken scenarios, for example, being interrupted when the generated content is not satisfactory. To address these limitations, we explore full duplex modeling (FDM) in interactive speech language models (iSLM), focusing on enhancing real-time interaction and, more explicitly, exploring the quintessential ability of interruption. We introduce a novel model design, namely listening-while-speaking language model (LSLM), an end-to-end system equipped with both listening and speaking channels. Our LSLM employs a token-based decoder-only TTS for speech generation and a streaming self-supervised learning (SSL) encoder for real-time audio input. LSLM fuses both channels for autoregressive generation and detects turn-taking in real time. Three fusion strategies -- early fusion, middle fusion, and late fusion -- are explored, with middle fusion achieving an optimal balance between speech generation and real-time interaction. Two experimental settings, command-based FDM and voice-based FDM, demonstrate LSLM's robustness to noise and sensitivity to diverse instructions. Our results highlight LSLM's capability to achieve duplex communication with minimal impact on existing systems. This study aims to advance the development of interactive speech dialogue systems, enhancing their applicability in real-world contexts.

말하면서 들을 수 있는 모델 만들기. GPT-4o 같은 쪽이 목표죠. GPT-4o의 데모가 나온 시점에도 사람이 말을 시작하면 모델의 음성이 너무 바로 끊긴다는 평이 많았었는데 이런 부분도 UX적인 측면에서 재미있는 요소일 듯 하네요.

#tts #asr

Self-Taught Evaluators

(Tianlu Wang, Ilia Kulikov, Olga Golovneva, Ping Yu, Weizhe Yuan, Jane Dwivedi-Yu, Richard Yuanzhe Pang, Maryam Fazel-Zarandi, Jason Weston, Xian Li)

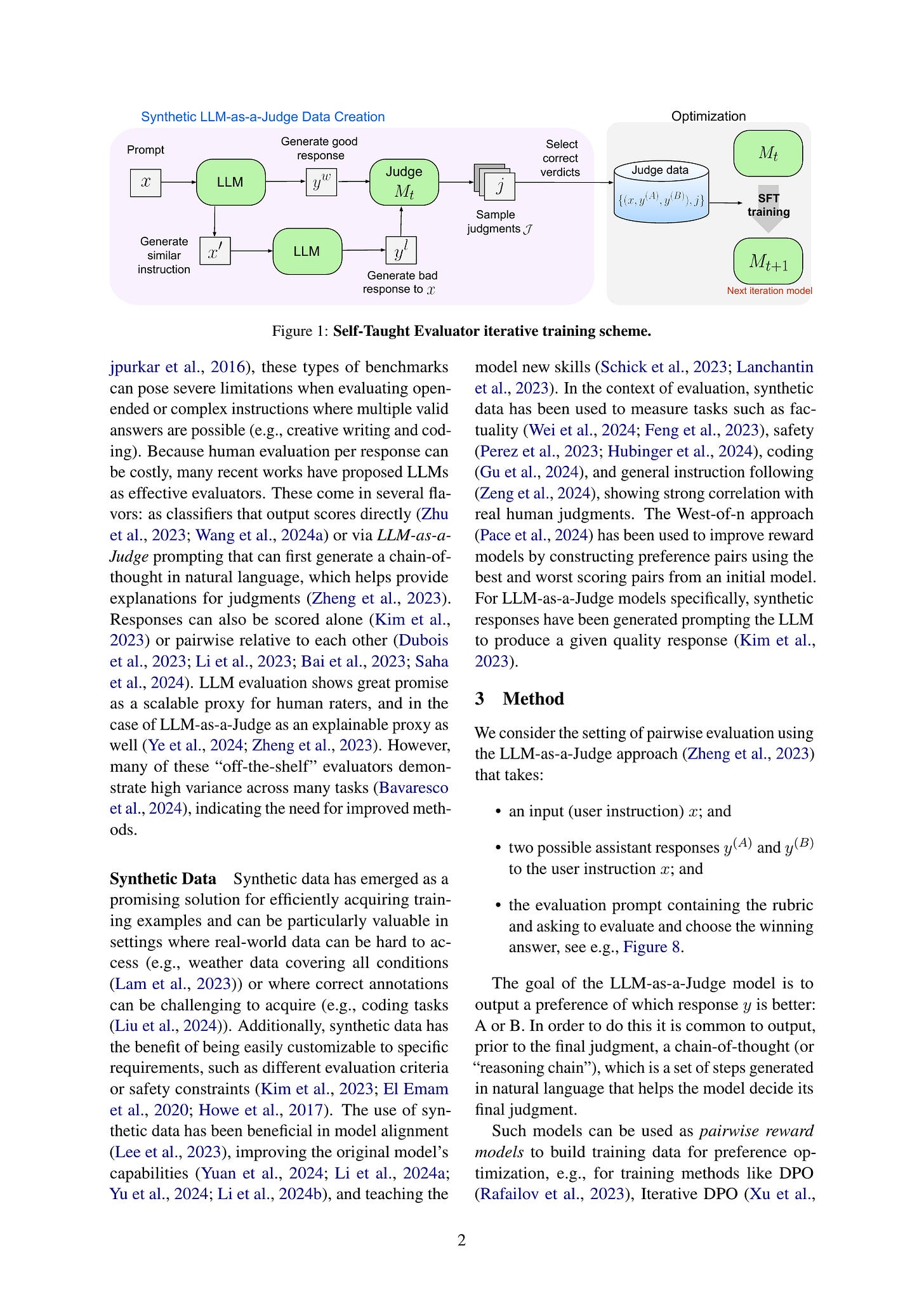

Model-based evaluation is at the heart of successful model development -- as a reward model for training, and as a replacement for human evaluation. To train such evaluators, the standard approach is to collect a large amount of human preference judgments over model responses, which is costly and the data becomes stale as models improve. In this work, we present an approach that aims to im-prove evaluators without human annotations, using synthetic training data only. Starting from unlabeled instructions, our iterative self-improvement scheme generates contrasting model outputs and trains an LLM-as-a-Judge to produce reasoning traces and final judgments, repeating this training at each new iteration using the improved predictions. Without any labeled preference data, our Self-Taught Evaluator can improve a strong LLM (Llama3-70B-Instruct) from 75.4 to 88.3 (88.7 with majority vote) on RewardBench. This outperforms commonly used LLM judges such as GPT-4 and matches the performance of the top-performing reward models trained with labeled examples.

LLM의 응답을 평가하는 Judge 모델을 만들기 위한 방법. Synthetic Pair를 만드는 것이 문제인데 이쪽에서는 프롬프트를 Rewriting해서 비슷하지만 약간 다른 프롬프트를 작성하게 하고 이 프롬프트에 대한 응답을 Weak Response로 설정했군요. 프롬프트가 약간 다르니 원 프롬프트에 대한 응답으로서는 부합하지 않는다는 아이디어죠.

Meta Rewarding과 비슷한 느낌이 드네요. (https://arxiv.org/abs/2407.19594)

#rlaif

Lumina-mGPT: Illuminate Flexible Photorealistic Text-to-Image Generation with Multimodal Generative Pretraining

(Dongyang Liu, Shitian Zhao, Le Zhuo, Weifeng Lin, Yu Qiao, Hongsheng Li, Peng Gao)

We present Lumina-mGPT, a family of multimodal autoregressive models capable of various vision and language tasks, particularly excelling in generating flexible photorealistic images from text descriptions. Unlike existing autoregressive image generation approaches, Lumina-mGPT employs a pretrained decoder-only transformer as a unified framework for modeling multimodal token sequences. Our key insight is that a simple decoder-only transformer with multimodal Generative PreTraining (mGPT), utilizing the next-token prediction objective on massive interleaved text-image sequences, can learn broad and general multimodal capabilities, thereby illuminating photorealistic text-to-image generation. Building on these pretrained models, we propose Flexible Progressive Supervised Finetuning (FP-SFT) on high-quality image-text pairs to fully unlock their potential for high-aesthetic image synthesis at any resolution while maintaining their general multimodal capabilities. Furthermore, we introduce Ominiponent Supervised Finetuning (Omni-SFT), transforming Lumina-mGPT into a foundation model that seamlessly achieves omnipotent task unification. The resulting model demonstrates versatile multimodal capabilities, including visual generation tasks like flexible text-to-image generation and controllable generation, visual recognition tasks like segmentation and depth estimation, and vision-language tasks like multiturn visual question answering. Additionally, we analyze the differences and similarities between diffusion-based and autoregressive methods in a direct comparison.

Chameleon 체크포인트에서 시작한 Autoregressive Decoder only Text to Image. Multi Resolution 대응을 위해 해상도를 지시하는 토큰과 라인을 구분하기 위한 토큰을 사용했군요. 낮은 해상도에서 높은 해상도로 점진적 학습을 하면서 텍스트, 이미지-텍스트, 텍스트-이미지, 멀티 턴 이미지 편집, Dense Prediction 과제들을 사용해 학습시켰네요.

한 가지 신기한 것은 이미지를 넣고 편집하지 말라는 지시를 준 다음 생성하면 VQ에 의한 노이즈가 감소된 이미지를 생성할 수 있는 능력이 있다는 것입니다. 통계적으로 얼마나 더 나아지는지가 문제겠지만 생각 해 볼만한 부분이 있는 듯 하네요.

#text-to-image #vision-language #autoregressive-model F