August 5, 2024

Intermittent Semi-working Mask: A New Masking Paradigm for LLMs

(Mingcong Lu, Jiangcai Zhu, Wang Hao, Zheng Li, Shusheng Zhang, Kailai Shao, Chao Chen, Nan Li, Feng Wang, Xin Lu)

Multi-turn dialogues are a key interaction method between humans and Large Language Models (LLMs). As conversations extend over multiple rounds, maintaining both high generation quality and low latency becomes a challenge. Mainstream LLMs can be grouped into two categories based on their masking strategy: causal LLMs and prefix LLMs. Several works have demonstrated that prefix LLMs tend to outperform causal ones in scenarios that depend heavily on historical context, such as multi-turn dialogues or in-context learning, thanks to their bidirectional attention over prefix sequences. However, prefix LLMs suffer from an inherent training inefficiency on multi-turn dialogue datasets. In addition, the attention mechanism of prefix LLMs prevents them from reusing the Key-Value Cache (KV Cache) across dialogue rounds to reduce generation latency. In this paper, we propose a novel masking scheme called Intermittent Semi-working Mask (ISM) to address these problems. Specifically, we alternate bidirectional and unidirectional attention over the queries and answers in the dialogue history. In this way, ISM simultaneously retains the high generation quality of prefix LLMs and the low generation latency of causal LLMs. Extensive experiments show that ISM achieves significant performance improvements.

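A method for using prefix LMs efficiently. In essence, bidirectional attention is applied only to the question segments. Whether prefix LMs are actually better is a perennial debate, but this could at least serve as evidence that they can be used efficiently. (Implementing an attention kernel that supports this kind of masking would be a hassle, though.)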
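To see why this masking allows KV cache reuse, here is a minimal sketch of the mask under an assumed reading of the abstract: every token attends causally to all earlier tokens, and tokens within the same query segment additionally attend to each other bidirectionally; answer tokens are purely causal. The exact mask in the paper may differ. The helper names (`ism_mask`, `prefix_lm_mask`, `top_left`) and the segment layout are hypothetical. `mask[i][j] == True` means token `i` may attend to token `j`, which matches the boolean-mask convention of PyTorch's `scaled_dot_product_attention`.

```python
from typing import List, Tuple

Segment = Tuple[str, int]  # (role, number_of_tokens); role is "query" or "answer"


def _token_layout(segments: List[Segment]) -> Tuple[List[int], List[str]]:
    """Return the segment index and role of every token, in order."""
    seg_ids: List[int] = []
    roles: List[str] = []
    for idx, (role, length) in enumerate(segments):
        seg_ids.extend([idx] * length)
        roles.extend([role] * length)
    return seg_ids, roles


def ism_mask(segments: List[Segment]) -> List[List[bool]]:
    """Assumed ISM rule: causal everywhere, plus bidirectional attention inside each query."""
    seg_ids, roles = _token_layout(segments)
    n = len(seg_ids)
    return [
        [j <= i or (roles[i] == "query" and seg_ids[i] == seg_ids[j]) for j in range(n)]
        for i in range(n)
    ]


def prefix_lm_mask(segments: List[Segment]) -> List[List[bool]]:
    """Prefix-LM baseline: all history before the final answer is fully bidirectional."""
    seg_ids, _ = _token_layout(segments)
    n = len(seg_ids)
    last_len = segments[-1][1] if segments and segments[-1][0] == "answer" else 0
    prefix_len = n - last_len
    return [[j <= i or (i < prefix_len and j < prefix_len) for j in range(n)] for i in range(n)]


def top_left(mask: List[List[bool]], k: int) -> List[List[bool]]:
    """The k x k block of the mask covering the first k tokens."""
    return [row[:k] for row in mask[:k]]


# Two rounds: query(2 tokens), answer(2), query(2), answer(1).
dialogue = [("query", 2), ("answer", 2), ("query", 2), ("answer", 1)]
first_round = dialogue[:2]  # the first 4 tokens

# ISM: appending a round leaves the first round's mask untouched, so its KV cache stays valid.
print(top_left(ism_mask(dialogue), 4) == ism_mask(first_round))              # True
# Prefix LM: earlier tokens now see later history, so their keys/values must be recomputed.
print(top_left(prefix_lm_mask(dialogue), 4) == prefix_lm_mask(first_round))  # False
```

The key property is that no token ever attends to a later segment, so the keys and values of earlier rounds do not change when a new round is appended. A custom kernel would still have to express the block-wise bidirectional exceptions efficiently, which is the hassle mentioned above.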

#lm #attention

An Empirical Analysis of Compute-Optimal Inference for Problem-Solving with Language Models

(Yangzhen Wu, Zhiqing Sun, Shanda Li, Sean Welleck, Yiming Yang)

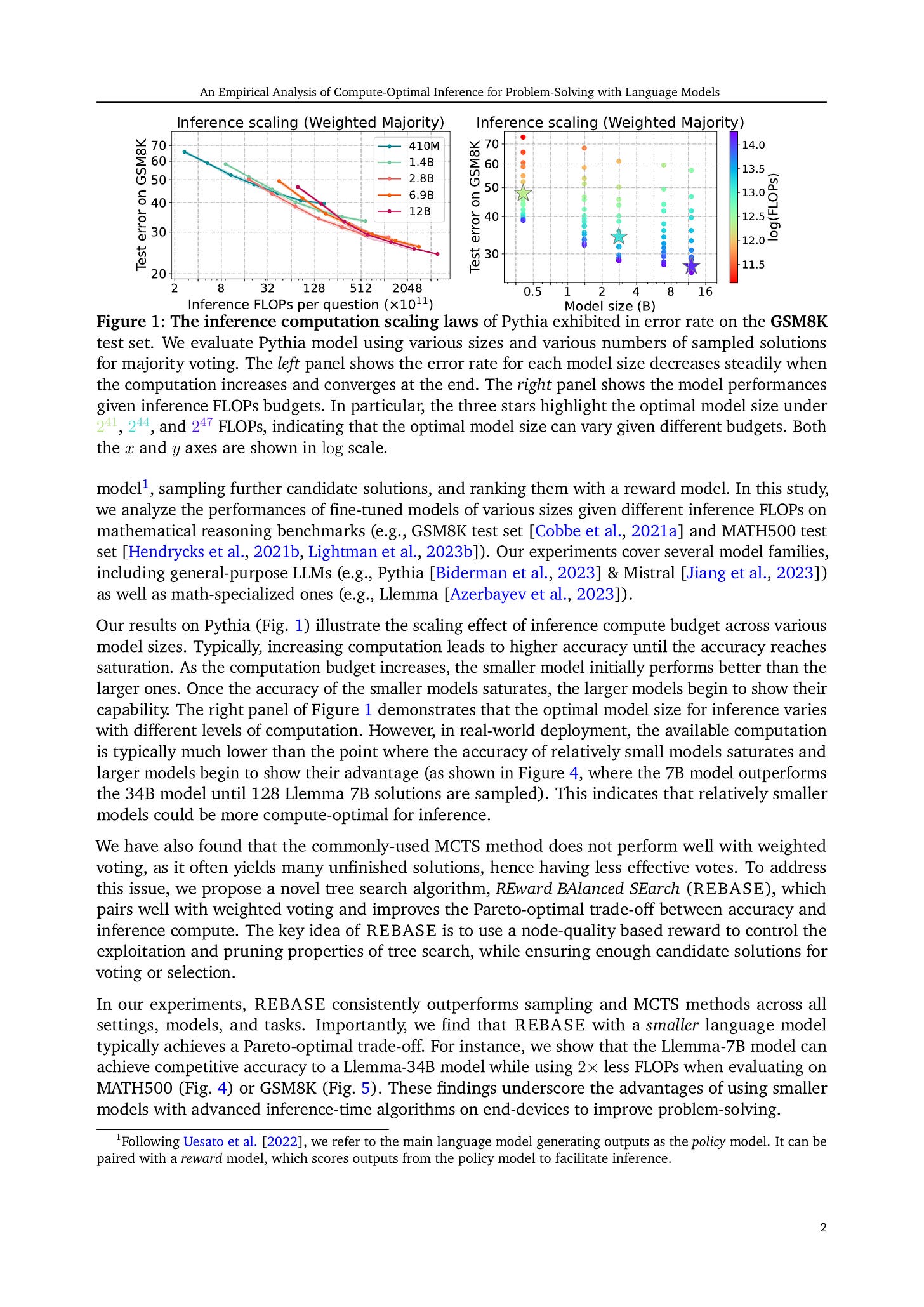

The optimal training configurations of large language models (LLMs) with respect to model sizes and compute budgets have been extensively studied. But how to optimally configure LLMs during inference has not been explored in sufficient depth. We study compute-optimal inference: designing models and inference strategies that optimally trade off additional inference-time compute for improved performance. As a first step towards understanding and designing compute-optimal inference methods, we assessed the effectiveness and computational efficiency of multiple inference strategies such as Greedy Search, Majority Voting, Best-of-N, Weighted Voting, and their variants on two different Tree Search algorithms, involving different model sizes and computational budgets. We found that a smaller language model with a novel tree search algorithm typically achieves a Pareto-optimal trade-off. These results highlight the potential benefits of deploying smaller models equipped with more sophisticated decoding algorithms in budget-constrained scenarios, e.g., on end-devices, to enhance problem-solving accuracy. For instance, we show that the Llemma-7B model can achieve accuracy competitive with the Llemma-34B model on MATH500 while using 2× fewer FLOPs. Our findings could potentially apply to any generation task with a well-defined measure of success.
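The answer-aggregation rules named in the abstract can be sketched as below, assuming each sampled solution has already been reduced to a final answer string and, for Best-of-N and Weighted Voting, scored by a reward model. The function names and the scores in the example are hypothetical, and the paper's tree-search variants are not shown.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent final answer among the samples."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers: List[str], scores: List[float]) -> str:
    """Return the answer of the single sample with the highest reward score."""
    best_index = max(range(len(answers)), key=lambda i: scores[i])
    return answers[best_index]


def weighted_vote(answers: List[str], scores: List[float]) -> str:
    """Sum reward scores per distinct answer and return the answer with the largest total."""
    totals: Dict[str, float] = defaultdict(float)
    for answer, score in zip(answers, scores):
        totals[answer] += score
    return max(totals, key=totals.get)


# Hypothetical samples: five final answers with made-up reward-model scores.
answers = ["42", "17", "42", "17", "17"]
scores = [0.9, 0.2, 0.8, 0.3, 0.1]
print(majority_vote(answers))          # 17
print(best_of_n(answers, scores))      # 42
print(weighted_vote(answers, scores))  # 42
```

Majority voting ignores the reward model entirely, so frequent but low-scoring answers can win; Weighted Voting combines frequency with reward scores, while Best-of-N trusts the single top-scored sample.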

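A scaling law for how performance changes with the amount of compute spent at inference time. It is similar to a recent paper (https://arxiv.org/abs/2407.21787), and here too (all the more so because a Reward Model is used) there appears to be a point where the gains level off. That said, it also seems that the choice of search algorithm could shift this asymptote.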
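To make the inference-compute trade-off concrete, here is a back-of-the-envelope sketch using the common approximation of about 2N FLOPs per generated token for an N-parameter dense transformer (ignoring the attention term that grows with context length). The parameter counts are nominal, the 512-token answer length is hypothetical, and this is not how the paper measures FLOPs.

```python
def generation_flops(n_params: float, tokens_per_sample: int, n_samples: int = 1) -> float:
    """Approximate forward-pass FLOPs: about 2 * N per generated token (context term ignored)."""
    return 2.0 * n_params * tokens_per_sample * n_samples


def samples_within_budget(budget_flops: float, n_params: float, tokens_per_sample: int) -> int:
    """Number of complete samples of this model that fit into the FLOPs budget."""
    return int(budget_flops // generation_flops(n_params, tokens_per_sample))


TOKENS = 512  # hypothetical answer length

# Budget of eight samples from a nominal 34B model, spent on a nominal 7B model instead.
budget = generation_flops(34e9, TOKENS, n_samples=8)
print(samples_within_budget(budget, 7e9, TOKENS))  # 38
```

Under this approximation the smaller model can afford roughly 34/7 ≈ 4.9 times as many samples at equal compute; that extra sampling budget is what voting or tree search can exploit, until the reward model's errors cause the gains to saturate.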

#search #scaling-law