2024년 8월 29일

Eagle: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders

(Min Shi, Fuxiao Liu, Shihao Wang, Shijia Liao, Subhashree Radhakrishnan, De-An Huang, Hongxu Yin, Karan Sapra, Yaser Yacoob, Humphrey Shi, Bryan Catanzaro, Andrew Tao, Jan Kautz, Zhiding Yu, Guilin Liu)

The ability to accurately interpret complex visual information is a crucial topic of multimodal large language models (MLLMs). Recent work indicates that enhanced visual perception significantly reduces hallucinations and improves performance on resolution-sensitive tasks, such as optical character recognition and document analysis. A number of recent MLLMs achieve this goal using a mixture of vision encoders. Despite their success, there is a lack of systematic comparisons and detailed ablation studies addressing critical aspects, such as expert selection and the integration of multiple vision experts. This study provides an extensive exploration of the design space for MLLMs using a mixture of vision encoders and resolutions. Our findings reveal several underlying principles common to various existing strategies, leading to a streamlined yet effective design approach. We discover that simply concatenating visual tokens from a set of complementary vision encoders is as effective as more complex mixing architectures or strategies. We additionally introduce Pre-Alignment to bridge the gap between vision-focused encoders and language tokens, enhancing model coherence. The resulting family of MLLMs, Eagle, surpasses other leading open-source models on major MLLM benchmarks. Models and code: https://github.com/NVlabs/Eagle

Vision Language 모델의 인코더, 특히 여러 인코더를 결합하는 방법에 대해서 정말 온갖 시도를 다 해봤군요. Deformable Attention 같은 쪽은 정말 클래식한 컴퓨터 비전쪽 사람만 생각할 법한 방법이죠.

#vision-language

Nexus: Specialization meets Adaptability for Efficiently Training Mixture of Experts

(Nikolas Gritsch, Qizhen Zhang, Acyr Locatelli, Sara Hooker, Ahmet Üstün)

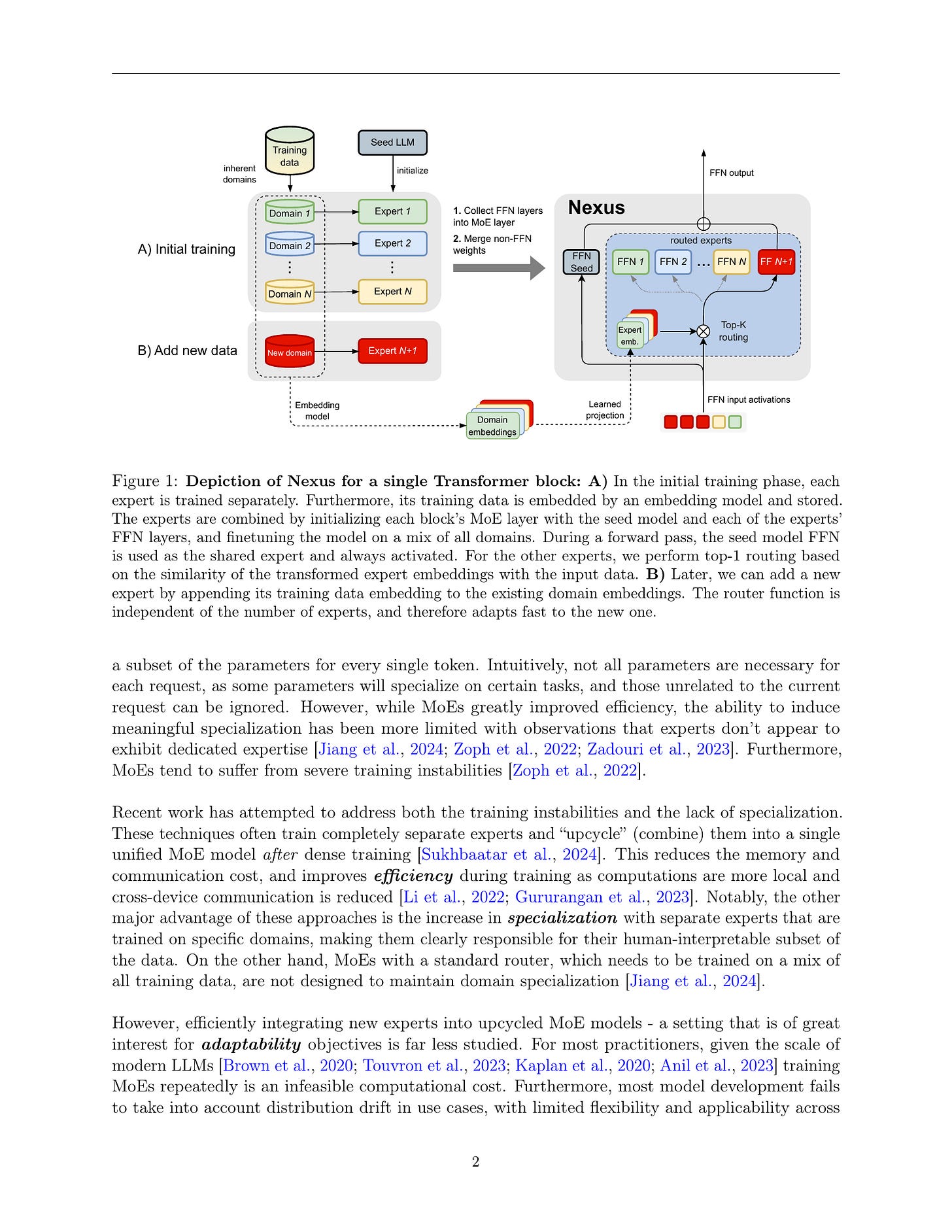

Efficiency, specialization, and adaptability to new data distributions are qualities that are hard to combine in current Large Language Models. The Mixture of Experts (MoE) architecture has been the focus of significant research because its inherent conditional computation enables such desirable properties. In this work, we focus on "upcycling" dense expert models into an MoE, aiming to improve specialization while also adding the ability to adapt to new tasks easily. We introduce Nexus, an enhanced MoE architecture with adaptive routing where the model learns to project expert embeddings from domain representations. This approach allows Nexus to flexibly add new experts after the initial upcycling through separately trained dense models, without requiring large-scale MoE training for unseen data domains. Our experiments show that Nexus achieves a relative gain of up to 2.1% over the baseline for initial upcycling, and a 18.8% relative gain for extending the MoE with a new expert by using limited finetuning data. This flexibility of Nexus is crucial to enable an open-source ecosystem where every user continuously assembles their own MoE-mix according to their needs.

도메인 특화 후 MoE로 결합하는 방법 연구. (https://arxiv.org/abs/2408.08274) DeepSeekMoE처럼 Shared Expert를 두는 것과 이후 MoE 모델을 추가 도메인에 대해 다시 확장하는 것을 탐색했군요.

사실 DeepSeekMoE처럼 Shared Expert에 더해 더 작은 Expert를 더 많이 활용하는 것이 나을 수도 있겠죠. 이쪽에 대해서는 Qwen MoE에서 시도한 FFN을 쪼개는 방법을 생각해볼 수도 있겠네요. (https://qwenlm.github.io/blog/qwen-moe/)

#moe

Auxiliary-Loss-Free Load Balancing Strategy for Mixture-of-Experts

(Lean Wang, Huazuo Gao, Chenggang Zhao, Xu Sun, Damai Dai)

For Mixture-of-Experts (MoE) models, an unbalanced expert load will lead to routing collapse or increased computational overhead. Existing methods commonly employ an auxiliary loss to encourage load balance, but a large auxiliary loss will introduce non-negligible interference gradients into training and thus impair the model performance. In order to control load balance while not producing undesired gradients during training, we propose Loss-Free Balancing, featured by an auxiliary-loss-free load balancing strategy. To be specific, before the top-K routing decision, Loss-Free Balancing will first apply an expert-wise bias to the routing scores of each expert. By dynamically updating the bias of each expert according to its recent load, Loss-Free Balancing can consistently maintain a balanced distribution of expert load. In addition, since Loss-Free Balancing does not produce any interference gradients, it also elevates the upper bound of model performance gained from MoE training. We validate the performance of Loss-Free Balancing on MoE models with up to 3B parameters trained on up to 200B tokens. Experimental results show that Loss-Free Balancing achieves both better performance and better load balance compared with traditional auxiliary-loss-controlled load balancing strategies.

Load Balancing Loss를 사용하는 대신 Expert Router의 Bias를 직접 조절해서 Balancing을 하는 방법. 이건 꽤 흥미롭네요. 테스트해보면 재미있을 듯 합니다.

#moe

UNA: Unifying Alignments of RLHF/PPO, DPO and KTO by a Generalized Implicit Reward Function

(Zhichao Wang, Bin Bi, Can Huang, Shiva Kumar Pentyala, Zixu James Zhu, Sitaram Asur, Na Claire Cheng)

An LLM is pretrained on trillions of tokens, but the pretrained LLM may still generate undesired responses. To solve this problem, alignment techniques such as RLHF, DPO and KTO are proposed. However, these alignment techniques have limitations. For example, RLHF requires training the reward model and policy separately, which is complex, time-consuming, memory intensive and unstable during training processes. DPO proposes a mapping between an optimal policy and a reward, greatly simplifying the training process of RLHF. However, it can not take full advantages of a reward model and it is limited to pairwise preference data. In this paper, we propose UNified Alignment (UNA) which unifies RLHF/PPO, DPO and KTO. Firstly, we mathematically prove that given the classical RLHF objective, the optimal policy is induced by a generalize implicit reward function. With this novel mapping between a reward model and an optimal policy, UNA can 1. unify RLHF/PPO, DPO and KTO into a supervised learning of minimizing the difference between an implicit reward and an explicit reward; 2. outperform RLHF/PPO while simplify, stabilize, speed up and reduce memory burden of RL fine-tuning process; 3. accommodate different feedback types including pairwise, binary and scalar feedback. Downstream experiments show UNA outperforms DPO, KTO and RLHF.

RL/PPO, DPO, KTO의 대통합. 결과적으로는 DPO의 Implicit Reward와 RL/PPO의 Reward Model에 의한 Explicit Reward의 차이를 Loss로 사용한다는 형태입니다.

#rlhf #alignment

LLaVA-MoD: Making LLaVA Tiny via MoE Knowledge Distillation

(Fangxun Shu, Yue Liao, Le Zhuo, Chenning Xu, Guanghao Zhang, Haonan Shi, Long Chen, Tao Zhong, Wanggui He, Siming Fu, Haoyuan Li, Bolin Li, Zhelun Yu, Si Liu, Hongsheng Li, Hao Jiang)

We introduce LLaVA-MoD, a novel framework designed to enable the efficient training of small-scale Multimodal Language Models (s-MLLM) by distilling knowledge from large-scale MLLM (l-MLLM). Our approach tackles two fundamental challenges in MLLM distillation. First, we optimize the network structure of s-MLLM by integrating a sparse Mixture of Experts (MoE) architecture into the language model, striking a balance between computational efficiency and model expressiveness. Second, we propose a progressive knowledge transfer strategy to ensure comprehensive knowledge migration. This strategy begins with mimic distillation, where we minimize the Kullback-Leibler (KL) divergence between output distributions to enable the student model to emulate the teacher network's understanding. Following this, we introduce preference distillation via Direct Preference Optimization (DPO), where the key lies in treating l-MLLM as the reference model. During this phase, the s-MLLM's ability to discriminate between superior and inferior examples is significantly enhanced beyond l-MLLM, leading to a better student that surpasses its teacher, particularly in hallucination benchmarks. Extensive experiments demonstrate that LLaVA-MoD outperforms existing models across various multimodal benchmarks while maintaining a minimal number of activated parameters and low computational costs. Remarkably, LLaVA-MoD, with only 2B activated parameters, surpasses Qwen-VL-Chat-7B by an average of 8.8% across benchmarks, using merely 0.3% of the training data and 23% trainable parameters. These results underscore LLaVA-MoD's ability to effectively distill comprehensive knowledge from its teacher model, paving the way for the development of more efficient MLLMs. The code will be available on: https://github.com/shufangxun/LLaVA-MoD.

일반적인 지식은 Dense-Dense 조합으로 Distill한 다음, 도메인 특화 지식들은 Sparse Upcycling으로 만든 MoE 모델에 Distill 한다는 아이디어. MoE를 도메인 특화와 연관짓는 것은 초기에 인기가 있었다가 사그라들었는데 최근 다시 등장하고 있네요. 물론 이는 Upcycling과 같은 재활용의 맥락과 연관되어 있긴 합니다만.

#vision-language #moe #distillation

SIaM: Self-Improving Code-Assisted Mathematical Reasoning of Large Language Models

(Dian Yu, Baolin Peng, Ye Tian, Linfeng Song, Haitao Mi, Dong Yu)

There is a growing trend of teaching large language models (LLMs) to solve mathematical problems through coding. Existing studies primarily focus on prompting powerful, closed-source models to generate seed training data followed by in-domain data augmentation, equipping LLMs with considerable capabilities for code-aided mathematical reasoning. However, continually training these models on augmented data derived from a few datasets such as GSM8K may impair their generalization abilities and restrict their effectiveness to a narrow range of question types. Conversely, the potential of improving such LLMs by leveraging large-scale, expert-written, diverse math question-answer pairs remains unexplored. To utilize these resources and tackle unique challenges such as code response assessment, we propose a novel paradigm that uses a code-based critic model to guide steps including question-code data construction, quality control, and complementary evaluation. We also explore different alignment algorithms with self-generated instruction/preference data to foster continuous improvement. Experiments across both in-domain (up to +5.7%) and out-of-domain (+4.4%) benchmarks in English and Chinese demonstrate the effectiveness of the proposed paradigm.

코드 기반으로 수학 문제를 푸는 방법에 대한 탐색. 벤치마크 데이터를 기반으로 생성하는 형태의 시도가 많이 있었지만 언제까지나 벤치마크만 파고 있을 수는 없죠.

따라서 이를 웹 데이터 기반으로 해보려는 시도입니다. 웹 데이터에서 정답을 추출하기는 어려우니 Critic 모델을 사용해서 질문, 모델이 생성한 코드, 코드 실행 결과, 정답을 주고 분류하게 합니다.

Scalable한가? 이 질문이 방법을 평가하는데 있어 꽤 유용하다고 봅니다. 이는 모델에 대해서 뿐만 아니라 데이터 구축에 대해서도 마찬가지죠.

#code #synthetic-data