August 20, 2024

LongVILA: Scaling Long-Context Visual Language Models for Long Videos

(Fuzhao Xue, Yukang Chen, Dacheng Li, Qinghao Hu, Ligeng Zhu, Xiuyu Li, Yunhao Fang, Haotian Tang, Shang Yang, Zhijian Liu, Ethan He, Hongxu Yin, Pavlo Molchanov, Jan Kautz, Linxi Fan, Yuke Zhu, Yao Lu, Song Han)

Long-context capability is critical for multi-modal foundation models. We introduce LongVILA, a full-stack solution for long-context vision-language models, including system, model training, and dataset development. On the system side, we introduce the first Multi-Modal Sequence Parallelism (MM-SP) system that enables long-context training and inference, enabling 2M context length training on 256 GPUs. MM-SP is also efficient, being 2.1x - 5.7x faster than Ring-Style Sequence Parallelism and 1.1x - 1.4x faster than Megatron-LM in text-only settings. Moreover, it seamlessly integrates with Hugging Face Transformers. For model training, we propose a five-stage pipeline comprising alignment, pre-training, context extension, and long-short joint supervised fine-tuning. Regarding datasets, we meticulously construct large-scale visual language pre-training datasets and long video instruction-following datasets to support our multi-stage training process. The full-stack solution extends the feasible frame number of VILA by a factor of 128 (from 8 to 1024 frames) and improves long video captioning score from 2.00 to 3.26 (1.6x), achieving 99.5% accuracy in 1400-frames video (274k context length) needle in a haystack. LongVILA-8B also demonstrates a consistent improvement in performance on long videos within the VideoMME benchmark as the video frames increase.

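Tackling long videos with a long-context model. This reminds me of the earlier attempt to transfer a long-context model from images to video (https://arxiv.org/abs/2406.16852). Here, too, they first do multimodal alignment on images and short videos, then extend the context length, and finally tune on long videos.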

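The interesting part is the infrastructure for training multimodal long-context models. Within a node they use DeepSpeed-Ulysses-style sequence parallelism, which splits attention heads across GPUs, and across nodes they use Ring Attention, which splits the sequence.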
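To make the two-level layout concrete, here is a minimal sketch of how ranks and shards could be arranged under such a hybrid scheme. It is an illustration only, not LongVILA's actual code: the function names `build_groups` and `shard_plan`, the node-by-node rank numbering, and the toy sizes are all assumptions. It does no communication; it only computes which ranks would share an intra-node all-to-all (Ulysses-style) and which would form an inter-node ring, plus the resulting shard sizes.

```python
def build_groups(num_nodes, gpus_per_node):
    """Return (ulysses_groups, ring_groups) as lists of global ranks.

    Assumption: ranks are numbered node by node,
    i.e. rank = node * gpus_per_node + local_rank.
    """
    # GPUs on the same node exchange heads and tokens via all-to-all.
    ulysses_groups = [
        [node * gpus_per_node + local for local in range(gpus_per_node)]
        for node in range(num_nodes)
    ]
    # GPUs with the same local rank on different nodes pass K/V blocks in a ring.
    ring_groups = [
        [node * gpus_per_node + local for node in range(num_nodes)]
        for local in range(gpus_per_node)
    ]
    return ulysses_groups, ring_groups


def shard_plan(seq_len, num_heads, num_nodes, gpus_per_node):
    """Describe per-rank shard sizes for the hypothetical hybrid layout."""
    world_size = num_nodes * gpus_per_node
    if seq_len % world_size or num_heads % gpus_per_node:
        raise ValueError("seq_len and num_heads must divide evenly")
    return {
        # Outside attention: every rank holds a sequence shard with all heads.
        "tokens_per_rank_input": seq_len // world_size,
        # After the intra-node all-to-all: the node's whole sequence chunk,
        # but only a subset of the heads.
        "tokens_per_rank_in_attention": seq_len // num_nodes,
        "heads_per_rank_in_attention": num_heads // gpus_per_node,
        # Ring attention: one K/V block per step, one block per node.
        "ring_steps": num_nodes,
    }


if __name__ == "__main__":
    print(build_groups(num_nodes=2, gpus_per_node=4))
    print(shard_plan(seq_len=1024, num_heads=32, num_nodes=2, gpus_per_node=4))
```

The point of the split is that the all-to-all stays on the fast intra-node links, while the ring only needs point-to-point transfers between nodes.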

#video-text #long-context

Performance Law of Large Language Models

(Chuhan Wu, Ruiming Tang)

Guided by the belief of the scaling law, large language models (LLMs) have achieved impressive performance in recent years. However, scaling law only gives a qualitative estimation of loss, which is influenced by various factors such as model architectures, data distributions, tokenizers, and computation precision. Thus, estimating the real performance of LLMs with different training settings rather than loss may be quite useful in practical development. In this article, we present an empirical equation named "Performance Law" to directly predict the MMLU score of an LLM, which is a widely used metric to indicate the general capability of LLMs in real-world conversations and applications. Based on only a few key hyperparameters of the LLM architecture and the size of training data, we obtain a quite accurate MMLU prediction of various LLMs with diverse sizes and architectures developed by different organizations in different years. Performance law can be used to guide the choice of LLM architecture and the effective allocation of computational resources without extensive experiments.

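Predicting MMLU from training scale. It seems like the kind of exercise everyone tries at some point. The functional form feels somewhat contrived, but the predictions look plausible. The inputs are the number of layers, the hidden embedding dimension, the FFN size, the number of training tokens, and (for MoE) the activated parameters. It is interesting that, despite all the differences between models, everything ultimately falls back to a scaling law. Perhaps the scaling law really cannot be cheated.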

#scaling-law

Transformers to SSMs: Distilling Quadratic Knowledge to Subquadratic Models

(Aviv Bick, Kevin Y. Li, Eric P. Xing, J. Zico Kolter, Albert Gu)

Transformer architectures have become a dominant paradigm for domains like language modeling but suffer in many inference settings due to their quadratic-time self-attention. Recently proposed subquadratic architectures, such as Mamba, have shown promise, but have been pretrained with substantially less computational resources than the strongest Transformer models. In this work, we present a method that is able to distill a pretrained Transformer architecture into alternative architectures such as state space models (SSMs). The key idea to our approach is that we can view both Transformers and SSMs as applying different forms of mixing matrices over the token sequences. We can thus progressively distill the Transformer architecture by matching different degrees of granularity in the SSM: first matching the mixing matrices themselves, then the hidden units at each block, and finally the end-to-end predictions. Our method, called MOHAWK, is able to distill a Mamba-2 variant based on the Phi-1.5 architecture (Phi-Mamba) using only 3B tokens and a hybrid version (Hybrid Phi-Mamba) using 5B tokens. Despite using less than 1% of the training data typically used to train models from scratch, Phi-Mamba boasts substantially stronger performance compared to all past open-source non-Transformer models. MOHAWK allows models like SSMs to leverage computational resources invested in training Transformer-based architectures, highlighting a new avenue for building such models.

Distillation from Transformers to state space models. Both attention and SSMs are viewed as sequence-mixing operations, so distillation between the two mixing matrices becomes the foundation.
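A rough sketch of the "mixing matrix" view, under stated assumptions: this is not the MOHAWK implementation. It uses a toy single-head setup in pure Python, a scalar-decay recurrence h_t = a_t h_{t-1} + B_t x_t, y_t = C_t · h_t as a stand-in for a Mamba-2-style layer, and a Frobenius distance as an assumed matching objective. All function names are hypothetical. Both layers reduce to y = M x with a lower-triangular M, which is what makes matching the two matrices directly possible.

```python
import math


def causal_attention_matrix(Q, K):
    """Row-wise softmax of Q K^T / sqrt(d) with a causal mask."""
    n, d = len(Q), len(Q[0])
    M = []
    for i in range(n):
        scores = [
            sum(q * k for q, k in zip(Q[i], K[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        M.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return M


def ssm_mixing_matrix(a, B, C):
    """M[i][j] = (C_i . B_j) * a_{j+1} * ... * a_i for j <= i, else 0."""
    n = len(a)
    M = [[0.0] * n for _ in range(n)]
    for i in range(n):
        decay = 1.0
        for j in range(i, -1, -1):
            M[i][j] = decay * sum(c * b for c, b in zip(C[i], B[j]))
            decay *= a[j]
    return M


def ssm_recurrence(a, B, C, x):
    """Same layer computed step by step, for a scalar input sequence x."""
    h = [0.0] * len(B[0])
    ys = []
    for t in range(len(x)):
        h = [a[t] * hk + bk * x[t] for hk, bk in zip(h, B[t])]
        ys.append(sum(ck * hk for ck, hk in zip(C[t], h)))
    return ys


def frobenius_distance(M1, M2):
    """Assumed matching objective: ||M1 - M2||_F."""
    return math.sqrt(
        sum((p - q) ** 2 for r1, r2 in zip(M1, M2) for p, q in zip(r1, r2))
    )
```

In a distillation setting, the attention matrix would come from the frozen teacher and the SSM parameters (`a`, `B`, `C` here) would be trained to shrink the distance.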

That said, combining local and global attention seems to be settling in as the standard way to reduce attention's inference cost at long context, so the incentive to adopt SSMs for inference performance seems to have weakened.

#distillation #state-space-model

SkyScript-100M: 1,000,000,000 Pairs of Scripts and Shooting Scripts for Short Drama

(Jing Tang, Quanlu Jia, Yuqiang Xie, Zeyu Gong, Xiang Wen, Jiayi Zhang, Yalong Guo, Guibin Chen, Jiangping Yang)

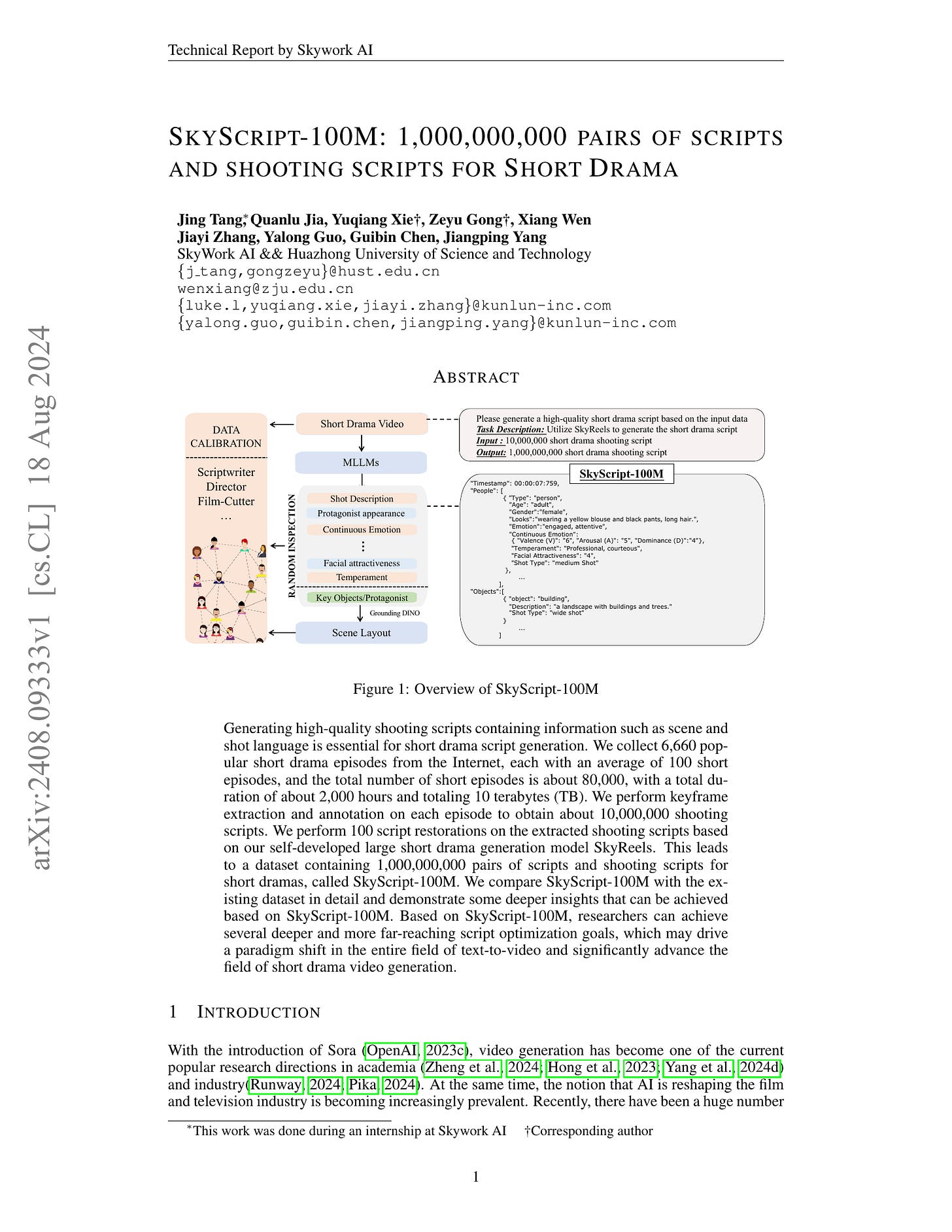

Generating high-quality shooting scripts containing information such as scene and shot language is essential for short drama script generation. We collect 6,660 popular short drama episodes from the Internet, each with an average of 100 short episodes, and the total number of short episodes is about 80,000, with a total duration of about 2,000 hours and totaling 10 terabytes (TB). We perform keyframe extraction and annotation on each episode to obtain about 10,000,000 shooting scripts. We perform 100 script restorations on the extracted shooting scripts based on our self-developed large short drama generation model SkyReels. This leads to a dataset containing 1,000,000,000 pairs of scripts and shooting scripts for short dramas, called SkyScript-100M. We compare SkyScript-100M with the existing dataset in detail and demonstrate some deeper insights that can be achieved based on SkyScript-100M. Based on SkyScript-100M, researchers can achieve several deeper and more far-reaching script optimization goals, which may drive a paradigm shift in the entire field of text-to-video and significantly advance the field of short drama video generation. The data and code are available at https://github.com/vaew/SkyScript-100M.

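A drama script dataset. The scripts describe each scene along with the camera, the placement of each object, and shooting information. It could be useful both for extracting information from video and as conditioning input for video generation. There seems to be a lot of interest in this problem in connection with video generation.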

#dataset