2024년 8월 14일

Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers

(Zhenting Qi, Mingyuan Ma, Jiahang Xu, Li Lyna Zhang, Fan Yang, Mao Yang)

This paper introduces rStar, a self-play mutual reasoning approach that significantly improves reasoning capabilities of small language models (SLMs) without fine-tuning or superior models. rStar decouples reasoning into a self-play mutual generation-discrimination process. First, a target SLM augments the Monte Carlo Tree Search (MCTS) with a rich set of human-like reasoning actions to construct higher quality reasoning trajectories. Next, another SLM, with capabilities similar to the target SLM, acts as a discriminator to verify each trajectory generated by the target SLM. The mutually agreed reasoning trajectories are considered mutual consistent, thus are more likely to be correct. Extensive experiments across five SLMs demonstrate rStar can effectively solve diverse reasoning problems, including GSM8K, GSM-Hard, MATH, SVAMP, and StrategyQA. Remarkably, rStar boosts GSM8K accuracy from 12.51% to 63.91% for LLaMA2-7B, from 36.46% to 81.88% for Mistral-7B, from 74.53% to 91.13% for LLaMA3-8B-Instruct. Code will be available at https://github.com/zhentingqi/rStar.

LLM + MCTS. 각 단계에서 LLM이 취할 수 있는 액션을 다양화하고, Self Evaluation은 한계가 있다고 판단해서 다시 정답 여부를 Reward로 사용했군요. 대신 정답 선택을 다른 LLM을 사용해 Trajectory의 초기 부분을 주고 나머지를 생성시켰을 때 같은 정답으로 이어지는 점검하는 방법을 사용했습니다.

#search

AquilaMoE: Efficient Training for MoE Models with Scale-Up and Scale-Out Strategies

(Bo-Wen Zhang, Liangdong Wang, Ye Yuan, Jijie Li, Shuhao Gu, Mengdi Zhao, Xinya Wu, Guang Liu, Chengwei Wu, Hanyu Zhao, Li Du, Yiming Ju, Quanyue Ma, Yulong Ao, Yingli Zhao, Songhe Zhu, Zhou Cao, Dong Liang, Yonghua Lin, Ming Zhang, Shunfei Wang, Yanxin Zhou, Min Ye, Xuekai Chen, Xinyang Yu, Xiangjun Huang, Jian Yang)

In recent years, with the rapid application of large language models across various fields, the scale of these models has gradually increased, and the resources required for their pre-training have grown exponentially. Training an LLM from scratch will cost a lot of computation resources while scaling up from a smaller model is a more efficient approach and has thus attracted significant attention. In this paper, we present AquilaMoE, a cutting-edge bilingual 816B Mixture of Experts (MoE) language model that has 8 experts with 16 billion parameters each and is developed using an innovative training methodology called EfficientScale. This approach optimizes performance while minimizing data requirements through a two-stage process. The first stage, termed Scale-Up, initializes the larger model with weights from a pre-trained smaller model, enabling substantial knowledge transfer and continuous pretraining with significantly less data. The second stage, Scale-Out, uses a pre-trained dense model to initialize the MoE experts, further enhancing knowledge transfer and performance. Extensive validation experiments on 1.8B and 7B models compared various initialization schemes, achieving models that maintain and reduce loss during continuous pretraining. Utilizing the optimal scheme, we successfully trained a 16B model and subsequently the 816B AquilaMoE model, demonstrating significant improvements in performance and training efficiency.

모델 재사용을 통해 학습을 가속하고자 한 시도. Sparse Upcycling에서 흔히 보고되듯 이런 방법은 오래 학습하면 효과가 감소하거나 오히려 From Scratch 학습보다 손해를 보는 경우도 있죠. 그에 대한 고려가 늘 필요합니다.

#llm #efficient-training

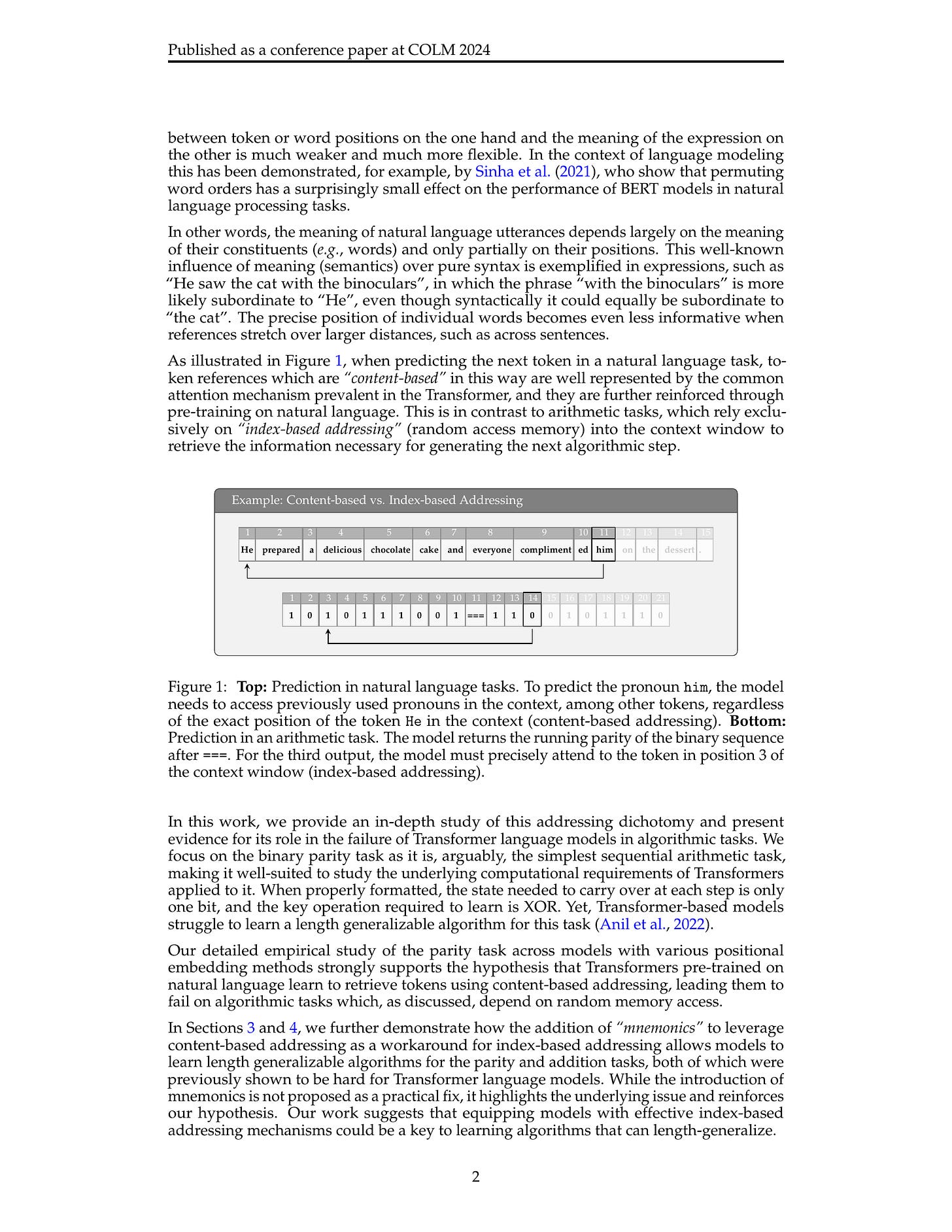

Your Context Is Not an Array: Unveiling Random Access Limitations in Transformers

(MohammadReza Ebrahimi, Sunny Panchal, Roland Memisevic)

Despite their recent successes, Transformer-based large language models show surprising failure modes. A well-known example of such failure modes is their inability to length-generalize: solving problem instances at inference time that are longer than those seen during training. In this work, we further explore the root cause of this failure by performing a detailed analysis of model behaviors on the simple parity task. Our analysis suggests that length generalization failures are intricately related to a model's inability to perform random memory accesses within its context window. We present supporting evidence for this hypothesis by demonstrating the effectiveness of methodologies that circumvent the need for indexing or that enable random token access indirectly, through content-based addressing. We further show where and how the failure to perform random memory access manifests through attention map visualizations.

Transformer의 Length Extrapolation 문제가 Attention이 Content 기반 Addressing은 가능하지만 Index/Location 기반 Addressing은 어렵다는 점에서 기인한다는 아이디어. 따라서 Location 기반 Addressing을 쉽게 할 수 있도록 토큰을 끼워넣거나 시퀀스를 재배치하면 Length Extrapolation이 발생할 수 있다고 합니다.

Location 기반 Addressing이라는 표현이 말해주듯 Length Extrapolation이 결국 Positional Encoding과 관련된 문제라는 것과 연결되는 것 같네요. Location 기반 Addressing이라고 하니 Neural Turing Machine도 떠오르는군요.

#transformer #positional-encoding #extrapolation

CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer

(Zhuoyi Yang, Jiayan Teng, Wendi Zheng, Ming Ding, Shiyu Huang, Jiazheng Xu, Yuanming Yang, Wenyi Hong, Xiaohan Zhang, Guanyu Feng, Da Yin, Xiaotao Gu, Yuxuan Zhang, Weihan Wang, Yean Cheng, Ting Liu, Bin Xu, Yuxiao Dong, Jie Tang)

We introduce CogVideoX, a large-scale diffusion transformer model designed for generating videos based on text prompts. To efficently model video data, we propose to levearge a 3D Variational Autoencoder (VAE) to compress videos along both spatial and temporal dimensions. To improve the text-video alignment, we propose an expert transformer with the expert adaptive LayerNorm to facilitate the deep fusion between the two modalities. By employing a progressive training technique, CogVideoX is adept at producing coherent, long-duration videos characterized by significant motions. In addition, we develop an effective text-video data processing pipeline that includes various data preprocessing strategies and a video captioning method. It significantly helps enhance the performance of CogVideoX, improving both generation quality and semantic alignment. Results show that CogVideoX demonstrates state-of-the-art performance across both multiple machine metrics and human evaluations. The model weights of both the 3D Causal VAE and CogVideoX are publicly available at https://github.com/THUDM/CogVideo.

Text to Video 모델. 일단 가능하다는 것만 알려지면 정말 빠르게 발전하는군요.

#text-to-video

Speculative Diffusion Decoding: Accelerating Language Generation through Diffusion

(Jacob K Christopher, Brian R Bartoldson, Bhavya Kailkhura, Ferdinando Fioretto)

Speculative decoding has emerged as a widely adopted method to accelerate large language model inference without sacrificing the quality of the model outputs. While this technique has facilitated notable speed improvements by enabling parallel sequence verification, its efficiency remains inherently limited by the reliance on incremental token generation in existing draft models. To overcome this limitation, this paper proposes an adaptation of speculative decoding which uses discrete diffusion models to generate draft sequences. This allows parallelization of both the drafting and verification steps, providing significant speed-ups to the inference process. Our proposed approach, \textit{Speculative Diffusion Decoding (SpecDiff)}, is validated on standard language generation benchmarks and empirically demonstrated to provide a \textbf{up to 8.7x speed-up over standard generation processes and up to 2.5x speed-up over existing speculative decoding approaches.}

Speculative Decoding에서 Drafter를 Diffusion LM으로 교체한 시도. Drafter도 병렬로 디코딩을 할 수 있으면 좋고, Verification은 Autoregressive LM으로 하면 되니 성능 문제가 없다는 아이디어군요. 신박하네요.

#efficiency