2024년 7월 4일

Diffusion Forcing: Next-token Prediction Meets Full-Sequence Diffusion

(Boyuan Chen, Diego Marti Monso, Yilun Du, Max Simchowitz, Russ Tedrake, Vincent Sitzmann)

This paper presents Diffusion Forcing, a new training paradigm where a diffusion model is trained to denoise a set of tokens with independent per-token noise levels. We apply Diffusion Forcing to sequence generative modeling by training a causal next-token prediction model to generate one or several future tokens without fully diffusing past ones. Our approach is shown to combine the strengths of next-token prediction models, such as variable-length generation, with the strengths of full-sequence diffusion models, such as the ability to guide sampling to desirable trajectories. Our method offers a range of additional capabilities, such as (1) rolling-out sequences of continuous tokens, such as video, with lengths past the training horizon, where baselines diverge and (2) new sampling and guiding schemes that uniquely profit from Diffusion Forcing's variable-horizon and causal architecture, and which lead to marked performance gains in decision-making and planning tasks. In addition to its empirical success, our method is proven to optimize a variational lower bound on the likelihoods of all subsequences of tokens drawn from the true joint distribution. Project website: https://boyuan.space/diffusion-forcing/

Diffusion과 Autoregressive 모델의 결합으로 두 모델이 장점을 합칠 수 있다는 아이디어. 여기서의 특징은 한 시퀀스 내의 각 토큰마다 서로 다른 노이즈 레벨을 적용했다는 것이네요.

Autoregressive 모델을 통해 많은 모달리티를 통합하고 싶은 시점에서 Diffusion Loss를 사용해 Autoregressive 모델의 한계를 커버하는 것은 흥미로운 접근인 것 같습니다. (https://arxiv.org/abs/2403.05196, https://arxiv.org/abs/2406.11838)

#diffusion #autoregressive-model

Let the Expert Stick to His Last: Expert-Specialized Fine-Tuning for Sparse Architectural Large Language Models

(Zihan Wang, Deli Chen, Damai Dai, Runxin Xu, Zhuoshu Li, Y. Wu)

Parameter-efficient fine-tuning (PEFT) is crucial for customizing Large Language Models (LLMs) with constrained resources. Although there have been various PEFT methods for dense-architecture LLMs, PEFT for sparse-architecture LLMs is still underexplored. In this work, we study the PEFT method for LLMs with the Mixture-of-Experts (MoE) architecture and the contents of this work are mainly threefold: (1) We investigate the dispersion degree of the activated experts in customized tasks, and found that the routing distribution for a specific task tends to be highly concentrated, while the distribution of activated experts varies significantly across different tasks. (2) We propose Expert-Specialized Fine-Tuning, or ESFT, which tunes the experts most relevant to downstream tasks while freezing the other experts and modules; experimental results demonstrate that our method not only improves the tuning efficiency, but also matches or even surpasses the performance of full-parameter fine-tuning. (3) We further analyze the impact of the MoE architecture on expert-specialized fine-tuning. We find that MoE models with finer-grained experts are more advantageous in selecting the combination of experts that are most relevant to downstream tasks, thereby enhancing both the training efficiency and effectiveness.

MoE 모델의 Expert Activation이 각각 특정한 과제에 집중되어 있다는 것을 발견. 과제에 대해 Activate 되는 Expert를 골라 그 Expert만 학습시키는 것으로 PEFT를 할 수 있다는 아이디어. 공유 Expert가 있고 작은 Expert를 여러 개 사용하는 DeepSeek 스타일의 MoE의 특성일 수도 있을 것 같습니다. (Mixtral 같은 경우 Expert가 서로 유사하죠. https://arxiv.org/abs/2406.18219)

#moe #efficient-training #multitask

TheoremLlama: Transforming General-Purpose LLMs into Lean4 Experts

(Ruida Wang, Jipeng Zhang, Yizhen Jia, Rui Pan, Shizhe Diao, Renjie Pi, Tong Zhang)

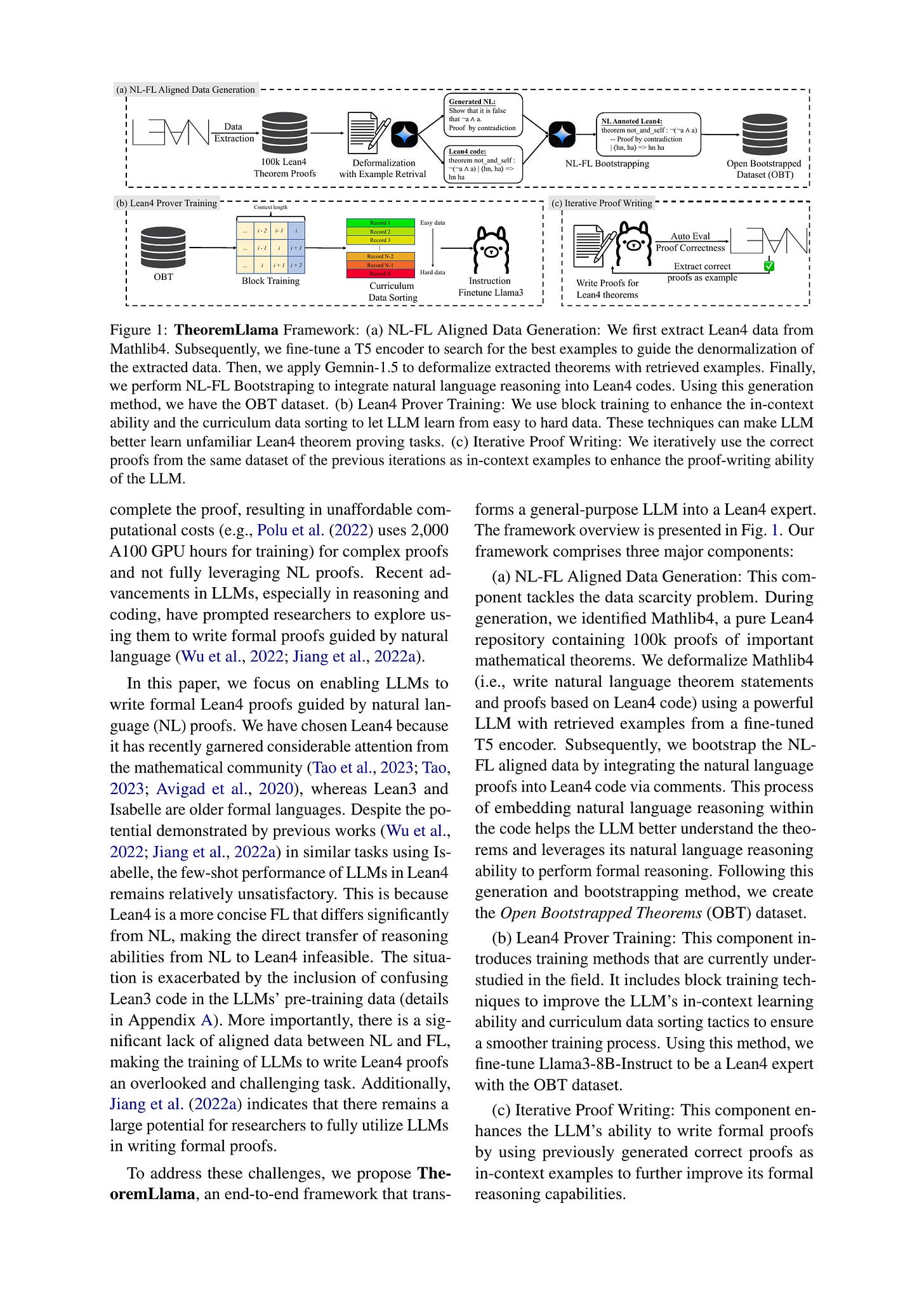

Proving mathematical theorems using computer-verifiable formal languages like Lean significantly impacts mathematical reasoning. One approach to formal theorem proving involves generating complete proofs using Large Language Models (LLMs) based on Natural Language (NL) proofs. Similar methods have shown promising results in code generation. However, most modern LLMs exhibit suboptimal performance due to the scarcity of aligned NL and Formal Language (FL) theorem-proving data. This scarcity results in a paucity of methodologies for training LLMs and techniques to fully utilize their capabilities in composing formal proofs. To address the challenges, this paper proposes TheoremLlama, an end-to-end framework to train a general-purpose LLM to become a Lean4 expert. This framework encompasses NL-FL aligned dataset generation methods, training approaches for the LLM formal theorem prover, and techniques for LLM Lean4 proof writing. Using the dataset generation method, we provide Open Bootstrapped Theorems (OBT), an NL-FL aligned and bootstrapped dataset. A key innovation in this framework is the NL-FL bootstrapping method, where NL proofs are integrated into Lean4 code for training datasets, leveraging the NL reasoning ability of LLMs for formal reasoning. The TheoremLlama framework achieves cumulative accuracies of 36.48% and 33.61% on MiniF2F-Valid and Test datasets respectively, surpassing the GPT-4 baseline of 22.95% and 25.41%. We have also open-sourced our model checkpoints and generated dataset, and will soon make all the code publicly available.

요즘 유행하는 Lean 4를 사용한 수학 증명 능력 학습. Lean 4의 Mathlib4에 포함된 정의와 증명을 사용해 형식 언어를 자연어로 Deformalization을 하고 Lean 코드에 대한 설명을 자연어 주석으로 붙이도록 합니다.

이를 통해 Lean으로 증명을 작성하는 Instruction Tuning을 하고 샘플을 생성한 다음 Lean으로 검증한다는 흐름이군요.

Lean을 사용하는 연구는 결국 Formalization 문제가 가장 중요한 문제가 되고 있는 것 같긴 합니다. 수학자들이 참여하는 Formalization 작업이 수학계에 대해서나 AI에서나 의미가 있을 것 같다는 생각이 드네요. 물론 굉장히 어려운 작업이라고 하긴 합니다만.

#search #math