July 19, 2024

GPT-4o mini: advancing cost-efficient intelligence

(OpenAI)

GPT-4o mini is out. It is reportedly both stronger and cheaper than Gemini 1.5 Flash and Claude Haiku ($0.15 per 1M input tokens, $0.60 per 1M output tokens).
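To make the per-million-token pricing concrete, here is a tiny cost calculator using the prices quoted above. The workload (10,000 requests of 2,000 input and 500 output tokens each) is a made-up example, and real bills may include other factors not modeled here.

```python
# Per-1M-token prices for GPT-4o mini as quoted above (USD).
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted per-1M-token prices."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, each 2,000 tokens in and 500 tokens out.
    per_request = request_cost(2_000, 500)
    print(f"per request: ${per_request:.6f}")
    print(f"10k requests: ${per_request * 10_000:.2f}")
```

For this workload the input and output sides cost the same ($0.0003 each per request), so the total comes to about $0.0006 per request, or roughly $6 for 10,000 requests.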

https://x.com/karpathy/status/1814038096218083497

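Honestly, what I'm more curious about than performance or price is how these lightweight models are actually being built. The general pattern seems to be: build a large model first, then use it to make a smaller one. But much remains undisclosed about how exactly the large model is used. I suspect synthetic data is being used heavily, going beyond basic methods like knowledge distillation.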
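For reference, the "basic" knowledge distillation mentioned above is usually the soft-target loss from Hinton et al. (2015): the student matches the teacher's temperature-softened output distribution. Nothing here reflects how GPT-4o mini was actually built (that is undisclosed); it is a minimal pure-Python sketch of the textbook loss, with the temperature of 2.0 chosen arbitrarily.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened distributions,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss(teacher, teacher))          # identical student: zero loss
    print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # mismatched student: positive loss
```

In practice this term is typically mixed with the ordinary cross-entropy on hard labels; the synthetic-data approaches speculated about above would go beyond matching logits like this.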

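Karpathy's framing is that pretraining on web data is hard because knowledge and reasoning are entangled with each other. So the role of synthetic data would presumably be to separate the two, and perhaps part of that is generating what is left unsaid between the lines.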

#llm #synthetic-data

Mistral NeMo

(Mistral AI)

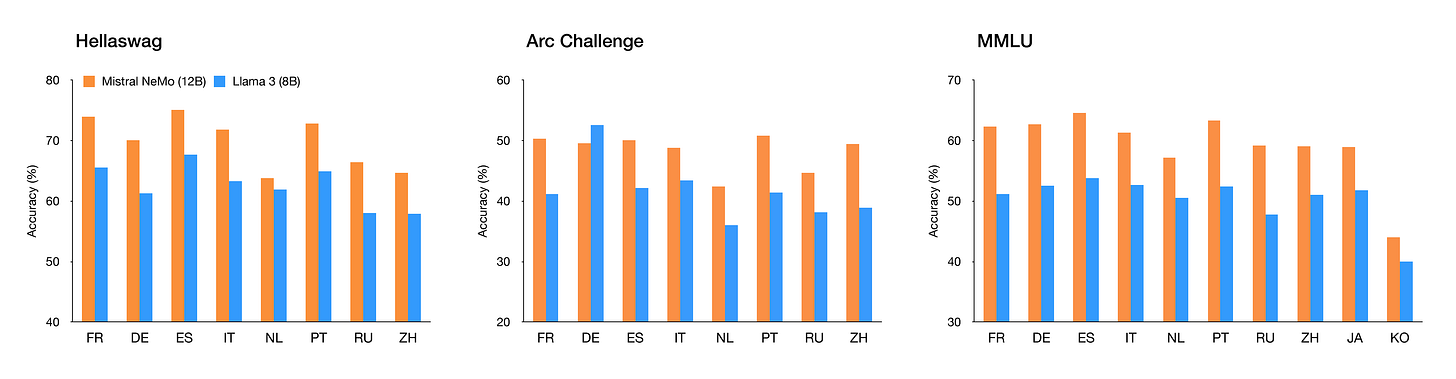

Mistral's 12B multilingual model. Korean and Japanese are now included, too (though the Korean performance doesn't seem all that good). NeMo appears in the name, probably because of the collaboration with NVIDIA.

It adopts a Tiktoken-based multilingual tokenizer, supports function calling, and has a 128K context window. That's the gist of it.

Scaling Laws with Vocabulary: Larger Models Deserve Larger Vocabularies

(Chaofan Tao, Qian Liu, Longxu Dou, Niklas Muennighoff, Zhongwei Wan, Ping Luo, Min Lin, Ngai Wong)

Research on scaling large language models (LLMs) has primarily focused on model parameters and training data size, overlooking the role of vocabulary size. Intuitively, larger vocabularies enable more efficient tokenization by representing sentences with fewer tokens, but they also increase the risk of under-fitting representations for rare tokens. We investigate how vocabulary size impacts LLM scaling laws by training models ranging from 33M to 3B parameters on up to 500B characters with various vocabulary configurations. We propose three complementary approaches for predicting the compute-optimal vocabulary size: IsoFLOPs analysis, derivative estimation, and parametric fit of the loss function. Our approaches converge on the same result that the optimal vocabulary size depends on the available compute budget and that larger models deserve larger vocabularies. However, most LLMs use vocabularies that are too small. For example, we predict that the optimal vocabulary size of Llama2-70B should have been at least 216K, 7 times larger than its vocabulary of 32K. We validate our predictions empirically by training models with 3B parameters across different FLOPs budgets. Adopting our predicted optimal vocabulary size consistently improves downstream performance over commonly used vocabulary sizes. By increasing the vocabulary size from the conventional 32K to 43K, we improve performance on ARC-Challenge from 29.1 to 32.0 with the same 2.3e21 FLOPs. Our work emphasizes the necessity of jointly considering model parameters and vocabulary size for efficient scaling.
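To get a feel for what the Llama2-70B prediction means in parameters, the arithmetic below counts the vocabulary-dependent weights (input embedding plus output projection, each V × d). It assumes a model width of d = 8192 and untied input/output matrices, which I believe matches Llama 2 70B; it is a back-of-the-envelope count, not the paper's parametric fit.

```python
HIDDEN_DIM = 8192  # assumed model width (d) for Llama 2 70B


def vocab_params(vocab_size: int, hidden_dim: int, tied: bool = False) -> int:
    """Parameters that scale with vocabulary size: the V x d input embedding,
    plus a separate V x d output projection unless the two are tied."""
    per_matrix = vocab_size * hidden_dim
    return per_matrix if tied else 2 * per_matrix


if __name__ == "__main__":
    current = vocab_params(32_000, HIDDEN_DIM)      # vocabulary Llama 2 actually uses
    predicted = vocab_params(216_000, HIDDEN_DIM)   # lower bound predicted by the paper
    print(f"32K vocab:  {current:,} params")
    print(f"216K vocab: {predicted:,} params")
    print(f"ratio: {216_000 / 32_000:.2f}x")
```

This gives 524,288,000 parameters at 32K versus 3,538,944,000 at 216K. Roughly 3B extra parameters is only a few percent of a 70B model, which makes the "larger models deserve larger vocabularies" claim intuitive: the relative cost of a bigger vocabulary shrinks as the rest of the model grows. The 216/32 = 6.75 ratio is what the abstract rounds to "7 times".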

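A scaling law for the optimal vocabulary size as a function of compute: the more compute you invest, the larger the vocabulary should be. The paper doesn't mention it, but I suspect this phenomenon is related to issues like the softmax bottleneck.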
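The softmax bottleneck (Yang et al., 2018) is the observation that logits are computed as H Wᵀ, with H the context hidden states and W the output embedding, so their rank is capped by the hidden size d; the row-wise log-softmax adds at most one more rank. The NumPy sketch below only demonstrates that rank bound with random matrices; the link to vocabulary scaling is my own speculation, not something the paper claims. The sizes (200 contexts, 1000 tokens, d = 64) are arbitrary.

```python
import numpy as np


def softmax_bottleneck_ranks(n_contexts=200, vocab_size=1000, hidden_dim=64, seed=0):
    """Return (rank of logits, rank of log-probabilities) for random H and W."""
    rng = np.random.default_rng(seed)
    H = rng.standard_normal((n_contexts, hidden_dim))  # one hidden state per context
    W = rng.standard_normal((vocab_size, hidden_dim))  # output embedding matrix
    logits = H @ W.T                                    # shape (n_contexts, vocab_size)
    # Row-wise log-softmax: subtracting each row's log-sum-exp is a rank-1 correction.
    row_max = logits.max(axis=1, keepdims=True)
    lse = row_max + np.log(np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_probs = logits - lse
    return np.linalg.matrix_rank(logits), np.linalg.matrix_rank(log_probs)


if __name__ == "__main__":
    logit_rank, logprob_rank = softmax_bottleneck_ranks()
    print(logit_rank, logprob_rank)
```

However large the vocabulary, the 200 × 1000 logit matrix here has rank 64, and the log-probability matrix has rank at most d + 1 = 65, so the model cannot express arbitrary next-token distributions across many contexts.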

#scaling-law #tokenizer

Weak-to-Strong Reasoning

(Yuqing Yang, Yan Ma, Pengfei Liu)

When large language models (LLMs) exceed human-level capabilities, it becomes increasingly challenging to provide full-scale and accurate supervision for these models. Weak-to-strong learning, which leverages a less capable model to unlock the latent abilities of a stronger model, proves valuable in this context. Yet, the efficacy of this approach for complex reasoning tasks is still untested. Furthermore, tackling reasoning tasks under the weak-to-strong setting currently lacks efficient methods to avoid blindly imitating the weak supervisor including its errors. In this paper, we introduce a progressive learning framework that enables the strong model to autonomously refine its training data, without requiring input from either a more advanced model or human-annotated data. This framework begins with supervised fine-tuning on a selective small but high-quality dataset, followed by preference optimization on contrastive samples identified by the strong model itself. Extensive experiments on the GSM8K and MATH datasets demonstrate that our method significantly enhances the reasoning capabilities of Llama2-70b using three separate weak models. This method is further validated in a forward-looking experimental setup, where Llama3-8b-instruct effectively supervises Llama3-70b on the highly challenging OlympicArena dataset. This work paves the way for a more scalable and sophisticated strategy to enhance AI reasoning powers. All relevant code and resources are available at https://github.com/GAIR-NLP/weak-to-strong-reasoning.

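A method for improving a strong model using a weak one. The weak model generates responses, and these are given to the strong model as in-context examples so it can generate its own responses. Cases where the weak and strong models arrive at the same final answer are used for fine-tuning. This is repeated, and then the strong model's confidence is used to build response pairs for DPO. Interesting.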
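The data flow summarized above can be sketched as plain Python over precomputed samples. This is a toy, not the authors' code (their implementation is in the linked repository): model calls and answer extraction are assumed to have happened already, and `Sample`, `agreement_filter`, and `confidence_pair` are hypothetical names. Treating "confidence" as the majority answer's vote share among the strong model's own samples is my assumption about how it might be measured; the DPO loss itself is the standard formulation.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Sample:
    text: str    # full reasoning chain
    answer: str  # extracted final answer (extraction assumed already done)


def agreement_filter(weak: List[Sample], strong: List[Sample]) -> List[Sample]:
    """Stage 1 (simplified): for each question, keep the strong model's in-context
    response only if its final answer matches the weak model's answer."""
    return [s for w, s in zip(weak, strong) if w.answer == s.answer]


def confidence_pair(samples: List[Sample], threshold: float = 0.6) -> Optional[Tuple[Sample, Sample]]:
    """Stage 2 (assumed reading): if the majority answer's vote share among the
    strong model's samples clears the threshold, pair a majority-answer sample
    (chosen) with a dissenting one (rejected)."""
    counts = Counter(s.answer for s in samples)
    top_answer, top_count = counts.most_common(1)[0]
    if top_count / len(samples) < threshold:
        return None  # not confident enough to label this question
    chosen = next(s for s in samples if s.answer == top_answer)
    rejected = next((s for s in samples if s.answer != top_answer), None)
    return (chosen, rejected) if rejected is not None else None


def dpo_loss(pi_chosen: float, pi_rejected: float, ref_chosen: float,
             ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss -log(sigmoid(margin)) for one pair of sequence log-probs,
    computed stably as softplus(-margin)."""
    margin = beta * ((pi_chosen - ref_chosen) - (pi_rejected - ref_rejected))
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    samples = [Sample("...", "4"), Sample("...", "4"), Sample("...", "4"), Sample("...", "5")]
    pair = confidence_pair(samples)
    print(pair[0].answer, pair[1].answer)
    print(dpo_loss(-10.0, -12.0, -11.0, -11.0))
```

The iteration the summary mentions (repeating stage 1 with the improved strong model) is left out; it would simply rerun the generation and `agreement_filter` with updated models.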

#alignment