2024년 7월 11일

PaliGemma: A versatile 3B VLM for transfer

(Lucas Beyer, Andreas Steiner, André Susano Pinto, Alexander Kolesnikov, Xiao Wang, Daniel Salz, Maxim Neumann, Ibrahim Alabdulmohsin, Michael Tschannen, Emanuele Bugliarello, Thomas Unterthiner, Daniel Keysers, Skanda Koppula, Fangyu Liu, Adam Grycner, Alexey Gritsenko, Neil Houlsby, Manoj Kumar, Keran Rong, Julian Eisenschlos, Rishabh Kabra, Matthias Bauer, Matko Bošnjak, Xi Chen, Matthias Minderer, Paul Voigtlaender, Ioana Bica, Ivana Balazevic, Joan Puigcerver, Pinelopi Papalampidi, Olivier Henaff, Xi Xiong, Radu Soricut, Jeremiah Harmsen, Xiaohua Zhai)

PaliGemma is an open Vision-Language Model (VLM) that is based on the SigLIP-So400m vision encoder and the Gemma-2B language model. It is trained to be a versatile and broadly knowledgeable base model that is effective to transfer. It achieves strong performance on a wide variety of open-world tasks. We evaluate PaliGemma on almost 40 diverse tasks including standard VLM benchmarks, but also more specialized tasks such as remote-sensing and segmentation.

PaliGemma의 테크니컬 리포트가 나왔군요. SigLip 400M + Gemma 2B의 조합입니다.

이미지와 Prefix에 대해 Bidirectional Attention을 사용한 PrefixLM, 인코더도 같이 학습하되 인코더에 대해서는 더 긴 LR Warmup을 사용, 크롭 없이 이미지 해상도 확장 등이 특징이군요. 그리고 여전히 Inv-Sqrt Infinite LR 스케줄을 쓰고 있네요.

#vision-language

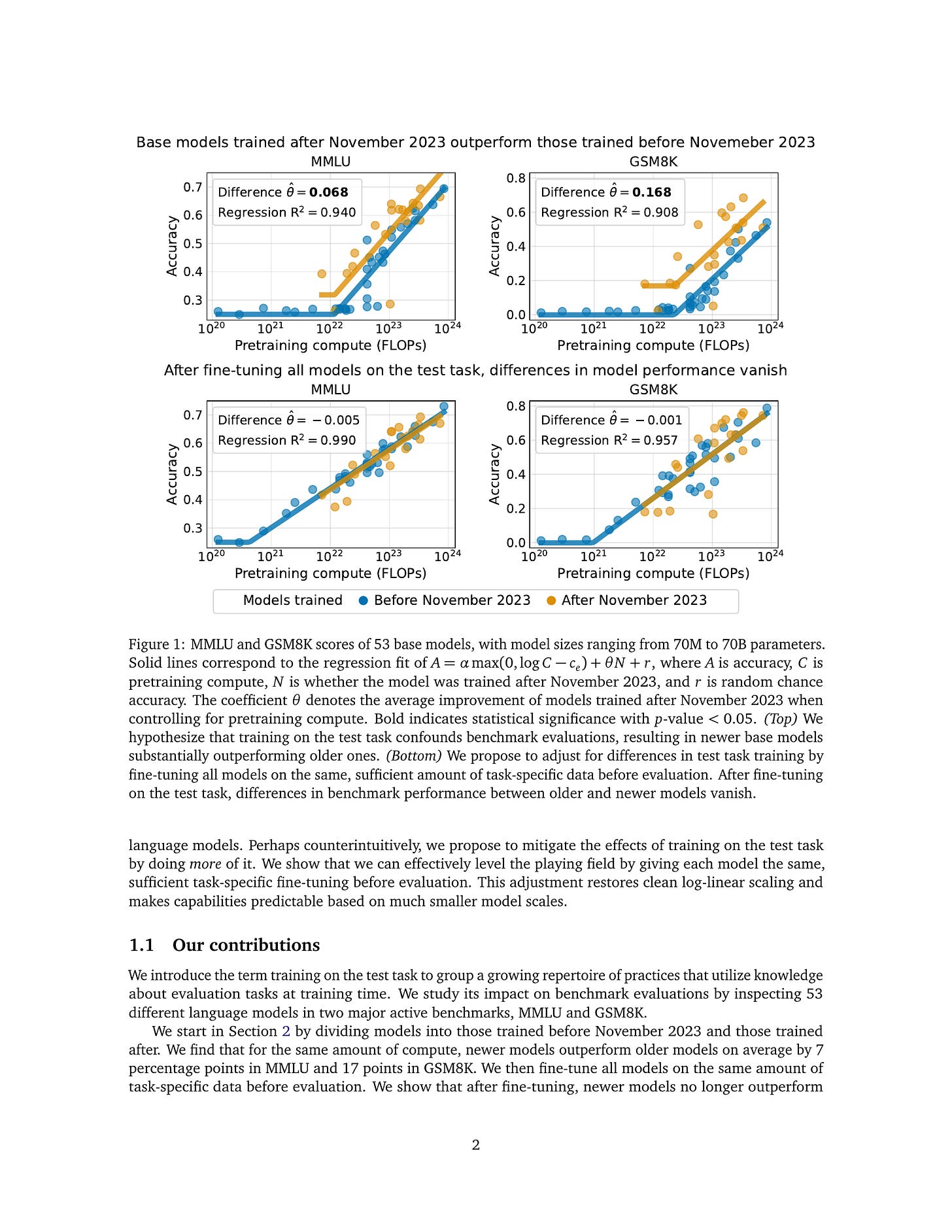

Training on the Test Task Confounds Evaluation and Emergence

(Ricardo Dominguez-Olmedo, Florian E. Dorner, Moritz Hardt)

We study a fundamental problem in the evaluation of large language models that we call training on the test task. Unlike wrongful practices like training on the test data, leakage, or data contamination, training on the test task is not a malpractice. Rather, the term describes a growing set of techniques to include task-relevant data in the pretraining stage of a language model. We demonstrate that training on the test task confounds both relative model evaluations and claims about emergent capabilities. We argue that the seeming superiority of one model family over another may be explained by a different degree of training on the test task. To this end, we propose an effective method to adjust for training on the test task by fine-tuning each model under comparison on the same task-relevant data before evaluation. We then show that instances of emergent behavior largely vanish once we adjust for training on the test task. This also applies to reported instances of emergent behavior that cannot be explained by the choice of evaluation metric. Our work promotes a new perspective on the evaluation of large language models with broad implications for benchmarking and the study of emergent capabilities.

2023년 11월을 기점으로 이전 모델과 이후 모델의 벤치마크 성능 차이가 크게 나타나는데, 이전 모델들을 벤치마크 학습셋으로 학습시키면 이 차이가 사라진다는 결과. 이는 벤치마크 데이터에 대한 오염이라기 보다는 Multiple Choice 같은 문제의 형식에 더 익숙해졌기 때문이라고 합니다. DeepSeek LLM (https://arxiv.org/abs/2401.02954) 에서 주장했던 것과 같은 맥락이겠네요.

Yi Tay가 팟캐스트에서 (

https://latent.space/p/yitay

) Scaling Law를 속일 수는 없다고 했던 것이 생각나네요.

#scaling-law #benchmark

Reuse, Don't Retrain: A Recipe for Continued Pretraining of Language Models

(Jupinder Parmar, Sanjev Satheesh, Mostofa Patwary, Mohammad Shoeybi, Bryan Catanzaro)

As language models have scaled both their number of parameters and pretraining dataset sizes, the computational cost for pretraining has become intractable except for the most well-resourced teams. This increasing cost makes it ever more important to be able to reuse a model after it has completed pretraining; allowing for a model's abilities to further improve without needing to train from scratch. In this work, we detail a set of guidelines that cover how to design efficacious data distributions and learning rate schedules for continued pretraining of language models. When applying these findings within a continued pretraining run on top of a well-trained 15B parameter model, we show an improvement of 9% in average model accuracy compared to the baseline of continued training on the pretraining set. The resulting recipe provides a practical starting point with which to begin developing language models through reuse rather than retraining.

NVIDIA의 Continual Pretraining 실험인데 다른 언어에 학습시킨다거나 하는 세팅이 아니라 QA 데이터셋에 학습시키는 과제네요. 학습 초반에는 분포가 같은 프리트레이닝 데이터를 쓰다가 후반에 QA 데이터를 사용한다, 구간을 나눠 후반에는 높은 퀄리티의 데이터만 사용한다 등등의 방법들인데 약간 Continual Pretraining보다도 학습 단계를 나누는 접근처럼 느껴지기도 하네요.

#continual-learning #pretraining

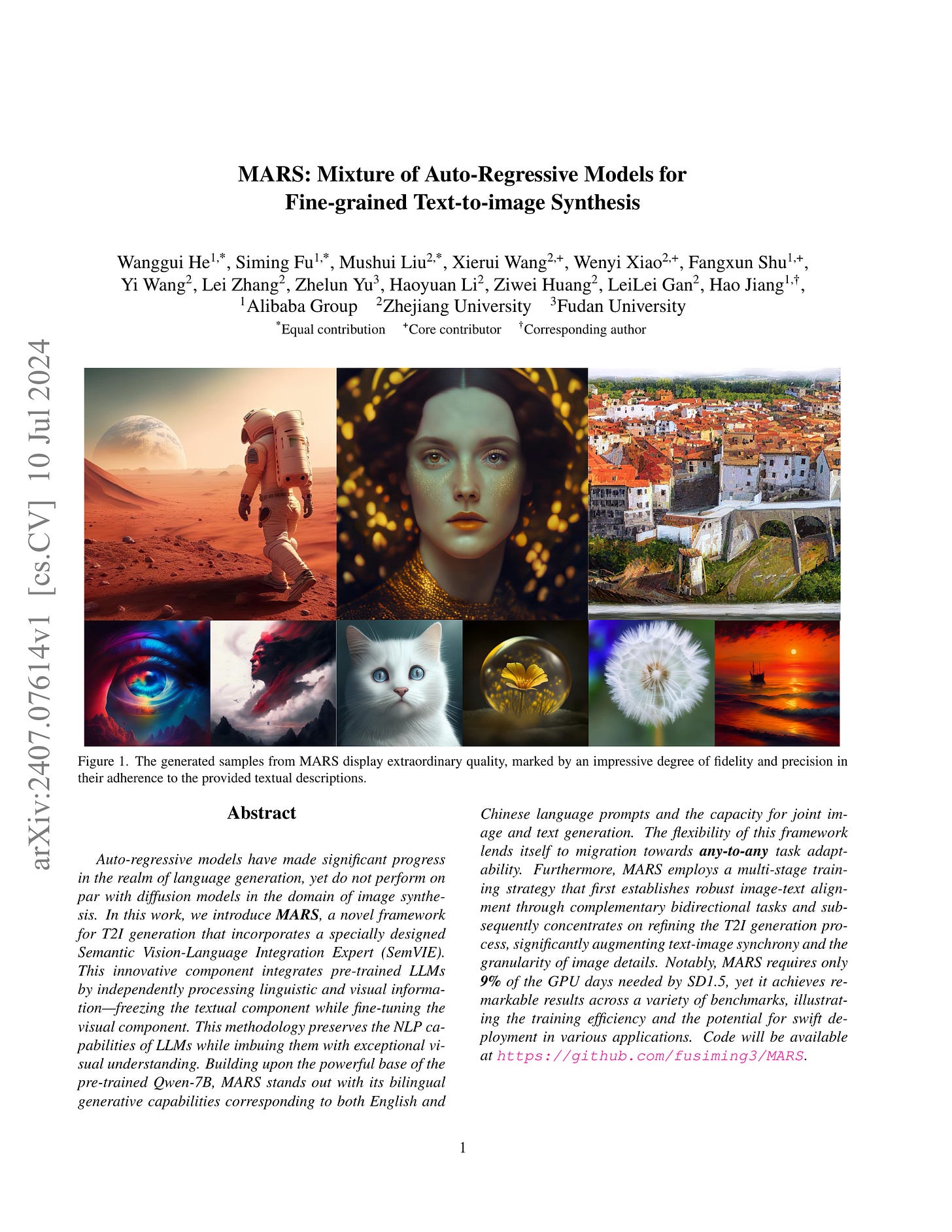

MARS: Mixture of Auto-Regressive Models for Fine-grained Text-to-image Synthesis

(Wanggui He, Siming Fu, Mushui Liu, Xierui Wang, Wenyi Xiao, Fangxun Shu, Yi Wang, Lei Zhang, Zhelun Yu, Haoyuan Li, Ziwei Huang, LeiLei Gan, Hao Jiang)

Auto-regressive models have made significant progress in the realm of language generation, yet they do not perform on par with diffusion models in the domain of image synthesis. In this work, we introduce MARS, a novel framework for T2I generation that incorporates a specially designed Semantic Vision-Language Integration Expert (SemVIE). This innovative component integrates pre-trained LLMs by independently processing linguistic and visual information, freezing the textual component while fine-tuning the visual component. This methodology preserves the NLP capabilities of LLMs while imbuing them with exceptional visual understanding. Building upon the powerful base of the pre-trained Qwen-7B, MARS stands out with its bilingual generative capabilities corresponding to both English and Chinese language prompts and the capacity for joint image and text generation. The flexibility of this framework lends itself to migration towards any-to-any task adaptability. Furthermore, MARS employs a multi-stage training strategy that first establishes robust image-text alignment through complementary bidirectional tasks and subsequently concentrates on refining the T2I generation process, significantly augmenting text-image synchrony and the granularity of image details. Notably, MARS requires only 9% of the GPU days needed by SD1.5, yet it achieves remarkable results across a variety of benchmarks, illustrating the training efficiency and the potential for swift deployment in various applications.

Autoregressive Text to Image 모델. 새로 추가되는 이미지 토큰에 대해 Attention, FFN Expert를 추가하는 접근. Rewriting된 캡션을 사용했고, 좀 더 특이한 것은 고해상도 생성에 대응하기 위해 Super Resolution 모델을 N개 토큰 예측으로 학습시켰다는 것이네요.

#autoregressive-model #text2img #vq

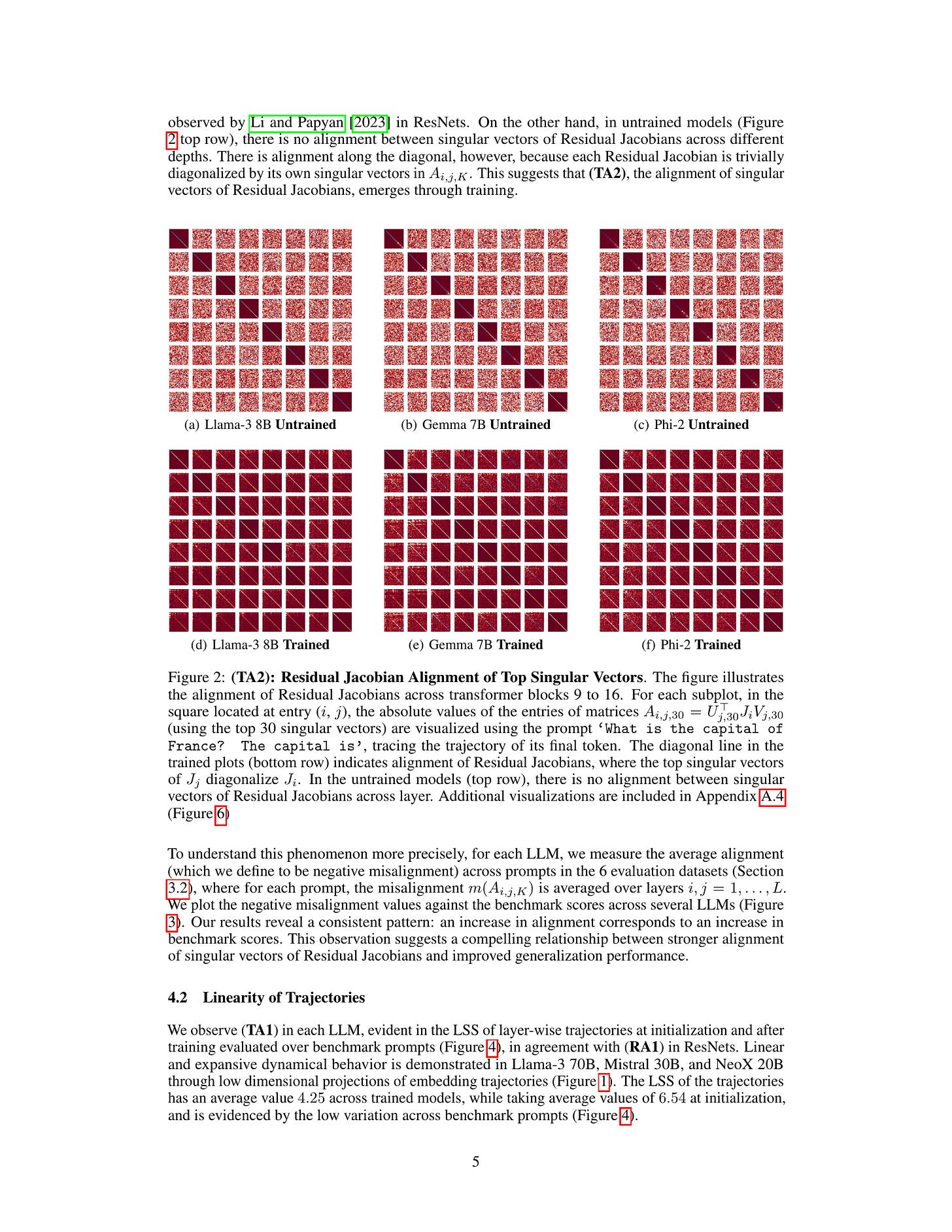

Transformer Alignment in Large Language Models

(Murdock Aubry, Haoming Meng, Anton Sugolov, Vardan Papyan)

Large Language Models (LLMs) have made significant strides in natural language processing, and a precise understanding of the internal mechanisms driving their success is essential. We regard LLMs as transforming embeddings via a discrete, coupled, nonlinear, dynamical system in high dimensions. This perspective motivates tracing the trajectories of individual tokens as they pass through transformer blocks, and linearizing the system along these trajectories through their Jacobian matrices. In our analysis of 38 openly available LLMs, we uncover the alignment of top left and right singular vectors of Residual Jacobians, as well as the emergence of linearity and layer-wise exponential growth. Notably, we discover that increased alignment positively correlates with model performance. Metrics evaluated post-training show significant improvement in comparison to measurements made with randomly initialized weights, highlighting the significant effects of training in transformers. These findings reveal a remarkable level of regularity that has previously been overlooked, reinforcing the dynamical interpretation and paving the way for deeper understanding and optimization of LLM architectures.

트랜스포머 블록의 야코비안의 Singular Vector들이 서로 다른 레이어의 Singular Vector들과 정렬되어 있다는 결과. 더 나아가서 더 잘 학습된 모델일수록 더 잘 정렬되어 있다는 추정. (이 효과는 좀 약한 것 같긴 합니다만.) 뭔가 초기화를 잘 하면 이런 정렬을 임의로 유도할 수도 있지 않을까요.

#transformer

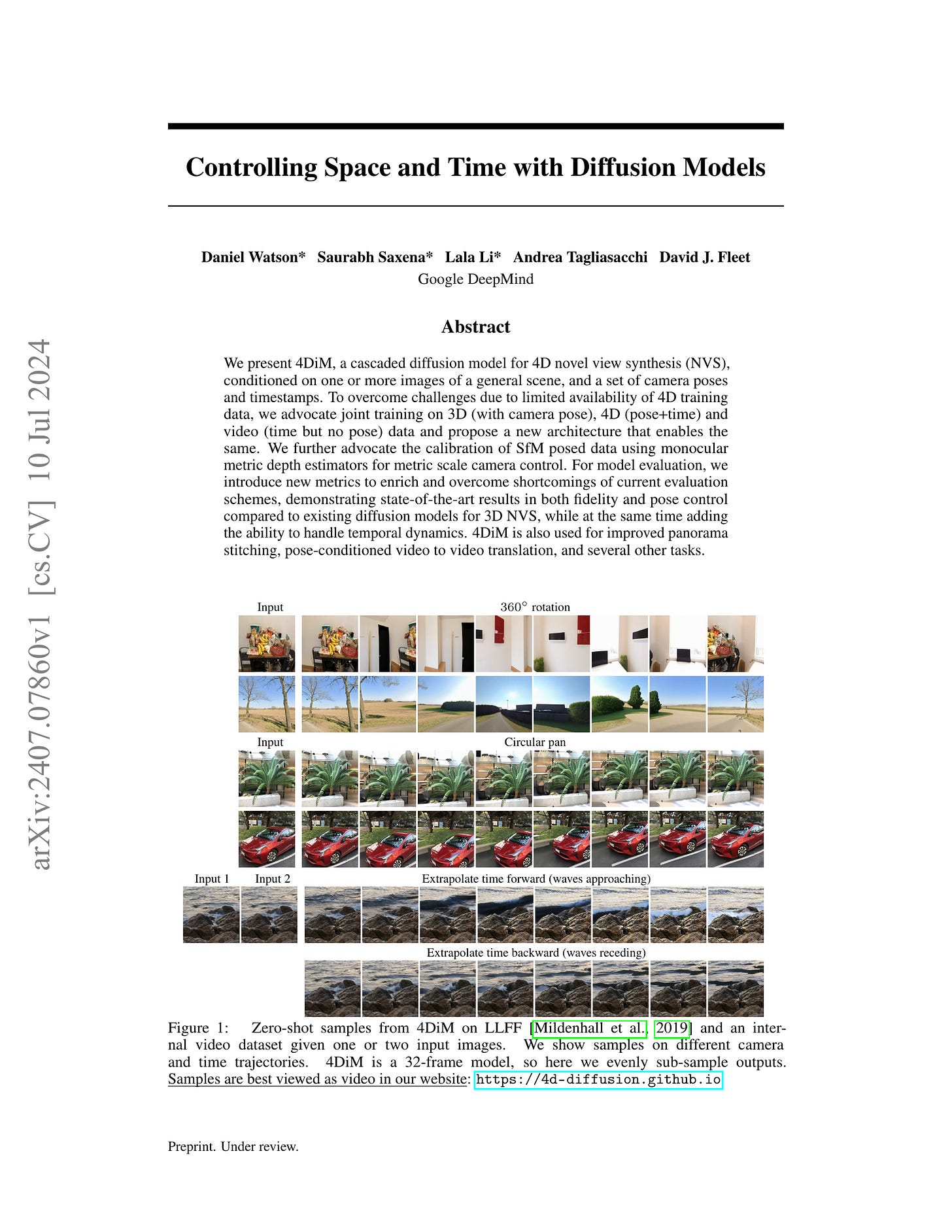

Controlling Space and Time with Diffusion Models

(Daniel Watson, Saurabh Saxena, Lala Li, Andrea Tagliasacchi, David J. Fleet)

We present 4DiM, a cascaded diffusion model for 4D novel view synthesis (NVS), conditioned on one or more images of a general scene, and a set of camera poses and timestamps. To overcome challenges due to limited availability of 4D training data, we advocate joint training on 3D (with camera pose), 4D (pose+time) and video (time but no pose) data and propose a new architecture that enables the same. We further advocate the calibration of SfM posed data using monocular metric depth estimators for metric scale camera control. For model evaluation, we introduce new metrics to enrich and overcome shortcomings of current evaluation schemes, demonstrating state-of-the-art results in both fidelity and pose control compared to existing diffusion models for 3D NVS, while at the same time adding the ability to handle temporal dynamics. 4DiM is also used for improved panorama stitching, pose-conditioned video to video translation, and several other tasks. For an overview see

https://4d-diffusion.github.io

https://4d-diffusion.github.io/

Few-shot Novel View Synthesis + Time. 사실 이제 이런 모델들에서는 Neural Rendering스러운 점은 거의 없네요. 카메라 포즈와 시간 정보를 조건으로 주고 카메라 포즈 없는 비디오 데이터도 같이 학습했군요. Sora에서 나타난 강력한 3D Consistency를 생각하면 이 문제에 대해 비디오가 할 수 있는 역할이 많은 것 같습니다.

#neural-rendering #diffusion