July 10, 2024

SoftDedup: an Efficient Data Reweighting Method for Speeding Up Language Model Pre-training

(Nan He, Weichen Xiong, Hanwen Liu, Yi Liao, Lei Ding, Kai Zhang, Guohua Tang, Xiao Han, Wei Yang)

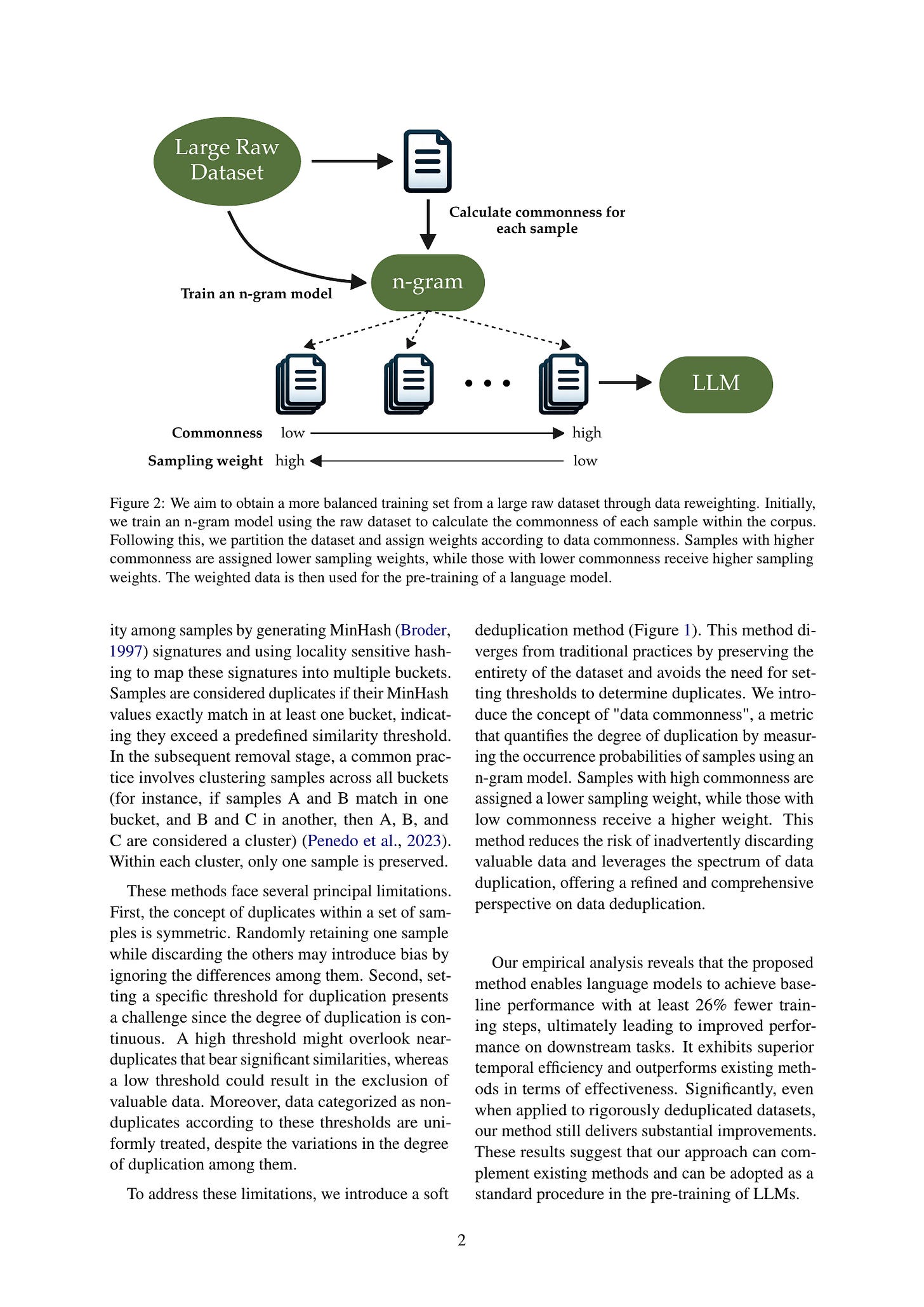

The effectiveness of large language models (LLMs) is often hindered by duplicated data in their extensive pre-training datasets. Current approaches primarily focus on detecting and removing duplicates, which risks the loss of valuable information and neglects the varying degrees of duplication. To address this, we propose a soft deduplication method that maintains dataset integrity while selectively reducing the sampling weight of data with high commonness. Central to our approach is the concept of "data commonness", a metric we introduce to quantify the degree of duplication by measuring the occurrence probabilities of samples using an n-gram model. Empirical analysis shows that this method significantly improves training efficiency, achieving comparable perplexity scores with at least a 26% reduction in required training steps. Additionally, it enhances average few-shot downstream accuracy by 1.77% when trained for an equivalent duration. Importantly, this approach consistently improves performance, even on rigorously deduplicated datasets, indicating its potential to complement existing methods and become a standard pre-training process for LLMs.

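Soft deduplication: instead of the usual hard deduplication, it estimates the probability of each text with an n-gram model and sets the sampling ratio by the inverse of that probability. The idea is presumably that the higher the probability, the more ordinary and frequently occurring the text is. I wonder whether this could also be approached from the angle of semantic deduplication. (https://arxiv.org/abs/2405.15613)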
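
To make the reweighting idea concrete, here is a minimal sketch under my own assumptions, not the paper's implementation. It uses a whitespace-tokenized bigram model with add-one smoothing, takes the geometric-mean token probability as "commonness", and weights each sample by the plain inverse of that value. The function names `train_bigram`, `commonness`, and `soft_dedup_weights` are hypothetical, and the paper's actual n-gram order and weighting scheme may differ.

```python
import math
from collections import Counter


def train_bigram(corpus):
    """Count unigrams and bigrams over whitespace-tokenized documents."""
    unigrams, bigrams = Counter(), Counter()
    for doc in corpus:
        tokens = ["<s>"] + doc.split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def commonness(doc, unigrams, bigrams):
    """Geometric-mean bigram probability of a document (add-one smoothing)."""
    tokens = ["<s>"] + doc.split()
    vocab_size = len(unigrams)
    log_p = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        log_p += math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab_size))
    # Normalize by length so long documents are not penalized for being long.
    return math.exp(log_p / max(len(tokens) - 1, 1))


def soft_dedup_weights(corpus):
    """Sampling weights proportional to 1 / commonness (assumed weighting rule)."""
    unigrams, bigrams = train_bigram(corpus)
    inverse = [1.0 / commonness(doc, unigrams, bigrams) for doc in corpus]
    total = sum(inverse)
    return [w / total for w in inverse]


corpus = [
    "the cat sat on the mat",
    "the cat sat on the mat",
    "the cat sat on the mat",
    "quantum annealing explores rugged energy landscapes",
]
weights = soft_dedup_weights(corpus)
```

Nothing is dropped from the corpus here: the three repeated sentences simply each receive a smaller sampling weight than the one rare sentence, which is the contrast with hard deduplication.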

#corpus #pretraining

Data, Data Everywhere: A Guide for Pretraining Dataset Construction

(Jupinder Parmar, Shrimai Prabhumoye, Joseph Jennings, Bo Liu, Aastha Jhunjhunwala, Zhilin Wang, Mostofa Patwary, Mohammad Shoeybi, Bryan Catanzaro)

The impressive capabilities of recent language models can be largely attributed to the multi-trillion token pretraining datasets that they are trained on. However, model developers fail to disclose their construction methodology which has led to a lack of open information on how to develop effective pretraining sets. To address this issue, we perform the first systematic study across the entire pipeline of pretraining set construction. First, we run ablations on existing techniques for pretraining set development to identify which methods translate to the largest gains in model accuracy on downstream evaluations. Then, we categorize the most widely used data source, web crawl snapshots, across the attributes of toxicity, quality, type of speech, and domain. Finally, we show how such attribute information can be used to further refine and improve the quality of a pretraining set. These findings constitute an actionable set of steps that practitioners can use to develop high quality pretraining sets.

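Curation experiments on pretraining corpora. They try sampling methods such as DSIR (https://arxiv.org/abs/2302.03169), sampling-ratio schemes such as UniMax (https://arxiv.org/abs/2304.09151), and per-domain sampling after first classifying documents by domain. Interesting.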
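
As a rough illustration of what a UniMax-style sampling ratio looks like, here is a sketch based on my reading of the UniMax idea: split a total budget as uniformly as possible across sources, but cap each source at a fixed number of passes over its data. The function name `unimax_allocation`, the toy source sizes, the budget, and the epoch cap are all assumptions for illustration and are not taken from either paper.

```python
def unimax_allocation(char_counts, budget, max_epochs):
    """Spread `budget` evenly across sources, capping each at `max_epochs` passes.

    Returns sampling probabilities per source. Sources are visited from
    smallest to largest so that budget a small source cannot absorb is
    redistributed to the larger ones.
    """
    allocation = {}
    remaining = budget
    ordered = sorted(char_counts, key=char_counts.get)
    for i, name in enumerate(ordered):
        fair_share = remaining / (len(ordered) - i)  # uniform split of what is left
        cap = char_counts[name] * max_epochs         # at most max_epochs repeats
        allocation[name] = min(fair_share, cap)
        remaining -= allocation[name]
    total = sum(allocation.values())
    return {name: amount / total for name, amount in allocation.items()}


# Hypothetical source sizes (in characters) and budget.
counts = {"en": 1000, "ko": 100, "sw": 10}
probs = unimax_allocation(counts, budget=600, max_epochs=4)
```

In this toy setup the smallest source hits its epoch cap, and the budget it cannot use is shared equally by the two larger sources, so they end up with identical sampling probabilities.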

#corpus #pretraining

A Single Transformer for Scalable Vision-Language Modeling

(Yangyi Chen, Xingyao Wang, Hao Peng, Heng Ji)

We present SOLO, a single transformer for Scalable visiOn-Language mOdeling. Current large vision-language models (LVLMs) such as LLaVA mostly employ heterogeneous architectures that connect pre-trained visual encoders with large language models (LLMs) to facilitate visual recognition and complex reasoning. Although achieving remarkable performance with relatively lightweight training, we identify four primary scalability limitations: (1) The visual capacity is constrained by pre-trained visual encoders, which are typically an order of magnitude smaller than LLMs. (2) The heterogeneous architecture complicates the use of established hardware and software infrastructure. (3) Study of scaling laws on such architecture must consider three separate components - visual encoder, connector, and LLMs, which complicates the analysis. (4) The use of existing visual encoders typically requires following a pre-defined specification of image inputs pre-processing, for example, by reshaping inputs to fixed-resolution square images, which presents difficulties in processing and training on high-resolution images or those with unusual aspect ratio. A unified single Transformer architecture, like SOLO, effectively addresses these scalability concerns in LVLMs; however, its limited adoption in the modern context likely stems from the absence of reliable training recipes that balance both modalities and ensure stable training for billion-scale models. In this paper, we introduce the first open-source training recipe for developing SOLO, an open-source 7B LVLM using moderate academic resources. The training recipe involves initializing from LLMs, sequential pre-training on ImageNet and web-scale data, and instruction fine-tuning on our curated high-quality datasets. On extensive evaluation, SOLO demonstrates performance comparable to LLaVA-v1.5-7B, particularly excelling in visual mathematical reasoning.

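A VLM that takes image patches directly as input. The most important point is probably that it only worked when training started with a classification task on ImageNet21K. I think combining existing CV tasks in this way could be a good direction.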
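
For readers unfamiliar with feeding raw patches to a transformer, here is a minimal pure-Python sketch of the input side only: cutting an image into non-overlapping patches and flattening each one into a vector. The `patchify` helper and the patch size of 2 are hypothetical; in a real model each vector would then go through a learned linear projection, and SOLO's actual preprocessing may differ.

```python
def patchify(image, patch):
    """Split an H x W x C nested-list image into flattened patch vectors.

    Patches are returned in row-major order (left to right, top to bottom).
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("height and width must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            vector = [
                channel
                for row in image[top:top + patch]
                for pixel in row[left:left + patch]
                for channel in pixel
            ]
            patches.append(vector)
    return patches


# A 4 x 6 three-channel image (non-square on purpose); pixel value encodes (row, col, 0).
image = [[[r, c, 0] for c in range(6)] for r in range(4)]
tokens = patchify(image, patch=2)
```

Because the number of patches simply follows from the image size, a non-square image yields a different sequence length rather than being reshaped to a fixed square, which is the flexibility the abstract points to.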

#vision-language #multimodal