2024년 6월 14일

HelpSteer2: Open-source dataset for training top-performing reward models

(Zhilin Wang, Yi Dong, Olivier Delalleau, Jiaqi Zeng, Gerald Shen, Daniel Egert, Jimmy J. Zhang, Makesh Narsimhan Sreedhar, Oleksii Kuchaiev)

High-quality preference datasets are essential for training reward models that can effectively guide large language models (LLMs) in generating high-quality responses aligned with human preferences. As LLMs become stronger and better aligned, permissively licensed preference datasets, such as Open Assistant, HH-RLHF, and HelpSteer need to be updated to remain effective for reward modeling. Methods that distil preference data from proprietary LLMs such as GPT-4 have restrictions on commercial usage imposed by model providers. To improve upon both generated responses and attribute labeling quality, we release HelpSteer2, a permissively licensed preference dataset (CC-BY-4.0). Using a powerful internal base model trained on HelpSteer2, we are able to achieve the SOTA score (92.0%) on Reward-Bench's primary dataset, outperforming currently listed open and proprietary models, as of June 12th, 2024. Notably, HelpSteer2 consists of only ten thousand response pairs, an order of magnitude fewer than existing preference datasets (e.g., HH-RLHF), which makes it highly efficient for training reward models. Our extensive experiments demonstrate that reward models trained with HelpSteer2 are effective in aligning LLMs. In particular, we propose SteerLM 2.0, a model alignment approach that can effectively make use of the rich multi-attribute score predicted by our reward models. HelpSteer2 is available at https://huggingface.co/datasets/nvidia/HelpSteer2 and code is available at https://github.com/NVIDIA/NeMo-Aligner

NVIDIA도 꾸준히 LLM을 만들고 튜닝하고 있군요. 15B 레시피로 (https://arxiv.org/abs/2402.16819) 340B 모델도 만들었고 연구하던 방식을 따라 (https://arxiv.org/abs/2311.09528) 데이터셋 구축도 했습니다.

최근 어노테이션을 직접 하고 있는 쪽에서는 샘플 하나를 한 명이 어노테이션하는 것을 넘어 여러 명이 참여하는 방식으로 바꿨으리라고 생각하고 있었는데 여기에서도 최소 세 명이 어노테이션을 했다고 하는군요.

#alignment

An Image is Worth More Than 16x16 Patches: Exploring Transformers on Individual Pixels

(Duy-Kien Nguyen, Mahmoud Assran, Unnat Jain, Martin R. Oswald, Cees G. M. Snoek, Xinlei Chen)

This work does not introduce a new method. Instead, we present an interesting finding that questions the necessity of the inductive bias -- locality in modern computer vision architectures. Concretely, we find that vanilla Transformers can operate by directly treating each individual pixel as a token and achieve highly performant results. This is substantially different from the popular design in Vision Transformer, which maintains the inductive bias from ConvNets towards local neighborhoods (e.g. by treating each 16x16 patch as a token). We mainly showcase the effectiveness of pixels-as-tokens across three well-studied tasks in computer vision: supervised learning for object classification, self-supervised learning via masked autoencoding, and image generation with diffusion models. Although directly operating on individual pixels is less computationally practical, we believe the community must be aware of this surprising piece of knowledge when devising the next generation of neural architectures for computer vision.

픽셀 기반 ViT. 이전이라면 224 * 224 = 50176 토큰이 너무 많다고 생각했겠지만 지금은 충분히 가능한 수준이긴 하죠.

#vit

OmniTokenizer: A Joint Image-Video Tokenizer for Visual Generation

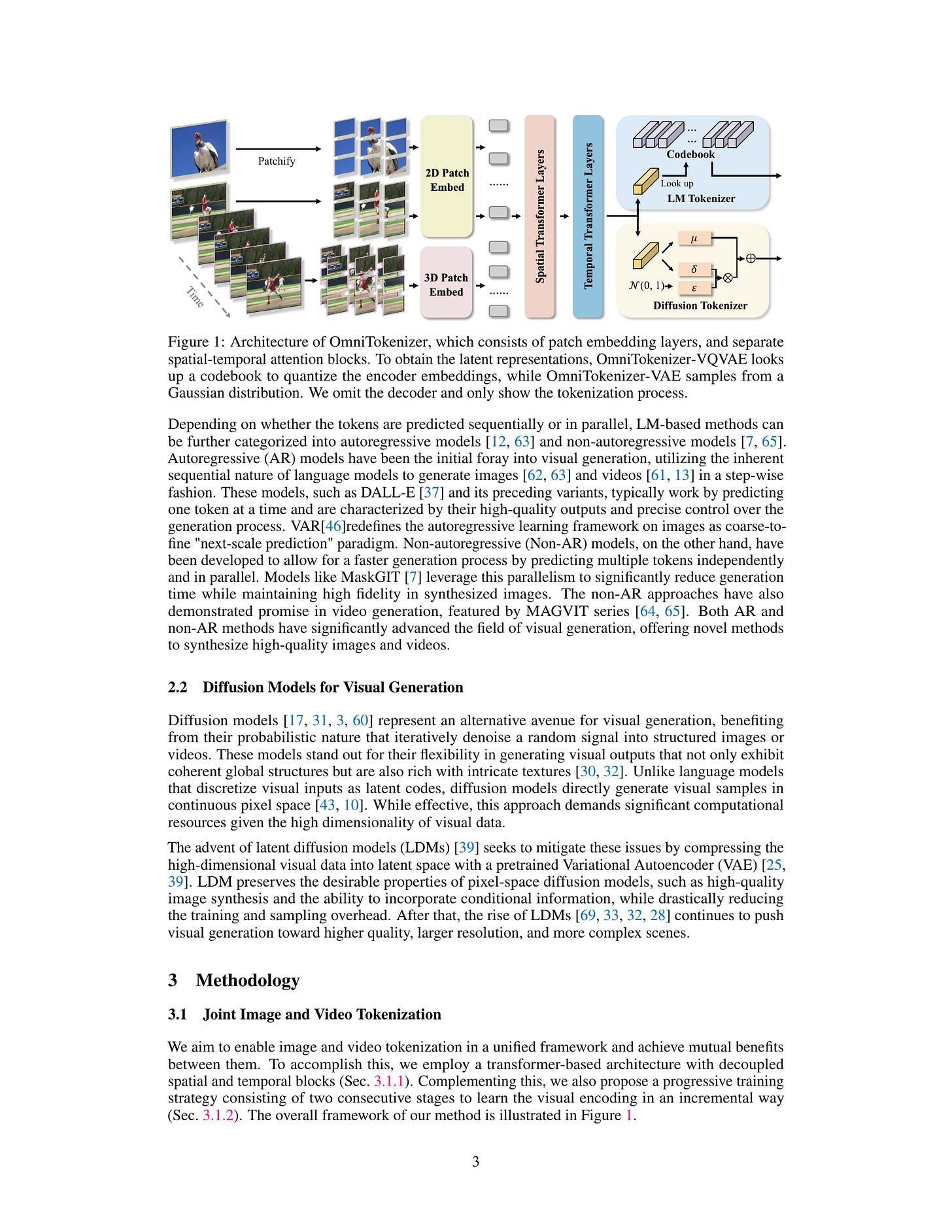

(Junke Wang, Yi Jiang, Zehuan Yuan, Binyue Peng, Zuxuan Wu, Yu-Gang Jiang)

Tokenizer, serving as a translator to map the intricate visual data into a compact latent space, lies at the core of visual generative models. Based on the finding that existing tokenizers are tailored to image or video inputs, this paper presents OmniTokenizer, a transformer-based tokenizer for joint image and video tokenization. OmniTokenizer is designed with a spatial-temporal decoupled architecture, which integrates window and causal attention for spatial and temporal modeling. To exploit the complementary nature of image and video data, we further propose a progressive training strategy, where OmniTokenizer is first trained on image data on a fixed resolution to develop the spatial encoding capacity and then jointly trained on image and video data on multiple resolutions to learn the temporal dynamics. OmniTokenizer, for the first time, handles both image and video inputs within a unified framework and proves the possibility of realizing their synergy. Extensive experiments demonstrate that OmniTokenizer achieves state-of-the-art (SOTA) reconstruction performance on various image and video datasets, e.g., 1.11 reconstruction FID on ImageNet and 42 reconstruction FVD on UCF-101, beating the previous SOTA methods by 13% and 26%, respectively. Additionally, we also show that when integrated with OmniTokenizer, both language model-based approaches and diffusion models can realize advanced visual synthesis performance, underscoring the superiority and versatility of our method. Code is available at https://github.com/FoundationVision/OmniTokenizer.

이미지/비디오 토크나이저에 대한 관심이 다시 높아지고 있는 것 같네요. Spatio-Temporal 트랜스포머 인코더/디코더를 사용하고 이미지로 1단계, 비디오로 2단계 학습해서 이미지/비디오에 대한 토크나이저를 학습. 추가로 VQ 대신 VAE로 파인튜닝을 해보기도 했습니다.

그나저나 이제 Omni라는 단어가 인기를 얻겠군요.

#vq

Unpacking DPO and PPO: Disentangling Best Practices for Learning from Preference Feedback

(Hamish Ivison, Yizhong Wang, Jiacheng Liu, Zeqiu Wu, Valentina Pyatkin, Nathan Lambert, Noah A. Smith, Yejin Choi, Hannaneh Hajishirzi)

Learning from preference feedback has emerged as an essential step for improving the generation quality and performance of modern language models (LMs). Despite its widespread use, the way preference-based learning is applied varies wildly, with differing data, learning algorithms, and evaluations used, making disentangling the impact of each aspect difficult. In this work, we identify four core aspects of preference-based learning: preference data, learning algorithm, reward model, and policy training prompts, systematically investigate the impact of these components on downstream model performance, and suggest a recipe for strong learning for preference feedback. Our findings indicate that all aspects are important for performance, with better preference data leading to the largest improvements, followed by the choice of learning algorithm, the use of improved reward models, and finally the use of additional unlabeled prompts for policy training. Notably, PPO outperforms DPO by up to 2.5% in math and 1.2% in general domains. High-quality preference data leads to improvements of up to 8% in instruction following and truthfulness. Despite significant gains of up to 5% in mathematical evaluation when scaling up reward models, we surprisingly observe marginal improvements in other categories. We publicly release the code used for training (https://github.com/hamishivi/EasyLM) and evaluating (https://github.com/allenai/open-instruct) our models, along with the models and datasets themselves (https://huggingface.co/collections/allenai/tulu-v25-suite-66676520fd578080e126f618).

LLM의 RL 과정의 요인들의 효과 평가. 데이터셋 퀄리티가 중요하고, PPO > DPO이고, Reward Model이 강력하면 좋겠지만 Reward Bench 스코어가 성능으로 이어지는 것은 아니고, PPO 과정에서 레이블은 없더라도 타겟 도메인에 대한 프롬프트를 사용할 수 있으면 좋다는 결과. PPO + 프롬프트 증폭은 Anthropic이 (https://arxiv.org/abs/2204.05862) 사용해왔던 트릭이기도 하죠.

데이터를 잘 만들고 방법에 집착하지 않을 것. ML 업계의 오래된 교훈이지만 그만큼 어려운 것인 것 같기도 합니다.

#alignment

ReMI: A Dataset for Reasoning with Multiple Images

(Mehran Kazemi, Nishanth Dikkala, Ankit Anand, Petar Devic, Ishita Dasgupta, Fangyu Liu, Bahare Fatemi, Pranjal Awasthi, Dee Guo, Sreenivas Gollapudi, Ahmed Qureshi)

With the continuous advancement of large language models (LLMs), it is essential to create new benchmarks to effectively evaluate their expanding capabilities and identify areas for improvement. This work focuses on multi-image reasoning, an emerging capability in state-of-the-art LLMs. We introduce ReMI, a dataset designed to assess LLMs' ability to Reason with Multiple Images. This dataset encompasses a diverse range of tasks, spanning various reasoning domains such as math, physics, logic, code, table/chart understanding, and spatial and temporal reasoning. It also covers a broad spectrum of characteristics found in multi-image reasoning scenarios. We have benchmarked several cutting-edge LLMs using ReMI and found a substantial gap between their performance and human-level proficiency. This highlights the challenges in multi-image reasoning and the need for further research. Our analysis also reveals the strengths and weaknesses of different models, shedding light on the types of reasoning that are currently attainable and areas where future models require improvement. To foster further research in this area, we are releasing ReMI publicly: https://huggingface.co/datasets/mehrankazemi/ReMI.

이미지 여러 장을 사용한 추론 과제 벤치마크. 시계 읽기는 비전에서 본래도 악명 높은 과제인데 여기서도 아주 끔찍한 결과가 나왔군요. (Claude 3 Sonnet의 5.0 vs Human 80.0)

#benchmark #vision-language