May 29, 2024

Scaling Laws and Compute-Optimal Training Beyond Fixed Training Durations

(Alexander Hägele, Elie Bakouch, Atli Kosson, Loubna Ben Allal, Leandro Von Werra, Martin Jaggi)

Scale has become a main ingredient in obtaining strong machine learning models. As a result, understanding a model's scaling properties is key to effectively designing both the right training setup as well as future generations of architectures. In this work, we argue that scale and training research has been needlessly complex due to reliance on the cosine schedule, which prevents training across different lengths for the same model size. We investigate the training behavior of a direct alternative - constant learning rate and cooldowns - and find that it scales predictably and reliably similar to cosine. Additionally, we show that stochastic weight averaging yields improved performance along the training trajectory, without additional training costs, across different scales. Importantly, with these findings we demonstrate that scaling experiments can be performed with significantly reduced compute and GPU hours by utilizing fewer but reusable training runs.

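This paper applies the Constant LR + Cooldown schedule, which has become popular lately, to scaling-law estimation. With a cosine schedule, you have to train a separate model for every combination of model size and training length. With a constant LR, you can instead keep training the same model and apply a cooldown whenever you want a checkpoint at a given length, so training runs can be reused.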
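A minimal sketch of why the constant-LR schedule allows reuse while cosine does not. It assumes an optional linear warmup and a linear cooldown to `min_lr`; the cooldown shape, the 20% cooldown fraction in the usage loop, and all function names are illustrative assumptions, not the paper's exact configuration.

```python
import math


def cosine_lr(step: int, total_steps: int, peak_lr: float, min_lr: float = 0.0) -> float:
    # Cosine decay: the LR at every step depends on total_steps, so a run planned
    # for one length cannot be extended without ending up on a mismatched schedule.
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def constant_cooldown_lr(step: int, cooldown_start: int, cooldown_steps: int,
                         peak_lr: float, warmup_steps: int = 0, min_lr: float = 0.0) -> float:
    # Optional linear warmup, then a constant LR that does not depend on the final length.
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    if step < cooldown_start:
        return peak_lr
    # Cooldown: linear decay from peak_lr to min_lr (the decay shape is an assumption).
    progress = min(step - cooldown_start, cooldown_steps) / cooldown_steps
    return peak_lr + (min_lr - peak_lr) * progress


# The stable phase is identical no matter where the cooldown will start, so one
# constant-LR run can be branched into cooldowns at several training lengths.
for total in (1_200, 4_800):
    start = int(total * 0.8)  # hypothetical 20% cooldown
    print(total, constant_cooldown_lr(500, start, total - start, 1e-3),
          round(cosine_lr(500, total, 1e-3), 6))
```

In practice this means saving checkpoints during the constant phase and running a short cooldown from each, instead of launching a fresh cosine run per target length.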

This is actually the approach DeepSeek LLM (https://arxiv.org/abs/2401.02954) also used, and MiniCPM probably used it for scaling-law estimation as well. (https://shengdinghu.notion.site/MiniCPM-Unveiling-the-Potential-of-End-side-Large-Language-Models-d4d3a8c426424654a4e80e42a711cb20)
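The abstract's other point, stochastic weight averaging (SWA) along the training trajectory, amounts to keeping a running average of parameter snapshots next to the model being trained. A minimal sketch, assuming a uniform average over all snapshots and parameters represented as plain lists of floats; the class name is hypothetical, and the averaging window and snapshot frequency used in the paper are not modeled here.

```python
class RunningAverage:
    # Uniform running average of parameter snapshots (SWA-style). Parameters are
    # plain lists of floats here; a real model would average each tensor the same way.
    def __init__(self):
        self.count = 0
        self.avg = None

    def update(self, params):
        self.count += 1
        if self.avg is None:
            self.avg = list(params)
        else:
            # Incremental mean: avg_n = avg_{n-1} + (x_n - avg_{n-1}) / n
            self.avg = [a + (p - a) / self.count for a, p in zip(self.avg, params)]
        return self.avg


swa = RunningAverage()
for snapshot in ([1.0, 2.0], [3.0, 4.0], [5.0, 6.0]):
    swa.update(snapshot)
print(swa.avg)  # [3.0, 4.0]
```

Because the average is only read for evaluation and never fed back into the optimizer, it adds no extra training steps; PyTorch users would typically reach for `torch.optim.swa_utils.AveragedModel` rather than a hand-rolled class.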

#scaling-law

Long Context is Not Long at All: A Prospector of Long-Dependency Data for Large Language Models

(Longze Chen, Ziqiang Liu, Wanwei He, Yunshui Li, Run Luo, Min Yang)

Long-context modeling capabilities are important for large language models (LLMs) in various applications. However, directly training LLMs with long context windows is insufficient to enhance this capability since some training samples do not exhibit strong semantic dependencies across long contexts. In this study, we propose a data mining framework **ProLong** that can assign each training sample with a long dependency score, which can be used to rank and filter samples that are more advantageous for enhancing long-context modeling abilities in LLM training. Specifically, we first use delta perplexity scores to measure the *Dependency Strength* between text segments in a given document. Then we refine this metric based on the *Dependency Distance* of these segments to incorporate spatial relationships across long-contexts. Final results are calibrated with a *Dependency Specificity* metric to prevent trivial dependencies introduced by repetitive patterns. Moreover, a random sampling approach is proposed to optimize the computational efficiency of ProLong. Comprehensive experiments on multiple benchmarks indicate that ProLong effectively identifies documents that carry long dependencies and LLMs trained on these documents exhibit significantly enhanced long-context modeling capabilities.

The idea: data in which segments of the text depend strongly on each other is likely higher-quality text that is more useful for long-context tuning.
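A toy sketch of the first two ingredients from the abstract: a delta-perplexity dependency strength, weighted by the distance between segments. It assumes perplexities have already been computed by some language model (the input dicts are hypothetical), and both the relative-drop normalization and the linear distance weight are assumptions rather than ProLong's exact formulas. Dependency Specificity and the random-sampling speedup are not modeled.

```python
def dependency_strength(ppl_alone: float, ppl_with_context: float) -> float:
    # Relative drop in perplexity of a later segment when an earlier segment is
    # prepended as context (this normalization is an assumption).
    return (ppl_alone - ppl_with_context) / ppl_alone


def long_dependency_score(ppl_alone, ppl_pair, num_segments):
    # ppl_alone[j]: perplexity of segment j on its own (hypothetical precomputed input)
    # ppl_pair[(i, j)]: perplexity of segment j given earlier segment i as context
    score = 0.0
    for (i, j), ppl_ij in ppl_pair.items():
        strength = max(dependency_strength(ppl_alone[j], ppl_ij), 0.0)
        # Weight by normalized distance so far-apart dependencies count more.
        distance = (j - i) / (num_segments - 1)
        score += strength * distance
    return score


# Same strength (perplexity 10 -> 5), but one dependency spans the whole document.
local_doc = long_dependency_score({3: 10.0}, {(2, 3): 5.0}, num_segments=4)
long_doc = long_dependency_score({3: 10.0}, {(0, 3): 5.0}, num_segments=4)
print(round(local_doc, 3), round(long_doc, 3))  # 0.167 0.5
```

Ranking documents by such a score and keeping the top ones is the filtering step the note describes; the distance weight is what separates genuinely long dependencies from ones a short context window could already capture.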

#long-context

Phased Consistency Model

(Fu-Yun Wang, Zhaoyang Huang, Alexander William Bergman, Dazhong Shen, Peng Gao, Michael Lingelbach, Keqiang Sun, Weikang Bian, Guanglu Song, Yu Liu, Hongsheng Li, Xiaogang Wang)

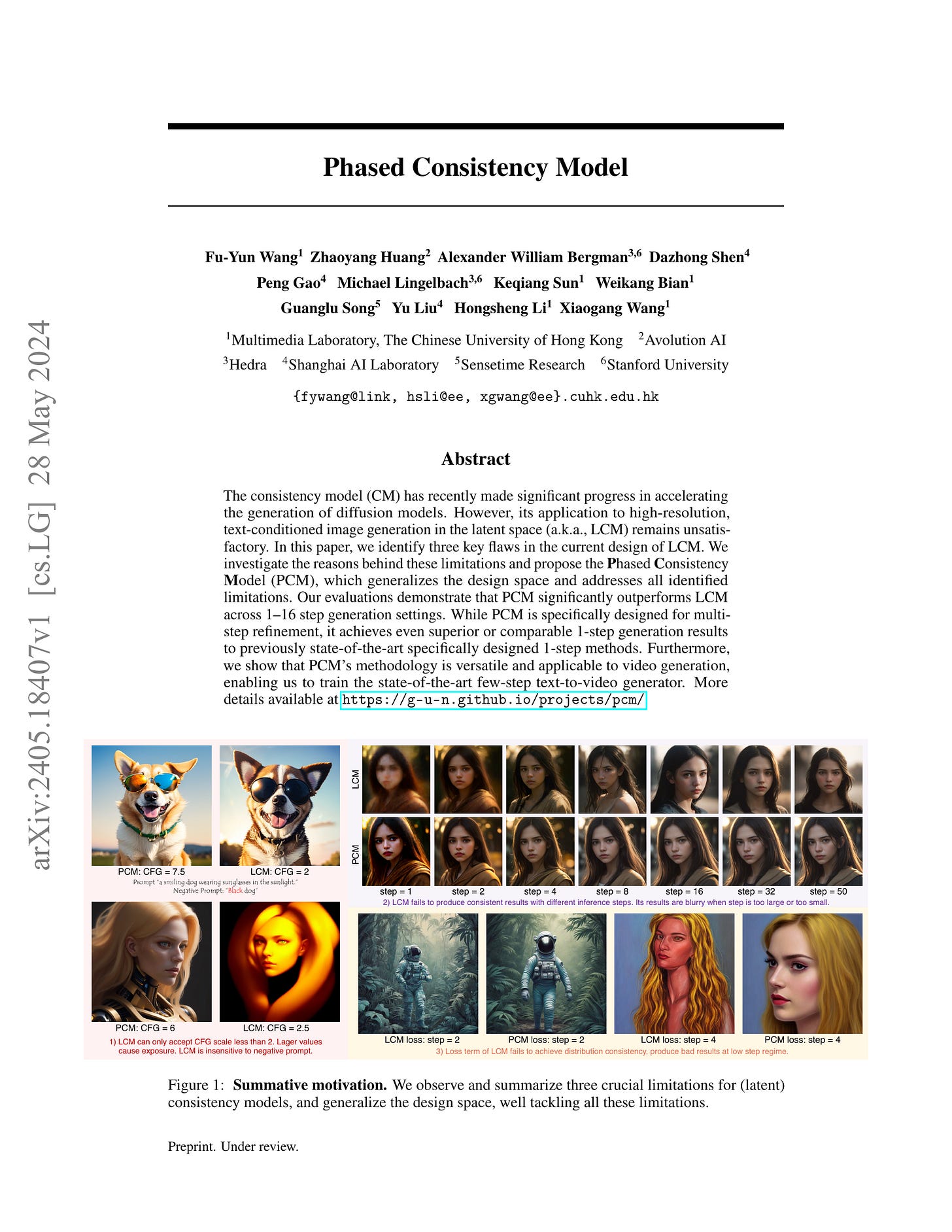

The consistency model (CM) has recently made significant progress in accelerating the generation of diffusion models. However, its application to high-resolution, text-conditioned image generation in the latent space (a.k.a., LCM) remains unsatisfactory. In this paper, we identify three key flaws in the current design of LCM. We investigate the reasons behind these limitations and propose the Phased Consistency Model (PCM), which generalizes the design space and addresses all identified limitations. Our evaluations demonstrate that PCM significantly outperforms LCM across 1–16 step generation settings. While PCM is specifically designed for multi-step refinement, it achieves even superior or comparable 1-step generation results to previously state-of-the-art specifically designed 1-step methods. Furthermore, we show that PCM's methodology is versatile and applicable to video generation, enabling us to train the state-of-the-art few-step text-to-video generator. More details are available at https://g-u-n.github.io/projects/pcm/.

An improvement on consistency distillation. In splitting the trajectory into segments, it seems to have something in common with Multistep Consistency Models (https://arxiv.org/abs/2403.06807). Additional points are that it handles text conditioning and that it uses an adversarial loss.
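The trajectory splitting can be sketched as follows, assuming a discrete timestep grid divided into uniformly spaced phases. Instead of mapping every noisy point all the way to t = 0 like a standard consistency model, each phase maps points to the lower edge of that phase, so sampling with M phases takes M deterministic steps. The uniform edge placement and function names are assumptions; PCM's solver, distillation targets, and adversarial loss are not modeled.

```python
def phase_edges(num_timesteps: int, num_phases: int) -> list[int]:
    # Evenly spaced phase boundaries from 0 to num_timesteps (uniform split is an assumption).
    return [round(k * num_timesteps / num_phases) for k in range(num_phases + 1)]


def phase_target(t: int, edges: list[int]) -> int:
    # The phased consistency function maps x_t to the lower edge of the phase containing t.
    for lo, hi in zip(edges, edges[1:]):
        if lo < t <= hi:
            return lo
    return 0  # t == 0 is already clean data


def sampling_schedule(edges: list[int]) -> list[int]:
    # Deterministic multi-step sampling visits each edge once, from pure noise down to 0.
    t = edges[-1]
    visited = [t]
    while t > 0:
        t = phase_target(t, edges)
        visited.append(t)
    return visited


edges = phase_edges(1000, 4)
print(edges)                     # [0, 250, 500, 750, 1000]
print(sampling_schedule(edges))  # [1000, 750, 500, 250, 0]
```

With a single phase this reduces to the ordinary consistency-model target (every t maps to 0), which is one way to see how the phased design generalizes it.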

#diffusion