2024년 5월 17일

Chameleon: Mixed-Modal Early-Fusion Foundation Models

(Chameleon Team)

We present Chameleon, a family of early-fusion token-based mixed-modal models capable of understanding and generating images and text in any arbitrary sequence. We outline a stable training approach from inception, an alignment recipe, and an architectural parameterization tailored for the early-fusion, token-based, mixed-modal setting. The models are evaluated on a comprehensive range of tasks, including visual question answering, image captioning, text generation, image generation, and long-form mixed modal generation. Chameleon demonstrates broad and general capabilities, including state-of-the-art performance in image captioning tasks, outperforms Llama-2 in text-only tasks while being competitive with models such as Mixtral 8x7B and Gemini-Pro, and performs non-trivial image generation, all in a single model. It also matches or exceeds the performance of much larger models, including Gemini Pro and GPT-4V, according to human judgments on a new long-form mixed-modal generation evaluation, where either the prompt or outputs contain mixed sequences of both images and text. Chameleon marks a significant step forward in a unified modeling of full multimodal documents.

드디어 나왔군요. 메타의 Early Fusion Vision Language 모델. VQ-VAE를 사용해 토크나이즈했고 9.2T 학습했네요.

학습 안정성을 위한 세팅들이 흥미롭습니다. QK Norm과 Swin Transformer V2 스타일의 Post Normalization (https://arxiv.org/abs/2111.09883) 혹은 Dropout을 사용했군요.

다만 벤치마크, 특히 이미지 생성에 대한 벤치마크가 적은 걸 보면 좀 급하게 낸 것이 아닌가 싶습니다. 모델 자체는 5개월 전에 학습이 끝났다고 합니다만. (https://x.com/ArmenAgha/status/1791275538625241320)

#vision-language #autoregressive-model

LoRA Learns Less and Forgets Less

(Dan Biderman, Jose Gonzalez Ortiz, Jacob Portes, Mansheej Paul, Philip Greengard, Connor Jennings, Daniel King, Sam Havens, Vitaliy Chiley, Jonathan Frankle, Cody Blakeney, John P. Cunningham)

Low-Rank Adaptation (LoRA) is a widely-used parameter-efficient finetuning method for large language models. LoRA saves memory by training only low rank perturbations to selected weight matrices. In this work, we compare the performance of LoRA and full finetuning on two target domains, programming and mathematics. We consider both the instruction finetuning (≈100K prompt-response pairs) and continued pretraining (≈10B unstructured tokens) data regimes. Our results show that, in most settings, LoRA substantially underperforms full finetuning. Nevertheless, LoRA exhibits a desirable form of regularization: it better maintains the base model's performance on tasks outside the target domain. We show that LoRA provides stronger regularization compared to common techniques such as weight decay and dropout; it also helps maintain more diverse generations. We show that full finetuning learns perturbations with a rank that is 10-100X greater than typical LoRA configurations, possibly explaining some of the reported gaps. We conclude by proposing best practices for finetuning with LoRA.

LoRA가 학습량이 적은 만큼 모델의 기존 성능 저하도 약하다는 연구. 사실 학습 성능과 성능 저하의 트레이드오프, 즉 파레토 커브에서 어느 위치에 있는가가 중요하겠죠. 조금 허무하지만 파레토 커브에서는 대체로 풀 파인튜닝과 비슷하고 가끔 LoRA가 더 나은 위치에 있는 경우가 존재하네요.

#finetuning

CAT3D: Create Anything in 3D with Multi-View Diffusion Models

(Ruiqi Gao, Aleksander Holynski, Philipp Henzler, Arthur Brussee, Ricardo Martin-Brualla, Pratul Srinivasan, Jonathan T. Barron, Ben Poole)

Advances in 3D reconstruction have enabled high-quality 3D capture, but require a user to collect hundreds to thousands of images to create a 3D scene. We present CAT3D, a method for creating anything in 3D by simulating this real-world capture process with a multi-view diffusion model. Given any number of input images and a set of target novel viewpoints, our model generates highly consistent novel views of a scene. These generated views can be used as input to robust 3D reconstruction techniques to produce 3D representations that can be rendered from any viewpoint in real-time. CAT3D can create entire 3D scenes in as little as one minute, and outperforms existing methods for single image and few-view 3D scene creation. See our project page for results and interactive demos at

https://cat3d.github.io

.

https://cat3d.github.io/

이미지 1+장으로 3D 생성. Multi view Diffusion으로 서로 다른 View의 이미지를 여러 장 만들고 NeRF를 학습시키는 형태로 접근했네요. Multi view Diffusion이 핵심일 텐데 비디오 Diffusion처럼 여러 이미지에 대해 Latent Diffusion을 확장하고 카메라 레이의 원점과 방향 정보를 이미지에 붙여서 입력으로 주는 방식입니다.

#3d-generation #diffusion #nerf

FFF: Fixing Flawed Foundations in contrastive pre-training results in very strong Vision-Language models

(Adrian Bulat, Yassine Ouali, Georgios Tzimiropoulos)

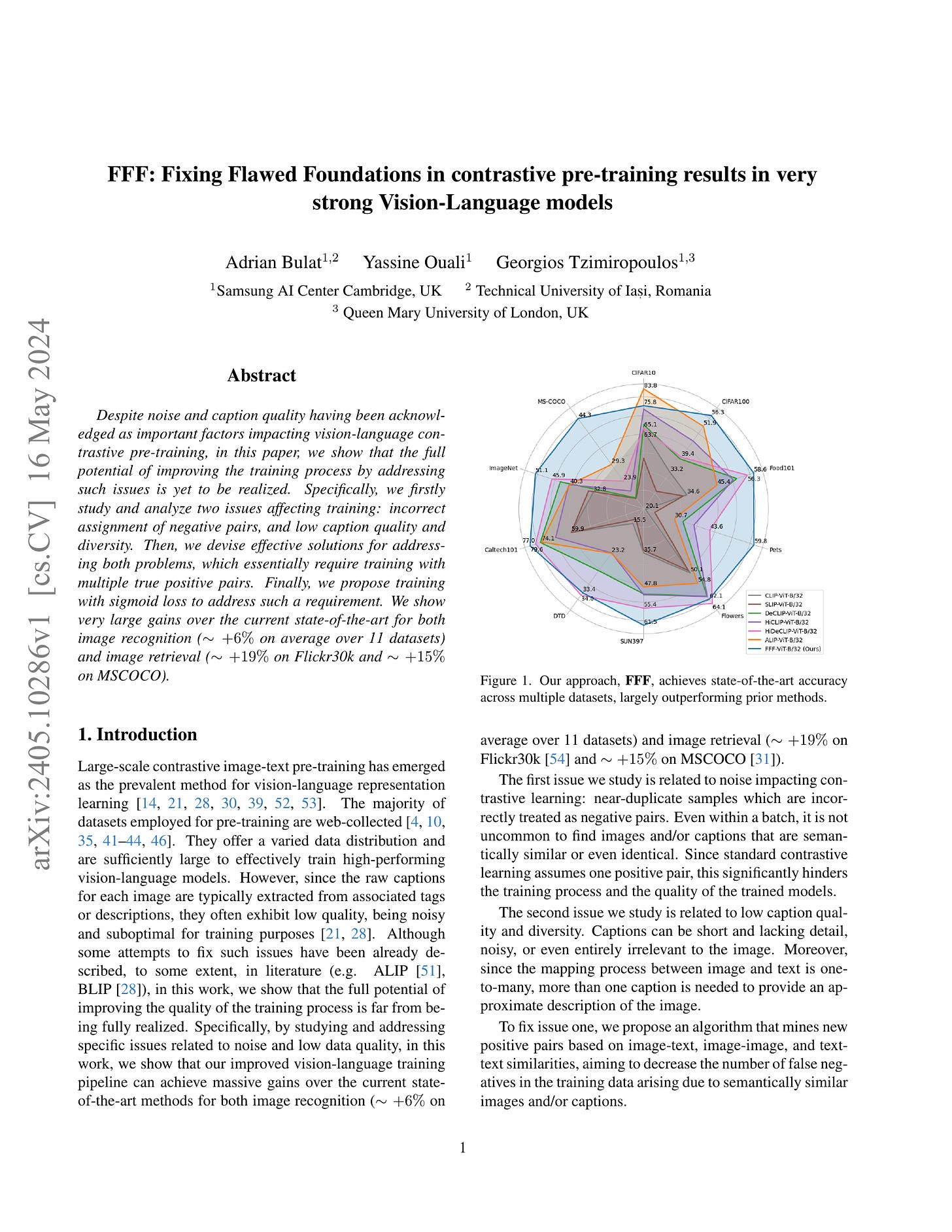

Despite noise and caption quality having been acknowledged as important factors impacting vision-language contrastive pre-training, in this paper, we show that the full potential of improving the training process by addressing such issues is yet to be realized. Specifically, we firstly study and analyze two issues affecting training: incorrect assignment of negative pairs, and low caption quality and diversity. Then, we devise effective solutions for addressing both problems, which essentially require training with multiple true positive pairs. Finally, we propose training with sigmoid loss to address such a requirement. We show very large gains over the current state-of-the-art for both image recognition (∼ +6% on average over 11 datasets) and image retrieval (∼ +19% on Flickr30k and ∼ +15% on MSCOCO).

CLIP 개선. 이미지-이미지, 이미지-텍스트, 텍스트-텍스트 유사도를 사용해 False negative에서 Positive 페어를 발굴합니다. 추가로 이미지에 대해 캡션을 여러 개 만들어서 추가 Positive 페어로 사용합니다.

원칙적으로는 Distillation이긴 하네요.

#vision-language #contrastive-learning

Spectral Editing of Activations for Large Language Model Alignment

(Yifu Qiu, Zheng Zhao, Yftah Ziser, Anna Korhonen, Edoardo M. Ponti, Shay B. Cohen)

Large language models (LLMs) often exhibit undesirable behaviours, such as generating untruthful or biased content. Editing their internal representations has been shown to be effective in mitigating such behaviours on top of the existing alignment methods. We propose a novel inference-time editing method, namely spectral editing of activations (SEA), to project the input representations into directions with maximal covariance with the positive demonstrations (e.g., truthful) while minimising covariance with the negative demonstrations (e.g., hallucinated). We also extend our method to non-linear editing using feature functions. We run extensive experiments on benchmarks concerning truthfulness and bias with six open-source LLMs of different sizes and model families. The results demonstrate the superiority of SEA in effectiveness, generalisation to similar tasks, as well as inference and data efficiency. We also show that SEA editing only has a limited negative impact on other model capabilities.

Activation Editing을 통한 정렬. Positive와 Negative 예시에 대한 임베딩을 얻고 Positive 임베딩에 대한 공분산을 최대화/Negative에 대한 공분산을 최소화 하는 형태군요. 적당한 수의 데이터로 튜닝 데이터 구축을 위한 부트스트래핑에 쓸 수 있을까 싶습니다.

#alignment