2024년 4월 4일

Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

(Keyu Tian, Yi Jiang, Zehuan Yuan, Bingyue Peng, Liwei Wang)

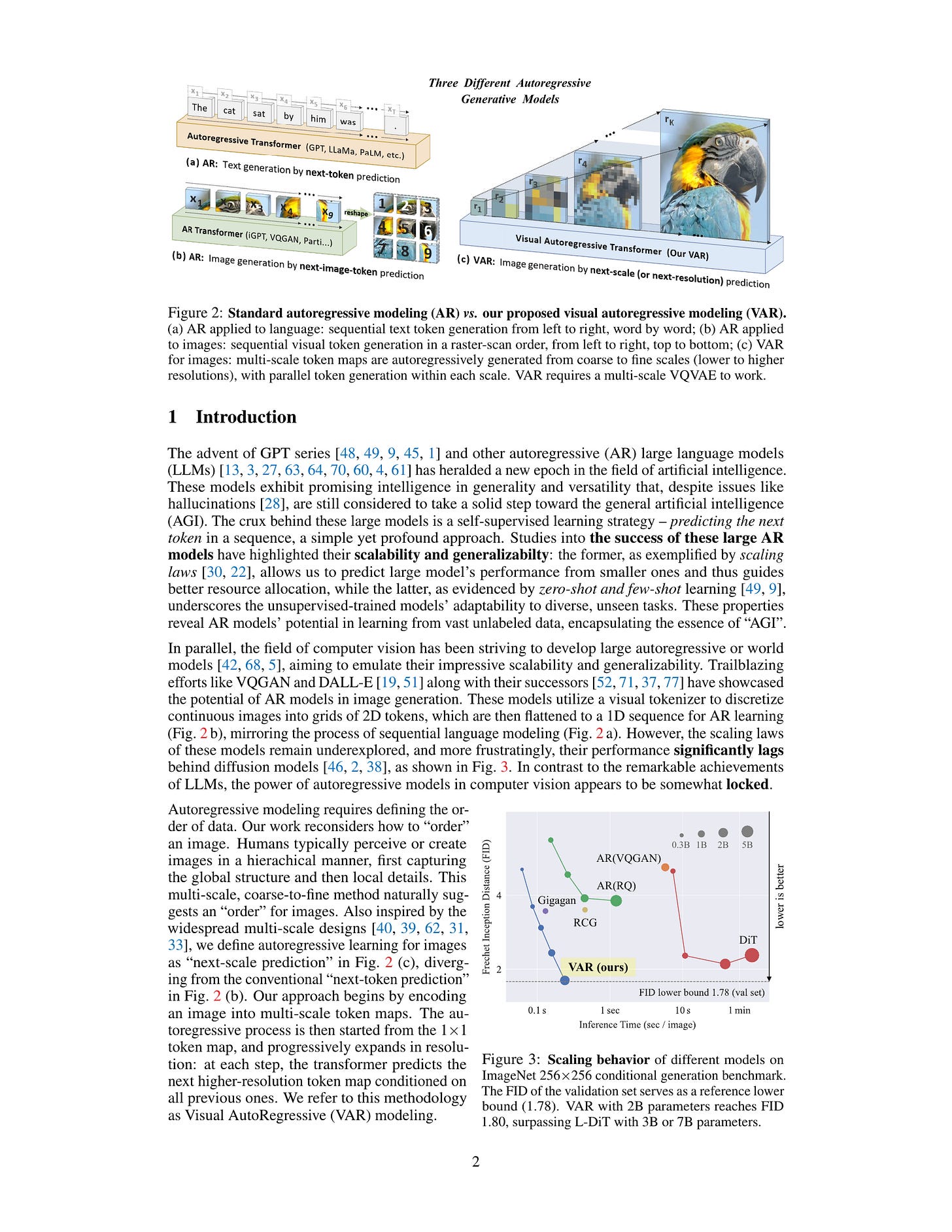

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction". This simple, intuitive methodology allows autoregressive (AR) transformers to learn visual distributions fast and generalize well: VAR, for the first time, makes AR models surpass diffusion transformers in image generation. On ImageNet 256x256 benchmark, VAR significantly improve AR baseline by improving Frechet inception distance (FID) from 18.65 to 1.80, inception score (IS) from 80.4 to 356.4, with around 20x faster inference speed. It is also empirically verified that VAR outperforms the Diffusion Transformer (DiT) in multiple dimensions including image quality, inference speed, data efficiency, and scalability. Scaling up VAR models exhibits clear power-law scaling laws similar to those observed in LLMs, with linear correlation coefficients near -0.998 as solid evidence. VAR further showcases zero-shot generalization ability in downstream tasks including image in-painting, out-painting, and editing. These results suggest VAR has initially emulated the two important properties of LLMs: Scaling Laws and zero-shot task generalization. We have released all models and codes to promote the exploration of AR/VAR models for visual generation and unified learning.

이건 굉장히 흥미롭네요. VQVAE 기반으로 Autoregressive 생성을 하되 이전 토큰에 대해 Conditioning을 하는 것이 아니라 이전 스케일, 그러니까 좀 더 작은 스케일에서 나온 토큰들에 Conditioning을 해서 생성하는 방법입니다. 단순하면서도 흥미로운 결과가 나왔네요. VQ-VAE-2 (https://arxiv.org/abs/1906.00446) 의 Hierarchical한 시도가 다시 등장했다는 느낌도 있습니다.

Scaling Law를 추정한 결과도 나와있습니다. 어쩌면 이미지 생성 업계의 판도가 다시 한 번 뒤바뀔지도 모르겠군요.

#autoregressive-model #image-generation

Mixture-of-Depths: Dynamically allocating compute in transformer-based language models

(David Raposo, Sam Ritter, Blake Richards, Timothy Lillicrap, Peter Conway Humphreys, Adam Santoro)

Transformer-based language models spread FLOPs uniformly across input sequences. In this work we demonstrate that transformers can instead learn to dynamically allocate FLOPs (or compute) to specific positions in a sequence, optimising the allocation along the sequence for different layers across the model depth. Our method enforces a total compute budget by capping the number of tokens (k) that can participate in the self-attention and MLP computations at a given layer. The tokens to be processed are determined by the network using a top-k routing mechanism. Since k is defined a priori, this simple procedure uses a static computation graph with known tensor sizes, unlike other conditional computation techniques. Nevertheless, since the identities of the k tokens are fluid, this method can expend FLOPs non-uniformly across the time and model depth dimensions. Thus, compute expenditure is entirely predictable in sum total, but dynamic and context-sensitive at the token-level. Not only do models trained in this way learn to dynamically allocate compute, they do so efficiently. These models match baseline performance for equivalent FLOPS and wall-clock times to train, but require a fraction of the FLOPs per forward pass, and can be upwards of 50% faster to step during post-training sampling.

Adaptive Depth / Early Exit과 비슷한데 연산을 레이어 중간에서 끊는 것이 아니라 각 레이어가 사용할 토큰의 양을 줄이는 방식이군요. 예를 들어 Self Attention이나 MLP가 시퀀스 내의 토큰 50%만 사용하도록 하고, Router를 사용해서 Top-K개를 끊어 레이어를 적용하는 방식입니다.

다만 Autoregressive Generation 상황이 문제겠군요. 일단은 성능 문제는 그렇게 크지 않다고 합니다.

#moe

Toward Inference-optimal Mixture-of-Expert Large Language Models

(Longfei Yun, Yonghao Zhuang, Yao Fu, Eric P Xing, Hao Zhang)

Mixture-of-Expert (MoE) based large language models (LLMs), such as the recent Mixtral and DeepSeek-MoE, have shown great promise in scaling model size without suffering from the quadratic growth of training cost of dense transformers. Like dense models, training MoEs requires answering the same question: given a training budget, what is the optimal allocation on the model size and number of tokens? We study the scaling law of MoE-based LLMs regarding the relations between the model performance, model size, dataset size, and the expert degree. Echoing previous research studying MoE in different contexts, we observe the diminishing return of increasing the number of experts, but this seems to suggest we should scale the number of experts until saturation, as the training cost would remain constant, which is problematic during inference time. We propose to amend the scaling law of MoE by introducing inference efficiency as another metric besides the validation loss. We find that MoEs with a few (4/8) experts are the most serving efficient solution under the same performance, but costs 2.5-3.5x more in training. On the other hand, training a (16/32) expert MoE much smaller (70-85%) than the loss-optimal solution, but with a larger training dataset is a promising setup under a training budget.

MoE Scaling Law와 비용 모델을 사용해 같은 Loss를 최소 비용으로 달성하는 Expert의 수가 얼마일지를 추정한 연구. Overtraining까지 고려하면 16 Expert 정도가 좋은 것 같다는 결론입니다.

다만 MoE Scaling Law, 비용 모델이 모두 맞아야 하고 MoE도 Expert의 크기를 줄이고 Top-K를 높이는 방식 등이 나오고 있어서 정확한 추정이 어려울 것 같긴 하네요. 다만 공교롭게도 요즘 나 MoE 모델들은 (Expert를 줄인 경우를 제외하면) 8, 16 Expert가 많긴 하군요.

#moe #scaling-law