April 30, 2024

Replacing Judges with Juries: Evaluating LLM Generations with a Panel of Diverse Models

(Pat Verga, Sebastian Hofstatter, Sophia Althammer, Yixuan Su, Aleksandra Piktus, Arkady Arkhangorodsky, Minjie Xu, Naomi White, Patrick Lewis)

As Large Language Models (LLMs) have become more advanced, they have outpaced our abilities to accurately evaluate their quality. Not only is finding data to adequately probe particular model properties difficult, but evaluating the correctness of a model's freeform generation alone is a challenge. To address this, many evaluations now rely on using LLMs themselves as judges to score the quality of outputs from other LLMs. Evaluations most commonly use a single large model like GPT-4. While this method has grown in popularity, it is costly, has been shown to introduce intra-model bias, and in this work, we find that very large models are often unnecessary. We propose instead to evaluate models using a Panel of LLm evaluators (PoLL). Across three distinct judge settings and spanning six different datasets, we find that using a PoLL composed of a larger number of smaller models outperforms a single large judge, exhibits less intra-model bias due to its composition of disjoint model families, and does so while being over seven times less expensive.

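An approach that evaluates with a panel of several cheaper models, such as Haiku, Command R, and GPT-3.5, instead of GPT-4. In the end, the panel also achieves better evaluation performance. It is worth keeping in mind, though, that GPT-4's performance as a judge comes out quite low in these results.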
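As a rough illustration of how a panel verdict might be combined, here is a minimal sketch that queries several independent judges and pools their outputs. It assumes simple majority (max) voting for binary correct/incorrect judgments and average pooling for numeric scores; the judge functions below are hypothetical toy stand-ins for calls to different model families, not real API clients.

```python
from statistics import mean
from typing import Callable, Sequence

# Hypothetical judge signatures: in practice each would wrap a call to a
# different model family (e.g. Haiku, Command R, GPT-3.5).
BinaryJudge = Callable[[str, str], bool]
ScoreJudge = Callable[[str, str], float]


def max_vote(verdicts: Sequence[bool]) -> bool:
    """Majority vote over correct/incorrect verdicts; a tie counts as incorrect."""
    return sum(verdicts) > len(verdicts) / 2


def poll_binary(judges: Sequence[BinaryJudge], question: str, answer: str) -> bool:
    """Ask every judge independently, then combine by majority vote."""
    return max_vote([judge(question, answer) for judge in judges])


def poll_score(judges: Sequence[ScoreJudge], question: str, answer: str) -> float:
    """Average-pool numeric scores (e.g. a 1-5 rating) from every judge."""
    return mean(judge(question, answer) for judge in judges)


# Toy judges for illustration only (hypothetical, not real evaluators).
def contains_gold(q: str, a: str) -> bool:
    return "paris" in a.lower()


def exact_match(q: str, a: str) -> bool:
    return a.strip().lower() == "paris"


def is_concise(q: str, a: str) -> bool:
    return len(a) < 50


if __name__ == "__main__":
    panel = [contains_gold, exact_match, is_concise]
    print(poll_binary(panel, "Capital of France?", "It is Paris."))  # True (2 of 3 agree)
    print(poll_score([lambda q, a: 4, lambda q, a: 3, lambda q, a: 5], "q", "a"))  # 4
```

Here one strict judge rejects the answer but the other two accept it, so the panel accepts. The intended benefit of drawing judges from disjoint model families is that no single model's bias decides the outcome.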

#evaluation

Benchmarking Benchmark Leakage in Large Language Models

(Ruijie Xu, Zengzhi Wang, Run-Ze Fan, Pengfei Liu)

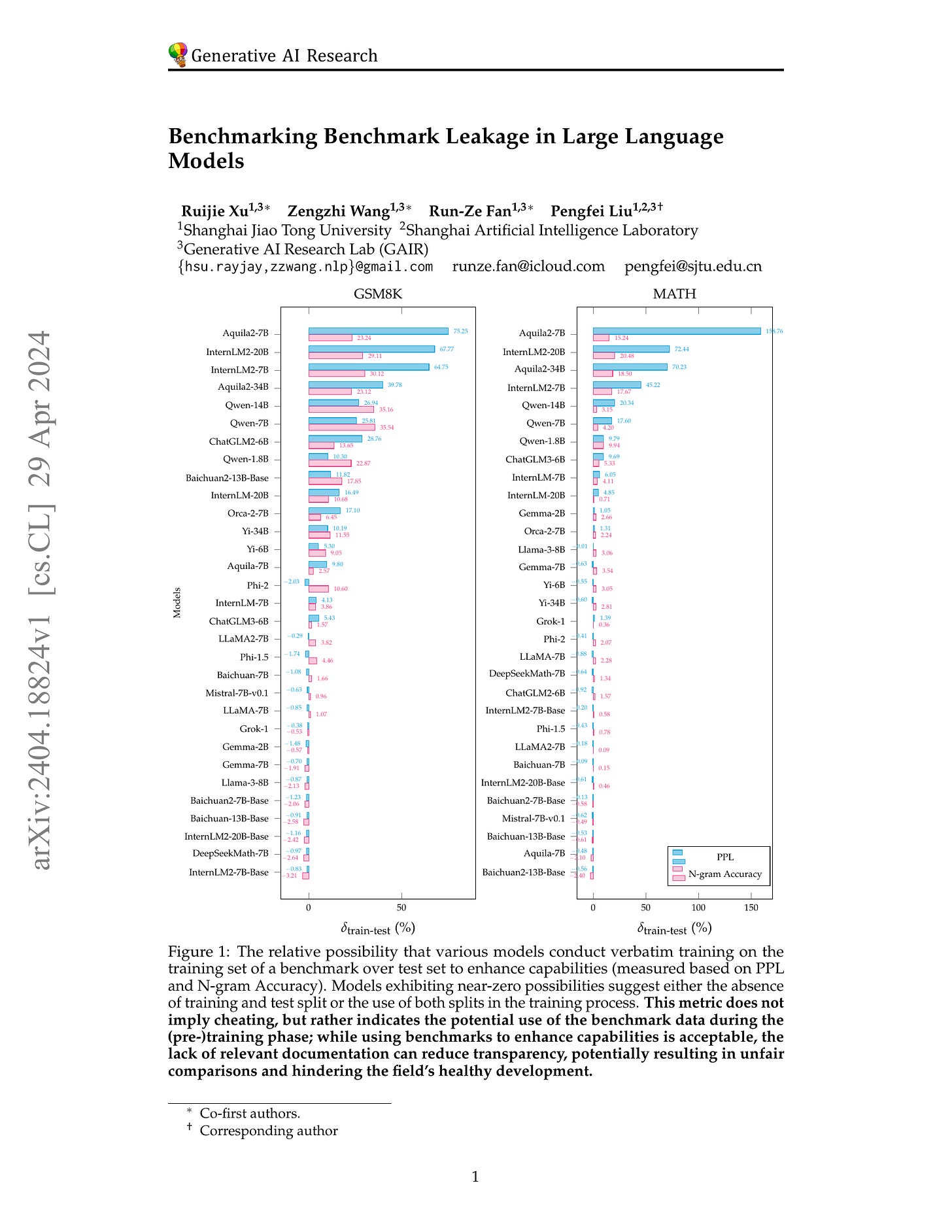

Amid the expanding use of pre-training data, the phenomenon of benchmark dataset leakage has become increasingly prominent, exacerbated by opaque training processes and the often undisclosed inclusion of supervised data in contemporary Large Language Models (LLMs). This issue skews benchmark effectiveness and fosters potentially unfair comparisons, impeding the field's healthy development. To address this, we introduce a detection pipeline utilizing Perplexity and N-gram accuracy, two simple and scalable metrics that gauge a model's prediction precision on benchmarks, to identify potential data leakages. By analyzing 31 LLMs under the context of mathematical reasoning, we reveal substantial instances of training-set and even test-set misuse, resulting in potentially unfair comparisons. These findings prompt us to offer several recommendations regarding model documentation, benchmark setup, and future evaluations. Notably, we propose the "Benchmark Transparency Card" to encourage clear documentation of benchmark utilization, promoting transparency and healthy developments of LLMs. We have made our leaderboard, pipeline implementation, and model predictions publicly available, fostering future research.

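A contamination check for benchmark datasets. Using perplexity and n-gram accuracy as metrics, the authors measure the score difference between a benchmark dataset and a paraphrased version of that dataset. The paper's point is that having trained on benchmark training sets and the like may not be a problem in itself, but if you did use them, you should say so.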
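The two metrics and the original-versus-paraphrase comparison can be sketched roughly as follows. This is a minimal illustration under stated assumptions: `predict_next` is a hypothetical greedy-decoding callback (not a real library API), perplexity is computed from per-token natural-log probabilities, and the number and placement of n-gram start points, as well as reading the gap as a leakage signal, are simplifications rather than the paper's exact protocol.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical model interface (assumption, not a real library API):
#   predict_next(prefix_tokens, n) -> list of n greedily predicted tokens
PredictFn = Callable[[Sequence[str], int], List[str]]


def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood (natural log) over the tokens."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def ngram_accuracy(tokens: Sequence[str], predict_next: PredictFn, n: int = 5, k: int = 5) -> float:
    """Fraction of ~k evenly spaced start points where the model reproduces the next n tokens exactly."""
    if len(tokens) <= n:
        raise ValueError("sequence too short for the chosen n")
    last = len(tokens) - n  # latest start that still leaves n tokens to predict
    starts = sorted({max(1, round(i * last / k)) for i in range(1, k + 1)})
    hits = 0
    for s in starts:
        predicted = predict_next(tokens[:s], n)
        hits += list(predicted) == list(tokens[s:s + n])
    return hits / len(starts)


def leakage_gap(original_score: float, paraphrased_score: float) -> float:
    """A large positive gap (better on original than paraphrase) hints at memorization."""
    return original_score - paraphrased_score


# Toy "model" that has memorized one text and replays it by position (illustration only).
MEMORIZED = "the quick brown fox jumps over the lazy dog again and again".split()
PARAPHRASE = "a fast brown fox leaps over a lazy dog again and again".split()


def memorizer(prefix: Sequence[str], n: int) -> List[str]:
    return MEMORIZED[len(prefix):len(prefix) + n]


if __name__ == "__main__":
    orig = ngram_accuracy(MEMORIZED, memorizer, n=3, k=3)
    para = ngram_accuracy(PARAPHRASE, memorizer, n=3, k=3)
    print(orig, para, leakage_gap(orig, para))
```

The memorizing toy model reproduces every sampled 3-gram of the original text but only one of three on the paraphrase, giving a large gap; a model that had not seen the benchmark would be expected to score similarly on both versions.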

#benchmark