2024년 4월 18일

Probing the 3D Awareness of Visual Foundation Models

(Mohamed El Banani, Amit Raj, Kevis-Kokitsi Maninis, Abhishek Kar, Yuanzhen Li, Michael Rubinstein, Deqing Sun, Leonidas Guibas, Justin Johnson, Varun Jampani)

Recent advances in large-scale pretraining have yielded visual foundation models with strong capabilities. Not only can recent models generalize to arbitrary images for their training task, their intermediate representations are useful for other visual tasks such as detection and segmentation. Given that such models can classify, delineate, and localize objects in 2D, we ask whether they also represent their 3D structure? In this work, we analyze the 3D awareness of visual foundation models. We posit that 3D awareness implies that representations (1) encode the 3D structure of the scene and (2) consistently represent the surface across views. We conduct a series of experiments using task-specific probes and zero-shot inference procedures on frozen features. Our experiments reveal several limitations of the current models. Our code and analysis can be found at https://github.com/mbanani/probe3d.

Depth/Surface Normal/Correspondence Estimation으로 CLIP, DINO, StableDiffusion 같은 Vision 인코더 모델들의 3d Awareness를 측정했군요.

DINO와 Stable Diffusion이 꽤 좋은 성능을 보여줍니다. 다만 Correspondence에서 모델들이 모두 약한 모습을 보여주네요. 3D Consistency에 대해 문제가 있다는 의미가 됩니다.

비디오나 3D 데이터에 대해 Contrastive 혹은 Generative Objective로 학습된 인코더가 가장 우수한 특성을 보여줄 것 같다는 느낌이 드네요.

#vision

OLMo 1.7

OLMo의 업데이트 버전 1.7이 공개됐네요. 데이터셋 측면에서 많은 부분이 달라졌습니다. (Dolma 1.7) 최근 나타나고 있는 방법들이 많이 등장했습니다.

Common Crawl만으로 커버하는 대신 가치가 높은 데이터셋을 따로 분류하여 관리. Stack Exchange 등.

Instruction Tuning 데이터셋들을 사용. FLAN 등.

Deduplication. Dolma에서는 Paragraph Exact Deduplication만 수행했었죠. Fuzzy Deduplication이 추가됐습니다.

Quality Filtering. Dolma는 본래 휴리스틱 기반 필터링을 사용했었죠. FastText Classifier를 사용한 퀄리티 필터링을 사용했습니다.

3T Cosine Decay 스케줄을 사용한 다음 2T에서 끊고 높은 퀄리티의 데이터셋에 대해 50B 학습시켰습니다.

6T 학습, 더 많은 데이터소스 등 아직 고려할만한 것들이 많이 남아 있네요.

#llm #dataset

Chinchilla Scaling: A replication attempt

(Tamay Besiroglu, Ege Erdil, Matthew Barnett, Josh You)

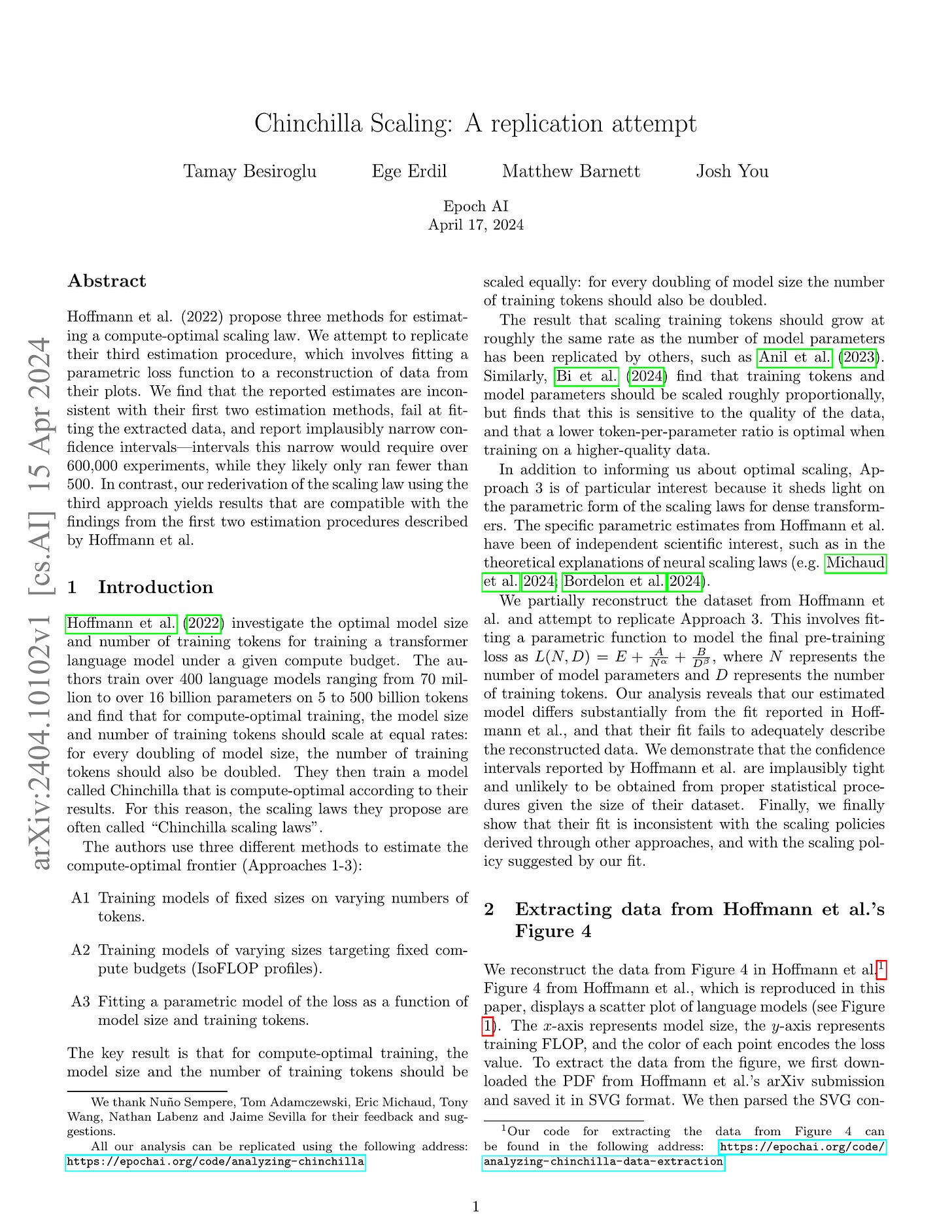

Hoffmann et al. (2022) propose three methods for estimating a compute-optimal scaling law. We attempt to replicate their third estimation procedure, which involves fitting a parametric loss function to a reconstruction of data from their plots. We find that the reported estimates are inconsistent with their first two estimation methods, fail at fitting the extracted data, and report implausibly narrow confidence intervals--intervals this narrow would require over 600,000 experiments, while they likely only ran fewer than 500. In contrast, our rederivation of the scaling law using the third approach yields results that are compatible with the findings from the first two estimation procedures described by Hoffmann et al.

Chinchilla 논문의 SVG 이미지에서 값들을 뽑아 Chinchilla의 Parametric Function을 다시 추정해봤군요. 결과적으로 파라미터가 상당히 다르다고 합니다. Chinchilla의 Parameteric Fit으로 최적 파라미터와 데이터의 규모를 유도했을 때 다른 접근과 차이가 상당히 크다는 점에서 이상하다고 생각하는 사람들이 많았을 것 같은데, 다시 추정한 파라미터를 사용하면 1:20이라는 파라미터 대 데이터의 비율에 좀 더 가까운 값이 나오는군요.

#scaling-law

Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study

(Shusheng Xu, Wei Fu, Jiaxuan Gao, Wenjie Ye, Weilin Liu, Zhiyu Mei, Guangju Wang, Chao Yu, Yi Wu)

Reinforcement Learning from Human Feedback (RLHF) is currently the most widely used method to align large language models (LLMs) with human preferences. Existing RLHF methods can be roughly categorized as either reward-based or reward-free. Novel applications such as ChatGPT and Claude leverage reward-based methods that first learn a reward model and apply actor-critic algorithms, such as Proximal Policy Optimization (PPO). However, in academic benchmarks, state-of-the-art results are often achieved via reward-free methods, such as Direct Preference Optimization (DPO). Is DPO truly superior to PPO? Why does PPO perform poorly on these benchmarks? In this paper, we first conduct both theoretical and empirical studies on the algorithmic properties of DPO and show that DPO may have fundamental limitations. Moreover, we also comprehensively examine PPO and reveal the key factors for the best performances of PPO in fine-tuning LLMs. Finally, we benchmark DPO and PPO across various a collection of RLHF testbeds, ranging from dialogue to code generation. Experiment results demonstrate that PPO is able to surpass other alignment methods in all cases and achieve state-of-the-art results in challenging code competitions.

PPO가 스스로를 다시 증명해야 하는가 하는 생각이 들긴 합니다만 현재 자체 구축한 데이터셋에서 PPO와 다른 방법들을 비교한 사례는 아주 드물다는 것을 고려하면 그 자체로 의미가 있을 것 같긴 합니다.

여기서 지적하는 DPO의 문제는 OOD 응답을 선호하는 경향이 강하다는 것이네요. 최근 DPO로 인해 길이가 길어지는 문제에 대한 분석에서 (https://arxiv.org/abs/2403.19159) 이 현상이 OOD 문제로 인해 발생하는 것 같다는 추정이 있었죠.

#rlhf

Fewer Truncations Improve Language Modeling

(Hantian Ding, Zijian Wang, Giovanni Paolini, Varun Kumar, Anoop Deoras, Dan Roth, Stefano Soatto)

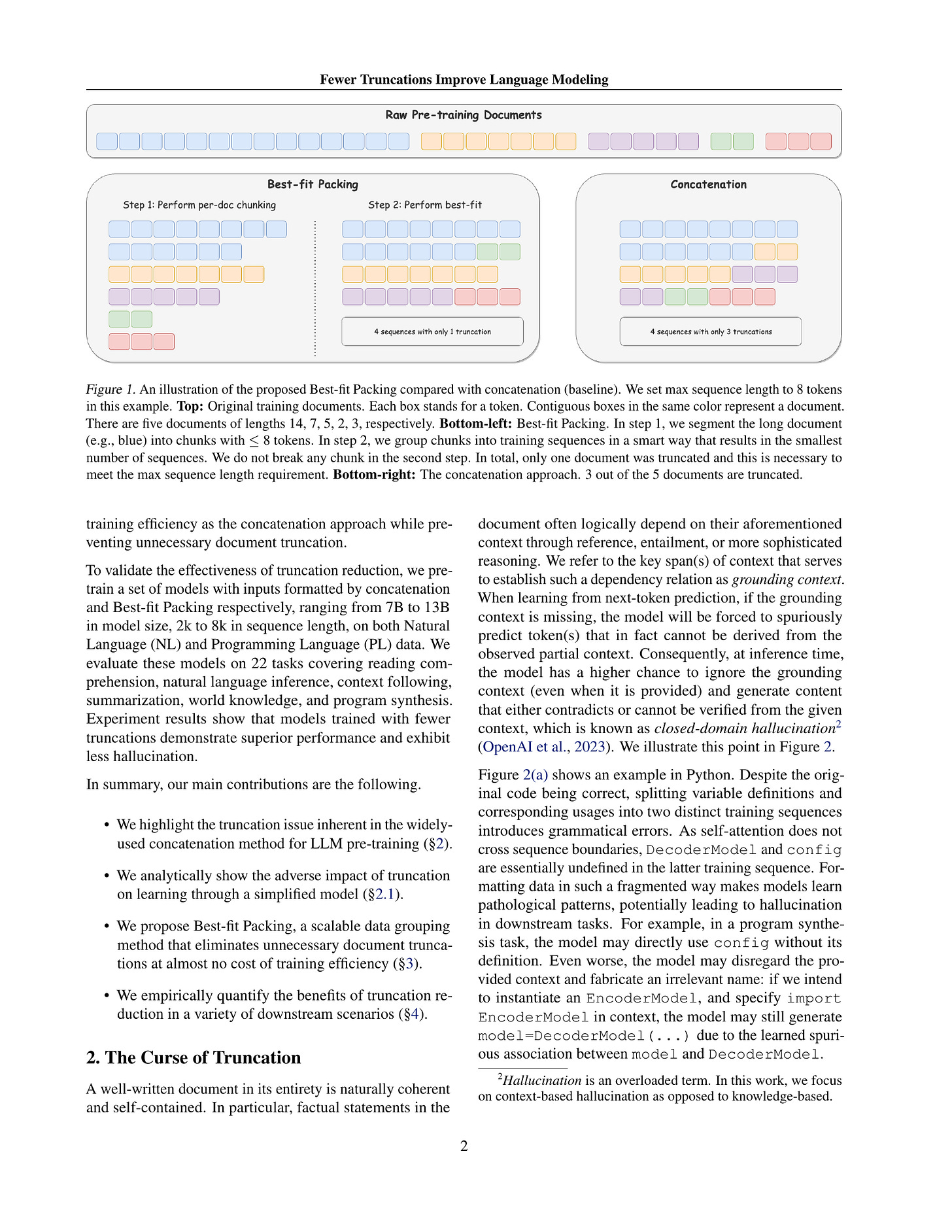

In large language model training, input documents are typically concatenated together and then split into sequences of equal length to avoid padding tokens. Despite its efficiency, the concatenation approach compromises data integrity -- it inevitably breaks many documents into incomplete pieces, leading to excessive truncations that hinder the model from learning to compose logically coherent and factually consistent content that is grounded on the complete context. To address the issue, we propose Best-fit Packing, a scalable and efficient method that packs documents into training sequences through length-aware combinatorial optimization. Our method completely eliminates unnecessary truncations while retaining the same training efficiency as concatenation. Empirical results from both text and code pre-training show that our method achieves superior performance (e.g., relatively +4.7% on reading comprehension; +16.8% in context following; and +9.2% on program synthesis), and reduces closed-domain hallucination effectively by up to 58.3%.

LLM 프리트레이닝 시점에서 문서를 이어붙인 다음 시퀀스 길이 제한에 맞게 자르다보니 문서가 잘려나가는 경우가 많으니 문서가 절단되는 경우를 줄이도록 패킹한다는 아이디어. 재미있네요.

#llm #pretraining

Many-Shot In-Context Learning

(Rishabh Agarwal, Avi Singh, Lei M. Zhang, Bernd Bohnet, Stephanie Chan, Ankesh Anand, Zaheer Abbas, Azade Nova, John D. Co-Reyes, Eric Chu, Feryal Behbahani, Aleksandra Faust, Hugo Larochelle)

Large language models (LLMs) excel at few-shot in-context learning (ICL) -- learning from a few examples provided in context at inference, without any weight updates. Newly expanded context windows allow us to investigate ICL with hundreds or thousands of examples -- the many-shot regime. Going from few-shot to many-shot, we observe significant performance gains across a wide variety of generative and discriminative tasks. While promising, many-shot ICL can be bottlenecked by the available amount of human-generated examples. To mitigate this limitation, we explore two new settings: Reinforced and Unsupervised ICL. Reinforced ICL uses model-generated chain-of-thought rationales in place of human examples. Unsupervised ICL removes rationales from the prompt altogether, and prompts the model only with domain-specific questions. We find that both Reinforced and Unsupervised ICL can be quite effective in the many-shot regime, particularly on complex reasoning tasks. Finally, we demonstrate that, unlike few-shot learning, many-shot learning is effective at overriding pretraining biases and can learn high-dimensional functions with numerical inputs. Our analysis also reveals the limitations of next-token prediction loss as an indicator of downstream ICL performance.

In-context Learning의 샷을 늘려보기. 1M LLM이 있으니 가뿐하군요.

Chain of Thought Rationale을 사람이 작성해줘야 한다는 것이 문제이므로 답을 사용해서 생성하는 ReST 같은 방법이나, 아예 Rationale을 몇 개 예제만 빼고 없앤 다음 질문과 답의 페어만 늘리는 방식을 테스트해봤습니다.

#in-context-learning