2024년 4월 12일

GPT-4-Turbo-2024-04-09

https://github.com/openai/simple-evals

OpenAI가 GPT-4-Turbo-2024-04-09에 대한 벤치마크와 함께 벤치마크 수트를 공개했네요. 확실히 이전 모델들보다 벤치마크 성능이 높아졌습니다. 그리고 응답이 좀 더 간결하고 대화에 가까운 방향으로 스타일이 바뀌었다고 하네요.

벤치마크에서 가장 크게 향상된 것은 GPQA와 MATH인 것 같습니다. GPQA는 Claude 3 Opus와 비슷한 수준이긴 한데 MATH가 압도적인 수준이 됐네요. 수학에 대한 특별한 향상이 들어갔다는 것을 시사하는 듯 싶습니다.

#llm

Rho-1: Not All Tokens Are What You Need

(Zhenghao Lin, Zhibin Gou, Yeyun Gong, Xiao Liu, Yelong Shen, Ruochen Xu, Chen Lin, Yujiu Yang, Jian Jiao, Nan Duan, Weizhu Chen)

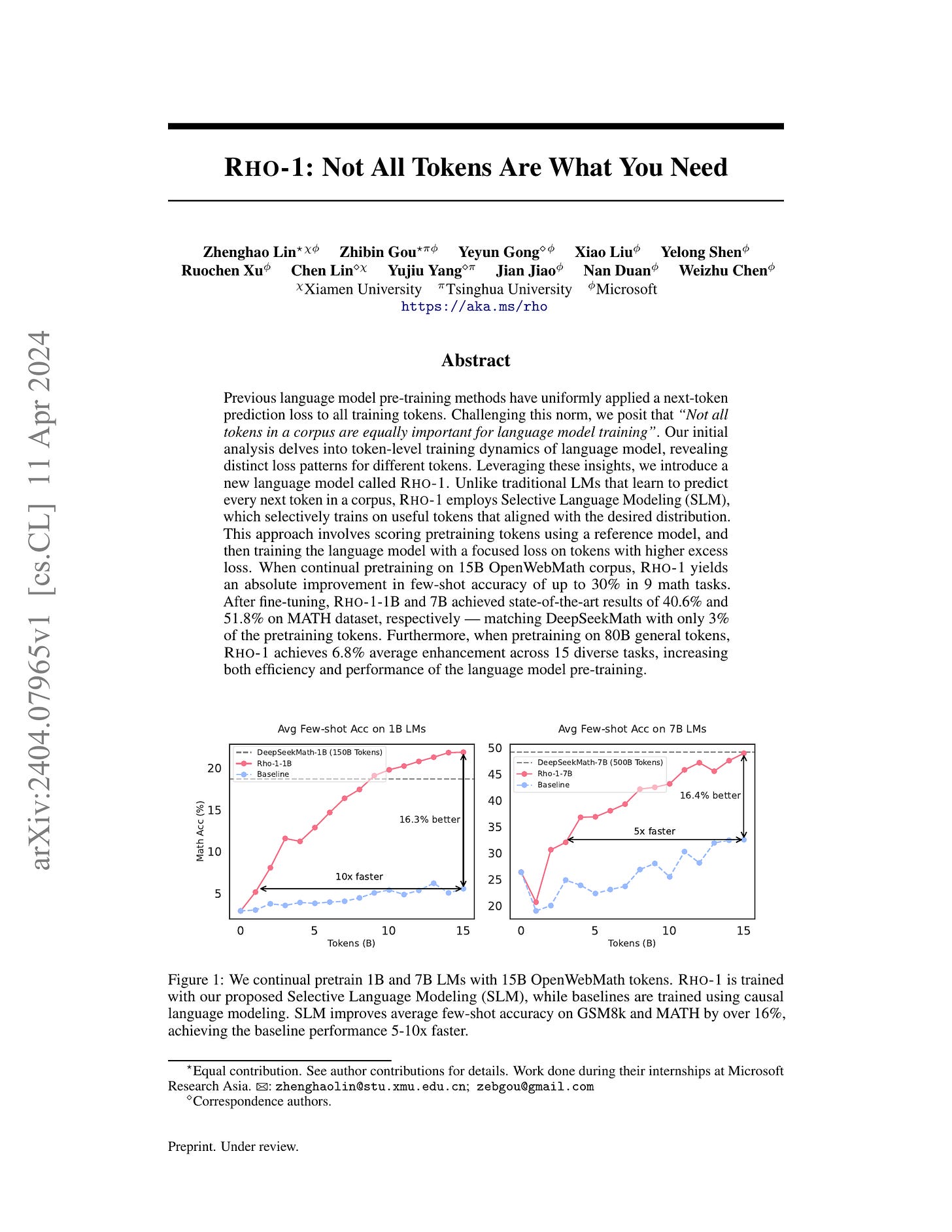

Previous language model pre-training methods have uniformly applied a next-token prediction loss to all training tokens. Challenging this norm, we posit that "Not all tokens in a corpus are equally important for language model training". Our initial analysis delves into token-level training dynamics of language model, revealing distinct loss patterns for different tokens. Leveraging these insights, we introduce a new language model called Rho-1. Unlike traditional LMs that learn to predict every next token in a corpus, Rho-1 employs Selective Language Modeling (SLM), which selectively trains on useful tokens that aligned with the desired distribution. This approach involves scoring pretraining tokens using a reference model, and then training the language model with a focused loss on tokens with higher excess loss. When continual pretraining on 15B OpenWebMath corpus, Rho-1 yields an absolute improvement in few-shot accuracy of up to 30% in 9 math tasks. After fine-tuning, Rho-1-1B and 7B achieved state-of-the-art results of 40.6% and 51.8% on MATH dataset, respectively - matching DeepSeekMath with only 3% of the pretraining tokens. Furthermore, when pretraining on 80B general tokens, Rho-1 achieves 6.8% average enhancement across 15 diverse tasks, increasing both efficiency and performance of the language model pre-training.

일단 학습 과정에서 Loss가 꾸준히 낮은 경우, Loss가 높았다가 낮아지는 경우, 오히려 높아지는 경우, 그리고 계속 높은 경우가 있다는 구분에서 시작하네요. 후자 두 가지는 노이즈인 경우가 많다고 합니다.이 발견을 어떻게 활용할 것인가? 레퍼런스 모델을 높은 퀄리티의 데이터셋에 학습시킨 다음 현재 학습 중인 모델과의 Loss 차이가 큰 경우만 골라서 학습시키는 방법을 사용했습니다. 벤치마크에 대한 효과가 굉장하네요.

Hard Example Mining이나 Focal Loss가 생각나는군요. 다만 단순히 Loss가 높은 것을 고르는 것이 아니라 노이즈로 인해 높은 경우를 걸러내는 효과가 중요한 것이 아닐까 싶습니다.

#pretraining #llm

RecurrentGemma: Moving Past Transformers for Efficient Open Language Models

(Aleksandar Botev, Soham De, Samuel L Smith, Anushan Fernando, George-Cristian Muraru, Ruba Haroun, Leonard Berrada, Razvan Pascanu, Pier Giuseppe Sessa, Robert Dadashi, Léonard Hussenot, Johan Ferret, Sertan Girgin, Olivier Bachem, Alek Andreev, Kathleen Kenealy, Thomas Mesnard, Cassidy Hardin, Surya Bhupatiraju, Shreya Pathak, Laurent Sifre, Morgane Rivière, Mihir Sanjay Kale, Juliette Love, Pouya Tafti, Armand Joulin, Noah Fiedel, Evan Senter, Yutian Chen, Srivatsan Srinivasan, Guillaume Desjardins, David Budden, Arnaud Doucet, Sharad Vikram, Adam Paszke, Trevor Gale, Sebastian Borgeaud, Charlie Chen, Andy Brock, Antonia Paterson, Jenny Brennan, Meg Risdal, Raj Gundluru, Nesh Devanathan, Paul Mooney, Nilay Chauhan, Phil Culliton, Luiz GUStavo Martins, Elisa Bandy, David Huntsperger, Glenn Cameron, Arthur Zucker, Tris Warkentin, Ludovic Peran, Minh Giang, Zoubin Ghahramani, Clément Farabet, Koray Kavukcuoglu, Demis Hassabis, Raia Hadsell, Yee Whye Teh, Nando de Frietas)

We introduce RecurrentGemma, an open language model which uses Google's novel Griffin architecture. Griffin combines linear recurrences with local attention to achieve excellent performance on language. It has a fixed-sized state, which reduces memory use and enables efficient inference on long sequences. We provide a pre-trained model with 2B non-embedding parameters, and an instruction tuned variant. Both models achieve comparable performance to Gemma-2B despite being trained on fewer tokens.

Gemma 2B + Griffin (https://arxiv.org/abs/2402.19427) 이 나왔군요. 전반적으로 (2T vs 3T 학습이라는 것도 고려하면) 성능이 비슷하게 나왔다는 것이 포인트입니다.Gemma 1.1-it에 대해서도 그렇고 새로운 RLHF 알고리즘을 적용했다고 하는데 (https://huggingface.co/google/gemma-1.1-7b-it) 이쪽의 정체가 무엇일지 궁금하네요.

#llm #state-space-model #rlhf

JetMoE: Reaching Llama2 Performance with 0.1M Dollars

(Yikang Shen, Zhen Guo, Tianle Cai, Zengyi Qin)

Large Language Models (LLMs) have achieved remarkable results, but their increasing resource demand has become a major obstacle to the development of powerful and accessible super-human intelligence. This report introduces JetMoE-8B, a new LLM trained with less than $0.1 million, using 1.25T tokens from carefully mixed open-source corpora and 30,000 H100 GPU hours. Despite its low cost, the JetMoE-8B demonstrates impressive performance, with JetMoE-8B outperforming the Llama2-7B model and JetMoE-8B-Chat surpassing the Llama2-13B-Chat model. These results suggest that LLM training can be much more cost-effective than generally thought. JetMoE-8B is based on an efficient Sparsely-gated Mixture-of-Experts (SMoE) architecture, composed of attention and feedforward experts. Both layers are sparsely activated, allowing JetMoE-8B to have 8B parameters while only activating 2B for each input token, reducing inference computation by about 70% compared to Llama2-7B. Moreover, JetMoE-8B is highly open and academia-friendly, using only public datasets and training code. All training parameters and data mixtures have been detailed in this report to facilitate future efforts in the development of open foundation models. This transparency aims to encourage collaboration and further advancements in the field of accessible and efficient LLMs. The model weights are publicly available at https://github.com/myshell-ai/JetMoE.

JetMoE (https://research.myshell.ai/jetmoe) 의 테크니컬 리포트. MiniCPM과 비슷하게 학습 스케줄을 둘로 나눠 후반에 SFT 데이터를 사용하는 방식 + MoE FFN과 Mixture of Attention Heads 사용이라는 조합.

#moe #llm

From Words to Numbers: Your Large Language Model Is Secretly A Capable Regressor When Given In-Context Examples

(Robert Vacareanu, Vlad-Andrei Negru, Vasile Suciu, Mihai Surdeanu)

We analyze how well pre-trained large language models (e.g., Llama2, GPT-4, Claude 3, etc) can do linear and non-linear regression when given in-context examples, without any additional training or gradient updates. Our findings reveal that several large language models (e.g., GPT-4, Claude 3) are able to perform regression tasks with a performance rivaling (or even outperforming) that of traditional supervised methods such as Random Forest, Bagging, or Gradient Boosting. For example, on the challenging Friedman #2 regression dataset, Claude 3 outperforms many supervised methods such as AdaBoost, SVM, Random Forest, KNN, or Gradient Boosting. We then investigate how well the performance of large language models scales with the number of in-context exemplars. We borrow from the notion of regret from online learning and empirically show that LLMs are capable of obtaining a sub-linear regret.

In-context Learning으로 선형 회귀 뿐만 아니라 비선형 회귀도 풀 수 있다는 결과. LLM으로 이런 회귀 문제를 풀어야 할 일은 없겠지만 In-context Learning에서 Gradient Descent 같은 알고리즘이 구현된다는 아이디어의 측면에서 생각해볼 수 있겠네요. 물론 회귀 문제를 풀 수 있다는 것을 회귀 문제를 풀 수 있는 알고리즘이 들어 있다는 결과로 바로 연결할 수는 없겠지만요.

#in-context-learning

HGRN2: Gated Linear RNNs with State Expansion

(Zhen Qin, Songlin Yang, Weixuan Sun, Xuyang Shen, Dong Li, Weigao Sun, Yiran Zhong)

Hierarchically gated linear RNN (HGRN,Qin et al. 2023) has demonstrated competitive training speed and performance in language modeling, while offering efficient inference. However, the recurrent state size of HGRN remains relatively small, which limits its expressiveness.To address this issue, inspired by linear attention, we introduce a simple outer-product-based state expansion mechanism so that the recurrent state size can be significantly enlarged without introducing any additional parameters. The linear attention form also allows for hardware-efficient training.Our extensive experiments verify the advantage of HGRN2 over HGRN1 in language modeling, image classification, and Long Range Arena.Our largest 3B HGRN2 model slightly outperforms Mamba and LLaMa Architecture Transformer for language modeling in a controlled experiment setting; and performs competitively with many open-source 3B models in downstream evaluation while using much fewer total training tokens.

Mamba 같은 State Space Model이나 RWKV-5/6와 같이 Matrix valued State를 RNN에 결합하기 위한 시도. 결과적으로는 Gated Linear Attention과 거의 같은 형태가 됩니다. (https://arxiv.org/abs/2312.06635)

여전히 Attention을 필요할 것 같지만 State Space Model과 Attention의 하이브리드의 트랜스포머에 대비한 한계가 있는가? 하면 아직까지 그런 문제가 표면적으로는 드러나지 않는 것 같네요.

#state-space-model

Why do small language models underperform? Studying Language Model Saturation via the Softmax Bottleneck

(Nathan Godey, Éric de la Clergerie, Benoît Sagot)

Recent advances in language modeling consist in pretraining highly parameterized neural networks on extremely large web-mined text corpora. Training and inference with such models can be costly in practice, which incentivizes the use of smaller counterparts. However, it has been observed that smaller models can suffer from saturation, characterized as a drop in performance at some advanced point in training followed by a plateau. In this paper, we find that such saturation can be explained by a mismatch between the hidden dimension of smaller models and the high rank of the target contextual probability distribution. This mismatch affects the performance of the linear prediction head used in such models through the well-known softmax bottleneck phenomenon. We measure the effect of the softmax bottleneck in various settings and find that models based on less than 1000 hidden dimensions tend to adopt degenerate latent representations in late pretraining, which leads to reduced evaluation performance.

작은 LM에서 학습 후반에 성능이 감소하는 현상에 대한 분석. 이 문제의 원인을 Representation의 Anistropy로 짚고 있고 이를 Softmax Bottleneck과 연결하고 있네요. Contrastive Learning에서의 Rank Collapse 문제를 다시 떠오르게 하는 주제입니다.

#llm #contrastive-learning #representation

Ferret-v2: An Improved Baseline for Referring and Grounding with Large Language Models

(Haotian Zhang, Haoxuan You, Philipp Dufter, Bowen Zhang, Chen Chen, Hong-You Chen, Tsu-Jui Fu, William Yang Wang, Shih-Fu Chang, Zhe Gan, Yinfei Yang)

While Ferret seamlessly integrates regional understanding into the Large Language Model (LLM) to facilitate its referring and grounding capability, it poses certain limitations: constrained by the pre-trained fixed visual encoder and failed to perform well on broader tasks. In this work, we unveil Ferret-v2, a significant upgrade to Ferret, with three key designs. (1) Any resolution grounding and referring: A flexible approach that effortlessly handles higher image resolution, improving the model's ability to process and understand images in greater detail. (2) Multi-granularity visual encoding: By integrating the additional DINOv2 encoder, the model learns better and diverse underlying contexts for global and fine-grained visual information. (3) A three-stage training paradigm: Besides image-caption alignment, an additional stage is proposed for high-resolution dense alignment before the final instruction tuning. Experiments show that Ferret-v2 provides substantial improvements over Ferret and other state-of-the-art methods, thanks to its high-resolution scaling and fine-grained visual processing.

Ferret (https://arxiv.org/abs/2310.07704) 의 개선 버전. 고해상도 이미지를 일정 그리드로 크롭하는 최근 거의 정석인 접근에 Global 이미지에는 CLIP를, 크롭에는 DINOv2를 사용하고 Visual Sampler의 입력으로 Global Feature를 Interpolation해서 결합. 그리고 Dense Alignment를 위한 학습 단계를 사용.

#vision-language #visual-grounding

Learning to Localize Objects Improves Spatial Reasoning in Visual-LLMs

(Kanchana Ranasinghe, Satya Narayan Shukla, Omid Poursaeed, Michael S. Ryoo, Tsung-Yu Lin)

Integration of Large Language Models (LLMs) into visual domain tasks, resulting in visual-LLMs (V-LLMs), has enabled exceptional performance in vision-language tasks, particularly for visual question answering (VQA). However, existing V-LLMs (e.g. BLIP-2, LLaVA) demonstrate weak spatial reasoning and localization awareness. Despite generating highly descriptive and elaborate textual answers, these models fail at simple tasks like distinguishing a left vs right location. In this work, we explore how image-space coordinate based instruction fine-tuning objectives could inject spatial awareness into V-LLMs. We discover optimal coordinate representations, data-efficient instruction fine-tuning objectives, and pseudo-data generation strategies that lead to improved spatial awareness in V-LLMs. Additionally, our resulting model improves VQA across image and video domains, reduces undesired hallucination, and generates better contextual object descriptions. Experiments across 5 vision-language tasks involving 14 different datasets establish the clear performance improvements achieved by our proposed framework.

Visual Grounding이나 지정한 위치를 기술하도록 하는 Location 관련 과제를 학습시키면 공간적 추론 능력이 향상된다는 결과.

Vision Language 모델에서 이미지에 포함된 정보를 최대한 추출하도록 하려면 다양한 이미지 관련 과제들이 학습에 포함되어야 하는 것이 아닌가 하는 생각이 있습니다. 다만 이쪽은 한 가지 Objective로 Unsupervised Training을 한다고 하는 텍스트에 대한 학습과는 전혀 다른 방향이죠. 이 차이를 해소할 수 있다면 재미있지 않을까 싶습니다.

이미지나 텍스트 자체에 대한 Unsupervised Training이 각 모달리티에 대해서 풍성한 구조를 추출해낼 수 있겠지만, 이 둘을 잇는 Alignment 과정에서의 Caption Generation은 이미지에 대해서 할 수 있는 수많은 과제 중 하나에 그친다는 것이 문제일지도 모르겠습니다. 물론 Captioning이 가지는 다양성도 아주 크긴 하지만요. 전 이미지에 포함된 밀집된 정보에 비해 언어에 포함된 정보는 성긴 경우가 많다고 봅니다. (물론 이것도 의견이 달라서 텍스트에 비해 이미지는 정보량이 적다고 생각하는 사람들도 있더군요. 용량으로 따지면 그럴지도 모르겠습니다.)

#vision-language #visual-grounding