March 6, 2024

Masked Thought: Simply Masking Partial Reasoning Steps Can Improve Mathematical Reasoning Learning of Language Models

(Changyu Chen, Xiting Wang, Ting-En Lin, Ang Lv, Yuchuan Wu, Xin Gao, Ji-Rong Wen, Rui Yan, Yongbin Li)

In reasoning tasks, even a minor error can cascade into inaccurate results, leading to suboptimal performance of large language models in such domains. Earlier fine-tuning approaches sought to mitigate this by leveraging more precise supervisory signals from human labeling, larger models, or self-sampling, although at a high cost. Conversely, we develop a method that avoids external resources, relying instead on introducing perturbations to the input. Our training approach randomly masks certain tokens within the chain of thought, a technique we found to be particularly effective for reasoning tasks. When applied to fine-tuning with GSM8K, this method achieved a 5% improvement in accuracy over standard supervised fine-tuning with only a few lines of code modified and no additional labeling effort. Furthermore, it is complementary to existing methods. When integrated with related data augmentation methods, it leads to an average improvement of 3% in GSM8K accuracy and 1% in MATH accuracy across five datasets of various quality and size, as well as two base models. We further investigate the mechanisms behind this improvement through case studies and quantitative analysis, suggesting that our approach may provide superior support for the model in capturing long-distance dependencies, especially those related to questions. This enhancement could deepen understanding of premises in questions and prior steps. Our code is available at GitHub.

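The result: when fine-tuning on Chain of Thought samples, applying strong noise to some tokens in the response sequence improves performance. The analysis is that this reduces excessive dependence on adjacent tokens. It may also help narrow the training-inference gap. (Scheduled Sampling with Temperature = 1 is not quite enough for that, though.)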

I think the use of masking is an interesting direction. It sometimes makes me wonder whether autoregressive LM is actually too easy an objective.
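As a rough illustration of the masking idea, here is a minimal sketch, not the authors' code. It assumes the noise is applied by replacing chain-of-thought tokens in the model input with a mask token while the training labels keep the original tokens. The function name, the `mask_prob` default, and the `-100` ignore index (the usual PyTorch cross-entropy convention) are all assumptions made for illustration.

```python
import random


def mask_reasoning_tokens(input_ids, response_start, mask_token_id,
                          mask_prob=0.2, rng=None):
    """Randomly replace response tokens in the INPUT with a mask token.

    input_ids      : token ids for question + chain-of-thought response
    response_start : index of the first response token
    mask_token_id  : id used as the noise token (hypothetical choice)
    mask_prob      : per-token masking probability (hypothetical default)
    """
    if rng is None:
        rng = random.Random()

    masked_inputs = list(input_ids)
    # Only perturb the response; the question tokens stay intact.
    for i in range(response_start, len(masked_inputs)):
        if rng.random() < mask_prob:
            masked_inputs[i] = mask_token_id

    # Labels still hold the ORIGINAL response tokens, so the model must
    # predict the clean next token from a partially corrupted prefix.
    # Question positions are excluded from the loss via -100.
    labels = [-100] * response_start + list(input_ids[response_start:])
    return masked_inputs, labels


if __name__ == "__main__":
    ids = [11, 12, 13, 21, 22, 23, 24, 25]  # toy ids: 3 question + 5 response
    inputs, labels = mask_reasoning_tokens(ids, 3, mask_token_id=0,
                                           rng=random.Random(0))
    print(inputs)
    print(labels)
```

Because the labels are untouched, this drops into a standard supervised fine-tuning loop as a change to the data collation step only.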

#regularization #instruction-tuning

InfiMM-HD: A Leap Forward in High-Resolution Multimodal Understanding

(Haogeng Liu, Quanzeng You, Xiaotian Han, Yiqi Wang, Bohan Zhai, Yongfei Liu, Yunzhe Tao, Huaibo Huang, Ran He, Hongxia Yang)

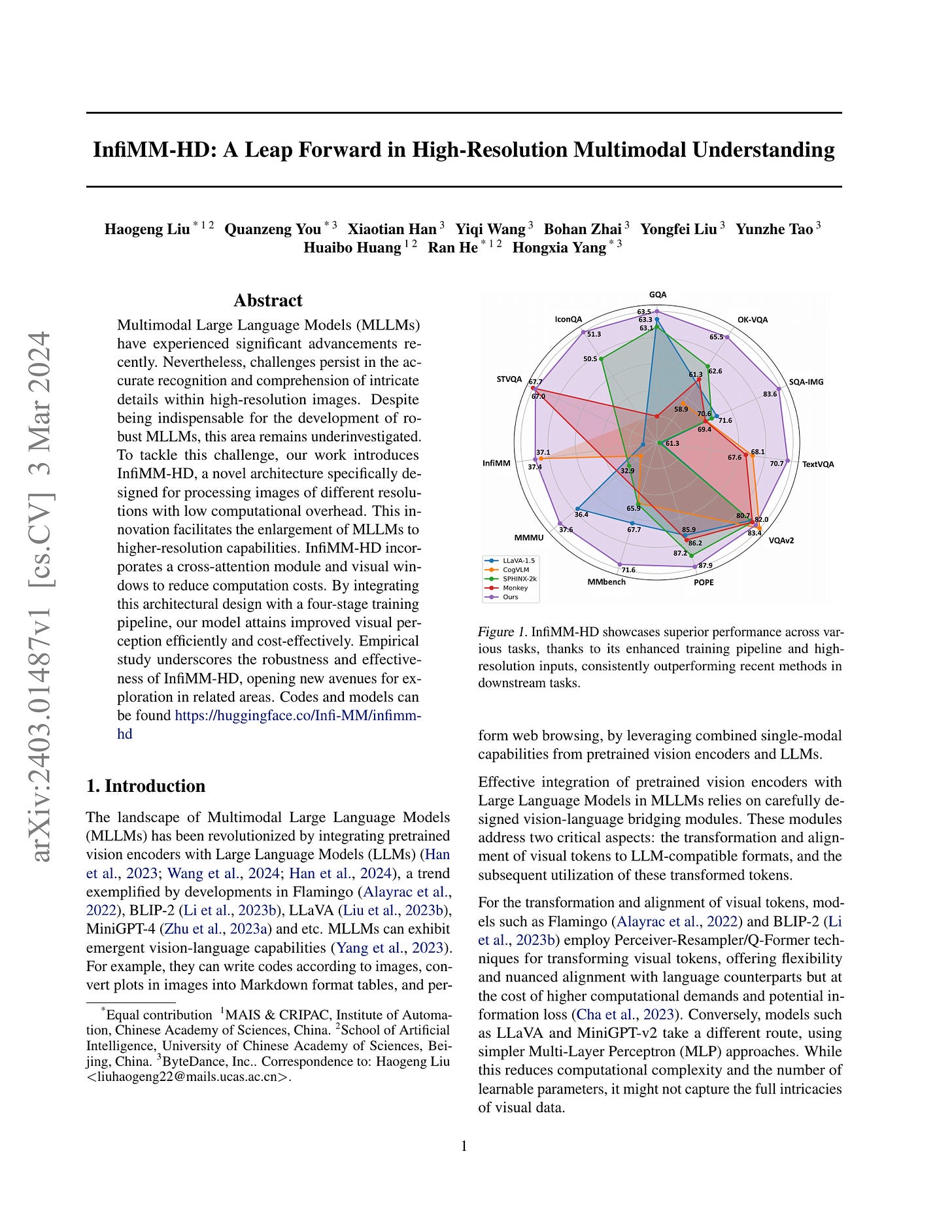

Multimodal Large Language Models (MLLMs) have experienced significant advancements recently. Nevertheless, challenges persist in the accurate recognition and comprehension of intricate details within high-resolution images. Despite being indispensable for the development of robust MLLMs, this area remains underinvestigated. To tackle this challenge, our work introduces InfiMM-HD, a novel architecture specifically designed for processing images of different resolutions with low computational overhead. This innovation facilitates the enlargement of MLLMs to higher-resolution capabilities. InfiMM-HD incorporates a cross-attention module and visual windows to reduce computation costs. By integrating this architectural design with a four-stage training pipeline, our model attains improved visual perception efficiently and cost-effectively. Empirical study underscores the robustness and effectiveness of InfiMM-HD, opening new avenues for exploration in related areas. Codes and models can be found at https://huggingface.co/Infi-MM/infimm-hd

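A high-resolution VLM. They adopted a Flamingo-style Cross Attention based model. In their tests, one Cross Attention layer per four blocks seemed to be enough, so the idea is that this can be more efficient than appending image tokens directly to the sequence when the number of image tokens grows.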

They start at 224px and then move to 448px, using a setup that combines a thumbnail with crops.
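To make the efficiency argument concrete, here is a back-of-the-envelope sketch that counts query-key pairs in attention for the two designs. It is a simplification under stated assumptions: it ignores causal masking, the MLP blocks, and the vision encoder, and the layer and token counts in the usage example are hypothetical rather than taken from the paper.

```python
def attention_pairs_concat(num_layers, text_tokens, image_tokens):
    """Image tokens appended to the sequence: every layer runs
    self-attention over text + image tokens."""
    seq_len = text_tokens + image_tokens
    return num_layers * seq_len * seq_len


def attention_pairs_cross(num_layers, text_tokens, image_tokens,
                          cross_every=4):
    """Flamingo-style: self-attention over text only, plus one
    cross-attention layer (text queries, image keys) every
    `cross_every` blocks."""
    self_attn = num_layers * text_tokens * text_tokens
    num_cross_layers = num_layers // cross_every
    cross_attn = num_cross_layers * text_tokens * image_tokens
    return self_attn + cross_attn


if __name__ == "__main__":
    # Hypothetical sizes: 32 blocks, 512 text tokens, 2048 image tokens.
    concat = attention_pairs_concat(32, 512, 2048)
    cross = attention_pairs_cross(32, 512, 2048)
    print(f"concat: {concat:,} pairs")
    print(f"cross:  {cross:,} pairs")
    print(f"ratio:  {concat / cross:.1f}x")
```

The gap widens as the image token count grows, since the concatenated design scales quadratically in the image tokens while the cross-attention design scales linearly.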

#vision-language

Balancing Enhancement, Harmlessness, and General Capabilities: Enhancing Conversational LLMs with Direct RLHF

(Chen Zheng, Ke Sun, Hang Wu, Chenguang Xi, Xun Zhou)

In recent advancements in Conversational Large Language Models (LLMs), a concerning trend has emerged, showing that many new base LLMs experience a knowledge reduction in their foundational capabilities following Supervised Fine-Tuning (SFT). This process often leads to issues such as forgetting or a decrease in the base model's abilities. Moreover, fine-tuned models struggle to align with user preferences, inadvertently increasing the generation of toxic outputs when specifically prompted. To overcome these challenges, we adopted an innovative approach by completely bypassing SFT and directly implementing Harmless Reinforcement Learning from Human Feedback (RLHF). Our method not only preserves the base model's general capabilities but also significantly enhances its conversational abilities, while notably reducing the generation of toxic outputs. Our approach holds significant implications for fields that demand a nuanced understanding and generation of responses, such as customer service. We applied this methodology to Mistral, the most popular base model, thereby creating Mistral-Plus. Our validation across 11 general tasks demonstrates that Mistral-Plus outperforms similarly sized open-source base models and their corresponding instruct versions. Importantly, the conversational abilities of Mistral-Plus were significantly improved, indicating a substantial advancement over traditional SFT models in both safety and user preference alignment.

Trying alignment with RLHF alone, without SFT. In fact, this is also the approach Anthropic said it was using in Constitutional AI.

#alignment #rlhf