March 13, 2024

Synth$^2$: Boosting Visual-Language Models with Synthetic Captions and Image Embeddings

(Sahand Sharifzadeh, Christos Kaplanis, Shreya Pathak, Dharshan Kumaran, Anastasija Ilic, Jovana Mitrovic, Charles Blundell, Andrea Banino)

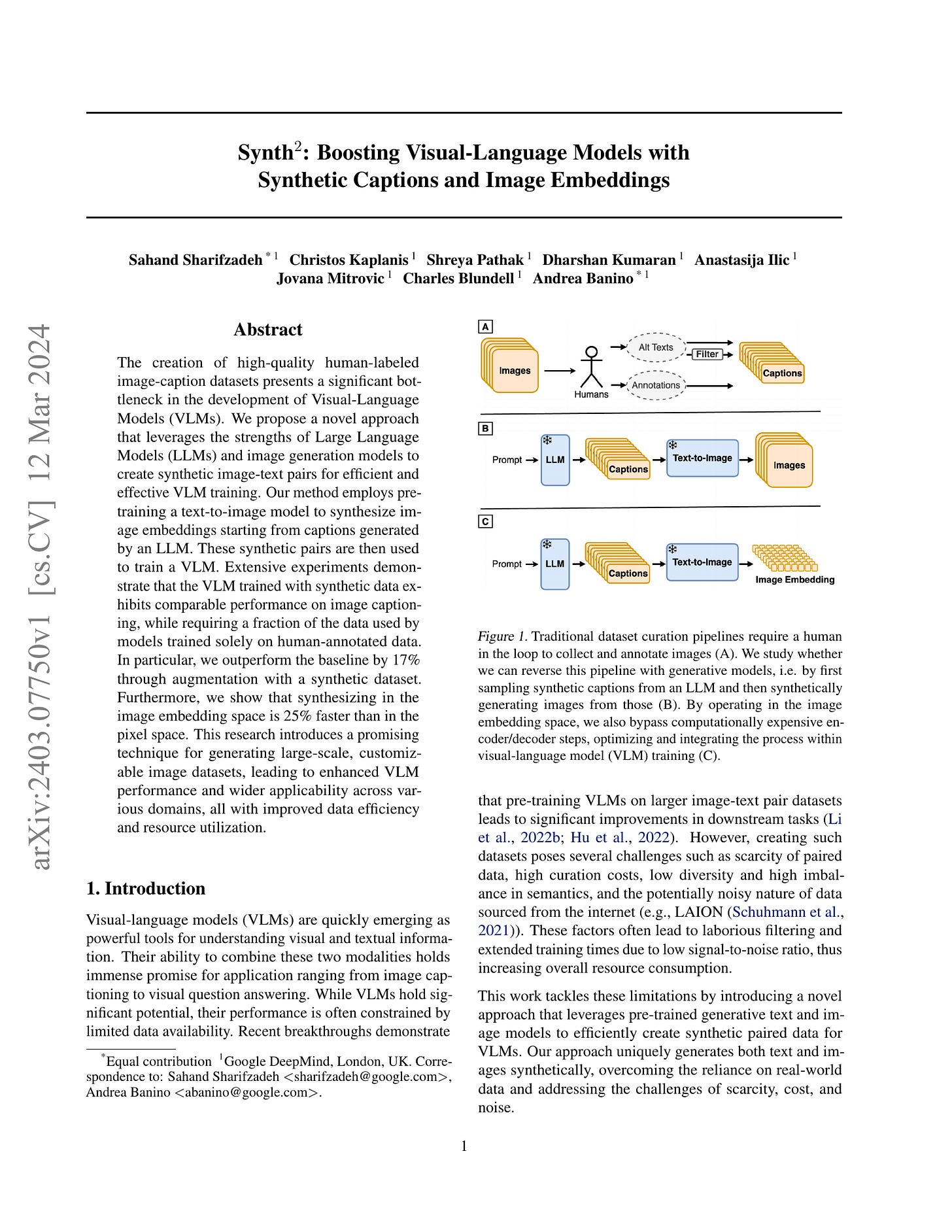

The creation of high-quality human-labeled image-caption datasets presents a significant bottleneck in the development of Visual-Language Models (VLMs). We propose a novel approach that leverages the strengths of Large Language Models (LLMs) and image generation models to create synthetic image-text pairs for efficient and effective VLM training. Our method employs pretraining a text-to-image model to synthesize image embeddings starting from captions generated by an LLM. These synthetic pairs are then used to train a VLM. Extensive experiments demonstrate that the VLM trained with synthetic data exhibits comparable performance on image captioning, while requiring a fraction of the data used by models trained solely on human-annotated data. In particular, we outperform the baseline by 17% through augmentation with a synthetic dataset. Furthermore, we show that synthesizing in the image embedding space is 25% faster than in the pixel space. This research introduces a promising technique for generating large-scale, customizable image datasets, leading to enhanced VLM performance and wider applicability across various domains, all with improved data efficiency and resource utilization.

Caption generation -> image generation -> training a vision-language model on the resulting images and captions. It also feels a bit like back-translation.

#vision-language #synthetic-data

Improving Reinforcement Learning from Human Feedback Using Contrastive Rewards

(Wei Shen, Xiaoying Zhang, Yuanshun Yao, Rui Zheng, Hongyi Guo, Yang Liu)

Reinforcement learning from human feedback (RLHF) is the mainstream paradigm used to align large language models (LLMs) with human preferences. Yet existing RLHF heavily relies on accurate and informative reward models, which are vulnerable and sensitive to noise from various sources, e.g. human labeling errors, making the pipeline fragile. In this work, we improve the effectiveness of the reward model by introducing a penalty term on the reward, named as *contrastive rewards*. Our approach involves two steps: (1) an offline sampling step to obtain responses to prompts that serve as baseline calculation and (2) a contrastive reward calculated using the baseline responses and used in the Proximal Policy Optimization (PPO) step. We show that contrastive rewards enable the LLM to penalize reward uncertainty, improve robustness, encourage improvement over baselines, calibrate according to task difficulty, and reduce variance in PPO. We show empirically contrastive rewards can improve RLHF substantially, evaluated by both GPTs and humans, and our method consistently outperforms strong baselines.

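The idea is to draw several samples from the SFT model, average their reward scores, and then, at PPO time, use each sample's reward minus that average as the reward. This is the pattern that keeps showing up lately: generate multiple samples, then make use of the scores of those samples.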
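As a rough illustration of the mean-subtraction idea described above, here is a minimal sketch. It is not the authors' implementation: `sample_fn` (stands in for sampling from the SFT model), `reward_fn` (stands in for the reward model), and the sample count `k` are hypothetical placeholders, and the paper's exact formulation may differ from a plain mean.

```python
from statistics import mean
from typing import Callable, Dict, List


def baseline_rewards(
    prompts: List[str],
    sample_fn: Callable[[str], str],          # hypothetical: draws one SFT response
    reward_fn: Callable[[str, str], float],   # hypothetical: reward model score
    k: int = 4,                               # assumed number of offline samples
) -> Dict[str, float]:
    """Offline step: average reward of k sampled responses per prompt."""
    baselines = {}
    for prompt in prompts:
        responses = [sample_fn(prompt) for _ in range(k)]
        baselines[prompt] = mean(reward_fn(prompt, r) for r in responses)
    return baselines


def contrastive_reward(
    prompt: str,
    response: str,
    reward_fn: Callable[[str, str], float],
    baselines: Dict[str, float],
) -> float:
    """PPO-time step: raw reward minus the precomputed per-prompt baseline."""
    return reward_fn(prompt, response) - baselines[prompt]
```

Because the baseline is computed once per prompt before PPO starts, the only extra cost during PPO in this sketch is a dictionary lookup and a subtraction.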

#rlhf

Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM

(Sainbayar Sukhbaatar, Olga Golovneva, Vasu Sharma, Hu Xu, Xi Victoria Lin, Baptiste Rozière, Jacob Kahn, Daniel Li, Wen-tau Yih, Jason Weston, Xian Li)

We investigate efficient methods for training Large Language Models (LLMs) to possess capabilities in multiple specialized domains, such as coding, math reasoning and world knowledge. Our method, named Branch-Train-MiX (BTX), starts from a seed model, which is branched to train experts in embarrassingly parallel fashion with high throughput and reduced communication cost. After individual experts are asynchronously trained, BTX brings together their feedforward parameters as experts in Mixture-of-Expert (MoE) layers and averages the remaining parameters, followed by an MoE-finetuning stage to learn token-level routing. BTX generalizes two special cases, the Branch-Train-Merge method, which does not have the MoE finetuning stage to learn routing, and sparse upcycling, which omits the stage of training experts asynchronously. Compared to alternative approaches, BTX achieves the best accuracy-efficiency tradeoff.

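Domain experts are trained first, and then these experts are combined into an MoE model. The FFNs are simply grouped as MoE experts, while the self-attention parameters are just averaged. No domain labels or similar signals are brought in; routing is ordinary token-level routing.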
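The merge step described above can be sketched as follows. This is a toy illustration under stated assumptions, not the BTX code: parameters are plain lists of floats, and the convention that FFN parameters are identified by the substring `"ffn"` in their name is hypothetical. The router, which would be newly initialized and learned during MoE finetuning, is left out.

```python
from typing import Dict, List, Union

Params = Dict[str, List[float]]
Merged = Dict[str, Union[List[float], List[List[float]]]]


def merge_experts(experts: List[Params], ffn_marker: str = "ffn") -> Merged:
    """Combine separately trained experts into one MoE-style parameter set.

    FFN parameters are kept as one copy per expert (the MoE experts);
    every other parameter (e.g. self-attention) is averaged element-wise.
    """
    merged: Merged = {}
    for name in experts[0]:
        tensors = [expert[name] for expert in experts]
        if ffn_marker in name:
            # Keep each expert's FFN weights side by side.
            merged[name] = [list(t) for t in tensors]
        else:
            # Average the remaining parameters across experts.
            merged[name] = [sum(vals) / len(vals) for vals in zip(*tensors)]
    return merged
```

For example, merging two experts whose `"attn.w"` values are `[1.0, 2.0]` and `[3.0, 4.0]` yields a single averaged `"attn.w"`, while their `"ffn.w"` entries are both preserved as separate experts.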

This also gives us 7B-scale experiments for Sparse Upcycling (https://arxiv.org/abs/2212.05055). As for how BTX compares with Sparse Upcycling, the authors emphasize that the domain experts can be trained completely in parallel (as long as there are enough GPUs).

#moe