March 12, 2024

Command-R

https://huggingface.co/CohereForAI/c4ai-command-r-v01

Cohere has released Command-R, a 35B multilingual LM with a 128K context window that covers 10 languages, including Korean. True to Cohere's focus, it is reportedly tuned with RAG/grounded generation and tool use in mind.

#llm

Gaudi 2

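Stability AI has tested Gaudi 2, and it looks usable. I wonder whether the training accelerator market is also turning into a three-way race among NVIDIA, Intel, and AMD.

Gaudi 3 is reportedly targeting 4x the performance of Gaudi 2.

On the NVIDIA side, the Blackwell B100 looks like it will arrive soon. Some reports say 700W and others say 1000W, but it still seems to be air-cooled for now. I suspect the generation after that will definitely move to liquid cooling. (https://www.tomshardware.com/tech-industry/artificial-intelligence/nvidias-b100-and-b200-processors-could-draw-an-astounding-1000-watts-per-gpu-dell-spills-the-beans-in-earnings-call)

Google's TPUv6 is also being talked about in connection with the recent confidential-information leak.

In any case, it is an interesting moment.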

#hardware

Stealing Part of a Production Language Model

(Nicholas Carlini, Daniel Paleka, Krishnamurthy Dj Dvijotham, Thomas Steinke, Jonathan Hayase, A. Feder Cooper, Katherine Lee, Matthew Jagielski, Milad Nasr, Arthur Conmy, Eric Wallace, David Rolnick, Florian Tramèr)

We introduce the first model-stealing attack that extracts precise, nontrivial information from black-box production language models like OpenAI's ChatGPT or Google's PaLM-2. Specifically, our attack recovers the embedding projection layer (up to symmetries) of a transformer model, given typical API access. For under $20 USD, our attack extracts the entire projection matrix of OpenAI's Ada and Babbage language models. We thereby confirm, for the first time, that these black-box models have a hidden dimension of 1024 and 2048, respectively. We also recover the exact hidden dimension size of the gpt-3.5-turbo model, and estimate it would cost under $2,000 in queries to recover the entire projection matrix. We conclude with potential defenses and mitigations, and discuss the implications of possible future work that could extend our attack.

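A method for extracting a model's hidden dimension and embedding projection weights through the API. The attack becomes efficient when log probs and logit bias are both available. So that is why OpenAI recently changed the API so that logit bias no longer affects log probs. (Though I do think it would be fine to simply disclose things like the hidden dimension and embedding weights.)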
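The hidden-dimension part of the attack rests on one observation: every logit vector is a linear image of a hidden state, so a stack of logit vectors has numerical rank equal to the hidden size. Below is a minimal sketch with NumPy, assuming full logit vectors are already available (the real attack has to reconstruct them from log probs and logit bias). The toy model, its sizes, the function name `estimate_hidden_dim`, and the tolerance are illustrative assumptions, not the paper's code.

```python
import numpy as np


def estimate_hidden_dim(logits: np.ndarray, rel_tol: float = 1e-8) -> int:
    """Estimate the hidden size from a (num_queries, vocab_size) logit matrix.

    The logits equal H @ W.T, so their rank is at most the hidden size.
    We count the singular values that are not numerically zero.
    """
    singular_values = np.linalg.svd(logits, compute_uv=False)
    return int(np.sum(singular_values > singular_values[0] * rel_tol))


# Toy stand-in for a black-box model (all sizes are hypothetical).
rng = np.random.default_rng(0)
hidden_dim, vocab_size, num_queries = 16, 200, 64

W = rng.normal(size=(vocab_size, hidden_dim))   # output projection (unknown to the attacker)
H = rng.normal(size=(num_queries, hidden_dim))  # final hidden states, one per prompt
logits = H @ W.T                                # what the attacker observes

estimated = estimate_hidden_dim(logits)
print(estimated)
```

The number of queries has to exceed the hidden size, otherwise the rank is capped by the number of rows and the estimate is only a lower bound.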

#attack

The pitfalls of next-token prediction

(Gregor Bachmann, Vaishnavh Nagarajan)

Can a mere next-token predictor faithfully model human intelligence? We crystallize this intuitive concern, which is fragmented in the literature. As a starting point, we argue that the two often-conflated phases of next-token prediction -- autoregressive inference and teacher-forced training -- must be treated distinctly. The popular criticism that errors can compound during autoregressive inference, crucially assumes that teacher-forcing has learned an accurate next-token predictor. This assumption sidesteps a more deep-rooted problem we expose: in certain classes of tasks, teacher-forcing can simply fail to learn an accurate next-token predictor in the first place. We describe a general mechanism of how teacher-forcing can fail, and design a minimal planning task where both the Transformer and the Mamba architecture empirically fail in that manner -- remarkably, despite the task being straightforward to learn. We provide preliminary evidence that this failure can be resolved when training to predict multiple tokens in advance. We hope this finding can ground future debates and inspire explorations beyond the next-token prediction paradigm. We make our code available under https://github.com/gregorbachmann/Next-Token-Failures

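A problem that arises under teacher forcing. When the preceding ground-truth tokens reveal too much about the answer, the model can learn a pattern that exploits this information instead of the algorithm that actually solves the problem. That leads naturally to the idea of hiding this information and having the model predict multiple tokens at once.

In many ways, I suspect the real issue is that autoregressive next-token prediction is easier than one would think.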

#autoregressive-model

Unraveling the Mystery of Scaling Laws: Part I

(Hui Su, Zhi Tian, Xiaoyu Shen, Xunliang Cai)

Scaling law principles indicate a power-law correlation between loss and variables such as model size, dataset size, and computational resources utilized during training. These principles play a vital role in optimizing various aspects of model pre-training, ultimately contributing to the success of large language models such as GPT-4, Llama and Gemini. However, the original scaling law paper by OpenAI did not disclose the complete details necessary to derive the precise scaling law formulas, and their conclusions are only based on models containing up to 1.5 billion parameters. Though some subsequent works attempt to unveil these details and scale to larger models, they often neglect the training dependency of important factors such as the learning rate, context length and batch size, leading to their failure to establish a reliable formula for predicting the test loss trajectory. In this technical report, we confirm that the scaling law formulations proposed in the original OpenAI paper remain valid when scaling the model size up to 33 billion, but the constant coefficients in these formulas vary significantly with the experiment setup. We meticulously identify influential factors and provide transparent, step-by-step instructions to estimate all constant terms in scaling-law formulas by training on models with only 1M~60M parameters. Using these estimated formulas, we showcase the capability to accurately predict various attributes for models with up to 33B parameters before their training, including (1) the minimum possible test loss; (2) the minimum required training steps and processed tokens to achieve a specific loss; (3) the critical batch size with an optimal time/computation trade-off at any loss value; and (4) the complete test loss trajectory with arbitrary batch size.

They estimate scaling laws as a function of model size, training steps, and batch size, and evaluate them on models up to 33B/3T. It would be fun to try this out myself.
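As a rough illustration of the workflow (fit constants on small models, then extrapolate), here is a minimal sketch that fits only the model-size term of the OpenAI formulation, L(N) = (N_c / N)^α, in log-log space. The data is synthetic and noiseless, the constants `true_alpha` and `true_n_c` are placeholders chosen for the demo, and the paper's full procedure also involves training steps and batch size, which this sketch ignores.

```python
import math


def fit_power_law(sizes, losses):
    """Least-squares fit of L(N) = (N_c / N) ** alpha in log-log space.

    log L = -alpha * log N + alpha * log N_c, so the slope gives alpha
    and the intercept gives N_c.
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    alpha = -slope
    n_c = math.exp(intercept / alpha)
    return alpha, n_c


def predict_loss(n, alpha, n_c):
    return (n_c / n) ** alpha


# Synthetic "small model" runs (placeholder constants, not measured values).
true_alpha, true_n_c = 0.076, 8.8e13
sizes = [1e6, 3e6, 1e7, 3e7, 6e7]
losses = [predict_loss(n, true_alpha, true_n_c) for n in sizes]

alpha, n_c = fit_power_law(sizes, losses)
predicted_33b = predict_loss(33e9, alpha, n_c)
print(alpha, n_c, predicted_33b)
```

With real training runs the losses are noisy and, as the paper stresses, the fitted constants shift with the experiment setup, so the extrapolation is only as good as the match between the small and large runs.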

#scaling-law

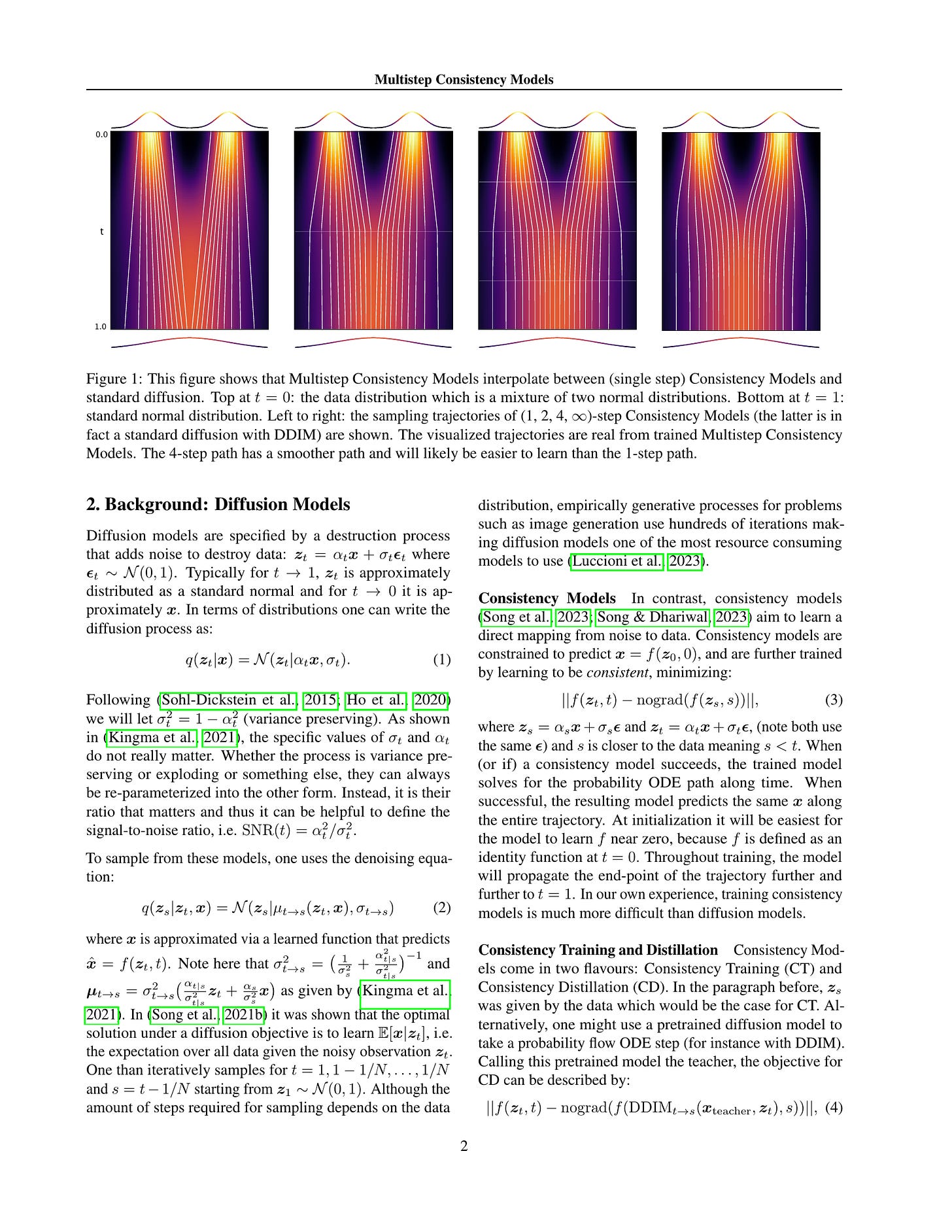

Multistep Consistency Models

(Jonathan Heek, Emiel Hoogeboom, Tim Salimans)

Diffusion models are relatively easy to train but require many steps to generate samples. Consistency models are far more difficult to train, but generate samples in a single step. In this paper we propose Multistep Consistency Models: A unification between Consistency Models (Song et al., 2023) and TRACT (Berthelot et al., 2023) that can interpolate between a consistency model and a diffusion model: a trade-off between sampling speed and sampling quality. Specifically, a 1-step consistency model is a conventional consistency model whereas we show that a ∞-step consistency model is a diffusion model. Multistep Consistency Models work really well in practice. By increasing the sample budget from a single step to 2-8 steps, we can train models more easily that generate higher quality samples, while retaining much of the sampling speed benefits. Notable results are 1.4 FID on Imagenet 64 in 8 step and 2.1 FID on Imagenet128 in 8 steps with consistency distillation. We also show that our method scales to a text-to-image diffusion model, generating samples that are very close to the quality of the original model.

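A modification of consistency models so that they predict not only step 0 but also intermediate steps. When the number of these steps matches the total number of sampling steps, the method becomes identical to diffusion. In addition, they fix the oversmoothed samples that errors cause in a deterministic sampler by increasing the weight of the estimated noise (aDDIM). With around 8 steps it reaches SOTA performance on ImageNet.

They also hint that Google has built a 20B-scale text-to-image model.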

#diffusion

An Image is Worth 1/2 Tokens After Layer 2: Plug-and-Play Inference Acceleration for Large Vision-Language Models

(Liang Chen, Haozhe Zhao, Tianyu Liu, Shuai Bai, Junyang Lin, Chang Zhou, Baobao Chang)

In this study, we identify the inefficient attention phenomena in Large Vision-Language Models (LVLMs), notably within prominent models like LLaVA-1.5, QwenVL-Chat and Video-LLaVA. We find out that the attention computation over visual tokens is of extreme inefficiency in the deep layers of popular LVLMs, suggesting a need for a sparser approach compared to textual data handling. To this end, we introduce FastV, a versatile plug-and-play method designed to optimize computational efficiency by learning adaptive attention patterns in early layers and pruning visual tokens in subsequent ones. Our evaluations demonstrate FastV's ability to dramatically reduce computational costs (e.g., a 45% reduction in FLOPs for LLaVA-1.5-13B) without sacrificing performance in a wide range of image and video understanding tasks. The computational efficiency and performance trade-off of FastV are highly customizable and pareto-efficient. It can compress the FLOPs of a 13B-parameter model to achieve a lower budget than that of a 7B-parameter model, while still maintaining superior performance. We believe FastV has practical values for deployment of LVLMs in edge devices and commercial models. Code is released at https://github.com/pkunlp-icler/FastV.

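The finding is that image tokens receive little attention weight relative to how much of the sequence they occupy. In other words, they argue that in the deeper layers almost no attention goes to the image tokens, and the image information seems to have been absorbed entirely into other tokens. Hence the idea: why not prune these image tokens?

This reminds me of the earlier attempts to prune or merge tokens in ViTs. (https://arxiv.org/abs/2210.09461)

Concatenating the image sequence with the text sequence fits nicely with long-context modeling, which is an advantage, but it seems to bring inefficiency along with it. That is probably also why Flamingo-style cross attention (https://arxiv.org/abs/2403.01487) has resurfaced. It would be interesting if a good design could be found here as well.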
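The pruning idea can be sketched in a few lines: at some layer, score each image token by the average attention it receives, keep the top fraction, and drop the rest before the later layers. This is a toy illustration in plain Python, not the FastV implementation. The function name `prune_image_tokens`, the head-averaged attention matrix, the scoring rule, and the 50% keep ratio are all assumptions made for the example.

```python
def prune_image_tokens(attn, image_idx, keep_ratio=0.5):
    """Return the sorted indices of the tokens kept after pruning.

    attn[q][k] is the (head-averaged) attention weight from query token q
    to key token k. Non-image tokens are always kept; image tokens are
    ranked by the average attention they receive.
    """
    seq_len = len(attn)
    received = [
        sum(attn[q][k] for q in range(seq_len)) / seq_len for k in range(seq_len)
    ]
    n_keep = max(1, int(len(image_idx) * keep_ratio))
    ranked = sorted(image_idx, key=lambda k: received[k], reverse=True)
    kept_images = set(ranked[:n_keep])
    image_set = set(image_idx)
    return [i for i in range(seq_len) if i not in image_set or i in kept_images]


# Toy sequence: tokens 0-3 are image tokens, tokens 4-5 are text tokens.
# Every query uses the same attention distribution to keep the example small.
row = [0.05, 0.30, 0.05, 0.20, 0.25, 0.15]
attn = [row[:] for _ in range(6)]

kept = prune_image_tokens(attn, image_idx=[0, 1, 2, 3], keep_ratio=0.5)
print(kept)
```

In a real model the kept indices would be used to slice the hidden states (and the KV cache) so that all later layers run on the shorter sequence, which is where the FLOPs saving comes from.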

#vision-language

Augmentations vs Algorithms: What Works in Self-Supervised Learning

(Warren Morningstar, Alex Bijamov, Chris Duvarney, Luke Friedman, Neha Kalibhat, Luyang Liu, Philip Mansfield, Renan Rojas-Gomez, Karan Singhal, Bradley Green, Sushant Prakash)

We study the relative effects of data augmentations, pretraining algorithms, and model architectures in Self-Supervised Learning (SSL). While the recent literature in this space leaves the impression that the pretraining algorithm is of critical importance to performance, understanding its effect is complicated by the difficulty in making objective and direct comparisons between methods. We propose a new framework which unifies many seemingly disparate SSL methods into a single shared template. Using this framework, we identify aspects in which methods differ and observe that in addition to changing the pretraining algorithm, many works also use new data augmentations or more powerful model architectures. We compare several popular SSL methods using our framework and find that many algorithmic additions, such as prediction networks or new losses, have a minor impact on downstream task performance (often less than 1%), while enhanced augmentation techniques offer more significant performance improvements (2-4%). Our findings challenge the premise that SSL is being driven primarily by algorithmic improvements, and suggest instead a bitter lesson for SSL: that augmentation diversity and data / model scale are more critical contributors to recent advances in self-supervised learning.

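The result is that when contrastive learning algorithms are examined within a single framework, once conditions such as the model and the use of a momentum encoder are matched, the differences between algorithms shrink and the differences between augmentations stand out.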

#contrastive-learning #self-supervision