2024년 2월 8일

InfLLM: Unveiling the Intrinsic Capacity of LLMs for Understanding Extremely Long Sequences with Training-Free Memory

(Chaojun Xiao, Pengle Zhang, Xu Han, Guangxuan Xiao, Yankai Lin, Zhengyan Zhang, Zhiyuan Liu, Song Han, Maosong Sun)

Large language models (LLMs) have emerged as a cornerstone in real-world applications with lengthy streaming inputs, such as LLM-driven agents. However, existing LLMs, pre-trained on sequences with restricted maximum length, cannot generalize to longer sequences due to the out-of-domain and distraction issues. To alleviate these issues, existing efforts employ sliding attention windows and discard distant tokens to achieve the processing of extremely long sequences. Unfortunately, these approaches inevitably fail to capture long-distance dependencies within sequences to deeply understand semantics. This paper introduces a training-free memory-based method, InfLLM, to unveil the intrinsic ability of LLMs to process streaming long sequences. Specifically, InfLLM stores distant contexts into additional memory units and employs an efficient mechanism to lookup token-relevant units for attention computation. Thereby, InfLLM allows LLMs to efficiently process long sequences while maintaining the ability to capture long-distance dependencies. Without any training, InfLLM enables LLMs pre-trained on sequences of a few thousand tokens to achieve superior performance than competitive baselines continually training these LLMs on long sequences. Even when the sequence length is scaled to $1,024$K, InfLLM still effectively captures long-distance dependencies.

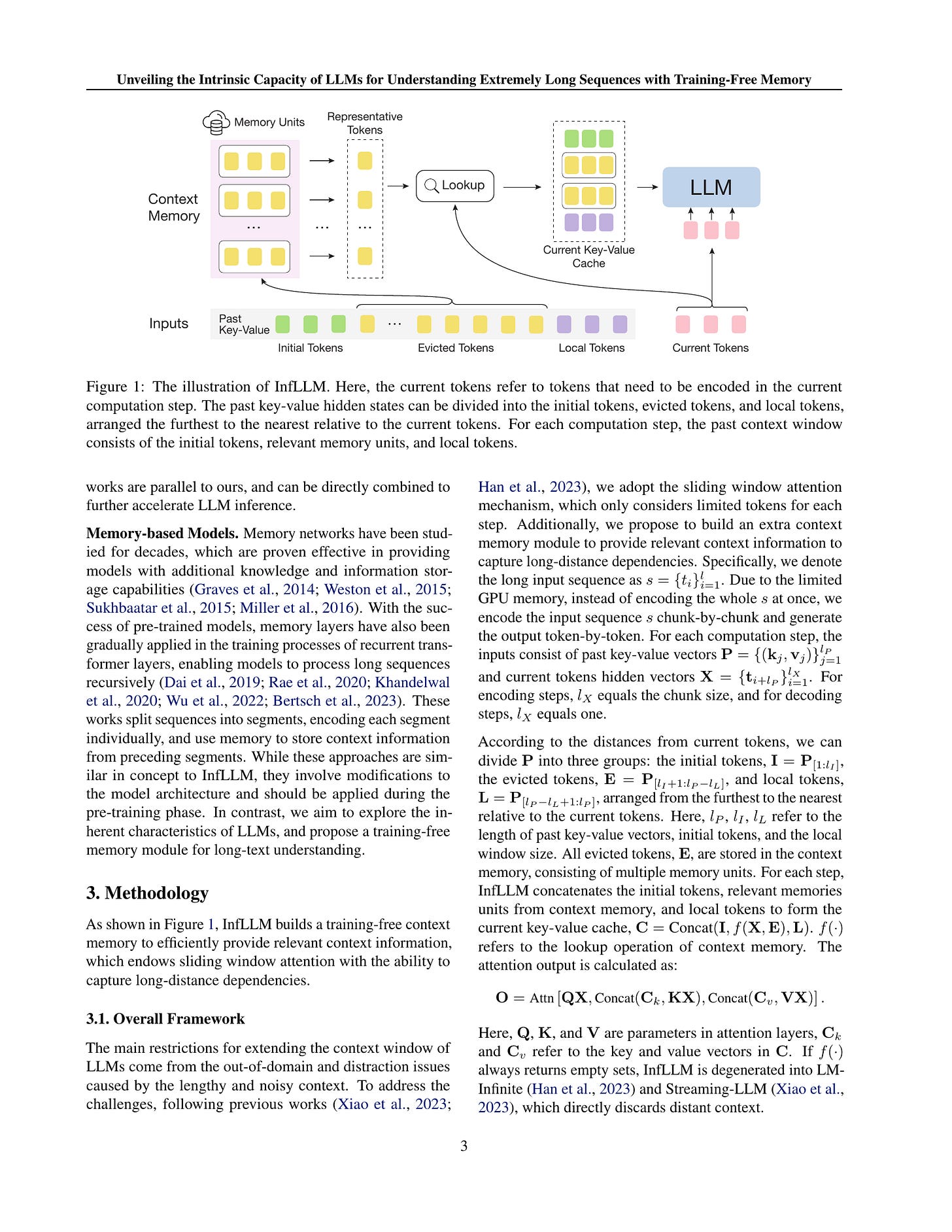

LM-Infinite (https://arxiv.org/abs/2308.16137) 에서 초기 토큰과 Local Window로 Attention Mask를 만들어 Long Context 문제에 대응하는 아이디어에 덧붙여 초기 토큰과 Local Window 토큰들 사이의 토큰들을 의미 있는 토큰으로 채워보자는 아이디어.

KV Cache를 쪼개고 쪼갠 각 청크마다 대표 토큰 임베딩을 만들어서 현 토큰과 관련도가 높은 청크를 끼워넣는 방식입니다. 즉 초기 토큰, 관련도가 높은 토큰들, 근방의 토큰들 순서로 KV Cache가 구성되는 것이죠.

LM 내에서 RAG를 하는 것과 비슷하고 이런 식으로 관련된 상태를 Retrieval 하자는 아이디어는 종종 나왔었죠. (https://arxiv.org/abs/2305.01625) 재미있네요.

#long-context

The Hedgehog & the Porcupine: Expressive Linear Attentions with Softmax Mimicry

(Michael Zhang, Kush Bhatia, Hermann Kumbong, Christopher Ré)

Linear attentions have shown potential for improving Transformer efficiency, reducing attention's quadratic complexity to linear in sequence length. This holds exciting promise for (1) training linear Transformers from scratch, (2) "finetuned-conversion" of task-specific Transformers into linear versions that recover task performance, and (3) "pretrained-conversion" of Transformers such as large language models into linear versions finetunable on downstream tasks. However, linear attentions often underperform standard softmax attention in quality. To close this performance gap, we find prior linear attentions lack key properties of softmax attention tied to good performance: low-entropy (or "spiky") weights and dot-product monotonicity. We further observe surprisingly simple feature maps that retain these properties and match softmax performance, but are inefficient to compute in linear attention. We thus propose Hedgehog, a learnable linear attention that retains the spiky and monotonic properties of softmax attention while maintaining linear complexity. Hedgehog uses simple trainable MLPs to produce attention weights mimicking softmax attention. Experiments show Hedgehog recovers over 99% of standard Transformer quality in train-from-scratch and finetuned-conversion settings, outperforming prior linear attentions up to 6 perplexity points on WikiText-103 with causal GPTs, and up to 8.7 GLUE score points on finetuned bidirectional BERTs. Hedgehog also enables pretrained-conversion. Converting a pretrained GPT-2 into a linear attention variant achieves state-of-the-art 16.7 perplexity on WikiText-103 for 125M subquadratic decoder models. We finally turn a pretrained Llama-2 7B into a viable linear attention Llama. With low-rank adaptation, Hedgehog-Llama2 7B achieves 28.1 higher ROUGE-1 points over the base standard attention model, where prior linear attentions lead to 16.5 point drops.

Softmax Attention과 Linear Attention 사이의 가장 큰 차이가 Softmax는 엔트로피가 낮은, 즉 특정 위치에 Attention Weight가 집중되는 "뾰족한" 패턴을 보여준다는 것에 있다는 주장. 이를 Tayler 근사로 커버해서 Linear Attention의 성능을 높일 수 있다고 합니다. 이에 더해 Softmax Attention을 Linear Attention으로 Distill하는 것을 시도했네요.

Associative Recall과 관련된 블로그에서 먼저 소개된 결과인데 (https://arxiv.org/abs/2305.01625) 논문으로 나왔군요.

#linear-attention

MEMORYLLM: Towards Self-Updatable Large Language Models

(Yu Wang, Xiusi Chen, Jingbo Shang, Julian McAuley)

Existing Large Language Models (LLMs) usually remain static after deployment, which might make it hard to inject new knowledge into the model. We aim to build models containing a considerable portion of self-updatable parameters, enabling the model to integrate new knowledge effectively and efficiently. To this end, we introduce MEMORYLLM, a model that comprises a transformer and a fixed-size memory pool within the latent space of the transformer. MEMORYLLM can self-update with text knowledge and memorize the knowledge injected earlier. Our evaluations demonstrate the ability of MEMORYLLM to effectively incorporate new knowledge, as evidenced by its performance on model editing benchmarks. Meanwhile, the model exhibits long-term information retention capacity, which is validated through our custom-designed evaluations and long-context benchmarks. MEMORYLLM also shows operational integrity without any sign of performance degradation even after nearly a million memory updates.

LLM에 대한 새로운 지식 추가를 학습으로 풀자는 접근. 트랜스포머 레이어 입력에 학습 가능한 임베딩들을 추가하고 이 임베딩을 학습해나가면서 오래된 임베딩을 새 임베딩으로 대체해나가는 방식입니다.

새로운 지식과 능력을 추가해나가는 문제가 반드시 풀려야 한다고 봅니다. 문제는 그 방법이네요. RAG, 혹은 KV 캐시를 보존한다거나, 혹은 모델 자체를 튜닝한다거나. 그 외에 효과적이면서도 저렴한 방법이 나올 수 있을지 궁금하네요.

#long-context #continual-learning

Direct Language Model Alignment from Online AI Feedback

(Shangmin Guo, Biao Zhang, Tianlin Liu, Tianqi Liu, Misha Khalman, Felipe Llinares, Alexandre Rame, Thomas Mesnard, Yao Zhao, Bilal Piot, Johan Ferret, Mathieu Blondel)

Direct alignment from preferences (DAP) methods, such as DPO, have recently emerged as efficient alternatives to reinforcement learning from human feedback (RLHF), that do not require a separate reward model. However, the preference datasets used in DAP methods are usually collected ahead of training and never updated, thus the feedback is purely offline. Moreover, responses in these datasets are often sampled from a language model distinct from the one being aligned, and since the model evolves over training, the alignment phase is inevitably off-policy. In this study, we posit that online feedback is key and improves DAP methods. Our method, online AI feedback (OAIF), uses an LLM as annotator: on each training iteration, we sample two responses from the current model and prompt the LLM annotator to choose which one is preferred, thus providing online feedback. Despite its simplicity, we demonstrate via human evaluation in several tasks that OAIF outperforms both offline DAP and RLHF methods. We further show that the feedback leveraged in OAIF is easily controllable, via instruction prompts to the LLM annotator.

DPO 같은 방법이 실질적으로 Policy가 아닌 다른 모델이 생성한 샘플을 한 번 어노테이션한 데이터셋에 대해 지속적으로 학습하는 형태로 사용되고 있다는 문제 의식. 단순하게 Policy로 샘플링하고, LLM으로 어노테이션하고, 그 결과로 학습하는 루프로 학습시키는 방법을 채택했습니다.

#rlaif #alignment

Long Is More for Alignment: A Simple but Tough-to-Beat Baseline for Instruction Fine-Tuning

(Hao Zhao, Maksym Andriushchenko, Francesco Croce, Nicolas Flammarion)

There is a consensus that instruction fine-tuning of LLMs requires high-quality data, but what are they? LIMA (NeurIPS 2023) and AlpaGasus (ICLR 2024) are state-of-the-art methods for selecting such high-quality examples, either via manual curation or using GPT-3.5-Turbo as a quality scorer. We show that the extremely simple baseline of selecting the 1,000 instructions with longest responses from standard datasets can consistently outperform these sophisticated methods according to GPT-4 and PaLM-2 as judges, while remaining competitive on the OpenLLM benchmarks that test factual knowledge. We demonstrate this for several state-of-the-art LLMs (Llama-2-7B, Llama-2-13B, and Mistral-7B) and datasets (Alpaca-52k and Evol-Instruct-70k). In addition, a lightweight refinement of such long instructions can further improve the abilities of the fine-tuned LLMs, and allows us to obtain the 2nd highest-ranked Llama-2-7B-based model on AlpacaEval 2.0 while training on only 1,000 examples and no extra preference data. We also conduct a thorough analysis of our models to ensure that their enhanced performance is not simply due to GPT-4's preference for longer responses, thus ruling out any artificial improvement. In conclusion, our findings suggest that fine-tuning on the longest instructions should be the default baseline for any research on instruction fine-tuning.

좋은 Instruction Tuning 샘플들을 어떻게 필터링할 것인가? 그냥 응답이 가장 긴 샘플들을 뽑는 것으로도 되더라는 결과. 뒤집어서 보면 Automatic Evaluation이 길이에 취약하다는 증거도 될 수 있는데 그에 대한 분석이 그렇게 충분하지는 않은 듯 합니다.

#instruction-tuning