2024년 2월 6일

Qwen 1.5

https://github.com/QwenLM/Qwen1.5

Qwen 1.5가 나왔습니다. 1.5라고 해서 Alignment 쪽의 개선이 위주인가 했는데 베이스 모델 자체에 업데이트가 있었군요. Qwen 2의 베타 버전이라고 이야기하고 있습니다.

다만 베이스 모델의 벤치마크 스코어가 아주 크게 향상된 것 같지는 않습니다. 주로 32K Context Length와 다국어 지원 강화에 포커스를 맞추지 않았나 싶네요.

Alignment 측면에서는 도구 사용과 코드 인터프리터를 언급하고 있네요. 그리고 아마도 Long Context/RAG에 대한 튜닝도 포함되지 않았을까요.

#llm

DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models

(Zhihong Shao, Peiyi Wang, Qihao Zhu, Runxin Xu, Junxiao Song, Mingchuan Zhang, Y.K. Li, Y. Wu, Daya Guo)

Mathematical reasoning poses a significant challenge for language models due to its complex and structured nature. In this paper, we introduce DeepSeekMath 7B, which continues pre-training DeepSeek-Coder-Base-v1.5 7B with 120B math-related tokens sourced from Common Crawl, together with natural language and code data. DeepSeekMath 7B has achieved an impressive score of 51.7% on the competition-level MATH benchmark without relying on external toolkits and voting techniques, approaching the performance level of Gemini-Ultra and GPT-4. Self-consistency over 64 samples from DeepSeekMath 7B achieves 60.9% on MATH. The mathematical reasoning capability of DeepSeekMath is attributed to two key factors: First, we harness the significant potential of publicly available web data through a meticulously engineered data selection pipeline. Second, we introduce Group Relative Policy Optimization (GRPO), a variant of Proximal Policy Optimization (PPO), that enhances mathematical reasoning abilities while concurrently optimizing the memory usage of PPO.

DeepSeek의 수학 모델. OpenWebMath를 기반으로 유사한 문서를 찾는 방식으로 데이터 구축 + 추가적으로 관련된 도메인을 발굴하는 방식으로 데이터를 확보했습니다. 이렇게 구축한 데이터셋으로 기존 데이터보다 더 나은 성능을 확보했습니다. (크기 자체도 크긴 하지만요.)

여기서 코드 학습이 수학 성능에 도움이 된다는 것, arXiv 데이터는 그대로는 도움이 되지 않는 것 같다는 것을 발견했습니다.

추가적으로 SFT와 RL 실험을 했는데 RL 실험의 비중이 크네요. 일단 Value function을 빼버리고 여러 샘플에 대한 Reward 평균으로 대체한 GRPO를 사용했습니다. 여기에 MathShepherd (https://arxiv.org/abs/2312.08935) 로 데이터를 생성해서 Process Supervision을 줬네요. Online vs Offline, 1-0 vs Reward Score, Iterative RL 등에 대한 실험도 했습니다.

#math #llm

BRAIn: Bayesian Reward-conditioned Amortized Inference for natural language generation from feedback

(Gaurav Pandey, Yatin Nandwani, Tahira Naseem, Mayank Mishra, Guangxuan Xu, Dinesh Raghu, Sachindra Joshi, Asim Munawar, Ramón Fernandez Astudillo)

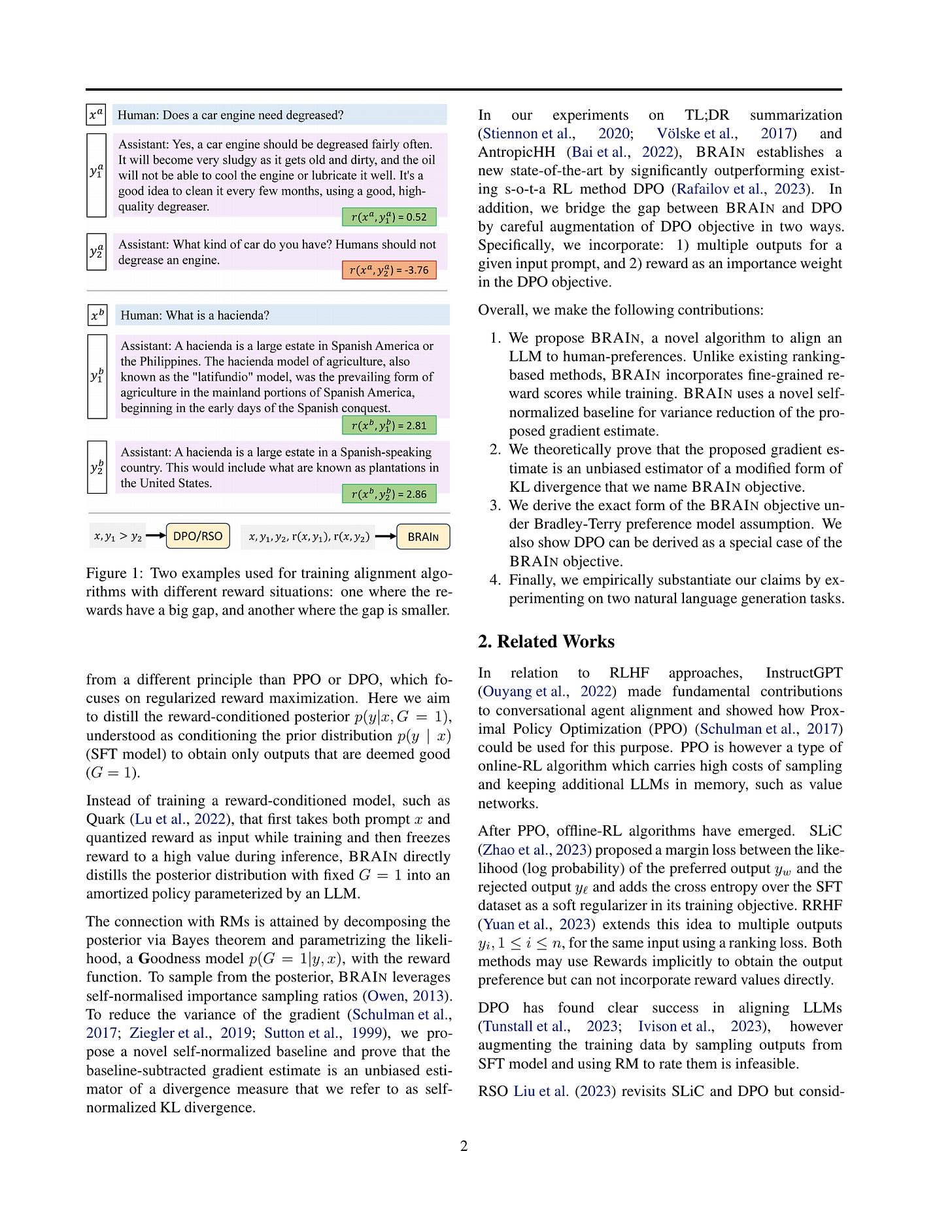

Following the success of Proximal Policy Optimization (PPO) for Reinforcement Learning from Human Feedback (RLHF), new techniques such as Sequence Likelihood Calibration (SLiC) and Direct Policy Optimization (DPO) have been proposed that are offline in nature and use rewards in an indirect manner. These techniques, in particular DPO, have recently become the tools of choice for LLM alignment due to their scalability and performance. However, they leave behind important features of the PPO approach. Methods such as SLiC or RRHF make use of the Reward Model (RM) only for ranking/preference, losing fine-grained information and ignoring the parametric form of the RM (eg., Bradley-Terry, Plackett-Luce), while methods such as DPO do not use even a separate reward model. In this work, we propose a novel approach, named BRAIn, that re-introduces the RM as part of a distribution matching approach.BRAIn considers the LLM distribution conditioned on the assumption of output goodness and applies Bayes theorem to derive an intractable posterior distribution where the RM is explicitly represented. BRAIn then distills this posterior into an amortized inference network through self-normalized importance sampling, leading to a scalable offline algorithm that significantly outperforms prior art in summarization and AntropicHH tasks. BRAIn also has interesting connections to PPO and DPO for specific RM choices.

N개 샘플에 대해 Reward Score로 Weight를 주고 Policy에서의 확률로 베이스라인을 설정한 DPO라고 할 수 있겠네요. 샘플을 여러 개 생성하자 - Reward Score를 사용하자라는 아이디어가 계속 나오고 있긴 합니다.

#rlhf #alignment

Decoding-time Realignment of Language Models

(Tianlin Liu, Shangmin Guo, Leonardo Bianco, Daniele Calandriello, Quentin Berthet, Felipe Llinares, Jessica Hoffmann, Lucas Dixon, Michal Valko, Mathieu Blondel)

Aligning language models with human preferences is crucial for reducing errors and biases in these models. Alignment techniques, such as reinforcement learning from human feedback (RLHF), are typically cast as optimizing a tradeoff between human preference rewards and a proximity regularization term that encourages staying close to the unaligned model. Selecting an appropriate level of regularization is critical: insufficient regularization can lead to reduced model capabilities due to reward hacking, whereas excessive regularization hinders alignment. Traditional methods for finding the optimal regularization level require retraining multiple models with varying regularization strengths. This process, however, is resource-intensive, especially for large models. To address this challenge, we propose decoding-time realignment (DeRa), a simple method to explore and evaluate different regularization strengths in aligned models without retraining. DeRa enables control over the degree of alignment, allowing users to smoothly transition between unaligned and aligned models. It also enhances the efficiency of hyperparameter tuning by enabling the identification of effective regularization strengths using a validation dataset.

RLHF 학습 과정에서 KL Penalty 계수를 바꿨을 때의 결과를 재학습 없이 알 수 있을까? SFT 모델과 RLHF 모델의 Logit을 결합하는 것으로 시뮬레이션을 할 수 있다는 결과입니다. RLHF 튜닝 작업에 대해 굉장히 유용한 도구가 될 수 있을 것 같네요.

#rlhf

Video-LaVIT: Unified Video-Language Pre-training with Decoupled Visual-Motional Tokenization

(Yang Jin, Zhicheng Sun, Kun Xu, Kun Xu, Liwei Chen, Hao Jiang, Quzhe Huang, Chengru Song, Yuliang Liu, Di Zhang, Yang Song, Kun Gai, Yadong Mu)

In light of recent advances in multimodal Large Language Models (LLMs), there is increasing attention to scaling them from image-text data to more informative real-world videos. Compared to static images, video poses unique challenges for effective large-scale pre-training due to the modeling of its spatiotemporal dynamics. In this paper, we address such limitations in video-language pre-training with an efficient video decomposition that represents each video as keyframes and temporal motions. These are then adapted to an LLM using well-designed tokenizers that discretize visual and temporal information as a few tokens, thus enabling unified generative pre-training of videos, images, and text. At inference, the generated tokens from the LLM are carefully recovered to the original continuous pixel space to create various video content. Our proposed framework is both capable of comprehending and generating image and video content, as demonstrated by its competitive performance across 13 multimodal benchmarks in image and video understanding and generation. Our code and models will be available at

https://video-lavit.github.io

.

https://video-lavit.github.io/

Video-Text에 대한 Autoregressive 모델링. 영상을 키프레임과 모션으로 분해하고, 이 둘을 모두 Quantization해서 텍스트와 함께 Autoregressive 학습을 시킵니다. 이 위에 Detokenizer를 올려 비디오와 텍스트에 대한 생성이 가능하게 했네요. 비디오에 대한 흥미로운 접근이 아닌가 싶습니다.

#video-generation #video-text #multimodal

Make Every Move Count: LLM-based High-Quality RTL Code Generation Using MCTS

(Matthew DeLorenzo, Animesh Basak Chowdhury, Vasudev Gohil, Shailja Thakur, Ramesh Karri, Siddharth Garg, Jeyavijayan Rajendran)

Existing large language models (LLMs) for register transfer level code generation face challenges like compilation failures and suboptimal power, performance, and area (PPA) efficiency. This is due to the lack of PPA awareness in conventional transformer decoding algorithms. In response, we present an automated transformer decoding algorithm that integrates Monte Carlo tree-search for lookahead, guiding the transformer to produce compilable, functionally correct, and PPA-optimized code. Empirical evaluation with a fine-tuned language model on RTL codesets shows that our proposed technique consistently generates functionally correct code compared to prompting-only methods and effectively addresses the PPA-unawareness drawback of naive large language models. For the largest design generated by the state-of-the-art LLM (16-bit adder), our technique can achieve a 31.8% improvement in the area-delay product.

LLM 디코딩 시점의 MCTS. 이런 계통의 시도들이 있었는데 (https://arxiv.org/abs/2309.15028) 여기서는 Verilog로 코딩을 하는 문제에 접근했습니다. 컴파일이 되는가, 작동하는가, 그리고 Area Delay Product가 Reward가 되는데 속도와 관련된 지표인 것 같군요.

#decoding #search