February 29, 2024

StarCoder 2

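StarCoder 2 and The Stack v2 are out. Unlike before, they included not only permissively licensed code but also code with no license at all, which grew the total data volume by roughly 7x. On top of that, they added pull requests, documentation, LLVM IR, code and math datasets, and natural language datasets.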

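They assembled all of this into a dataset of roughly 600-900B tokens and then trained for about 3-4T tokens. So far so good, but... on a number of benchmarks it falls behind DeepSeek-Coder, a model trained on 2T tokens. Of course there are areas where it comes out ahead, such as reasoning and performance on low-resource languages. The result seems to have been somewhat puzzling for the authors as well.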

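At a glance, the differences that stand out are the large amount of documents such as Markdown that went in, the quality filtering, and whether dependencies were taken into account. It seems worth digging into what the actual cause is.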

#llm #code

Llama 3

The Information reports that Llama 3 will be released in July. That feels like it is taking longer than expected. I had heard that training was complete, but that may have referred only to pretraining.

#llm

Diffusion-based Neural Network Weights Generation

(Bedionita Soro, Bruno Andreis, Hayeon Lee, Song Chong, Frank Hutter, Sung Ju Hwang)

Transfer learning is a topic of significant interest in recent deep learning research because it enables faster convergence and improved performance on new tasks. While the performance of transfer learning depends on the similarity of the source data to the target data, it is costly to train a model on a large number of datasets. Therefore, pretrained models are generally blindly selected with the hope that they will achieve good performance on the given task. To tackle such suboptimality of the pretrained models, we propose an efficient and adaptive transfer learning scheme through dataset-conditioned pretrained weights sampling. Specifically, we use a latent diffusion model with a variational autoencoder that can reconstruct the neural network weights, to learn the distribution of a set of pretrained weights conditioned on each dataset for transfer learning on unseen datasets. By learning the distribution of a neural network on a variety pretrained models, our approach enables adaptive sampling weights for unseen datasets achieving faster convergence and reaching competitive performance.

Another work in the same vein as Neural Network Diffusion (https://arxiv.org/abs/2402.13144). This one conditions on a dataset embedding so that it generates a model for that dataset.

Could a model that generates models that generate models be possible too? I'm curious.

#diffusion #hypernetwork

Stable LM 2 1.6B Technical Report

(Marco Bellagente, Jonathan Tow, Dakota Mahan, Duy Phung, Maksym Zhuravinskyi, Reshinth Adithyan, James Baicoianu, Ben Brooks, Nathan Cooper, Ashish Datta, Meng Lee, Emad Mostaque, Michael Pieler, Nikhil Pinnaparju, Paulo Rocha, Harry Saini, Hannah Teufel, Niccolo Zanichelli, Carlos Riquelme)

We introduce StableLM 2 1.6B, the first in a new generation of our language model series. In this technical report, we present in detail the data and training procedure leading to the base and instruction-tuned versions of StableLM 2 1.6B. The weights for both models are available via Hugging Face for anyone to download and use. The report contains thorough evaluations of these models, including zero- and few-shot benchmarks, multilingual benchmarks, and the MT benchmark focusing on multi-turn dialogues. At the time of publishing this report, StableLM 2 1.6B was the state-of-the-art open model under 2B parameters by a significant margin. Given its appealing small size, we also provide throughput measurements on a number of edge devices. In addition, we open source several quantized checkpoints and provide their performance metrics compared to the original model.

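A 1.6B model trained on 2T tokens. The dataset is curated in the style of The Pile. The notable features are the use of multilingual and instruction data, and an infinite learning rate schedule built by joining cosine and inverse-sqrt schedules, followed by a cooldown.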
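To make the shape of that schedule concrete, here is a minimal sketch of a warmup, then cosine, then inverse-sqrt, then cooldown learning rate. Every number and name below (`peak_lr`, `switch_step`, `cosine_total_steps`, the linear cooldown) is an assumption for illustration and not the report's actual formula or hyperparameters.

```python
import math


def infinite_lr(step, peak_lr=1e-3, warmup_steps=1_000, switch_step=20_000,
                cosine_total_steps=100_000, cooldown_start=None,
                cooldown_steps=5_000, final_lr=0.0):
    """Hypothetical 'infinite' schedule: warmup -> cosine -> inverse-sqrt -> cooldown."""

    def cosine(s):
        # Cosine decay from peak_lr, parameterized by an assumed full cosine length.
        progress = (s - warmup_steps) / (cosine_total_steps - warmup_steps)
        return 0.5 * peak_lr * (1.0 + math.cos(math.pi * progress))

    def stable(s):
        if s < warmup_steps:
            # Linear warmup from 0 to peak_lr.
            return peak_lr * s / warmup_steps
        if s < switch_step:
            return cosine(s)
        # Inverse-sqrt tail, scaled so the curve is continuous at switch_step.
        return cosine(switch_step) * math.sqrt(switch_step / s)

    if cooldown_start is None or step < cooldown_start:
        return stable(step)

    # Linear cooldown from wherever the stable phase was to final_lr.
    start_lr = stable(cooldown_start)
    frac = min(1.0, (step - cooldown_start) / cooldown_steps)
    return start_lr + (final_lr - start_lr) * frac
```

The appeal of this shape is that the inverse-sqrt tail never reaches zero on its own, so training can in principle continue indefinitely and a cooldown can be branched off from any checkpoint, e.g. `infinite_lr(step, cooldown_start=40_000)`.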

#lm #pretraining

Learning or Self-aligning? Rethinking Instruction Fine-tuning

(Mengjie Ren, Boxi Cao, Hongyu Lin, Liu Cao, Xianpei Han, Ke Zeng, Guanglu Wan, Xunliang Cai, Le Sun)

Instruction Fine-tuning (IFT) is a critical phase in building large language models (LLMs). Previous works mainly focus on the IFT's role in the transfer of behavioral norms and the learning of additional world knowledge. However, the understanding of the underlying mechanisms of IFT remains significantly limited. In this paper, we design a knowledge intervention framework to decouple the potential underlying factors of IFT, thereby enabling individual analysis of different factors. Surprisingly, our experiments reveal that attempting to learn additional world knowledge through IFT often struggles to yield positive impacts and can even lead to markedly negative effects. Further, we discover that maintaining internal knowledge consistency before and after IFT is a critical factor for achieving successful IFT. Our findings reveal the underlying mechanisms of IFT and provide robust support for some very recent and potential future works.

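An exploration of the recurring question in instruction tuning: does it inject knowledge, or does it draw out knowledge the model already has? They split the instruction tuning dataset and analyze three settings: 1. examples consistent with the model's knowledge, 2. examples inconsistent with the model's knowledge, and 3. the inconsistent examples with their responses rewritten to match the model's knowledge. In case 3, the dataset ends up containing only incorrect answers.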

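Surprisingly, though, 3 performs better than 2. In other words, trying to change the knowledge the model holds lowers performance. Through further analysis, they argue that how consistent the model's knowledge stays before and after instruction tuning matters for performance. In that sense, they note that 3 also causes problems, because it reinforces in the opposite direction knowledge the model did not hold with certainty.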
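The three settings can be sketched as a simple partition of the data. This is only an illustration under loud assumptions: the `Example` fields are hypothetical, the base model's answer is assumed to have been collected beforehand, and exact string match stands in for whatever consistency check the paper actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    question: str
    gold_answer: str
    model_answer: str  # assumed: the base model's answer before any tuning


def build_settings(examples):
    """Split instruction data into the three settings described above."""
    # Assumption: exact match is a crude proxy for "agrees with the model's knowledge".
    consistent = [e for e in examples if e.model_answer == e.gold_answer]
    inconsistent = [e for e in examples if e.model_answer != e.gold_answer]
    return {
        # 1. Training targets the model already agrees with.
        "1_consistent": [(e.question, e.gold_answer) for e in consistent],
        # 2. Correct targets that contradict the model's knowledge.
        "2_inconsistent": [(e.question, e.gold_answer) for e in inconsistent],
        # 3. Same questions as 2, but targets rewritten to the model's own
        #    (incorrect) answers.
        "3_inconsistent_rewritten": [(e.question, e.model_answer) for e in inconsistent],
    }
```

Note that settings 2 and 3 share the same questions and differ only in the target, which is what lets the comparison isolate the effect of contradicting the model's knowledge.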

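Separating what agrees with the model's knowledge from what does not is tricky, so this needs a closer look, but it seems like an interesting claim. It may go beyond the idea that instruction tuning only changes behavior, to the idea that instruction tuning is optimal only when it changes nothing but behavior.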

#instruction-tuning

Keeping LLMs Aligned After Fine-tuning: The Crucial Role of Prompt Templates

(Kaifeng Lyu, Haoyu Zhao, Xinran Gu, Dingli Yu, Anirudh Goyal, Sanjeev Arora)

Public LLMs such as the Llama 2-Chat have driven huge activity in LLM research. These models underwent alignment training and were considered safe. Recently Qi et al. (2023) reported that even benign fine-tuning (e.g., on seemingly safe datasets) can give rise to unsafe behaviors in the models. The current paper is about methods and best practices to mitigate such loss of alignment. Through extensive experiments on several chat models (Meta's Llama 2-Chat, Mistral AI's Mistral 7B Instruct v0.2, and OpenAI's GPT-3.5 Turbo), this paper uncovers that the prompt templates used during fine-tuning and inference play a crucial role in preserving safety alignment, and proposes the "Pure Tuning, Safe Testing" (PTST) principle -- fine-tune models without a safety prompt, but include it at test time. Fine-tuning experiments on GSM8K, ChatDoctor, and OpenOrca show that PTST significantly reduces the rise of unsafe behaviors, and even almost eliminates them in some cases.

A countermeasure for the tendency of safety-aligned LMs to lose their safety when fine-tuned for helpfulness. The recipe is to fine-tune without a safety prompt and to use the safety prompt at inference time. Interesting.
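In practice this comes down to using two different prompt templates. A minimal sketch follows, assuming a made-up plain-text chat format and a made-up safety prompt; neither is the actual template of Llama 2-Chat or any other model in the paper.

```python
# Hypothetical safety system prompt, for illustration only.
SAFETY_PROMPT = "You are a helpful assistant. Refuse requests that are unsafe."


def render(user_message, system_prompt=None):
    """Render a prompt in an assumed, generic chat format."""
    parts = []
    if system_prompt:
        parts.append(f"[SYSTEM] {system_prompt}")
    parts.append(f"[USER] {user_message}")
    parts.append("[ASSISTANT]")
    return "\n".join(parts)


def training_prompt(user_message):
    # "Pure Tuning": fine-tuning examples carry no safety prompt.
    return render(user_message)


def inference_prompt(user_message):
    # "Safe Testing": the safety prompt is added only at test time.
    return render(user_message, system_prompt=SAFETY_PROMPT)
```

The point of the asymmetry is that the safety prompt is never seen during fine-tuning and only appears at test time.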

#finetuning #prompt #safety

RNNs are not Transformers (Yet): The Key Bottleneck on In-context Retrieval

(Kaiyue Wen, Xingyu Dang, Kaifeng Lyu)

This paper investigates the gap in representation powers of Recurrent Neural Networks (RNNs) and Transformers in the context of solving algorithmic problems. We focus on understanding whether RNNs, known for their memory efficiency in handling long sequences, can match the performance of Transformers, particularly when enhanced with Chain-of-Thought (CoT) prompting. Our theoretical analysis reveals that CoT improves RNNs but is insufficient to close the gap with Transformers. A key bottleneck lies in the inability of RNNs to perfectly retrieve information from the context, even with CoT: for several tasks that explicitly or implicitly require this capability, such as associative recall and determining if a graph is a tree, we prove that RNNs are not expressive enough to solve the tasks while Transformers can solve them with ease. Conversely, we prove that adopting techniques to enhance the in-context retrieval capability of RNNs, including Retrieval-Augmented Generation (RAG) and adding a single Transformer layer, can elevate RNNs to be capable of solving all polynomial-time solvable problems with CoT, hence closing the representation gap with Transformers.

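A study of the limits of RNN expressivity. Ultimately, the problem is the limit on retrieving information from within the context. What lifts that limit is combining the RNN with a retrieval mechanism, and the simplest way to do that (although it could also be done explicitly) is to add a Transformer layer.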

All in all, even if SSMs are adopted, I suspect a hybrid with Transformers is the right direction. There are attempts to solve this within linear attention, such as Based, but that will likely be the harder path.

#transformer #rnn #state-space-model

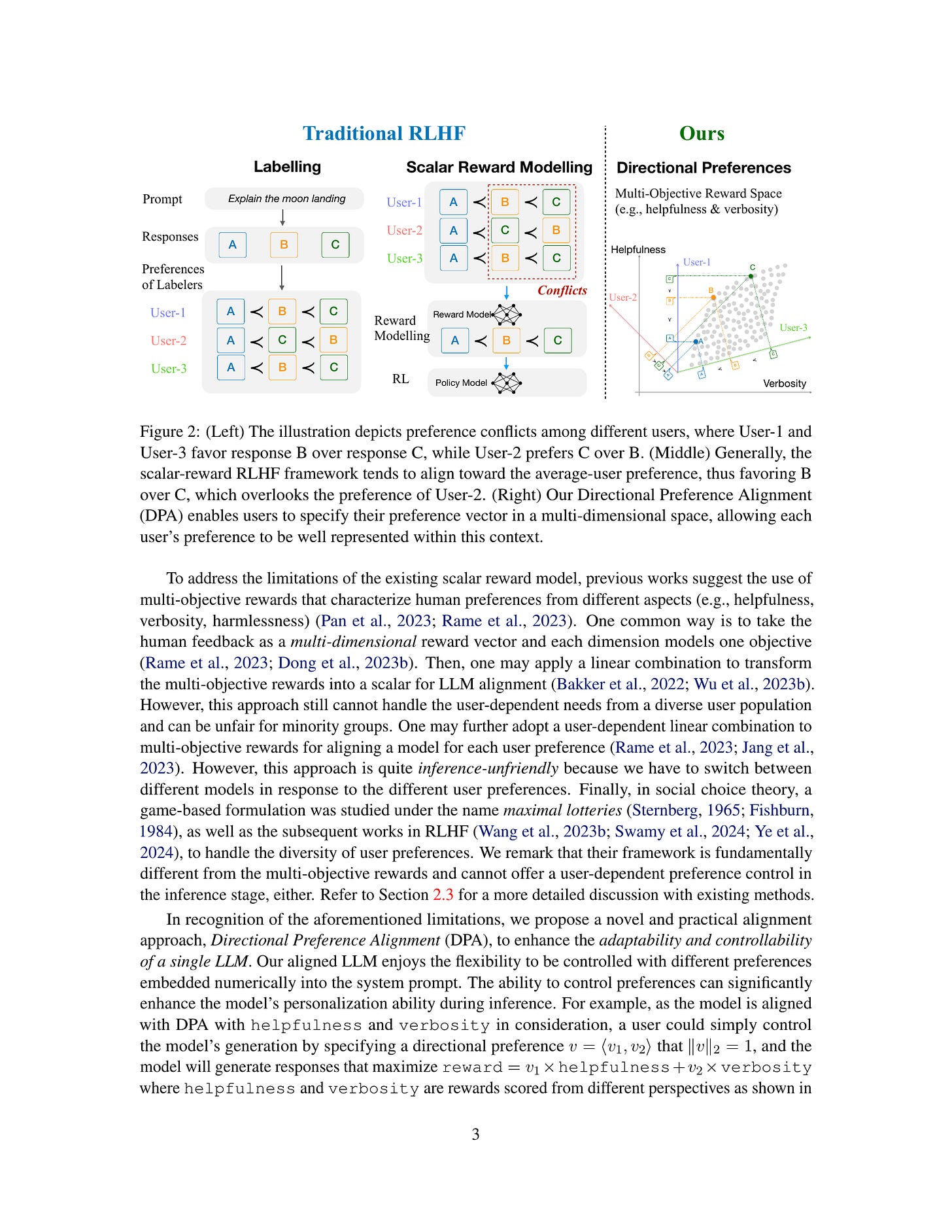

Arithmetic Control of LLMs for Diverse User Preferences: Directional Preference Alignment with Multi-Objective Rewards

(Haoxiang Wang, Yong Lin, Wei Xiong, Rui Yang, Shizhe Diao, Shuang Qiu, Han Zhao, Tong Zhang)

Fine-grained control over large language models (LLMs) remains a significant challenge, hindering their adaptability to diverse user needs. While Reinforcement Learning from Human Feedback (RLHF) shows promise in aligning LLMs, its reliance on scalar rewards often limits its ability to capture diverse user preferences in real-world applications. To address this limitation, we introduce the Directional Preference Alignment (DPA) framework. Unlike the scalar-reward RLHF, DPA incorporates multi-objective reward modeling to represent diverse preference profiles. Additionally, DPA models user preferences as directions (i.e., unit vectors) in the reward space to achieve user-dependent preference control. Our method involves training a multi-objective reward model and then fine-tuning the LLM with a preference-conditioned variant of Rejection Sampling Finetuning (RSF), an RLHF method adopted by Llama 2. This method enjoys a better performance trade-off across various reward objectives. In comparison with the scalar-reward RLHF, DPA offers users intuitive control over LLM generation: they can arithmetically specify their desired trade-offs (e.g., more helpfulness with less verbosity). We also validate the effectiveness of DPA with real-world alignment experiments on Mistral-7B. Our method provides straightforward arithmetic control over the trade-off between helpfulness and verbosity while maintaining competitive performance with strong baselines such as Direct Preference Optimization (DPO).

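Development of a model that offers adjustable sliders, like SteerLM (https://arxiv.org/abs/2310.05344). The distinguishing point here is that the user's preference is treated as a unit vector. In other words, the user chooses a direction. This unit vector is then used to combine the reward scores.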
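As a rough sketch of that idea, the combined reward can be written as the dot product between a unit direction and a vector of per-objective rewards. The axis order (helpfulness, verbosity), the angle parameterization, and the toy scores below are assumptions for illustration, not values from the paper.

```python
import math


def preference_direction(angle_degrees):
    """Unit vector in an assumed (helpfulness, verbosity) reward plane."""
    theta = math.radians(angle_degrees)
    return (math.cos(theta), math.sin(theta))


def directional_reward(rewards, direction):
    """Scalar reward as the projection of the reward vector onto the direction."""
    return sum(r * v for r, v in zip(rewards, direction))


def pick_best(candidates, direction):
    """Rejection-sampling-style selection: keep the highest-scoring candidate."""
    return max(candidates, key=lambda c: directional_reward(c["rewards"], direction))


# Toy candidates with made-up (helpfulness, verbosity) scores.
candidates = [
    {"name": "long_answer", "rewards": (0.9, 0.9)},
    {"name": "short_answer", "rewards": (0.7, 0.2)},
]
```

With these toy scores, `pick_best(candidates, preference_direction(0))` favors the more helpful but verbose answer, while `preference_direction(-45)` penalizes verbosity enough to flip the choice to the shorter one.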

This seems natural when combining aspects that often conflict, such as helpfulness and conciseness. I do wonder how it behaves when the aspects are fully independent, though.

It also seems like an interesting question from the system prompt perspective.

#alignment